HardOCP News

[H] News

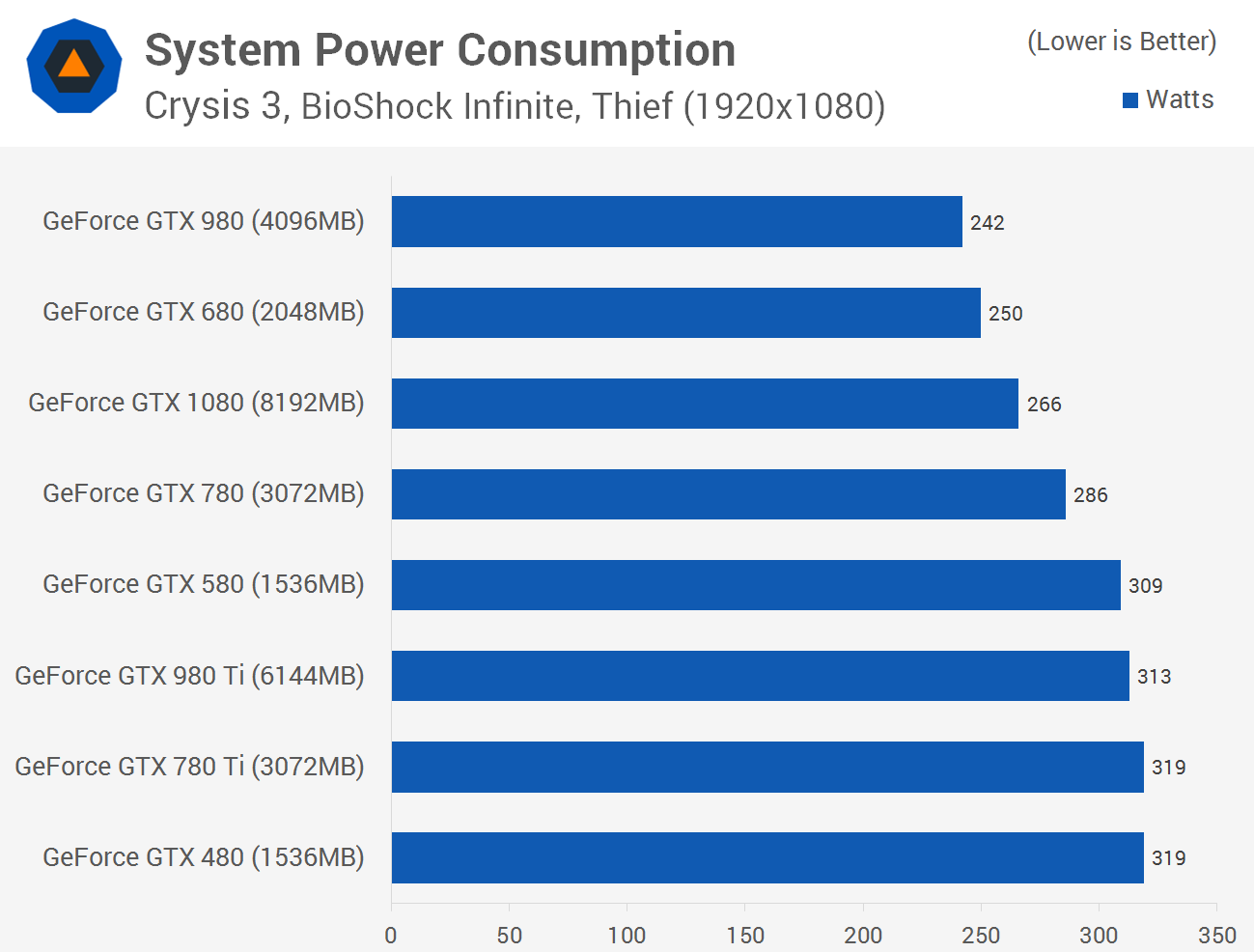

TechSpot has put together a comparison of six generations of GeForce graphics cards, from the GTX 480 all the way up to the new GTX 1080. The article is handy if you are upgrading from an older card such as a GTX 680 or the like.

Now, with the release of Pascal, the time has come to revisit history and see how six generations of Nvidia GeForce graphics cards compare. To streamline testing, we'll be sticking to DirectX 11 titles, since DirectX 11 is supported by all GeForce series, old and new, so we can accurately compare them.
