
40 and 50 TB drives from Seagate

- Thread starter philb2


Love this. Two of these would back up my entire NAS.

Exactly, and it would probably make my troublesome upgrade that much easier.

Thug Esquire

[H]ard|Gawd

- Joined

- May 4, 2005

- Messages

- 1,494

I love the idea of running fewer disks for the same capacity, because you get the power savings. In some places like Europe where energy prices are spiking, this is a much bigger deal than it used to be. But I'm not sure storage controller speed (or drive cache) has kept up with these capacity improvements.

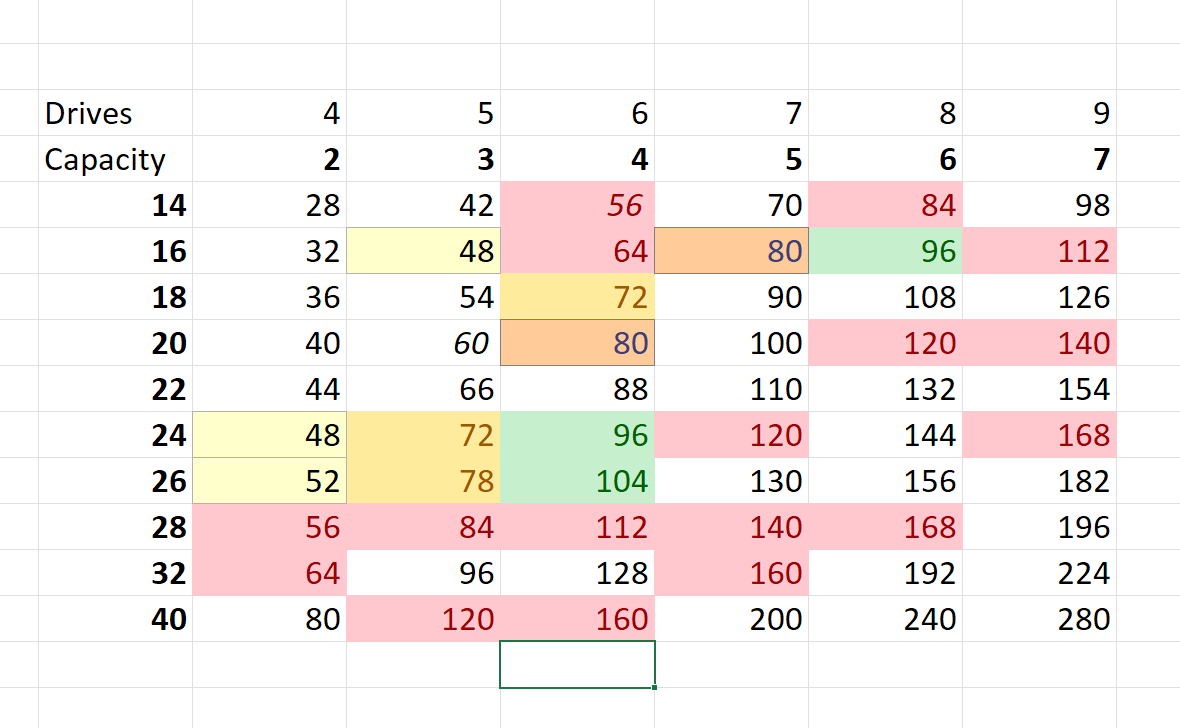

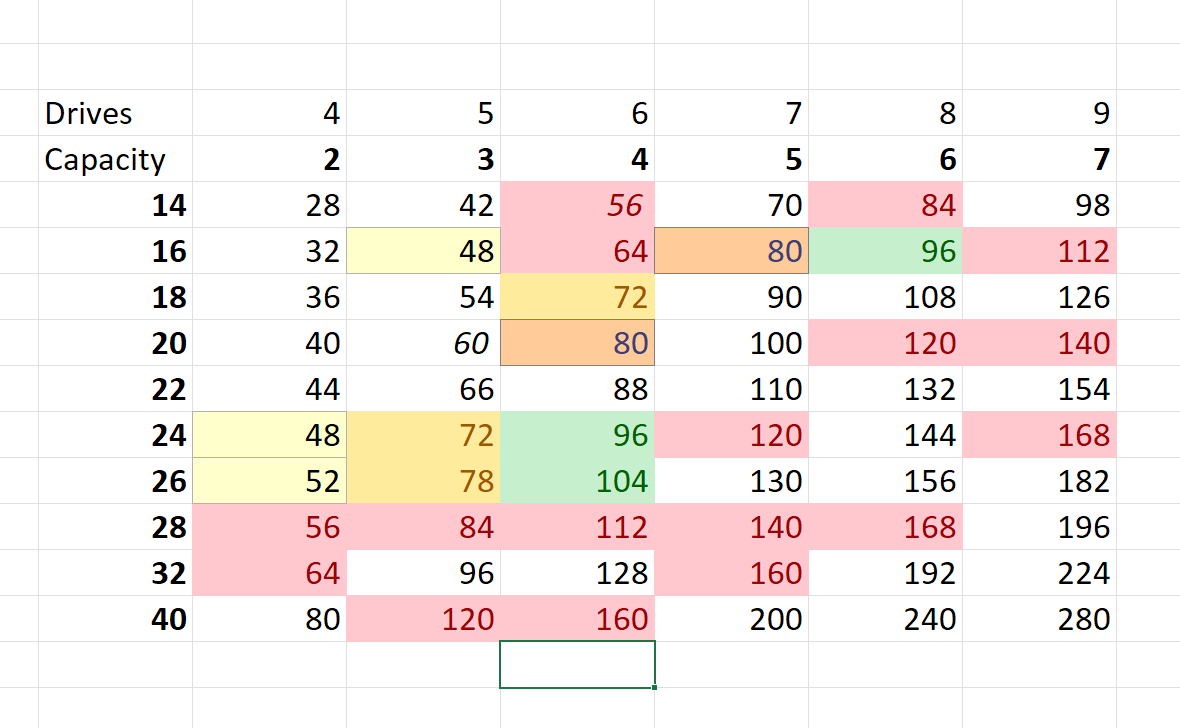

For example, if I had 8x16TB drives in RAID6 I'd get the capacity of 6 of them (6*16=96). To get similar capacity and fault tolerance out of 32TB drives I'd need 5 (3*32=96, plus 2 for parity). In theory that'll yield about a three-disk energy saving. But which array would rebuild faster?
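A back-of-the-envelope sketch of that comparison, assuming RAID6 always gives up exactly two drives to parity and that a rebuild is bounded by rewriting one whole replacement drive at a constant sustained rate. The 250 MB/s rate is an assumption for illustration, not a measured figure, and the function names are hypothetical.

```python
# Assumptions (hypothetical): RAID6 spends exactly two drives on parity, and a
# rebuild is limited by writing one replacement drive end to end at a constant
# sustained rate. Real rebuilds are usually slower than this ideal.

def raid6_usable_tb(drives: int, drive_tb: float) -> float:
    """Usable capacity of a RAID6 array: every drive except two for parity."""
    if drives < 4:
        raise ValueError("RAID6 needs at least 4 drives")
    return (drives - 2) * drive_tb


def rebuild_hours(drive_tb: float, sustained_mb_s: float) -> float:
    """Idealized time to rewrite one whole drive at a constant rate."""
    drive_bytes = drive_tb * 1e12  # drive makers quote decimal terabytes
    return drive_bytes / (sustained_mb_s * 1e6) / 3600


for drives, size_tb in [(8, 16), (5, 32)]:
    usable = raid6_usable_tb(drives, size_tb)
    hours = rebuild_hours(size_tb, 250)
    print(f"{drives}x{size_tb}TB RAID6: {usable:.0f} TB usable, ~{hours:.0f} h to rebuild one drive")
```

Under those assumptions both arrays give 96 TB usable, but each 32TB drive holds twice as much data, so rebuilding one takes roughly twice as long (about 18 h versus 36 h at 250 MB/s).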


Red Falcon

[H]ard DCOTM December 2023

- Joined

- May 7, 2007

- Messages

- 12,456

As amazing as that density is, I bet the rebuild/scrub times would not be fun to wait for.

Red Falcon said:
As amazing as that density is, I bet the rebuild/scrub times would not be fun to wait for.

Maybe the existence of these drives will lead to a much faster SATA 4 standard. Or maybe SAS ports on consumer motherboards. Or maybe neither.

Maybe the existence of these drives will lead to a much faster SATA 4 standard

HDDs are not that close to saturating the SATA 3 limit of 600 MB/s, even on long sustained jobs, are they?

Drive speed is the limitation more than the port, so wouldn't SAS have the same issue? I could be all wrong here.

LukeTbk said:
HDDs are not that close to saturating the SATA 3 limit of 600 MB/s, even on long sustained jobs, are they?

Drive speed is the limitation more than the port, so wouldn't SAS have the same issue? I could be all wrong here.

OK, I should have written out my assumption that Seagate (and WD?) will be able to improve transfer rate due to increased bit density.

The initial customers for these drives will be data centers and cloud services. They will tell Seagate what they need to make these drives practical.

Pure speculation, for shits and grins. Imagine if Seagate or WD used this technology in a 5 1/4" form factor. Or, to really go back in time, an 8" form factor. https://www.storagenewsletter.com/2018/07/22/history-1979-shugart-associates-sa1000/

OK, I should have written out my assumption that Seagate (and WD?) will be able to improve transfer rate due to increased bit density.

They could double it and still be OK. The latest dense HDDs reach 275 MB/s, which is significantly faster than in the past but still leaves a lot of room below 600 MB/s.

For example, old 15,000 RPM ultra-fast SCSI HDDs reached 160 MB/s.

Pretty sure there are Seagate Exos Mach.2 drives that do 500+ MB/s. They have two independent actuators and will show up as two different drives if your backplane doesn't support them.

They have quite the price increase on them compared to similarly sized Exos drives.

https://www.seagate.com/innovation/multi-actuator-hard-drives/


Imagine if Seagate or WD used this technology in a 5 1/4" form factor.

Market failure, because nobody has 5.25" bays... But I once got some surplus 5.25" full-height drives, the Seagate Elite 47. They were monsters, with 28 heads, and weighed 7 pounds each. Of course, the drives specced here have 10 platters and 20 heads in a regular 3.5" form factor. I'm guessing you could get at least 40 platters in a full-height 5.25" drive, and the platters would be at least double the area, so something like 8x the storage (4x the platters times 2x the area). Likely doesn't make sense though.

Red Falcon

[H]ard DCOTM December 2023

- Joined

- May 7, 2007

- Messages

- 12,456

Maybe the existence of these drives will lead to a much faster SATA 4 standard. Or maybe SAS ports on consumer motherboards. Or maybe neither.

I doubt it. The SATA protocol has been maxed out for over half a decade now, and HDDs don't really come close to saturating it, even in sequential transfers.

Even the dual-actuator HDDs mentioned above only get so fast, and an array rebuild just takes time to run through the whole platter. On something upwards of 50TB that would probably take over a week, especially with a single-actuator HDD.
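To put rough numbers on the "over a week" estimate, here is a sketch that converts drive size and an effective rebuild rate into days. The 275 MB/s figure is the peak sequential rate quoted earlier in this thread; the lower rates are hypothetical stand-ins for a rebuild that competes with normal I/O or spends time on slower inner tracks.

```python
SECONDS_PER_DAY = 86_400


def rebuild_days(drive_tb: float, effective_mb_s: float) -> float:
    """Days needed to write a whole drive at a constant effective rate."""
    seconds = drive_tb * 1e12 / (effective_mb_s * 1e6)
    return seconds / SECONDS_PER_DAY


# 275 MB/s is a peak sequential figure; 150 and 80 MB/s are hypothetical
# effective rates for a rebuild that shares the array with other work.
for rate in (275, 150, 80):
    print(f"50 TB at {rate} MB/s: {rebuild_days(50, rate):.1f} days")
```

Even at the full 275 MB/s the raw copy takes about two days, so a rebuild only stretches past a week if the effective rate drops below roughly 83 MB/s (50 TB spread over 604,800 seconds).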

Other than cold storage or archiving data, these drives are almost obsolete out of the gate just due to their sheer size and minuscule transfer rates.

Grebuloner

2[H]4U

- Joined

- Jul 31, 2009

- Messages

- 2,056

I'm guessing you could get at least 40 platters in a full-height 5.25", and the platters would be at least double the area, so like 8x the storage. Likely doesn't make sense though.

Without new and amazing platter tech, rotational speed would take a hit as a consequence, and the arms would also increase in size. Latency and seek times would massively increase.

10k/15k rpm 3.5" drives were basically tall 2.5" drives on the inside.

Vengance_01

Supreme [H]ardness

- Joined

- Dec 23, 2001

- Messages

- 7,219

Still want the sweet spot for price/performance and capacity. Spinning disk is used for large media storage only. Don't care about performance much.

They could double it and still be OK. The latest dense HDDs reach 275 MB/s, which is significantly faster than in the past but still leaves a lot of room below 600 MB/s.

For example, old 15,000 RPM ultra-fast SCSI HDDs reached 160 MB/s.

If density increases equally in both dimensions, then it would take a 4x (per platter) density increase to double the transfer rate.
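The square-root relationship can be sketched as follows. At a fixed RPM, sequential speed depends on linear density (bits along the track); extra tracks per inch add capacity but not speed. The function below assumes the areal-density gain is split evenly between the two directions and uses the 275 MB/s figure quoted above as a baseline.

```python
import math


def transfer_rate_gain(areal_density_gain: float) -> float:
    """Sequential-rate multiplier at a fixed RPM, assuming the areal gain is
    split evenly between bits per inch (along the track) and tracks per inch
    (across the tracks). Only the along-track part speeds up transfers."""
    return math.sqrt(areal_density_gain)


BASELINE_MB_S = 275  # figure quoted in the thread for recent dense HDDs

for gain in (2, 4):
    factor = transfer_rate_gain(gain)
    print(f"{gain}x areal density -> {factor:.2f}x rate, ~{BASELINE_MB_S * factor:.0f} MB/s")
```

So even a 4x density jump would take a 275 MB/s drive to about 550 MB/s, still under the 600 MB/s SATA 3 ceiling.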

Other than cold storage or archiving data, these drives are almost obsolete out of the gate just due to their sheer size and minuscule transfer rates.

So why did Seagate release these drives? Presumably they did some market or customer research.

And the larger question is: what is the future of spinning HDDs? How many years until SSDs dominate the market for "normal" use, say 8 or 16 TB drives for consumers? Personally, I could certainly use an 8 TB SSD to store photos (Nikon NEF), music, books, etc. But the price of an 8 TB NVMe drive is way more than I can justify.

Not sure about the minuscule transfer rate. If you have, say, only 6 of them in a fast ZFS configuration, they would already saturate 10 Gb/s, no? And a 16-drive pool would need a 40+ Gb/s connection. Well, maybe that counts as minuscule transfer rates nowadays.

I imagine it is not uncommon for an HDD storage pool to have more theoretical maximum bandwidth than its network connection allows. Those drives are very slow for small files, but for large file transfers, 250-275 MB/s is not that far from a SATA SSD (especially over long sustained work).
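The arithmetic in that post, as a sketch. It is an ideal upper bound: it assumes throughput scales linearly with drive count and ignores parity, controller, and CPU overhead, which is exactly the assumption the next reply questions. The 250 MB/s per-drive rate is the low end of the 250-275 MB/s range mentioned above, and the function name is hypothetical.

```python
def pool_gbit_s(drives: int, per_drive_mb_s: float) -> float:
    """Ideal aggregate streaming rate in Gb/s, assuming perfectly linear
    scaling and no parity, controller or CPU overhead."""
    return drives * per_drive_mb_s * 8 / 1000  # MB/s -> Mb/s -> Gb/s


for drives in (6, 16):
    print(f"{drives} drives x 250 MB/s = {pool_gbit_s(drives, 250):.0f} Gb/s")
```

Six drives at about 12 Gb/s already exceed a 10 Gb/s link, and sixteen at about 32 Gb/s overrun 25 GbE, which is where the 40 Gb/s class of connection comes in.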


Red Falcon

[H]ard DCOTM December 2023

- Joined

- May 7, 2007

- Messages

- 12,456

So why did Seagate release these drives? Presumably they did some market or customer research.

You are giving these large corps far more credit for intelligence and "customer research" than they deserve.

Not sure about the minuscule transfer rate. If you have, say, only 6 of them in a fast ZFS configuration, they would already saturate 10 Gb/s, no? And a 16-drive pool would need a 40+ Gb/s connection. Well, maybe that counts as minuscule transfer rates nowadays.

I imagine it is not uncommon for an HDD storage pool to have more theoretical maximum bandwidth than its network connection allows. Those drives are very slow for small files, but for large file transfers, 250-275 MB/s is not that far from a SATA SSD (especially over long sustained work).

Do ZFS arrays scale out linearly with drives? Traditional RAID, even RAID 0, starts to bottom out on sequential transfer rates after about 6-8 HDDs, with any drives added afterwards giving negligible transfer rate improvements.

I'm also in the camp that doesn't need much performance, and one-week rebuilds aren't a problem, with RAIDZ3 still providing great safety with one drive down. I also find HDDs pretty reliable: I have 19 14TB WD drives taken out of external enclosures, and no failures after 3 years.

If performance is needed, SSDs are king, and they are now available at reasonable prices up to a few TB, which should be enough for plenty of uses, like running a massive database. At work, on our virtualized servers, we're definitely going all-SSD: keeping the existing servers but replacing the storage arrays, from spinning rust to flash.

For years I got used to drives getting cheaper and bigger all the time; then came the Thailand floods, and things never got back to how they were before.

In early 2020, during lockdown, I got these 14TB NAS drives for 250€ each. Now, more than 3 years later, 250€ still gets me a 14TB drive, maybe 16TB if I look hard, or 18TB if I add a few euros, but nothing exciting.
