I currently run 3x Catleaps off a pair of 680 Classifieds. They handle older games that support the high res just fine, like Source games, but with more demanding games I'm forced to use only one monitor, because if anything dips below 60fps I can't handle it. :/

3x 2560x1440 monitors - best card(s) to push them with?

- Thread starter Jahx

- Start date

I am assuming you already have three 2560 monitors and that is why you feel the need to use all three of them. I would not do that to start. Get a card you will feel good about expanding to Xfire or SLI later: start with one good card, and when the prices come down get a second. Amazon has ASUS R9 290X cards for $580, but you have to wait about a month. I would NOT get a reference card at this point, but a card with good custom cooling.

What you can also do is play slower strategy games on the 3 monitors to see if you really like surround monitor gaming. Some have thought they would but the bezels or other things turned them off. You have waited this many years so there is no rush now. I just upgraded from 5870's in xfire to a R9 290. I have 3 2560 monitors in addition to the 3 1920*1200 I have in my signature. I still play on 1 2560 for the most part. I also have a 4K monitor I play on occasionally.

Other Info for you:

If your motherboard will handle 3 cards @ 8X there is no loss for you over having 2 cards @ 16X. I would personally put my vote in for you getting AMD cards because of the recently introduced Mantle API. It has great promise for the future compared with the proprietary nature of Nvidia's offerings. These are things you need to look at before dropping cash too quickly.

You will also drop more cash for a 290X than for a 290, BUT the 290X has the worse price/performance ratio. The problem is that 290s are harder to find at a decent price. I bought a 290 for $400 (MSRP) when they first came out; now the going price is usually higher than the introductory price of the 290X, which was $550. Like I said above, Amazon has the ASUS 290X for $580, but you have to wait a few weeks for it to arrive.
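
One rough way to weigh those prices, as a sketch only: work out how much faster the pricier card would have to be to match the cheaper card's performance per dollar. The function name `required_speedup` is made up for illustration, and the only inputs are the prices quoted above ($400 for the 290, $550 and $580 for the 290X). No benchmark numbers are assumed.

```python
def required_speedup(cheaper_price, pricier_price):
    """Extra performance the pricier card needs in order to match the
    cheaper card's performance per dollar (0.375 means +37.5%)."""
    return pricier_price / cheaper_price - 1.0

# Prices quoted in the post above; the helper name is hypothetical.
launch = required_speedup(400, 550)  # 290 at MSRP vs 290X introductory price
amazon = required_speedup(400, 580)  # 290 at MSRP vs the Amazon 290X listing
print(f"launch prices: {launch:.1%}, Amazon listing: {amazon:.1%}")
```

At those prices the 290X would need to be roughly 37.5% to 45% faster to break even on value, which is the comparison to check against whatever game benchmarks you trust.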

GO SLOW, NO NEED TO RUSH, STUDY THE MARKET AND TECHNOLOGY FIRST.

dpoverlord

[H]ard|Gawd

- Joined

- Nov 18, 2004

- Messages

- 1,931

Read this, it will save you a lot of time.

http://www.overclock.net/t/1415441/...3-4-way-sli-gk110-scaling/0_100#post_20536893

failwheeldrive

Gawd

- Joined

- Jun 25, 2013

- Messages

- 530

So you still didn't read the review. Stop talking out of your ass and spreading misinformation.

You obviously have no idea what you are talking about. I like Nvidia as much as the next guy, but performance is performance. And the reviews show that AMD's 290x outperforms the GTX 780 Ti at large resolutions. Which, if I am not mistaken, is exactly what we are talking about here.

I concede that a reference 780ti will be beaten at 4k in most games by a reference 290x. It can go either way though, and the significantly higher overclocking headroom of the 780 ti (especially non reference models like the Classy and Kingpin) makes it the clear winner at every resolution. Here's a review of the tri-x 290x http://www.techpowerup.com/reviews/Sapphire/R9_290X_Tri-X_OC/6.html. The 780ti beats it in nearly every game at 5760x1080. Here's another comparison of the reference 290x vs 780 ti http://hexus.net/tech/reviews/graphics/62213-nvidia-geforce-gtx-780-ti-vs-amd-radeon-r9-290x-4k/?page=2 Once again, the 780ti pulls ahead at 4k in most games.

Add in overclocking, and the gap grows. There aren't any non reference 290xs that can keep up with the better non reference tis.

NoOther

Supreme [H]ardness

- Joined

- May 14, 2008

- Messages

- 6,468

I concede that a reference 780ti will be beaten at 4k in most games by a reference 290x. It can go either way though, and the significantly higher overclocking headroom of the 780 ti (especially non reference models like the Classy and Kingpin) makes it the clear winner at every resolution. Here's a review of the tri-x 290x http://www.techpowerup.com/reviews/Sapphire/R9_290X_Tri-X_OC/6.html. The 780ti beats it in nearly every game at 5760x1080. Here's another comparison of the reference 290x vs 780 ti http://hexus.net/tech/reviews/graphics/62213-nvidia-geforce-gtx-780-ti-vs-amd-radeon-r9-290x-4k/?page=2 Once again, the 780ti pulls ahead at 4k in most games.

Add in overclocking, and the gap grows. There aren't any non reference 290xs that can keep up with the better non reference tis.

Again you are showing stock cards in the second one, at stock clocks without any reference. Also you have shown zero proof on your continuing claims for the OC abilities. You have one review that says OC, but the techpowerup does not explain what they did to determine its max OC.

EDIT: My bad, techpowerup bought an OC card but did not attempt to OC it themselves... so again, cherry picking reviews.

failwheeldrive

Gawd

- Joined

- Jun 25, 2013

- Messages

- 530

Are you really that unfamiliar with the overclocked capabilities of GK110 and Hawaii? I thought this was an enthusiast forum. Check out the results yourself http://www.overclock.net/t/1436635/official-ocns-team-green-vs-team-red-gk110-vs-hawaii

This works too http://www.3dmark.com/hall-of-fame-2/

There's a ridiculous amount of overclocked benchmark comparisons between the two architectures. It's not difficult to see which one is ultimately more powerful.

NoOther

Supreme [H]ardness

- Joined

- May 14, 2008

- Messages

- 6,468

Are you really that unfamiliar with the overclocked capabilities of GK110 and Hawaii? I thought this was an enthusiast forum. Check out the results yourself http://www.overclock.net/t/1436635/official-ocns-team-green-vs-team-red-gk110-vs-hawaii

This works too http://www.3dmark.com/hall-of-fame-2/

There's a ridiculous amount of overclocked benchmark comparisons between the two architectures. It's not difficult to see which one is ultimately more powerful.

Benchmarks mean nothing to gameplay or overall performance. They are e-peen ratings. They also don't show what those OC values do for large resolutions, which is what we are talking about here. Also, if you go back and read some of those previous links you presented, they even called out the fact the GTX 780 Ti was struggling with its RAM at the higher resolutions. So yeah, this is an enthusiast forum, not a benchmark groupie forum. But I thought you would have gathered that by now...

EDIT: By the way your overclock.net thread is a joke. Look how many participants there are...and the actual benches they are doing...

failwheeldrive

Gawd

- Joined

- Jun 25, 2013

- Messages

- 530

Benchmarks mean nothing to gameplay or overall performance. They are e-peen ratings. They also don't show what those OC values do for large resolutions, which is what we are talking about here. Also, if you go back and read some of those previous links you presented, they even called out the fact the GTX 780 Ti was struggling with its RAM at the higher resolutions. So yeah, this is an enthusiast forum, not a benchmark groupie forum. But I thought you would have gathered that by now...

lol seriously? Benchmarks absolutely do show the capabilities and overclocking potential of a card. How could you possibly dispute that? Go read through the hundreds of examples of overclocks in the thread I linked and then come back and tell me I'm wrong. GK110 clocks higher than Hawaii, PERIOD. Resolution doesn't affect how high a card can overclock lol. A given card will overclock exactly the same whether it's at 1080p or 4k.

And there are gaming benchmarks in that thread as well.

I'm providing concrete examples here, what exactly have you done to back up your argument?

NoOther

Supreme [H]ardness

- Joined

- May 14, 2008

- Messages

- 6,468

lol seriously? Benchmarks absolutely do show the capabilities and overclocking potential of a card. How could you possibly dispute that? Go read through the hundreds of examples of overclocks in the thread I linked and then come back and tell me I'm wrong. GK110 clocks higher than Hawaii, PERIOD.

And there are gaming benchmarks in that thread as well.

I'm providing concrete examples here, what exactly have you done to back up your argument?

You aren't providing anything but FUD. Benchmarks are for checking speeds of a card, but that does not translate the same to performance in games. Nor does it translate to performance at larger resolutions. I don't need to back up my argument, it was made using the reviews done right here at HardOCP. You have shown absolutely nothing to refute that.

Please, when you get out of the stone age with your "GK110 clocks higher than Hawaii, PERIOD." comment, then we can discuss. But as long as you are trying to pose clock speed as the same as real world performance, you are at a serious disadvantage.

failwheeldrive

Gawd

- Joined

- Jun 25, 2013

- Messages

- 530

So from your perspective, overclocking a card doesn't show an improvement in gaming performance? You did see that every single one of the links I provided with the exception of the 3DMark HOF showed gaming benchmarks, right? Ya know, tests that show a card's performance in gaming. I showed that reference GK110 and Hawaii are very close at stock speeds and high resolutions... you can find examples of either arch performing better in different games, due to differences in testing methodology, drivers, game selection, and the silicon lottery. My additional argument was that GK110 does generally overclock higher than Hawaii. This has been proven time and time again. GK110 has better non reference models at the moment, runs cooler, and uses less power, all of which contribute to the better overall overclocking results. If you seriously don't consider overclocking when deciding on a purchase, I think you're really missing out. It's one of the most important factors that enthusiasts consider when choosing a card. Generally, higher clock speed=better performance, with stability and temperature being the main limiting factors. There are literally thousands of examples of overclocked gaming benchmarks out there, and in the vast majority of cases GK110 outperforms Hawaii when overclocked. If you can prove otherwise, please provide some evidence. I feel that I've provided you with enough examples for you to at least see where I'm coming from lol. I've owned 5 780s, 2 Titans, 2 290s, and a 290x over the last several months, and my personal experience with these cards has led me to the same conclusion that I've drawn from the information online.

You aren't providing anything but FUD. Benchmarks are for checking speeds of a card, but that does not translate the same to performance in games. Nor does it translate to performance at larger resolutions. I don't need to back up my argument, it was made using the reviews done right here at HardOCP. You have shown absolutely nothing to refute that.

Please when you get out of the stone age with your comment, then we can discuss. But as long as you are trying to pose clock speed as the same as real world performance, you are at a serious disadvantage.

Overclocking the part of the card that is limiting your performance will give you better performance at 4k: if that's memory bandwidth, overclock the memory; if it's processing, overclock the core/shaders. The only way overclocking won't help is if the part you overclock isn't the bottleneck.
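
To make the bottleneck point concrete, here is a toy model, not a measurement of any real card: assume each frame takes as long as the slower of two stages (shader work and memory traffic), and that an overclock speeds up only its own stage. The function name, parameters, and millisecond figures are all hypothetical.

```python
def frame_time_ms(core_ms, memory_ms, core_oc=1.0, memory_oc=1.0):
    """Toy model: a frame takes as long as the slower of the two stages.

    core_ms / memory_ms are hypothetical per-frame costs at stock clocks;
    core_oc / memory_oc are clock multipliers (1.10 means +10%).
    """
    return max(core_ms / core_oc, memory_ms / memory_oc)

# Hypothetical memory-bound frame: 20 ms of shader work, 25 ms of memory traffic.
stock = frame_time_ms(20.0, 25.0)
core_only = frame_time_ms(20.0, 25.0, core_oc=1.10)      # overclock the wrong part
memory_only = frame_time_ms(20.0, 25.0, memory_oc=1.10)  # overclock the bottleneck

print(f"stock {stock:.1f} ms, core OC {core_only:.1f} ms, memory OC {memory_only:.1f} ms")
```

In this made-up case the core overclock changes nothing, while the memory overclock shortens the frame. Real GPUs overlap the two stages, so treat this as an illustration of the idea only.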

Also "I don't need to back up my argument"? Yeah, nothing you say will have any relevance without backing it up, this is the internet, anyone can pull shit out of their ass.

This just in, Nvidia and AMD got together to make the 9001GTFaIL with 1024 bit memory bus, 16GB VRAM, 4k shaders, and a clock speed of 6GHZ.

See?

Either way, from a quick google it looks like they are neck and neck. If OP is going to play Mantle games I'd go with 290x's, otherwise, 780TI's.

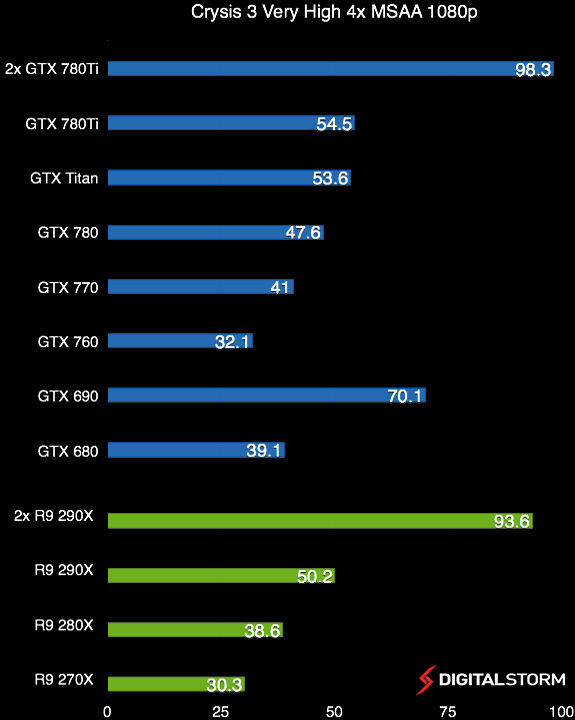

Note this graph doesn't have Mantle benchmarks in it, but from what I've read Mantle helps a lot in dual/tri CF applications.

NoOther

Supreme [H]ardness

- Joined

- May 14, 2008

- Messages

- 6,468

Overclocking the part of the card that is limiting your performance will give you better performance at 4k: if that's memory bandwidth, overclock the memory; if it's processing, overclock the core/shaders. The only way overclocking won't help is if the part you overclock isn't the bottleneck.

Also "I don't need to back up my argument"? Yeah, nothing you say will have any relevance without backing it up, this is the internet, anyone can pull shit out of their ass.

First off, I never said anything about OCs not being relevant, only benchmarks. There are no reviews out there showing both cards at max OCs running 4k resolution. You missed the whole point. My argument was backed up by the post I already made regarding [H]'s own review of both 780Tis and 290x at 4k resolution. Large resolutions are what this particular thread is about. It still comes down to this: why would it be foolish for the OP to buy AMD 290xs? failwheeldrive claimed that the 780Ti was far superior to the 290x and that it would be silly to buy 290xs for this setup...

NoOther

Supreme [H]ardness

- Joined

- May 14, 2008

- Messages

- 6,468

lol seriously? Benchmarks absolutely do show the capabilities and overclocking potential of a card. How could you possibly dispute that? Go read through the hundreds of examples of overclocks in the thread I linked and then come back and tell me I'm wrong. GK110 clocks higher than Hawaii, PERIOD. Resolution doesn't affect how high a card can overclock lol. A given card will overclock exactly the same whether it's at 1080p or 4k.

And there are gaming benchmarks in that thread as well.

I'm providing concrete examples here, what exactly have you done to back up your argument?

That was not at all what I said. Benchmarks do not reflect real world performance in games, especially at higher resolutions. That is why [H] and many other sites do their reviews using games instead of benchmarks.

failwheeldrive

Gawd

- Joined

- Jun 25, 2013

- Messages

- 530

That was not at all what I said. Benchmarks do not reflect real world performance in games, especially at higher resolutions. That is why [H] and many other sites do their reviews using games instead of benchmarks.

How can you possibly say that GAMING benchmarks don't accurately reflect GAMING performance? You did see that the links I posted showed gaming benchmarks, right? I only said that about 15 times lol.

And regardless, the links I posted were to show the overclocking potential of GK110 and Hawaii, not how much overclocking affects high resolutions (which it does, exactly the same as it does lower resolutions.) I posted links to reviews that showed 780 tis neck and neck and even beating out the 290x at stock clocks. I even agreed with you when saying they're really similar in terms of 4k performance. My secondary argument is simply that GK110 has more overclocking headroom than Hawaii, which I backed up with fact based evidence (when I could have simply given you anecdotal evidence based on my ownership of both arches.) If you want to try and downplay the importance of overclocking, then that's totally fine. The fact remains that overclocking remains a valid way to increase performance, REGARDLESS OF RESOLUTION. Playing at 4k or 720p doesn't make a bit of difference assuming there isn't some other bottleneck (ram, stability, temps, etc.)... when both cards perform as similarly as they do at reference/stock speeds, the card that overclocks higher is going to win.

NoOther

Supreme [H]ardness

- Joined

- May 14, 2008

- Messages

- 6,468

How can you possibly say that GAMING benchmarks don't accurately reflect GAMING performance? You did see that the links I posted showed gaming benchmarks, right? I only said that about 15 times lol.

And regardless, the links I posted were to show the overclocking potential of GK110 and Hawaii, not how much overclocking affects high resolutions (which it does, exactly the same as it does lower resolutions.) I posted links to reviews that showed 780 tis neck and neck and even beating out the 290x at stock clocks. I even agreed with you when saying they're really similar in terms of 4k performance. My secondary argument is simply that GK110 has more overclocking headroom than Hawaii, which I backed up with fact based evidence (when I could have simply given you anecdotal evidence based on my ownership of both arches.) If you want to try and downplay the importance of overclocking, then that's totally fine. The fact remains that overclocking remains a valid way to increase performance, REGARDLESS OF RESOLUTION. Playing at 4k or 720p doesn't make a bit of difference assuming there isn't some other bottleneck (ram, stability, temps, etc.)... when both cards perform as similarly as they do at reference/stock speeds, the card that overclocks higher is going to win.

But that isn't true. You continued to say the 780Ti was the only way to go and that you would get the same bottleneck in both GPUs, which actually isn't true and some of the links you provided even said that same thing, that the 290x does better at larger resolutions. That is what we are discussing here, larger resolutions. And higher OCs do not necessarily reflect in performance for high resolutions. The links you provided for 'gaming' performance do not show the scenarios we are talking about. The [H] review however, does. So I still fail to see what you are trying to gain here. You didn't suggest they were close, you continued and still continue to say that the 780Ti is hands down the best. Which it isn't for this application.

failwheeldrive

Gawd

- Joined

- Jun 25, 2013

- Messages

- 530

But that isn't true. You continued to say the 780Ti was the only way to go and that you would get the same bottleneck in both GPUs, which actually isn't true and some of the links you provided even said that same thing, that the 290x does better at larger resolutions. That is what we are discussing here, larger resolutions. And higher OCs do not necessarily reflect in performance for high resolutions. The links you provided for 'gaming' performance do not show the scenarios we are talking about. The [H] review however, does. So I still fail to see what you are trying to gain here. You didn't suggest they were close, you continued and still continue to say that the 780Ti is hands down the best. Which it isn't for this application.

Dude, reread my earlier posts. One of the first things I said was that the 290x hangs with and even beats the 780 ti at 4k for some games... as I have shown from the other 4k comparisons I posted, either one can end up beating the other by a small amount.

I said that the memory in the 290x would be a bottleneck when playing modded skyrim at 4k. I didn't say every game, just Skyrim and other graphically intensive games with mods.

Once again, the 4k comparisons I posted showed the 780 ti beating the 290x in most games. The other links showed that the 780 ti overclocks higher than the 290x. Now please explain to me how a card that hangs with and even beats another card is going to lose to the second card when it overclocks significantly higher. It completely defies logic. If both cards offer similar stock clocked performance at 4k, the card that overclocks by 30% is going to spank the card that overclocks by 10%. It's just how it is.
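
For scale, here is what the 30% vs 10% argument works out to under an idealized assumption that frame rate rises in lockstep with core clock. That assumption is mine, not something the linked reviews establish; the `scaling` parameter and the 40 fps stock figure are hypothetical, there only to show how the lead shrinks when scaling is less than perfect.

```python
def overclocked_fps(stock_fps, overclock_pct, scaling=1.0):
    """Estimate fps after a core overclock.

    scaling=1.0 is the idealized case where fps rises in lockstep with the
    clock; lower values model games that scale less well (hypothetical knob).
    """
    return stock_fps * (1.0 + scaling * overclock_pct / 100.0)

stock = 40.0  # hypothetical stock fps, assumed identical for both cards
card_a = overclocked_fps(stock, 30)  # card with 30% overclocking headroom
card_b = overclocked_fps(stock, 10)  # card with 10% overclocking headroom
print(f"{card_a:.1f} fps vs {card_b:.1f} fps, lead {card_a / card_b - 1:.1%}")
```

Even in the ideal case the lead is about 18%, not 20%, because both cards gain something. With `scaling` below 1.0, or with a memory bottleneck, the gap is smaller still.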

And finally, please post some 4k gaming benchmarks that prove the 3gb frame buffer is going to be a limiting factor. Unless the game is modded with insane textures, the 3gb card pulls off the exact same numbers as the 4gb card. Hell, the 2gb Mars 760 pulls off the same 4k performance as the 4gb 290x, despite having less memory and significantly less memory bandwidth. All you've done this entire time is completely ignore rational thought and fact based argument with the response of "nuh uh doesn't prove it nope." It couldn't be clearer if it smacked you in the face, but I'll go ahead and break it down for the 50th time: both cards offer similar stock performance... the 780 ti overclocks much higher... winrar=780 ti.

NoOther

Supreme [H]ardness

- Joined

- May 14, 2008

- Messages

- 6,468

Dude, reread my earlier posts. One of the first things I said was that the 290x hangs with and even beats the 780 ti at 4k for some games... as I have shown from the other 4k comparisons I posted, either one can end up beating the other by a small amount.

I said that the memory in the 290x would be a bottleneck when playing modded skyrim at 4k. I didn't say every game, just Skyrim and other graphically intensive games with mods.

I think it is you who needs to reread what you posted. Here, let me repost them for you. Tell me where you stated any such thing? And why would memory on a 290x be a bottleneck?

In reference to the 290x vs 780Ti, here is what you said:

First thing you said:

Remember you're going to be gaming on a larger res than 4k, and Skyrim can easily break 3gb vram at 1080p with mods. Since both cards are going to be a bottleneck, get the 780 ti. It's flat out faster, cooler, and quieter. A non ref ti like the Classy or Kingpin is your best bet. The 780 is still better bang for your buck though.

Second thing you said:

The 780 ti will be faster than the 290x at these resolutions and will be fine in most games without aa, but the fact remains any single card solution won't be enough for this resolution at decent settings.

Third thing you said:

Unless OP is planning on water cooling, I'd avoid the 290 and 290x (especially reference) like the plague. They're hot, loud, and don't overclock for ****. While the extra 1gb vram over the 780 ti and 780 is nice, Nvidia still has the faster, cooler, and quieter cards, even at 4k+. Add overclocking to the mix and Nvidia wins hands down. And if OP lives in the States, he'll have to deal with the ridiculous AMD tax that retailers have been piling on the red cards.

Fourth thing you said:

Lol no. The 780 ti edges out the 290x at stock clocks across the board. Some games run slightly better on the 290x, but overall the ti is faster. Once you add in overclocking, the ti is the clear winner. A non ref ti at 1300+ decimates every current overclocked 290x.

Please tell me where you ever said the 290x and 780Ti traded off or ever made any comments other than insinuating the 780 Ti is the best card out there flat out.

Now for the rest:

Once again, the 4k comparisons I posted showed the 780 ti beating the 290x in most games. The other links showed that the 780 ti overclocks higher than the 290x. Now please explain to me how a card that hangs with and even beats another card is going to lose to the second card when it overclocks significantly higher. It completely defies logic. If both cards offer similar stock clocked performance at 4k, the card that overclocks by 30% is going to spank the card that overclocks by 10%. It's just how it is.

And finally, please post some 4k gaming benchmarks that prove the 3gb frame buffer is going to be a limiting factor. Unless the game is modded with insane textures, the 3gb card pulls off the exact same numbers as the 4gb card. Hell, the 2gb Mars 760 pulls off the same 4k performance as the 4gb 290x, despite having less memory and significantly less memory bandwidth. All you've done this entire time is completely ignore rational thought and fact based argument with the response of "nuh uh doesn't prove it nope." It couldn't be clearer if it smacked you in the face, but I'll go ahead and break it down for the 50th time: both cards offer similar stock performance... the 780 ti overclocks much higher... winrar=780 ti.

I am sorry, but which of your reviews show that? The Hexus review was a single GPU review, not a CF/SLI review. Your Techpowerup review did not show 4k at all, and even in that one, most of the games seem to be leaning toward the 290x even without putting it in Uber mode. Remember, the Uber mode is similar to the overclock that makes a GTX780 a 780 Ti. The Overclock.net thread only had 5 people posting official numbers, and 4 of them were Nvidia users. And then you post a benchmark site, 3DMark. I mean, seriously? Let us go back to some adequate reviews on the actual topic being discussed in this thread, ultra large resolutions. Here look at this. Here again is the [H] review and here is the Anand comparison. It also isn't just the higher VRAM, it is also how it is used. Note that pretty much across the board at ultra high resolutions the 290x wins pretty much every time. And this is what this thread is about, high resolutions. Now please stop making false claims.

failwheeldrive

Gawd

- Joined

- Jun 25, 2013

- Messages

- 530

lol I'm glad you reread my posts, but you're obviously still a little confused. Do you see where I said that Skyrim can use more than 3gb at 1080p while modded? That game will be a bottleneck at 4k+ on either card. That's what I was saying.

The next post of mine you quoted simply says that a single card won't handle 3x1440p at decent settings lol. Which is true... a single 780 ti or 290x would be crippled at this res.

No idea why you quoted the next two things... lol

And this is where I said they trade blows at stock (and even that the 290x wins in a lot of cases.)

I concede that a reference 780ti will be beaten at 4k in most games by a reference 290x. It can go either way though, and the significantly higher overclocking headroom of the 780 ti (especially non reference models like the Classy and Kingpin) makes it the clear winner at every resolution. Here's a review of the tri-x 290x http://www.techpowerup.com/reviews/Sapphire/R9_290X_Tri-X_OC/6.html. The 780ti beats it in nearly every game at 5760x1080. Here's another comparison of the reference 290x vs 780 ti http://hexus.net/tech/reviews/graphics/62213-nvidia-geforce-gtx-780-ti-vs-amd-radeon-r9-290x-4k/?page=2 Once again, the 780ti pulls ahead at 4k in most games.

Add in overclocking, and the gap grows. There aren't any non reference 290xs that can keep up with the better non reference tis.

Finally, how many times do I have to remind you that the overclocking results I linked on OCN and 3DMark were to show the overclocking headroom of both architectures, NOT to show overclocked 4k performance. Dunno what you're talking about by only 5 people in that thread, there are dozens of different individuals providing benchmarks in that thread. The techpowerup had results 5760x1080, which is considered high resolution lol. It was also pitting the fastest currently available non reference 290x against the 780 ti, and it STILL LOST. I didn't know we were only talking about multi-GPU configs now, too? Wow, you just keep raising the bar, don't you? lol

And once again, you still can't provide a single benchmark example of the 780 ti's 3gb being a bottleneck. They're neck and neck in pretty much every single game lol. The difference is one card doesn't run like a nuclear reactor, sound like a hair dryer, or throttle like crazy, preventing any decent overclock.

NoOther

Supreme [H]ardness

- Joined

- May 14, 2008

- Messages

- 6,468

Finally, how many times do I have to remind you that the overclocking results I linked on OCN and 3DMark were to show the overclocking headroom of both architectures, NOT to show overclocked 4k performance. Dunno what you're talking about by only 5 people in that thread, there are dozens of different individuals providing benchmarks in that thread. The techpowerup had results 5760x1080, which is considered high resolution lol. It was also pitting the fastest currently available non reference 290x against the 780 ti, and it STILL LOST. I didn't know we were only talking about multi-GPU configs now, too? Wow, you just keep raising the bar, don't you? lol

And once again, you still can't provide a single benchmark example of the 780 ti's 3gb being a bottleneck. They're neck and neck in pretty much every single game lol. The difference is one card doesn't run like a nuclear reactor, sound like a hair dryer, or throttle like crazy, preventing any decent overclock.

You can say all you want, but you have been proven wrong over and over, you just refuse to see it. I provided you all the information, you just refuse to look at it, instead posting stuff that doesn't have any effect on this thread. 5760x1080 is not 7680x1440, it isn't even close. It's not even in the same ballpark as 4k. That is what we are discussing here, but you are too ignorant to see that or follow the many posts, information, and links provided for you.

failwheeldrive

Gawd

- Joined

- Jun 25, 2013

- Messages

- 530

If we're only talking about 7680x1440 then the benchmarks you posted aren't applicable either, now are they? And how is 5760x1080 not in the ballpark of 4k? It's within 25% of the total pixel count, pretty close if you ask me. In fact, 5760x1080 (25% fewer pixels than 4k) is closer to 4k than 7680x1440 is (roughly 33% more pixels than 4k). And I did post links to 4k results too that showed the 780 ti beating the 290x at most games. That isn't even the argument here though, as I've said a dozen times they both perform roughly the same at stock clocks. The area where the 780 ti trumps the 290x is in overclocking, and that's something you haven't been able to refute with a single shred of evidence. You only come back with the ridiculous argument that overclocking doesn't increase gaming performance, which is a huge load of garbage lol.

NoOther

Supreme [H]ardness

- Joined

- May 14, 2008

- Messages

- 6,468

If we're only talking about 7680x1440 then the benchmarks you posted aren't applicable either, now are they? And how is 5760x1080 not in the ballpark of 4k? It's within 25% of the total pixel count, pretty close if you ask me. In fact, 5760x1080 (25% fewer pixels than 4k) is closer to 4k than 7680x1440 is (roughly 33% more pixels than 4k). And I did post links to 4k results too that showed the 780 ti beating the 290x at most games. That isn't even the argument here though, as I've said a dozen times they both perform roughly the same at stock clocks. The area where the 780 ti trumps the 290x is in overclocking, and that's something you haven't been able to refute with a single shred of evidence. You only come back with the ridiculous argument that overclocking doesn't increase gaming performance, which is a huge load of garbage lol.

Really? Come on man. This thread is about 7680x1440 because that is what the OP asked about. Your comment about 5760x1080 being closer to 4k is ridiculous in that context. Why would you use reviews of cards at resolutions even lower than 4k to try and determine the best scenario for 7680x1440? That makes zero sense whatsoever. Almost every review out there shows that the higher the res goes, the better the 290x performs vs the 780 Ti, plain and simple. You can scream OC til you are blue in the face, it doesn't get you any closer to the truth. The simple fact is, 290x CF just performs better at 4k and up.

failwheeldrive

Gawd

- Joined

- Jun 25, 2013

- Messages

- 530

The point is, you can focus on 4k benchmarks in a thread about 7680x1440, but I bring up a single review that uses 5760x1080 and I'm somehow being ridiculous lol? The fact remains that 5760x1080 is closer to 4k than 7680x1440 is. If you say that 5760x1080 is nowhere near 4k, then 4k is nowhere near 7680x1440. See the hypocrisy in that? And once again you're still focusing on that one review when I posted another with it that included 4k testing. I could link more that I looked up the other night, but I'm pretty tired of this argument at this point lol

And I still disagree with you completely. The 290x and 780 ti perform similarly at 4k. When you bring cfx into the mix that tends to favor AMD with their better multi-GPU scaling, but any serious hardware enthusiast spending thousands on a beastly rig and multimonitor setup isn't going to focus only on stock clocked reference cards. The 780 ti flat out overclocks higher. You know it, I know it, why ignore it?

Probably GTX Titan if the only game you care about is modded Skyrim at that resolution, and maybe BF4... is it worth it? Not really...

GTX 780 Ti for everything else, although it's hard to say after the release of Mantle. Haven't seen enough yet to make that claim.

Ozymandias

[H]ard|Gawd

- Joined

- Jun 21, 2004

- Messages

- 1,610

7680x1440 = 11.06 Megapixels

4K (3840x2160) = 8.29 Megapixels

5760x1080 = 6.22 Megapixels

Why use 5760x1080 benchmarks when 4K benchmarks are closer to the resolution that OP wants to play at? Overclocks aren't guaranteed and OP hasn't mentioned it at all - maybe he (or she) isn't interested.
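
For anyone who wants to check the numbers, here is a minimal Python sketch of the pixel-count arithmetic above. The helper names `megapixels` and `percent_vs` are made up for illustration; only the three resolutions come from this thread.

```python
# Pixel counts for the three resolutions being argued about in this thread.
RESOLUTIONS = {
    "7680x1440": (7680, 1440),
    "4K (3840x2160)": (3840, 2160),
    "5760x1080": (5760, 1080),
}


def megapixels(width, height):
    """Total pixel count in millions of pixels."""
    return width * height / 1_000_000


def percent_vs(res, baseline):
    """Percent more (+) or fewer (-) pixels that `res` has than `baseline`."""
    pixels = res[0] * res[1]
    base_pixels = baseline[0] * baseline[1]
    return (pixels - base_pixels) / base_pixels * 100


if __name__ == "__main__":
    four_k = RESOLUTIONS["4K (3840x2160)"]
    for name, (w, h) in RESOLUTIONS.items():
        print(f"{name}: {megapixels(w, h):.2f} MP, "
              f"{percent_vs((w, h), four_k):+.1f}% vs 4K")
```

In pixel terms both sides of the argument hold up: 5760x1080 sits 25% below 4K, and 7680x1440 sits about 33% above it, so 4K is the nearer of the two to the OP's resolution.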

It's a bit of a strange situation in terms of "how" 4k resolution is rendered at the current time. Since no devices have HDMI 2.0, and it doesn't appear to be hitting the market anytime soon, the current method for 4k resolution is to "stitch" two 1920-pixel-wide images side by side via MST, which is essentially 2 screen surround, whereas 5760*1080 or 5760*1200 is 3 screen surround.

To my understanding, Nvidia only recently added 2 screen surround support for 3D applications, specifically for 4k resolution, while it has had 3 screen surround for 3D apps for a very long time (obviously..). My theory is that this is why there are some anomalies for NV at this resolution - I've seen tons of 5760*1080 benchmarks with the NV hardware having a clear lead, whereas at 4k it takes a precipitous drop, generally speaking. I personally think it's because of this reason, but I'm not sure - that doesn't excuse Nvidia from getting it to work a bit more fluidly, but nonetheless. AMD has had 2 screen 3D application support for a while and NV has not. So it could be a driver issue, given that 4k benchmarks are really off compared to 3 screen resolutions such as 5760*1080.

In any case, it would be interesting to see direct benchmarks between the two at 7680*1440. NV has generally always done well in 3 screen surround benchmarks especially with Kepler, but I have not seen any websites benchmarking 7680*1440.

Read [H]'s review of the XFX 290X DD. Anyone who looks at this and somehow thinks that the 780 ti is a better choice either has an agenda to push nVidia cards or suffers a severe lack of reading comprehension.

It's also obvious from looking at this and other reviews the 780 ti suffers further as res increases (or the 290X suffers less if you prefer). 7680*1440 is going to extend the differences. Add to that the extra VRAM it's going to take to push so many pixels.

WARNING:

With that said, there is still the possibility of hardware incompatibility and possible driver issues at that res. I wouldn't buy anything until I could be assured the setup will work together. That's a lot of pixels and a lot of bandwidth. If your monitors aren't DP capable (which I would highly recommend) you are going to require an adapter to run the third monitor off of the DP out of the card. That could create issues. There are a lot of reported issues with DP adapters, generally speaking. You also need to be concerned that the mobo has no issues with AMD's new bridge-less crossfire. I haven't read about any mobo incompatibilities, but there isn't a lot of info available for that res online and I've seen issues in the past with some chipsets (X58) or mobos needing bios updates.
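
To put a rough number on "a lot of pixels and a lot of bandwidth", here is a minimal Python sketch of the raw pixel data rate for this setup. It assumes 24 bits per pixel and a 60 Hz refresh rate (neither is stated by the OP) and ignores blanking intervals and link encoding overhead, so the real link requirement is higher than these figures. `raw_gbit_per_s` is a hypothetical helper name.

```python
def raw_gbit_per_s(width, height, refresh_hz, bits_per_pixel=24):
    """Uncompressed pixel data rate in Gbit/s.

    Assumption: active pixels only, no blanking and no link encoding
    overhead, so this is a lower bound on what the cable must carry.
    """
    return width * height * refresh_hz * bits_per_pixel / 1e9


if __name__ == "__main__":
    per_monitor = raw_gbit_per_s(2560, 1440, 60)
    all_three = raw_gbit_per_s(7680, 1440, 60)
    print(f"One 2560x1440 monitor at 60 Hz: {per_monitor:.2f} Gbit/s")
    print(f"Three monitors (7680x1440) at 60 Hz: {all_three:.2f} Gbit/s")
```

Each output only has to carry its own monitor's share, which is why the adapter on the third monitor matters more than the total.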

I had 3x 780s under water, clocked daily at 1202 MHz, and I currently own 4x 290X ON AIR (got the blocks but a lot of work related things to be done first), and my current setup is 3x 1440p X-Star DP2710.

3x 780s at 1202 MHz vs 3x 290X ON AIR and downclocking to 800 MHz, at 1440p

In most games... the 290s will rip the 780s easily, no joking.

Examples:

BF4:

780s = Unplayable... Major Stuttering

290Xs = 60-100 fps... On Quadfire 70-120 fps

Minimum frames are much much better on the 290Xs.

BF3:

780s = Very playable and FPS is around 50-70

290Xs = Extremely playable with 2xAA, FPS = 75-120. Didn't test Quadfire.

Hitman Absolution:

780s: Very Playable 45-60 fps.

290Xs: Extremely playable with 2xAA, 50-65 fps. Quadfire = 55-70 fps.

These were the games I tested before I took down the rig for the rebuild and water cooling.

The 780s had a 3930k clocked at 5 GHz and they were running at 1202 MHz with PCIe 3.0 patched.

The 290Xs in Trifire had a 4930k clocked at 4.5 GHz and they were running pure stock, downclocking to 800-900 MHz after 10 minutes of gaming due to heat, with PCIe 3.0 enabled and no patch.

DP issues seem to fare better on the 290Xs than on my previous 7950s... A LOT LESS signal loss on the same adapter, and the only thing I was missing on the 290Xs compared to the 780s was overclocking my screens to 96 Hz (I can do 75 Hz on the current adapter), but I just ordered the 120 Hz 3D adapter from Accell, which allows me to go over 100 Hz.

TL;DR: If you are gaming on BF4, go for 290X Trifire minimum, and if you want to play Assassin's Creed, go for the 780 Ti.

failwheeldrive

Gawd

- Joined

- Jun 25, 2013

- Messages

- 530

7680x1440 = 11.06 Megapixels

4K (3840x2160) = 8.29 Megapixels

5760x1080 = 6.22 Megapixels

Why use 5760x1080 benchmarks when 4K benchmarks are closer to the resolution that OP wants to play at? Overclocks aren't guaranteed and OP hasn't mentioned it at all - maybe he (or she) isn't interested.

I never claimed that 5760x1080 is closer to 7680x1440 than 4k is. My point was that if 5760x1080 is nowhere near 4k like the guy I was speaking with said, then 4k itself is also nowhere near 7680x1440, making the benchmarks he linked completely invalid as well. I simply linked a single review that had 5760x1080 benchmarks because it was a comparison of the best non-reference 290x currently available.

And yeah, I'm sure an enthusiast that spends $5k+ on their gaming setup isn't at all interested in overclocking. It's just too difficult to master

And no, overclocking isn't guaranteed, especially if you're going with reference AMD. You'd be hard pressed to find a 780 ti that doesn't overclock at all though. The average reference ti does 1200+ MHz on the core at 1.2v. Better non-reference cards are closer to 1300 MHz.

NoOther

Supreme [H]ardness

- Joined

- May 14, 2008

- Messages

- 6,468

I never claimed that 5760x1080 is closer to 7680x1440 than 4k is. My point was that if 5760x1080 is nowhere near 4k like the guy I was speaking with said, then 4k itself is also nowhere near 7680x1440, making the benchmarks he linked completely invalid as well. I simply linked a single review that had 5760x1080 benchmarks because it was a comparison of the best non-reference 290x currently available.

The guy you were speaking to was me, and my point was extremely valid. Your links were not good ones for the topic at hand. They failed to provide adequate information, especially when reviews at higher resolutions show different results. And the resolutions we are talking about are even higher than that. The fact still remains, if the topic is about 7680x1440, why would you choose reviews at 5760x1080 instead of ones that are at least at 4k? It makes no sense. How come so many people here can see that, but you refuse to listen?

And yeah, I'm sure an enthusiast that spends $5k+ on their gaming setup isn't at all interested in overclocking. It's just too difficult to master

I know a great many people that drop that amount of money and don't overclock. I think you would be quite surprised by the percentage of people with high end setups who do not overclock. In fact there are a lot of people who used to be heavy overclockers, even in this forum and others, that simply don't bother anymore.

veedubfreak

2[H]4U

- Joined

- Dec 10, 2007

- Messages

- 2,070

My 290s don't overclock for crap. I'm really debating on selling my 3 unlocked 290s and getting 2 classified Ti's, mostly because the main game I play currently doesn't support SLI/Crossfire. The only reason this has crossed my mind is that I can sell my 3 cards for a profit, and I'm too lazy to figure out how to altcoin mine.
