Hey Guys,

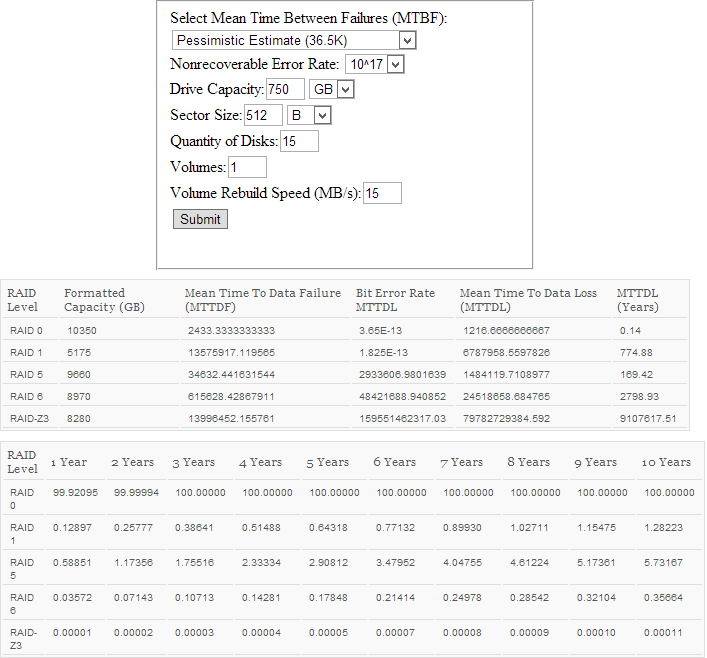

Thanks to you guys I'm nearly done with my setup; best tech forum on the internet. How would you guys split 15 x 750 GB drives in ZFS? I'm thinking just a RAIDZ2, but would you go so far as a RAIDZ3? Or maybe RAIDZ2 with a hot spare? This is for media storage.

They are enterprise drives with low usage, but they will run 24/7. Even though they're enterprise drives, I'm still nervous because there are 15 of them.
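
For reference, here's a rough back-of-the-envelope sketch of the raw usable space for the three options I'm weighing. It assumes a single vdev in each case, counts 750 GB per drive in decimal units, and ignores ZFS metadata, padding, and reserved-space overhead, so real numbers will come out lower. The `usable_gb` helper is just something I made up for the comparison, not a ZFS tool.

```python
# Rough capacity comparison for 15 x 750 GB drives in a single RAIDZ vdev.
# Assumption: usable space ~= (drives - spares - parity) * drive size.
# Real pools lose a bit more to metadata, padding, and reserved space.

DRIVES = 15
DRIVE_GB = 750


def usable_gb(total_drives, parity, spares=0, drive_gb=DRIVE_GB):
    """Approximate usable capacity in GB for one RAIDZ vdev (hypothetical helper)."""
    data_drives = total_drives - spares - parity
    return data_drives * drive_gb


layouts = {
    "RAIDZ2, 15 wide": usable_gb(DRIVES, parity=2),
    "RAIDZ3, 15 wide": usable_gb(DRIVES, parity=3),
    "RAIDZ2, 14 wide + 1 hot spare": usable_gb(DRIVES, parity=2, spares=1),
}

for name, gb in layouts.items():
    print(f"{name}: {gb} GB (~{gb / 1000:.2f} TB)")
```

So by this estimate RAIDZ3 and RAIDZ2 plus a hot spare give the same space. The difference is that RAIDZ3 keeps the third drive's worth of redundancy live all the time, while the spare only helps after a resilver finishes.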

Let me know your thoughts, thanks!

