
10 Gb Connection

- Thread starter N Bates

- Start date

Yawn, I am a Storage admin for a Enterprise company, you don't need to tell me about disk limitations when I work on EMC storages all day long.

If you guys would buy a real SAN you wouldn't be so busy

Forgot to add 1B = 8b

N Bates You will have to check your NAS for some kind of performance monitor and check the activity time % on each of the disks. If they are all at 100% when doing the transfers, assuming the disks are in a raid array, then you're being limited by disk speed.

Didn't forget to add that. I just assumed that people in here would know how bits and bytes relate to each other.

How is that? My WD Gold 10TB is hitting 230-260 MB/s during backups, and it's the same for read and write. I am sure there are faster spinners than this, but maybe not by much.

Umm, mb doesn't equal GB. Read the link I posted.

Yawn, I am a Storage admin for a Enterprise company, you don't need to tell me about disk limitations when I work on EMC storages all day long.

Then I am not sure how you don't understand that 260 MB/s > 1 Gbps (in fact it's a little over 2 Gbps). Even if his speed is because of caching, other drives can hit over 180 MB/s sustained writes which is well over 1 Gbps. https://us.hardware.info/reviews/72...ve-models-compared-test-results-hd-tune-write Yawn indeed, you need more coffee before you continue to "admin" your "Enterprise company".
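For anyone following the arithmetic, here is a minimal Python sketch of the byte-to-bit conversion being argued about. It assumes decimal units (1 MB = 10^6 bytes, 1 Gbit = 10^9 bits); the function name is made up for illustration.

```python
def mbytes_per_s_to_gbits_per_s(mb_per_s: float) -> float:
    """Convert megabytes per second to gigabits per second (decimal units)."""
    bits_per_byte = 8
    # MB/s -> Mbit/s (times 8), then Mbit/s -> Gbit/s (divide by 1000)
    return mb_per_s * bits_per_byte / 1000


# Disk speeds mentioned in the thread, expressed as network line rates
for rate in (180, 230, 260):
    print(f"{rate} MB/s = {mbytes_per_s_to_gbits_per_s(rate):.2f} Gbit/s")
```

By this conversion, any sustained rate above 125 MB/s is more than a 1 Gbit/s link can carry even before protocol overhead is counted.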

Red Falcon

[H]ard DCOTM December 2023

- Joined

- May 7, 2007

- Messages

- 12,445

Yawn, I am a Storage admin for a Enterprise company, you don't need to tell me about disk limitations when I work on EMC storages all day long.

Apparently, he does since you don't appear to realize that a modern 7200RPM WD Gold HDD can far exceed a 1 Gigabit connection and can even saturate a 2 Gigabit connection... just saying.

Remains the question why iperf3 gives 2-3 Gbit/s pure network performance while nearly 10 Gbit/s can be achieved when everything is perfect (nic, driver, settings, cables). In my tests mostly Windows (nic, nic setting or driver) or cabling was the reason for less than 3 Gbit/s in a 10Gbit/s network. For Intel nics, the newest drivers from Intel are better than the included. Next I would try a different (crossover) cable to connect server and client directly to rule out a bad cable.
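To compare runs after changing a driver, NIC setting or cable, it can help to script the iperf3 test. The sketch below is only an illustration: it assumes iperf3 is installed on the client, that a server is already running with `iperf3 -s`, and that the JSON report of a TCP test carries the receiver-side rate under `end.sum_received.bits_per_second`. The function names and the example address are hypothetical.

```python
import json
import subprocess


def received_gbits(report: dict) -> float:
    """Extract the receiver-side throughput in Gbit/s from an iperf3 JSON report."""
    return report["end"]["sum_received"]["bits_per_second"] / 1e9


def run_iperf3(server: str, streams: int = 4, seconds: int = 10) -> float:
    """Run a TCP test against `server` and return the measured Gbit/s."""
    # -c: client mode, -P: parallel streams, -t: duration in seconds, -J: JSON output
    cmd = ["iperf3", "-c", server, "-P", str(streams), "-t", str(seconds), "-J"]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return received_gbits(json.loads(result.stdout))


# Example call (the address is a placeholder for your NAS):
# print(f"{run_iperf3('192.168.1.10'):.2f} Gbit/s")
```

Running it once through the switch and once over a direct cable gives two numbers that can be compared directly, which is the point of the cable test suggested above.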

If you would read above he has faster transfer rates when using the 1gb nic so I'm still assuming there's some configuration issues here, regardless of disk speed maximums.

Huh? He has iperf tests showing 3.5Gb/s transfers.... That's not possible on a 1Gb nic.

Didn't forget to add that. I just assumed that people in here would know how bits and bytes relate to each other.

Then I am not sure how you don't understand that 260 MB/s > 1 Gbps (in fact it's a little over 2 Gbps). Even if his speed is because of caching, other drives can hit over 180 MB/s sustained writes which is well over 1 Gbps. https://us.hardware.info/reviews/72...ve-models-compared-test-results-hd-tune-write Yawn indeed, you need more coffee before you continue to "admin" your "Enterprise company".

I think you'd be surprised how few people know how B/b relate.

The OP never mentioned what disk he's using, so you're the one assuming the speed/size of his disks. The only thing NIZMO was incorrect saying was that NO spinning disks will saturate a 1Gb lan (~112MB/s w/ overhead), but a large majority will not. Especially smaller, cheaper consumer drives most likely being used in this NAS.

Richneerd

Gawd

- Joined

- Jan 17, 2010

- Messages

- 642

It's working, you're just hitting the limitations due to the hard drives.

Enjoy your 10GB devices.


If you guys would buy a real SAN you wouldn't be so busy

LOL. If only they would listen.......they like EMC, but EMC support is the worst.

Didn't forget to add that. I just assumed that people in here would know how bits and bytes relate to each other.

Then I am not sure how you don't understand that 260 MB/s > 1 Gbps (in fact it's a little over 2 Gbps). Even if his speed is because of caching, other drives can hit over 180 MB/s sustained writes which is well over 1 Gbps. https://us.hardware.info/reviews/72...ve-models-compared-test-results-hd-tune-write Yawn indeed, you need more coffee before you continue to "admin" your "Enterprise company".

Whatever you say. lol You just proved more how uneducated you are in this field. Others have answered correctly, so you can read their responses. I am done with you.

Last edited:

Apparently, he does since you don't appear to realize that a modern 7200RPM WD Gold HDD can far exceed a 1 Gigabit connection and can even saturate a 2 Gigabit connection... just saying.

Funny, it can't. We have a brand new Dell server with spinning 7200 rpm SAS disks in it. And it can't max out the 10gb card it has on it. Barely makes it to half the speed of it actually. But we have SSDs as the O/S drive in it, and guess what, it does max out the card! Enough said. People on the web, keep believing whatever joe blow says on the web out there. You aren't going to get it. Even Dell has manuals that say it.

Last edited:

I think you'd be surprised how few people know how B/b relate.

The OP never mentioned what disk he's using, so you're the one assuming the speed/size of his disks. The only thing NIZMO was incorrect saying was that NO spinning disks will saturate a 1Gb lan (~112MB/s w/ overhead), but a large majority will not. Especially smaller, cheaper consumer drives most likely being used in this NAS.

Exactly! Yes, it's hard for me to respond while I am busy in detail. But you nailed it. Thank you.

Richneerd

Gawd

- Joined

- Jan 17, 2010

- Messages

- 642

Well regardless what anyone says in here, 10GbE is the future and it's slowly being adopted. It's already widely known in the server world, but for consumers, it will take a while.

Apple is pushing it with all their future computers though, the new iMac Pro's have them built in.

Everyone enjoy the 10GbE bandwagon, we are in for a hell of a ride.

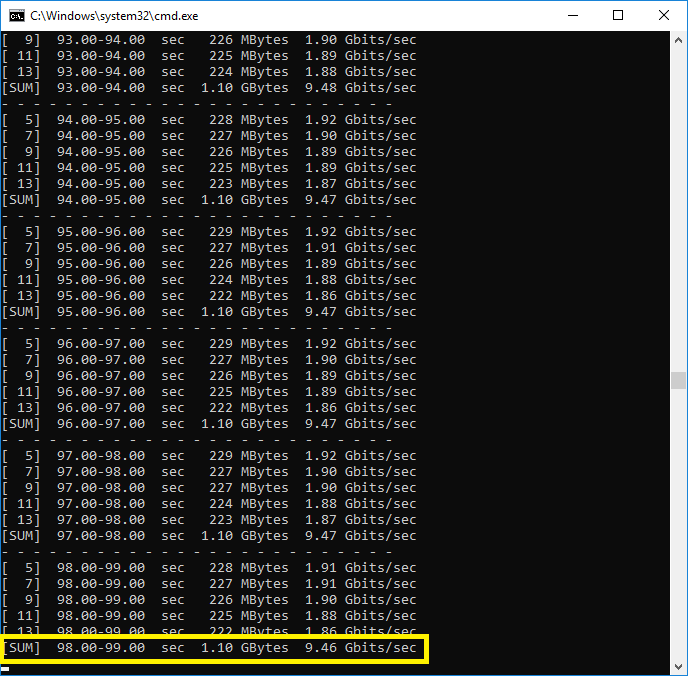

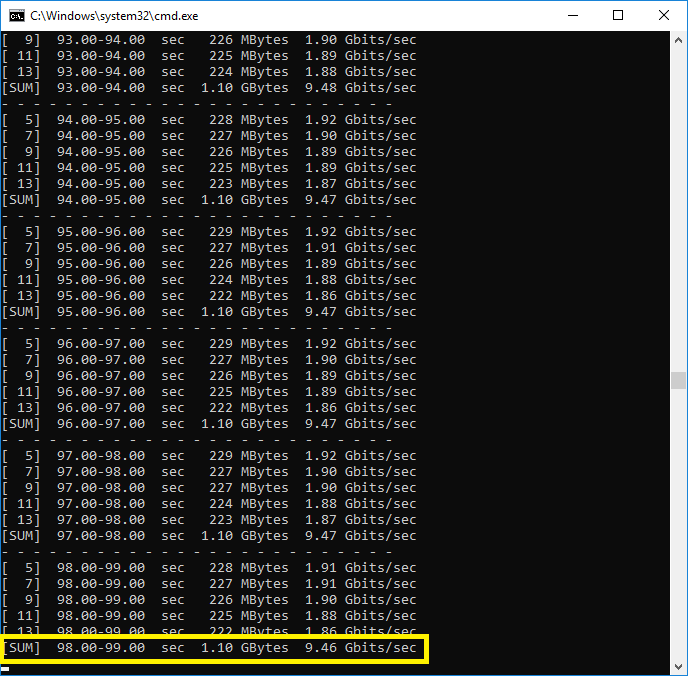

Here is my workstation, just did a iperf test at my lab.


Red Falcon

[H]ard DCOTM December 2023

- Joined

- May 7, 2007

- Messages

- 12,445

Funny, it can't. We have a brand new Dell server with spinning 7200 rpm SAS disks in it. And it can't max out the 10gb card it has on it. Barely makes it to half the speed of it actually. But we have SSDs as the O/S drive in it, and guess what, it does max out the card! Enough said. People on the web, keep believing whatever joe blow says on the web out there. You aren't going to get it. Even Dell has manuals that say it.

Um, I didn't say a single WD Gold 7200RPM HDD would max out a 10 Gigabit connection.

Let me show you again exactly what I said since apparently you can't read, either:

Apparently, he does since you don't appear to realize that a modern 7200RPM WD Gold HDD can far exceed a 1 Gigabit connection and can even saturate a 2 Gigabit connection... just saying.

I even bolded and underlined it specifically so you won't miss it.

I also never said anything about SSDs, so I'm not sure why you are directing that comment at me.

Just a tip, you probably shouldn't be bragging about your "storage admin" title around here, considering how much you are missing basic information, putting words into others mouths, and all around posting generally incorrect or misguided information.

Last edited:


Let me explain something to you. You did say a single one. "a modern 7200RPM WD Gold HDD can far exceed a 1 Gigabit connection and can even saturate a 2 Gigabit connectio"

I made it RED where it refers to a SINGLE hard drive. Anyways, even if it was a RAID of them it still would not do it well for 10gb. 1GB can be had with a spinning disk, these days as long as it's one that can do 210 MB/s and not the 110 MB/s. 2GB, won't happen with a single one. A Raid sure. 10GB, not happening. We have them and have tried many times. It won't happen as others here have proved as well.
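As a rough back-of-the-envelope check on the single-disk versus RAID question, the sketch below counts how many disks it would take to match a link's raw line rate. It assumes purely sequential transfers and perfectly linear striping with no parity, controller or protocol overhead, which real arrays do not reach, so treat the result as a lower bound. The function name is hypothetical.

```python
import math


def disks_needed(link_gbit: float, disk_mb_per_s: float) -> int:
    """Minimum number of ideally striped disks to match a link's raw line rate."""
    link_mb_per_s = link_gbit * 1000 / 8  # Gbit/s -> MB/s (decimal units)
    return math.ceil(link_mb_per_s / disk_mb_per_s)


# The two per-disk figures mentioned in the post above, against a 10 Gbit/s link
for disk_speed in (110, 210):
    print(f"{disk_speed} MB/s disks for 10 Gbit/s: at least {disks_needed(10, disk_speed)}")
```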

Last edited:

Red Falcon

[H]ard DCOTM December 2023

- Joined

- May 7, 2007

- Messages

- 12,445

The POINT was that a single WD Gold 7200RPM HDD would saturate 1 and 2 Gigabit connections - nothing was mentioned in my post about 10 Gigabit with the single drive.

I never said anything about 10 Gigabit in my initial post.

A single WD Gold 7200RPM HDD in a sequential transfer can certainly get upwards of 200-220MB/s+ depending on the disk capacity.

You are right, that is no where near a 10 Gigabit connection (again, I never mentioned 10 Gigabit).

However, it is certainly faster than a 1 Gigabit connection and nearly saturates a 2 Gigabit connection (assuming it is a single 2 Gigabit connection, not two 1 Gigabit tethered connections).

Again, you are putting words into my mouth - please stop.


It's only faster than a 1GB connection when it's on the outer spindle. The inner won't do more than 90 MB/s. Again, SSDs rule this area.

Red Falcon

[H]ard DCOTM December 2023

- Joined

- May 7, 2007

- Messages

- 12,445

You aren't wrong about the inner-spindle having slower speeds, but on the central to outer-spindles it will most certainly do 200-220MB/s+ just like I stated.

I never said anything about SSDs, and again, I have no idea why you are stating the obvious and things which I never once mentioned.

D

Deleted member 88301

Guest

I actually did understand a little of the above. Think I'll have a beer.

Last edited by a moderator:

Whatever you say. lol You just proved more how uneducated you are in this field. Others have answered correctly, so you can read their responses. I am done with you.

How did I prove that I am uneducated in this field? By doing simple math? I think YOU just proved how uneducated you are, period, by not being able to do either simple math or simple comprehension. The original quotes were talking about a spinning disk being able to transfer at 1 Gbps speed, which it clearly can. Yes, others have answered correctly, saying that it DOES NOT TAKE AN SSD to saturate 1 Gbps.

It's only faster than a 1GB connection when it's on the outer spindle. The inner won't do more than 90 MB/s. Again, SSDs rule this area.

And then you go and flop and FINALLY admit that a single disk can hit 1 Gb (you even use B vs b wrong, how the HELL can you call yourself an "admin"?). This just goes further to prove my point.

Anyway, you're right, this convo is done. I can't have a discussion with someone who can't do simple math (anything over 125 MB/s > 1 Gbit/s) or simple comprehension.


Your math is flawed and others proved it. I never even said anything about the B vs b. You must be talking about someone else. And I guess Dell and HP are wrong too when their documents state spinning hard disks aren't enough for 10gb connections that their support and sales people have. A consumer drive is not enough for 1GB usually either. Especially the older ones. I'm done here. There is a reason I work and have worked in Enterprise for 25 years.

CopyRunStart

Limp Gawd

- Joined

- Apr 3, 2014

- Messages

- 155

Your math is flawed and others proved it. I never even said anything about the B vs b. You must be talking about someone else. And I guess Dell and HP are wrong too when their documents state spinning hard disks aren't enough for 10gb connections that their support and sales people have. A consumer drive is not enough for 1GB usually either. Especially the older ones. I'm done here. There is a reason I work and have worked in Enterprise for 25 years.

Bro, you're letting your ego get to you. You're clearly wrong here. In post 13 you speculated that his issue is disk, not network (fair point), but then stated:

"Regular spinning disks will never be able to go over what a 1gb connection can do"

This is clearly not true. Spinning disks often go over 1Gb/s (125MB/s). Not always, but they can, and you said:

"never"

Then in post 25, JargonGR states his spinning disk saturates a 1Gb NIC @ 230MB/s (1.8Gb/s). Then in post 31 you say:

"Umm, mb doesn't equal GB. Read the link I posted"

which has no relevancy to what JargonGR said. JargonGR is clearly aware of the conversion of MB to Gb and gave his number in MB/s, which would saturate a 1Gb/s connection.

You then resort to insulting everyone who points out your technical errors. Even if your point about some spinning disks not being able to saturate 1Gb/s NIC is true, you were still technically incorrect in saying:

"never"

Other members nicely pointed out your technical error, because this is a technical forum and someone could be reading this in the future and get bad information. You then got defensive and attacked everyone.

@OP: With your latest iPerf results, you're clearly getting over 1Gb which is a good start. Oddly enough on my Solaris 11.3 server iPerf maxes out at 5Gb/s, but file transfers are able to saturate the 10Gb/s NICs (X540-T2). Can you provide a sequential disk benchmark to see what your sequential read/write speeds are?

Red Falcon

[H]ard DCOTM December 2023

- Joined

- May 7, 2007

- Messages

- 12,445

Your math is flawed and others proved it. I never even said anything about the B vs b. You must be talking about someone else. And I guess Dell and HP are wrong too when their documents state spinning hard disks aren't enough for 10gb connections that their support and sales people have. A consumer drive is not enough for 1GB usually either. Especially the older ones. I'm done here. There is a reason I work and have worked in Enterprise for 25 years.

Seriously, "1GB"???

It's either 1Gb/s or 1Gbps, aka 1 gigabit.

What you are saying, "1GB", means 1 gigabyte.

B = byte

b = bit

8 bits = 1 byte

How the hell do you not know this, and especially after, holy shit, 25 years in the industry???

It is clear you have no clue about anything and are clearly talking out your ass, and when nearly everyone in this thread is pointing out your technical flaws again and again, that might be a sign to be an adult and simply admit you are wrong - you will at least have a lot more respect from me if you do.

Also, yes, even 2.5" portable-class HDDs are very capable of reaching 130-160MB/s+ (megabytes - just so we are clear), which is far above a 1Gbps (125MB/s [actual data is around ~115MB/s]), and 3.5" desktop-class HDDs can do even more, let alone nearline-class HDDs.
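The gap between the 125 MB/s line rate and the lower "actual data" figure comes largely from per-frame overhead. The sketch below is a rough estimate only: it assumes standard Ethernet framing (8 bytes preamble/SFD, 14 bytes header, 4 bytes FCS, 12 bytes inter-frame gap), IPv4 and TCP headers of 20 bytes each with no options, and full-size frames. File-sharing protocols such as SMB or NFS add their own overhead on top, so real transfers usually land somewhat lower. The names are made up for illustration.

```python
ETHERNET_OVERHEAD = 8 + 14 + 4 + 12  # preamble/SFD + header + FCS + inter-frame gap (bytes)
IP_TCP_HEADERS = 20 + 20             # IPv4 + TCP headers without options (bytes)


def tcp_payload_mb_per_s(link_gbit: float, mtu: int = 1500) -> float:
    """Estimate the best-case TCP payload rate in MB/s for a given link speed and MTU."""
    payload = mtu - IP_TCP_HEADERS      # application bytes carried per frame
    on_wire = mtu + ETHERNET_OVERHEAD   # bytes the frame occupies on the wire
    line_rate_mb_per_s = link_gbit * 1000 / 8
    return line_rate_mb_per_s * payload / on_wire


for speed in (1, 10):
    print(f"{speed} Gbit/s link: about {tcp_payload_mb_per_s(speed):.1f} MB/s of TCP payload")
```

Under these assumptions a 1 Gbit/s link tops out a little under 119 MB/s of payload, which is why a disk sustaining 130 MB/s or more is already limited by the network rather than the other way around.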

As for older models of HDDs that couldn't do this, you would have to go back to HDDs from nearly 15 years ago to make that statement correct, and even then, who would be running HDDs that old in an enterprise environment?

You really should be done here, because you are wrong as all hell and your nomenclature is embarrassingly incorrect, especially for a 25-year enterprise "storage admin" such as yourself - shameful, sad to say.

Phew... I am back. I had to deal with a motherboard failure on the NAS, so I had to replace the motherboard and RAM, and while I was at it I changed the controller as well. Now, before I start testing the 10G network again, do I need to re-install OmniOS or not? I have booted the NAS and it boots fine, except that at the end I get loads of warning messages for the drives, as per below:

WARNING: Disk, '/dev/dsk/c3t2d0s0', has a block alignment that is larger than the pool's alignment

Now, I haven't exported the pool, should I just access the NAS via Napp-it without re-install and see whether I get the choice to import or do a fresh install before doing anything first?


Seriously, "1GB"???

It's either 1Gb/s or 1Gbps, aka 1 gigabit.

What you are saying, "1GB", means 1 gigabyte.

B = byte

b = bit

8 bits = 1 byte

How the hell do you not know this, and especially after, holy shit, 25 years in the industry???

It is clear you have no clue about anything and are clearly talking out your ass, and when nearly everyone in this thread is pointing out your technical flaws again and again, that might be a sign to be an adult and simply admit you are wrong - you will at least have a lot more respect from me if you do.

Also, yes, even 2.5" portable-class HDDs are very capable of reaching 130-160MB/s+ (megabytes - just so we are clear), which is far above a 1Gbps (125MB/s [actual data is around ~115MB/s]), and 3.5" desktop-class HDDs can do even more, let alone nearline-class HDDs.

As for older models of HDDs that couldn't do this, you would have to go back to HDDs from nearly 15 years ago to make that statement correct, and even then, who would be running HDDs that old in an enterprise environment?

You really should be done here, because you are wrong as all hell and your nomenclature is embarrassingly incorrect, especially for a 25-year enterprise "storage admin" such as yourself - shameful, sad to say.

That is me just holding the shift key down; it has nothing to do with B vs b. Keep running your mouth off, which makes it clear you have no clue. You clearly can't read either. I said consumer HDs. I wouldn't be where I am today if I didn't know what I was talking about. Shameful to yourself.

EOD.

https://www.lifewire.com/definition-of-gigabit-ethernet-816338

Last edited:

CopyRunStart

Limp Gawd

- Joined

- Apr 3, 2014

- Messages

- 155

Phew... I am back. I had to deal with a motherboard failure on the NAS, so I had to replace the motherboard and RAM, and while I was at it I changed the controller as well. Now, before I start testing the 10G network again, do I need to re-install OmniOS or not? I have booted the NAS and it boots fine, except that at the end I get loads of warning messages for the drives, as per below:

WARNING: Disk, '/dev/dsk/c3t2d0s0', has a block alignment that is larger than the pool's alignment

Now, I haven't exported the pool, should I just access the NAS via Napp-it without re-install and see whether I get the choice to import or do a fresh install before doing anything first?

This likely means when you created the pool, you created it with ashift=9 but should have done ashift=12. Can anybody else comment on whether I am right on this?

In any case, it shouldn't be anything to worry about, but it can cause performance issues and you should enable proper alignment if you ever re-create the pool. Once again, I would wait for others like Gea to comment so you can be 100% sure.

Yes I would try to see if you can import the pool. When you created the pool, did you do it based on WWN ID numbers?

CopyRunStart

Limp Gawd

- Joined

- Apr 3, 2014

- Messages

- 155

I use Solaris, not Omni but I believe in some OSs it might default to ashift=9 if the disks are 4K but emulate 512.

Nexenta and OmniOS are both Illumos distributions. A pool move should be no problem (besides the mountpoint: Nexenta uses /volumes/poolname by default, while all others use /poolname).

By default, ZFS uses the ashift value (per vdev) that is determined by the physical sector size of the disks. If at least one disk in a vdev has a physical sector size of 4k (512e or 4kn), the vdev is ashift=12.

If you want to force ashift=12 because your disks are 512n and you want to be able to replace them with modern 512e disks, or if they lie about their real 4k internals by reporting a 512n value (like the very first 4k disks did), you can force ashift by modifying disk parameters in sd.conf, https://wiki.illumos.org/display/illumos/ZFS+and+Advanced+Format+disks

The only problem can happen with partition schemes/not using whole disks, e.g. when moving from BSD to Solarish. In such a case import problems can happen and you should reinitialize the disks/recreate the pools.
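The ashift rule described above can be written down in a few lines. ashift is the base-2 logarithm of the sector size ZFS assumes, so 512-byte sectors give 9 and 4096-byte sectors give 12. The sketch below only illustrates that rule; it is not how ZFS itself is implemented, and the function names are hypothetical.

```python
def ashift_for_sector(sector_bytes: int) -> int:
    """Return log2 of a power-of-two sector size (512 -> 9, 4096 -> 12)."""
    if sector_bytes <= 0 or sector_bytes & (sector_bytes - 1):
        raise ValueError("sector size must be a positive power of two")
    return sector_bytes.bit_length() - 1


def vdev_ashift(physical_sector_sizes: list[int]) -> int:
    """A vdev takes the largest ashift required by any of its disks."""
    return max(ashift_for_sector(size) for size in physical_sector_sizes)


# A vdev of 512n disks versus one where a single disk reports 4k physical sectors
print(vdev_ashift([512, 512, 512]))   # all 512n disks
print(vdev_ashift([512, 512, 4096]))  # one 512e/4kn disk in the vdev
```

This also shows why the alignment warning appears: a disk that needs ashift=12 has been placed in a vdev that was created with ashift=9.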


What is the safest way to access my data without losing it and correct the pool size alignment errors?

Many thanks.

To modify ashift=9 to ashift=12 you must

- backup all data

- destroy the pool

- recreate the pool with ashift=12 (mix a vdev with newer 512e/4kn disks or modify sd.conf)

- restore data

Can I just carry on using the pool as is until such a time where I will purchase a backup server, is it safe to do so?

Remains the question why iperf3 gives 2-3 Gbit/s pure network performance while nearly 10 Gbit/s can be achieved when everything is perfect (nic, driver, settings, cables). In my tests mostly Windows (nic, nic setting or driver) or cabling was the reason for less than 3 Gbit/s in a 10Gbit/s network. For Intel nics, the newest drivers from Intel are better than the included. Next I would try a different (crossover) cable to connect server and client directly to rule out a bad cable.

I have just noticed the bit about the crossover cable. Does this need to be a true crossover cable (T568A on one end, T568B on the other), or will a straight-through cable work? I am currently using a straight-through cable; I thought the NICs would automatically work out whether the cable is crossover or not and negotiate automatically?


For the 10G SFP+ cables, you don't need anything special to direct connect 2 cards (I have tested this myself between 2 X520s). Auto-crossover was added to the network standard when 1G came out, so I don't think anything other than 100Mb or lower would need a crossover cable for a direct connection between 2 NICs.


The point is to connect server and client directly over a new cat6 cable. A crossover cable always works as you must crossover nic to nic or switch to switch. If one of the nics or switches can do auto-crossover (not all can), you can use a 1:1 patchcable.
