DHarkKnight10

n00b

- Joined

- Oct 19, 2019

- Messages

- 3

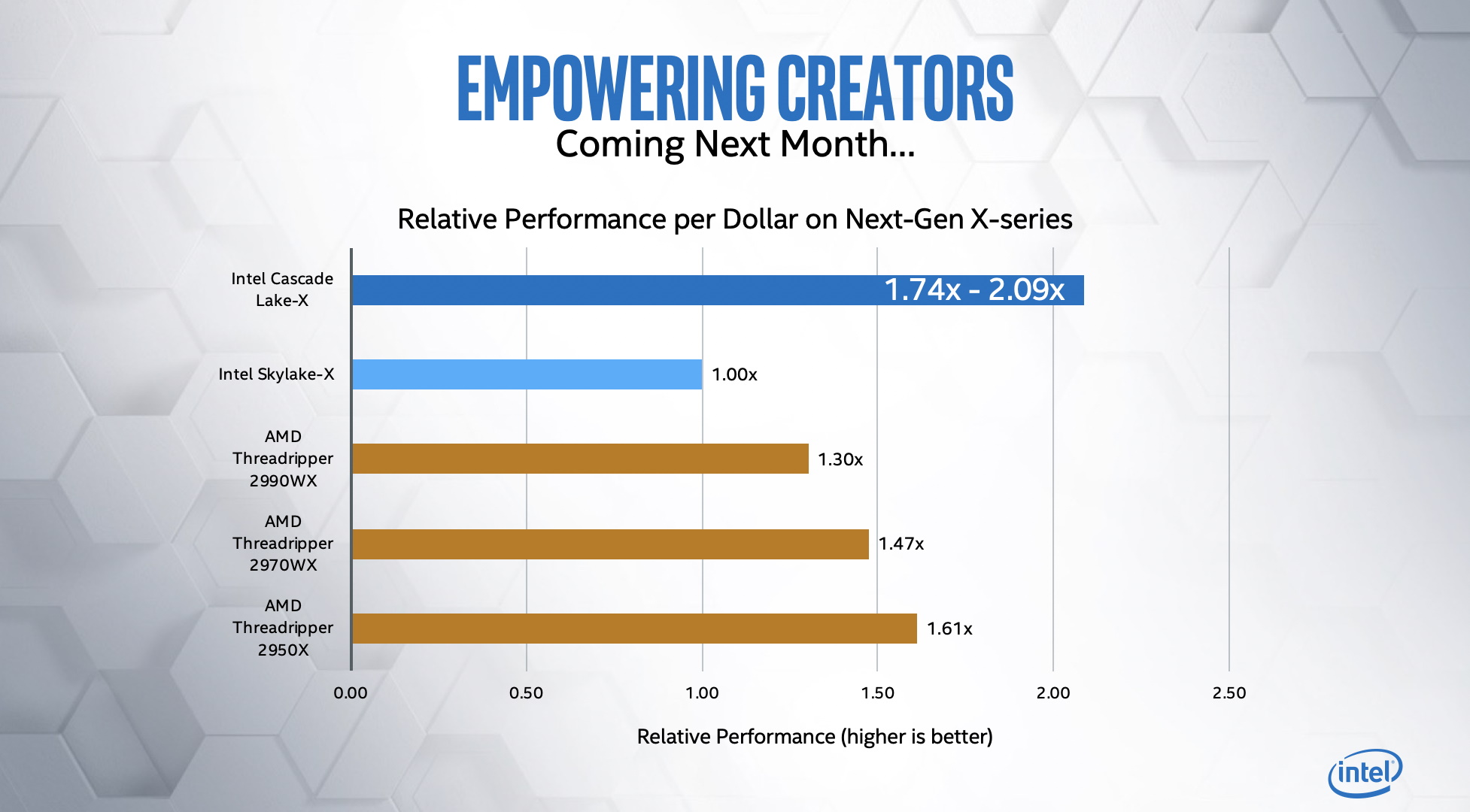

So glad to see that Intel has responded to AMD as far as price. What a time to be alive

So glad to see that Intel has responded to AMD as far as price. What a time to be alive

Intel doesn't really respond to AMD as much as they respond to demand. Intel's biggest issue isn't that AMD has become competitive, but that they're having issues shipping their own designs in volume.

AMD's Ryzen boost debacle is a much greater marketing fail than anything Intel has messed up lately.

Bullshit

Yeah... that's why after patching my 6700K @ 4GHz, for gaming and video editing, it suddenly felt like a 2500K @ 3.3GHz.

For consumers? Since the workloads and the level of threat-surface exposure needed to exploit these flaws are extremely rare for consumers, AMD's marketing and engineering repeatedly falling short of the company's claims is much more headline-grabbing, especially since they just lost a lawsuit over their false marketing claims for their last architecture.

Yeah... that's why after patching my 6700K @ 4GHz, for gaming and video editing, it suddenly felt like a 2500K @ 3.3GHz.

Gaming performance, depending on the game, tanked, and video renders that used to take 10 minutes now take between 15-25 minutes for the same job.

I paid for 6700K-level performance, not 2500K-level performance - is Intel going to give me a refund?

Perhaps the level of exposure on the consumer-level is low, but, are you willing to take that risk and go totally unpatched in microcode/firmware and software for 22 exploits???

I sure as hell wouldn't.

We're talking about pricing, move along.

Intel doesn't really respond to AMD as much as they respond to demand. Intel's biggest issue isn't that AMD has become competitive, but that they're having issues shipping their own designs in volume.

Well, to be fair, the datacenter admins I know have had to continuously patch thousands of systems on a system-to-system basis due to the firmware/microcode updates, and I know they are getting tired of it and have started to migrate to AMD Epyc-based systems.

While I don't doubt your experience as it tracks in the same direction as fact, you are quoting your own feelings here.

Intel doesn't really respond to AMD as much as they respond to demand. Intel's biggest issue isn't that AMD has become competitive, but that they're having issues shipping their own designs in volume.

Meh, it's all bitching. They resolved that with BIOS updates, and to be honest, if I asked the question "Did you buy these processors for single-core performance?" 100 times, the answer would likely be no 90 percent of the time. I never did. I understand the bitching, but that is mostly fixed for those who wanna stare at monitoring software lol.

Well, to be fair, the datacenter admins I know have had to continuously patch thousands of systems on a system-to-system basis due to the firmware/microcode updates, and I know they are getting tired of it and have started to migrate to AMD Epyc-based systems.

They did tell me that many VM workloads and CPU-intensive tasks are taking much longer to complete after the exploit patches, or are performing poorly in general.

So I guess it is nice to see that the hundreds of systems I manage aren't the only ones getting performance hits?

It's lunacy to suggest that Intel's MASSIVE and NEVER BEFORE SEEN price cuts are not a response to AMD kicking their ass. If not for AMD, Intel could keep plodding along like it has for years with no concern. They wouldn't bother cutting prices because there would be no incentive for them to do so as there wouldn't be competition breathing down their neck. AMD isn't the only reason they slashed prices like they did, but there is a less than zero chance that they weren't a significant part of it.

People explained, several times, in the thread about the boost clocks why they were talking about it and why they thought it was an issue. You willfully chose to ignore those people and that's on you. Don't pretend you don't understand when all you did was cover your eyes and pretend rational explanations didn't exist.

Yeah... that's why after patching my 6700K @ 4GHz, for gaming and video editing, it suddenly felt like a 2500K @ 3.3GHz.

Gaming performance, depending on the game, tanked, and video renders that used to take 10 minutes now take between 15-25 minutes for the same job.

No, I totally get what you are saying.

I cannot speak to rendering or video editing performance, but gaming performance for me on a gently overclocked (4.8GHz all-core) 8700K has not measurably changed since I got the CPU at launch.

I can speak to big development compilation tasks. I work on a large C++ project with a few million lines of code and keep lots of metrics on compile time. It's within 5% of the performance at launch, prior to mitigations.

Please do not take this as discounting your data (a different CPU, mostly different tasks), I'm just providing my experience. To me, the issues have... not been an issue. That said, I'd like them to address the issues in HW and avoid penalties all the same.

AMD's Ryzen boost debacle is a much greater marketing fail than anything Intel has messed up lately.

It's lunacy to suggest that Intel's MASSIVE and NEVER BEFORE SEEN price cuts are not a response to AMD kicking their ass.

Do you support this point with the price cuts seen with the Pentium IV and Athlon 64? Intel will price according to market demand.

That's how pricing works.

That's only a debacle if you bleed blue. Most of it was from an uneducated user base expecting Intel-like boost.

Well considering that Intel used illegal tactics in order to keep AMD from being a bigger threat.....

Derangel is talking about all of the anti-consumer and OEM-conning tactics that Intel did indeed pull, and were found guilty of, back in the 2000s during their Netburst-era.

AMD rode the same core for longer than Intel has ridden Skylake -- whatever tactics Intel used, AMD failed to perform.

Intel used Netburst for roughly 8 years from 2000-2008 with the Pentium 4 and Pentium D CPUs - I think Intel has screwed the pooch a bit more than AMD has.

Intel has one hell of an uphill battle from a pit that they themselves dug

Which "Athlon" architecture are you talking about?

AMD barely upgraded the Athlon architecture; they did less than Intel has done with Core. 64bit? IMC? Shrink-based clock increases? More cores?

Great -- but those aren't really architectural improvements, and they followed that track record up with Bulldozer.

AMD fixed the memory architecture issues from Zen/Zen+ with Zen 2, which were a massive performance problem when more than 16 cores are in use.

Hopefully they do better with Zen, but it's going to need a lot of work to continue to compete.

While I agree with this, primarily because we can't see the future, I would trust further improvements in Zen 2/3 beyond anything Intel has to offer at this point.

However, there's no certainty that AMD will continue to improve Zen beyond where it is now, and there's no certainty that TSMC will continue to deliver process improvements in volume.

Gosh, can you ever admit when AMD does something good? IMC was one of the biggest changes since FPUs were socketed. Even Intel followed suit after...

AMD barely upgraded the Athlon architecture; they did less than Intel has done with Core. 64bit? IMC? Shrink-based clock increases? More cores?

I'm going to assume that many to most expected boost clocks to sustain more than a millisecond or two.

That's a statistically irrelevant boost, which is what is being argued with statements that dismiss the 'boost clock fixes' because they do not provide a performance benefit.

Yeup, although I'm not putting much stock in that bench yet. AMD mtIPC is terrific and why they are shitting on Intel in servers, even in Intel-extension-optimised benchmarks lol.

Current Intel ring bus vs AMD mesh, Intel leads slightly in gaming. Intel mesh vs AMD mesh looks to be a very different story:

https://www.tweaktown.com/news/6827...ntels-i9-10980xe-3dmark-firestrike/index.html

Current Intel ring bus vs AMD mesh, Intel leads slightly in gaming. Intel mesh vs AMD mesh looks to be a very different story:

https://www.tweaktown.com/news/6827...ntels-i9-10980xe-3dmark-firestrike/index.html

Intel will become a company similar to Oracle and IBM, floating on their x86 ISA license sales and existing as 10% technology and 90% attorneys.

Not really sure. The Graphics and Combined tests use the GPU, and since two different GPUs were used, that would throw those scores out. I just ran Fire Strike to compare, and my physics score is 26,114.

What am I supposed to be gathering from that link? Physics score?

From that article: “Keep in mind however that this is likely not the final sample of the 10980XE and the performance gap could close drastically with improved clocks ( in other words, pinch of salt as always).”

Doesn’t mean it’s not true either. Standard disclaimer.

Just as much fact to it as most of the baseless conjecture that gets thrown around here. Plus, given the recent CPU performance of each company, it makes total sense.

For these processors, if we don’t know the coolers and amount of thermal throttling it’s mostly meaningless.

Doesn’t mean it’s not true either. Standard disclaimer.

wccftech is usually wrong. Their clickbait / "leaks" have been wrong so many times they were banned from many subreddits lol.

According to them, Ryzen OCs to 5GHz on all cores hahaha.

Who would have thought a couple years ago AMD would be spanking Intel everywhere? Intel has basically left the desktop market to AMD because they can’t compete. Hahaha.

Who would have thought a couple years ago AMD would be spanking Intel everywhere? Intel has basically left the desktop market to AMD because they can’t compete. Hahaha.

That’s a bit dramatic. In the hands of an overclocker Intel still handily beats AMD in (high Hz) gaming and single thread. AMD has the best value in HEDT but I think that matters even less in that realm.

We’ll see how it pans out with actual reviews, but given what we know about the 9900K vs the 3800X, I am skeptical of a 16-core AMD beating an 18-core Intel with proper setups.