Sprayingmango


So I don't know what I was smoking last night; SLI is not really working, even in DX11. I was sure I was getting good performance, but maybe it was just the level I was testing.

I tried again tonight. With DX11 SLI I was getting activity on both cards, but both stayed under 40% usage. Tested in the France SP level, I was getting 90-100 fps, which is not as impressive. I also noticed bad blur artifacts that seem SLI-related.

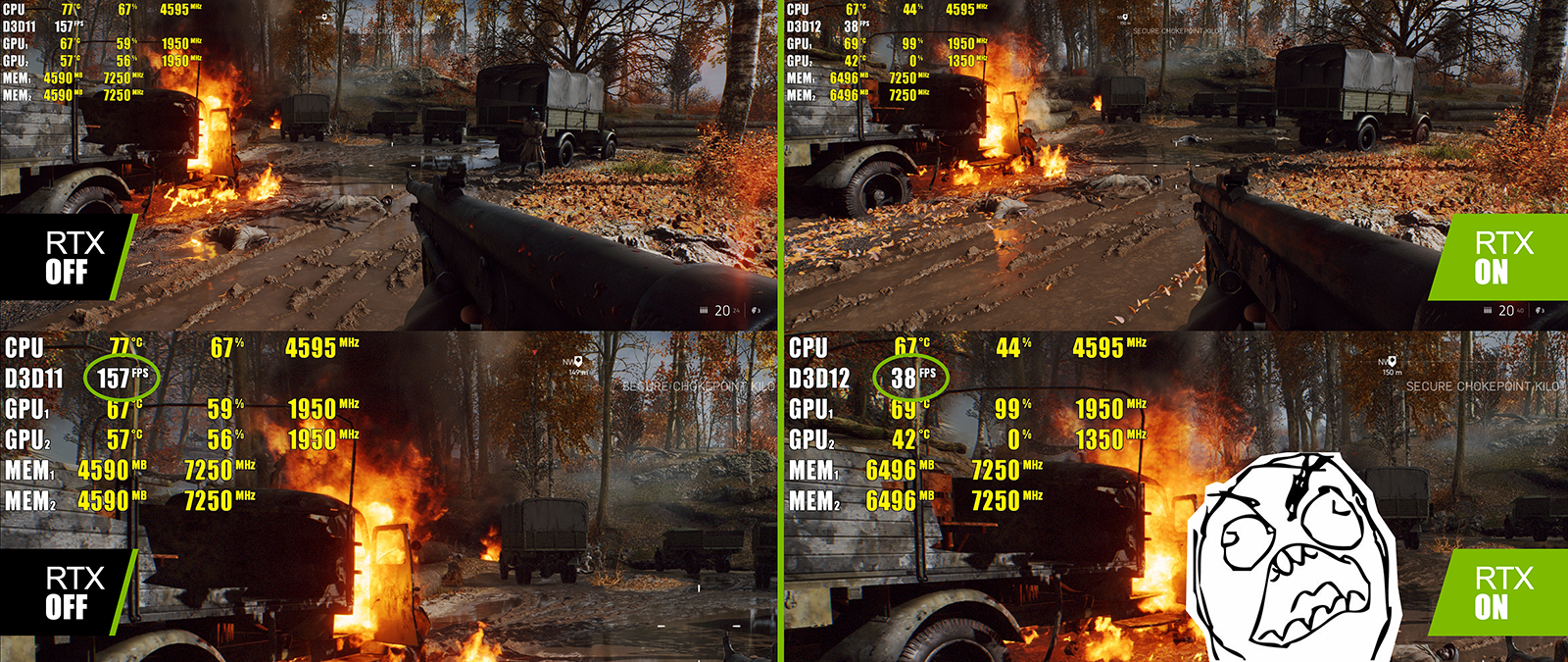

I tested DX12 with DXR in the France map. At DXR Ultra I was getting 30-40 fps, completely unplayable. With DXR Low it was hitting 60-70 fps, so just making the cut (though still choppy for my taste). Putting everything else on Low did not improve fps at all.

Then I tested DX12 with DXR off, and this was the most playable for me: around 135 fps average, give or take. Lowering settings helped a bit, but even with everything on Low it was still only around 155 fps. That's not worth sacrificing the image quality for 20 fps.

I'm going to try DXR again with the frame limit set to 60 fps. Maybe that will be playable.

I used Nvidia Inspector to change the SLI compatibility bits to the Battlefront II bits for my SLI 1080 Tis at 4K HDR. I get almost perfect scaling, with 98-99% usage on both cards, with all settings at High and some at Ultra. It's important to turn future frame rendering ON for this, which pushed my cards to almost max usage. I get about 120 fps average.

I'm under the impression that with DXR you cannot use SLI at the same time.
