danny_discus

Gawd

- Joined

- Dec 13, 2006

- Messages

- 612

XoR_, it's basically hit or miss whether the converter actually works, because of the faulty cable...


After reading this post I bought the Delock 62967. It is quite cheap, and it will suffice for future compatibility, as I do not really care for anything higher than 1920x1200@96Hz.

Should I expect any compatibility issues due to the default cable being poor quality?

Yes I do. It's an i1 Pro. I've done a full white balance calibration already.

I'm just not sure I completely follow your instructions regarding determining whether the adapter is 10 bit.

And if the adapter is NOT 10 bits what does this mean practically? Does it only matter if I'm adjusting the gamma with dispcal? Or would I see banding artifacts anyway?

I'm happy to run the tests and let everyone know if you could provide some easy to follow instructions.

Sorry for the delayed reply, I was away for a few days.

You said i1 pro - do you mean the i1 display pro (colorimeter), or the i1 pro (spectrophotometer/spectroradiometer)?

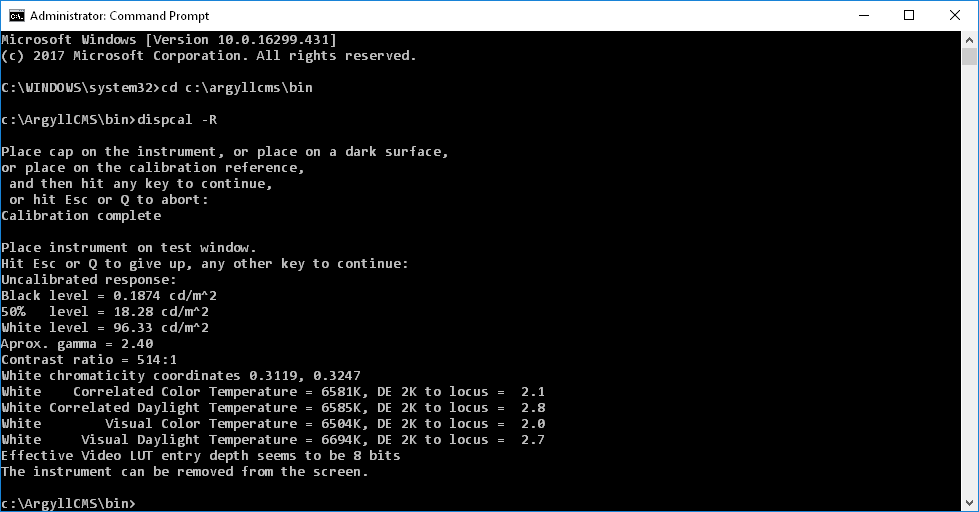

Assuming you have argyllCMS installed (instructions are on the WPB guide), all you have to do is place the measuring instrument so that it's measuring the center of the screen, and type dispcal -R in the command line. A test will be performed that tries to determine how many bits you have, and then it will print the results on the screen.

Having a 10 bit LUT is important if you want to do any software color calibration after performing the hardware calibration.

Most of us (there are some exceptions) are running in an environment that has framebuffers with 8 bits per channel. This means each pixel, in any given image, is constructed by choosing from a palette of 256 unique values for each of the RGB channels. The video LUT (look up table) is a list of values that maps how these values get interpreted into voltages that are sent to the CRT. Think of it as a matrix that has three columns (one for each channel), and 256 rows. Each element of this matrix has a value that is represented with 16 bit precision in Windows. That means that each value can be an integer between 0 and 65535.

The default LUT is a linear one, which means that the range from 0 to 65535 is divided evenly across the 256 entries (a step of 65535/255 = 257). So the first value would be 0, the second would be 257, the third would be 514, and the 256th would be 65535.

Now even though the LUT is specified with 16 bits of precision, this isn't 16 effective bits. For example, if you change the value from 514 to 516, you might think you'd get a small bump in voltage. But with 8 bits of precision, you won't get a bump in voltage until you reach the next 8 bit step, at around 768.

With 10 bits of precision, even though you can define only 256 video input levels, you can choose from a palette of 1024 values for each of those 256 levels.
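Here is a minimal Python sketch of the idea, in case it helps. It assumes the ramp is spread evenly as `round(i * 65535 / 255)` and that the DAC simply keeps the top 8 or 10 bits of each 16 bit entry; real drivers may build the ramp or round differently, and the function names are just made up for illustration.

```python
def linear_ramp(entries=256, max_value=65535):
    """Evenly spaced 16-bit ramp: entry i holds round(i * max_value / (entries - 1))."""
    return [round(i * max_value / (entries - 1)) for i in range(entries)]


def effective_level(value16, dac_bits):
    """Level the DAC would use if it keeps only the top `dac_bits` bits (assumption)."""
    return value16 >> (16 - dac_bits)


ramp = linear_ramp()
print(ramp[:3], ramp[-1])  # first three entries and the last one

# A small nudge to a LUT entry is invisible to an 8-bit DAC ...
print(effective_level(514, 8), effective_level(580, 8))
# ... but a 10-bit DAC can tell the same two values apart.
print(effective_level(514, 10), effective_level(580, 10))
```

Under these assumptions the two nudged values collapse to the same 8 bit level but land on different 10 bit levels, which is the whole point of the extra precision.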

Suppose you've hardware calibrated your CRT to perfection, and need no software calibration (i.e. modification to the LUT). Then everything is fine.

But suppose you calibrated your CRT so that you get really inky blacks by using a lower than normal G2 voltage. A side effect of having such a low G2 is that the gamma/luminance function/EOTF of the display isn't ideal. The blacks will be crushed (high gamma). The way to address this is to adjust the LUT.

Here's the important part: with a LUT that has only 8 bits of precision, you only have 256 unique voltages to assign to each of the 256 video input levels. If you want to change the gamma, by raising or lowering the default voltage of one of the video input levels, this new voltage will be the same voltage as a neighbouring video input level. Each time you make a change in this fashion, you drop the number of unique voltages being used, and therefore reduce the number of unique colors being displayed. The more radical the change to the gamma, the more unique colors you sacrifice.

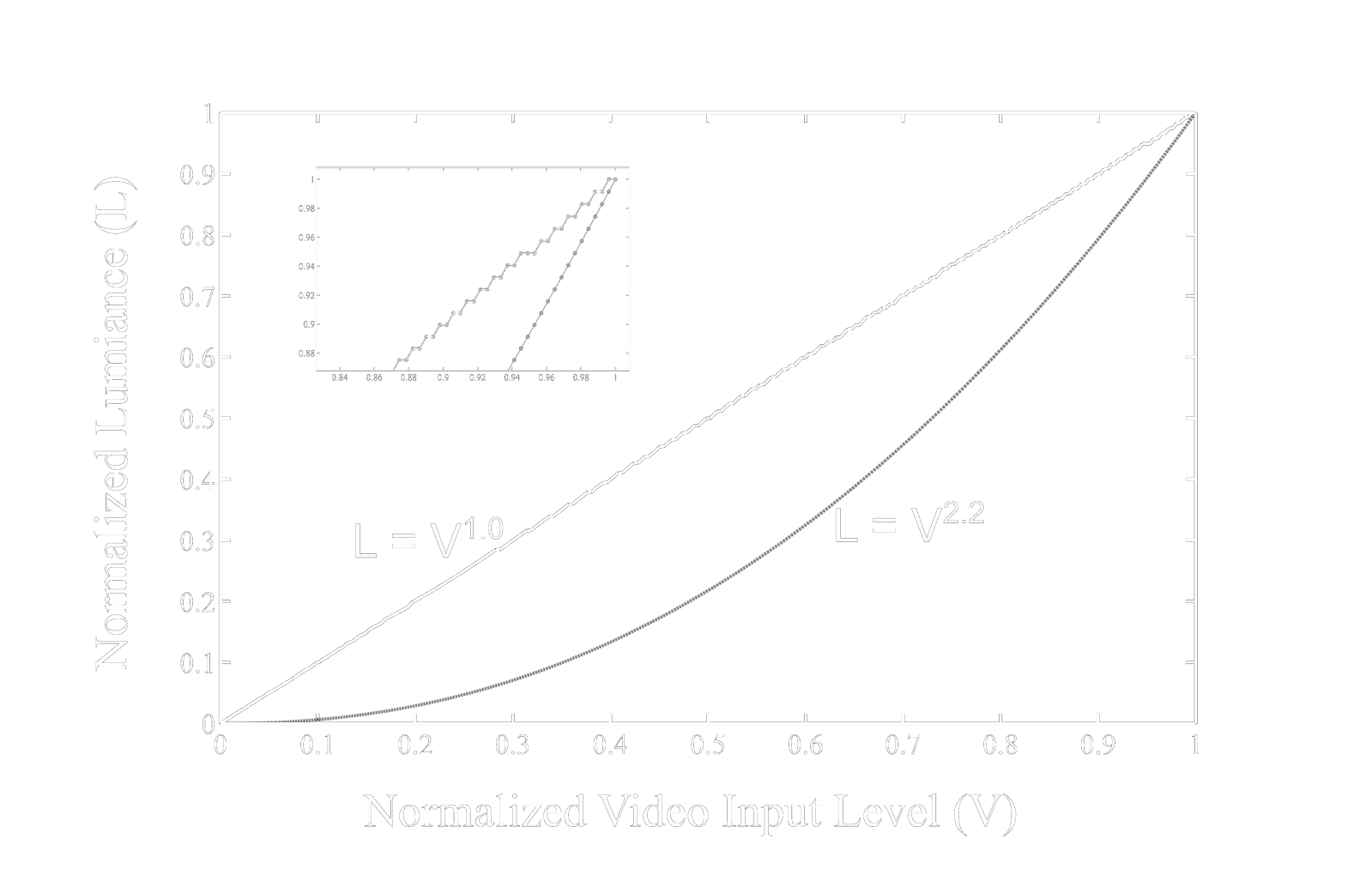

Here's an image I made that may help illustrate the limitation of having only 8 bits of LUT precision:

View attachment 72120

The gray line represents the default luminance function of a hypothetical display (gamma = 2.2).

The black line represents the target luminance function (gamma = 1.0, which is the standard in many visual psychophysical experiments).

In order to change the gamma from 2.2 to 1.0, you end up dropping from 256 unique luminance levels to 184 luminance levels (you can see the quantization artifacts more clearly in the magnified inset).
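If you want to play with the numbers yourself, here is a rough Python sketch of the counting. It assumes an ideal power-law display, a 256 entry LUT, and simple rounding of each corrected entry to the LUT's output precision; `unique_levels` is a hypothetical helper, not something from ArgyllCMS. With these assumptions the 8 bit count should land close to the 184 levels quoted above, while a 10 bit LUT keeps all 256 levels distinct.

```python
def unique_levels(display_gamma, target_gamma, lut_bits, entries=256):
    """Count distinct LUT output codes after remapping one gamma to another.

    The display produces luminance = (code / max_code) ** display_gamma, so to
    get luminance = x ** target_gamma we need code / max_code = x ** (target / display).
    """
    max_code = (1 << lut_bits) - 1
    exponent = target_gamma / display_gamma
    codes = {
        round(max_code * (i / (entries - 1)) ** exponent)
        for i in range(entries)
    }
    return len(codes)


for bits in (8, 10):
    print(bits, "bit LUT:", unique_levels(2.2, 1.0, bits), "unique levels")
```

Try other targets (for example 2.2 to 2.4) to see how a milder correction costs fewer levels.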

Thanks for sharing these results. The i1 pro spectro is a good instrument to have, but the i1 display pro can read much lower luminance levels. That's going to be important when doing software calibration with deep blacks.

Spectrometer is great to measure gamut but not really to calibrate CRT's

Thanks for the instructions. I'm using an i1 Pro spectrophotometer, not the i1 Display Pro.

So... 8 bits.

After using the 1070 Ti and Sunix adapter for about a week or so, I'm really considering going back to the 980 Ti. Maybe I'll even find a Titan X.

I'm much more concerned with absolute image quality than the small increase in FPS I'd get in some games with the 1070 Ti.

I've also noticed that the Sunix adapter causes periodic wavy distortion, and the screen will flicker black every once in a while. Subjectively, the image quality seems a bit worse to me.

On the plus side, I'm able to run any of the resolutions and refresh rates that the 980 Ti could run, but I can't push it past the 400 MHz ceiling.

I'll see if EVGA will replace this one, or I'll have to sell it and buy a different card from Ebay.

I have been thinking about getting an i1 Display Pro, then profiling it to the i1 Pro. Then I'd have the best accuracy and the speed and ability to read to low luminance levels.

The problem with colorimeters is that they drift so you can't really know whether they are accurate without having a spectro to measure them against. I actually own an old i1 Display 2 which reads lower than the i1 Pro but not nearly as low as the i1 Display Pro. I might profile it against the i1 Pro and recalibrate the display and adjust the gamma with ArgyllCMS. But I agree with you, as far as colorimeters go, the i1 Display Pro is the one to get.

Sorry to hear the Sunix adapter didn't work out =(. I guess it really does seem to be a lottery with the Sunix (or maybe it doesn't like NVidia cards). Not sure if you missed it, but for me, to fix the wavy distortion, I had to use a refresh rate not ending in 5. So 60, 70, 80, 90 and 100 Hz work, while 65, 75 and 85 Hz were wavy. Also, to fix the flicker to black, I used USB power.

On Radeon, 8 bit is not an issue thanks to dithering, which imho is excellently implemented.

I'm using USB power and I've tried using different refresh rates. The wavy distortion comes and goes and it may happen less at refresh rates that don't end in 5, but it still happens. And periodic flashes to black still occur. Now, these don't happen too often, but coupled with all the other little hassles I've experienced I'd rather just forget this card and go back to a 980 Ti or a Titan X.

Don't get me wrong. It's not terrible, all things considered. If I actually needed the extra speed, I might put up with it or seek a workaround. But I prioritize absolute image quality now, and issues like the 8 bit LUT versus 10 bit would bother me since I use argyllcms to adjust gamma. Maybe it is just because I had to switch to a different cable, but text seems a little fuzzier.

It's a combination of all these things actually. Others might have a better experience.

Does it work with a vga to BNC adapter? I'd rather use BNC to my FW-900

Can the Delock 62967 do 1920x1200 96hz guaranteed or is it a lottery? Also where is everyone in the US buying them from? I found this website but not sure if it's the best place to order it. http://www.grooves-inc.com/delock-d...7PfhcCuq0tILjqCJji78ilu_C9L8uEewaApSUEALw_wcB


If you see a COM port in the Device Manager, you're good to go driver-wise.

Ok, I plugged my USB TTL cable into the monitor.

Windows knows it is a USB to serial adapter; however, it won't install drivers. I am reading about the RXD and TXD lines being swapped? Does the TXD go to the RXD and vice versa?

At a base level, this is true; the RGBHV lines don't change just because they're going through quintuple BNC connectors rather than a DE-15.

Why wouldn't it work through BNC? BNC and VGA carry the same signal.

it is so f*****g sad when FW900 dies

HV_FAILURE: Amber (0.5 sec), Off (0.5 sec), Amber (0.25 sec), Off (1.25 sec). Extremely high voltage, or high voltage stop.

HV_FAILURE

If high voltage detection continues for more than 2 sec, or if the voltage value is outside the specified range, the system is forcibly shut down. Specifically, the voltage of pin 86 (HVDET) is detected.

Please report results, dying FW900 makes me nervous

I won't let it die, don't worry.

Mine does 348. It does not work at 349 and in the middle there are artifacts.

I'm trying to convince Delock to make the 62967 without the cable, like this:

http://www.delock.com/produkte/1023_Displayport-male---VGA-female/65653/merkmale.html

The 62967 is amazing: it costs next to nothing and it is stable as a rock. I have a sample that can do 355 MHz, and the unluckiest sample I have can do 340 MHz. If I remember correctly it can also do interlaced resolutions, and any resolution within that pixel clock is accepted without problems.

But they used a shit cable. It doesn't work well with most video cards, and I want to solve this problem.

For anyone who does not need a higher pixel clock, it is the best solution.
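To check whether a given mode fits under a sample's limit, you need the total timings (active pixels plus blanking), since pixel clock = horizontal total × vertical total × refresh rate. A quick Python sketch follows; the 2592 × 1250 totals for 1920x1200 are hypothetical placeholders, so plug in whatever totals your custom resolution tool or timing formula actually reports.

```python
def pixel_clock_mhz(h_total, v_total, refresh_hz):
    """Pixel clock in MHz from total (active + blanking) timings."""
    return h_total * v_total * refresh_hz / 1e6


def fits(h_total, v_total, refresh_hz, limit_mhz):
    """True if the mode's pixel clock is at or below the adapter's limit."""
    return pixel_clock_mhz(h_total, v_total, refresh_hz) <= limit_mhz


# Hypothetical totals for 1920x1200 @ 96 Hz; real values depend on the timing used.
h_total, v_total, refresh = 2592, 1250, 96
print(f"{pixel_clock_mhz(h_total, v_total, refresh):.2f} MHz")

# 340 and 355 MHz are the worst and best samples mentioned in this post.
for limit in (340, 355):
    print(limit, "MHz sample:", "ok" if fits(h_total, v_total, refresh, limit) else "too high")
```

Tighter blanking lowers the totals and therefore the pixel clock, which is why reduced-blanking timings can squeeze a mode under a limit that standard timings would exceed.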

About the Sunix adapter: inside the Synaptics VMM2322 chipset there is a triple 8 bit DAC.

Mine does 348. It does not work at 349 and in the middle there are artifacts.

I do not have an FW900 here, so I am testing on a Dell P1110. The maximum resolution I tried was 2360x1770@60Hz, to test FastSync and RTSS at 60 fps. Butter smooth, and less input lag than V-Sync ON.

Since I took the time to set it up, I also added 1920x1150p at 90/1.001, 96/1.001 and 100 Hz, plus madVR resolution auto-switching, and will use it to play videos instead of the HP LP2480zx. Now I have the full vacuum tube experience.

The Dell P1110 handles ambient light much better than the FW900 with polarizer (the screen looks blacker when off and has less flaring/inner glass reflections), and maximum whites are in the 'my eyes hurt, it is so bright' range. I do not even want to imagine what the momentary brightness of the raster dot is... if it wasn't moving around so fast it would probably be like looking at the sun.

So far I have not seen anything I could complain about with the Delock 62967. It handles any resolution and refresh rate I throw at it without any issues.

I will do a sharpness comparison with the 980 Ti later.

Oh, and it does have banding on NV cards because the adapter is 8 bit. On AMD cards there won't be any banding because they know the word 'dithering'.

Without changing GPU LUT content there is no banding. On AMD cards there won't be any banding at all because their output implementation does not suck balls.

So it works well and you didn't have to change the cable. What video card do you have?

348 MHz is a nice sample. If you want to be sure it is stable, you can right click on a black desktop and look for slight noise on the window border.

The behavior of your sample is normal: between a perfectly stable image and a black screen there are usually 2-3 MHz at most.

About the banding on Nvidia cards, I've never seen it during my tests (2D and 3D) on a 1070, but it's not my computer and I only did short tests, so maybe I didn't notice it.

Is there a specific test to do?

Did you see banding on games or only on specific things?

I'm interested in this because my next card will probably be Nvidia.

I investigated this further today. The capacitor in question is C919 BTW. The original one measured about 4150 pF after being heated with desoldering (meaning the value was probably lower before) instead of 4700 pF. Not out of specs yet but pretty much worn out considering it may have measured up to 8460 pF when new.

The display is significantly brighter, and quite blurry though, I still have to investigate if there is another issue to fix or if it just needs to be calibrated again. While it was open I did replace a disc capacitor which is plain crap on the G2 line (type E ceramics with -20+80% tolerance, and insane capacitance drop with temperature/voltage + fast aging). This may be the reason of the sudden change. I suspect that capacitor is the actual root of the G2 drift issue.
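For anyone checking their own parts, the tolerance arithmetic for a 4700 pF capacitor rated -20/+80% is easy to reproduce. This is just a small Python sketch with a made-up helper name; it only checks the nominal tolerance window and says nothing about aging or voltage/temperature derating.

```python
def tolerance_window(nominal_pf, minus_pct, plus_pct):
    """Lowest and highest in-spec capacitance for an asymmetric tolerance."""
    low = nominal_pf * (100 - minus_pct) / 100
    high = nominal_pf * (100 + plus_pct) / 100
    return low, high


low, high = tolerance_window(4700, 20, 80)
measured = 4150  # pF, the reading reported for the original C919

print(f"in-spec range: {low:.0f} to {high:.0f} pF")
print("measured value in spec:", low <= measured <= high)
```

The upper bound matches the 8460 pF figure, and 4150 pF sits only a few hundred pF above the lower bound, consistent with "not out of specs yet but pretty much worn out".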

The circle part is actually the front of the base and the USB ports are supposed to point right, so you shouldn't have issues with that.

Oh wow, didn't think it could be replaced! That's nice to know. Any other before and afters of DIY film replacement?

Ok, this thing is heavy so I'm gonna ask first! Can I rotate the base 90 degrees so my keyboard isn't ramming/interfering with the USB ports?