Zarathustra[H]


Depends on your usage scenario.

For me the usage scenario would be as a "command center" or "battlestation" style setup where the screen would be decoupled from the desk in order to allow for increased viewing distance and a more optimal viewing angle for a larger screen.

For a theoretical 8k screen with a format similar to the 1000R curvature of the Samsung Ark, for example, in a 55 inch or 65 inch size:

.. the center point of the circle. 1000R means a 1000mm radius. That's a nearly 40 inch view distance for all of the pixels to remain pointed at you, equidistant from your eyes along the curve and on axis in relation to you all the way to the ends of the screen.
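A rough sketch of that geometry, in case the numbers help. It converts the 1000R rating to inches and estimates how wide an angle the curved panel wraps around a viewer sitting at the center point. The function names are made up for illustration, and it assumes the arc length of the panel equals the width of a flat 16:9 panel of the same diagonal, which may not match how the real screen is measured.

```python
import math

MM_PER_INCH = 25.4

def curvature_radius_inches(r_rating_mm: float) -> float:
    """Convert an 'R' curvature rating (radius in mm) to inches."""
    return r_rating_mm / MM_PER_INCH

def arc_angle_degrees(diagonal_in: float, radius_in: float,
                      aspect_w: int = 16, aspect_h: int = 9) -> float:
    """Angle the curved panel subtends when viewed from the center of curvature.

    Assumption: arc length == width of a flat panel with this diagonal.
    """
    arc_len = diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h)
    return math.degrees(arc_len / radius_in)

radius_in = curvature_radius_inches(1000)    # about 39.4 inches
angle_55 = arc_angle_degrees(55, radius_in)  # roughly 70 degrees
angle_65 = arc_angle_degrees(65, radius_in)  # roughly 82 degrees

print(f"1000R radius: {radius_in:.1f} in")
print(f"55 inch arc: {angle_55:.1f} deg, 65 inch arc: {angle_65:.1f} deg")
```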

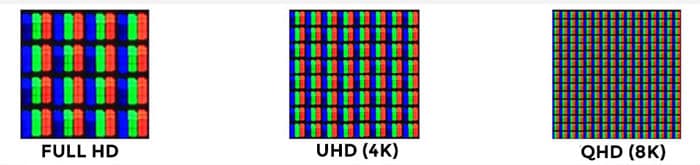

.. Pixels per degree is a much better measurement of the pixel density you'll be seeing than just stating ppi alone.

At longer view distances on larger screens, for example 32 inches to 40 inches away on a 55 inch or 65 inch, you'd benefit from much higher ppi, realized as PPD. A 55 inch Ark looks more like a 1440p desktop screen's pixel sizes from your perspective when sitting even 32 inches away, and worse when closer. Even at a 38 inch viewing distance it's only getting 60 PPD, which is ok but not stellar. I use my 48CX at around 65 to 70 PPD (a 60deg to 55deg viewing angle, distance-wise), but if it was mounted right on my desk it would be more like 50 PPD, ~1500p looking.
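For anyone who wants to check the PPD figures, here is a minimal sketch. It treats the screen as a flat 16:9 panel and divides the horizontal pixel count by the horizontal viewing angle, so it gives an average PPD across the screen. The flat-panel simplification and the function names are assumptions for illustration; a curved panel will come out somewhat different.

```python
import math

def horizontal_fov_deg(diagonal_in: float, distance_in: float,
                       aspect_w: int = 16, aspect_h: int = 9) -> float:
    """Horizontal viewing angle of a flat panel seen head-on from distance_in."""
    width = diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h)
    return math.degrees(2 * math.atan(width / 2 / distance_in))

def average_ppd(h_pixels: int, diagonal_in: float, distance_in: float) -> float:
    """Average pixels per degree: horizontal pixels over horizontal viewing angle."""
    return h_pixels / horizontal_fov_deg(diagonal_in, distance_in)

ppd_4k_55_at_38 = average_ppd(3840, 55, 38)  # close to 60 PPD
ppd_4k_55_at_32 = average_ppd(3840, 55, 32)  # low 50s
ppd_8k_55_at_38 = average_ppd(7680, 55, 38)  # double the 4k figure

for label, value in [("4k 55in @ 38in", ppd_4k_55_at_38),
                     ("4k 55in @ 32in", ppd_4k_55_at_32),
                     ("8k 55in @ 38in", ppd_8k_55_at_38)]:
    print(f"{label}: {value:.1f} PPD")
```

The same panel at the same distance with 8k horizontal resolution doubles the PPD, which is the whole argument for 8k at these sizes and distances.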

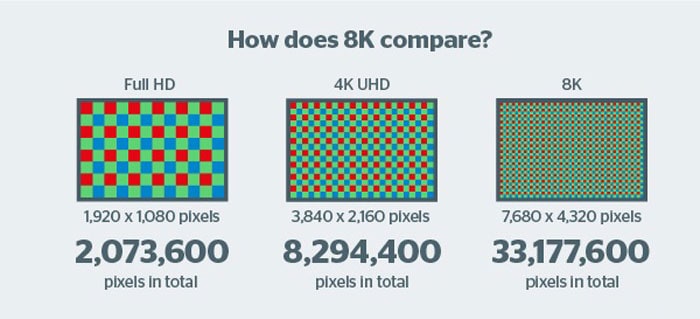

.. the desktop/app real estate would be much greater on a larger gaming tv style 8k screen. They promote the 55 inch 4k Ark as a bezel-free multiple monitor setup replacement, but its quads of desktop real estate are only 1080p each. Most people using multiple screens are likely using at least 1440p per screen space if not 4k, and many seeking the real estate are using at least one 4k screen space in the mix, I'd guess. No matter how you look at it, 1080p screen spaces aren't going to cut it imo.
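The quadrant arithmetic is simple enough to sketch. This assumes an even 2x2 split of the panel with nothing lost to borders, and `tile_resolution` is just a hypothetical helper name.

```python
def tile_resolution(h_pixels: int, v_pixels: int,
                    cols: int = 2, rows: int = 2) -> tuple[int, int]:
    """Resolution of each tile when a panel is split into an even cols x rows grid."""
    return h_pixels // cols, v_pixels // rows

quads_4k = tile_resolution(3840, 2160)  # each quad of a 4k panel
quads_8k = tile_resolution(7680, 4320)  # each quad of an 8k panel

print("4k panel quads:", quads_4k)
print("8k panel quads:", quads_8k)
```

So an 8k panel split the same way gives four full 4k screen spaces instead of four 1080p ones.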

.. for gaming, on a larger format screen like those described, optimally you'd be able to run smaller screen spaces 1:1 pixel when desired: for more demanding games, for a more encompassing field of view, and/or when desiring the opposite in an uw format. E.g. 4k at higher Hz than 8k native. Also 5k, 6k, x1600, x2160 ultrawide resolutions etc., 1:1 without scaling. On a larger screen those smaller portions of the overall screen would still be decent sizes, including relative to your perspective, as you might sit closer to the screen while viewing games in those fields and sit farther back when using the rest of the desktop/app/OS real estate.

Gaming on a large format 8k screen (full screen or in smaller screen spaces 1:1) would lean on the DLSS AI upscaling and frame generation of today, but also on that tech as it matures along with more powerful 5000 series gpus (2025 most likely; there should be better 8k options and competitors by then too) and into the years of gpus and advancements beyond. Perhaps eventually more of the chain becomes vector informing (game engines, game development, OS, drivers/peripherals) to allow several frames to be generated more accurately rather than a single "tween" frame, using that kind of actual informed vector system rather than solely guessing what the vectors might be between two frames.
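To get a feel for how big those 1:1 screen spaces would physically be, here is a rough sketch. It assumes a 7680x4320 panel with square pixels and an unscaled, letterboxed window; the function names are hypothetical.

```python
import math

def panel_ppi(h_pixels: int, v_pixels: int, diagonal_in: float) -> float:
    """Pixels per inch of a panel, assuming square pixels."""
    return math.hypot(h_pixels, v_pixels) / diagonal_in

def window_diagonal_in(win_h: int, win_v: int,
                       panel_h: int, panel_v: int, panel_diag_in: float) -> float:
    """Physical diagonal of a window shown 1:1 (no scaling) on the panel."""
    return math.hypot(win_h, win_v) / panel_ppi(panel_h, panel_v, panel_diag_in)

# 1:1 windows on a hypothetical 65 inch 8k panel
diag_4k_on_65 = window_diagonal_in(3840, 2160, 7680, 4320, 65)  # half the panel diagonal
diag_uw_on_65 = window_diagonal_in(5120, 2160, 7680, 4320, 65)  # 5120x2160 ultrawide

print(f"4k 1:1 on 65in 8k: {diag_4k_on_65:.1f} in diagonal")
print(f"5120x2160 1:1 on 65in 8k: {diag_uw_on_65:.1f} in diagonal")
```

Under those assumptions a 4k window is about the size of a 32 inch monitor and a 5120x2160 ultrawide window is around 41 inches, which is why the smaller 1:1 modes stay usable on a panel this large.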

.......

"All I'll ever need."

I can understand the sentiment at this stage considering what is available right now. However, people said the same things about 1080p vs 1440p, 1440p vs 4k, 60hz vs 120hz, HDR nits, etc.

Right now I'd love a 65" 8k screen that could do 8k 120hz desktop / windowed games, higher hz (~144hz+) at lower resolutions 1:1 ~ letterboxed, and at 4k upscaled full screen. I'm probably going to wait it out until more mfgs come out of the deep freeze they put 8k gaming tvs into, though. More competition in pricing, models, features, and 8k a little less green on the vine.

I guess I just don't see the visual benefit of going greater than the equivalent of 100ppi at ~2ft distance. Once you hit 100ppi at that distance serious diminishing returns start setting in.
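To put the 100ppi at ~2ft figure in the same units as pixels per degree, here is a small conversion sketch. It measures PPD at the center of the screen, straight on, and takes "~2ft" as exactly 24 inches; both are assumptions, and `ppd_from_ppi` is a hypothetical helper name.

```python
import math

def ppd_from_ppi(ppi: float, distance_in: float) -> float:
    """Pixels per degree at screen center for a given ppi and viewing distance."""
    # Inches of screen covered by one degree of view, centered on the line of sight.
    inches_per_degree = 2 * distance_in * math.tan(math.radians(0.5))
    return ppi * inches_per_degree

ppd_100ppi_2ft = ppd_from_ppi(100, 24)  # a little under 42 PPD

print(f"100 ppi at 24 in: {ppd_100ppi_2ft:.1f} PPD")
```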

My rule of thumb is that if your combination of screen size, resolution and viewing distance requires you to use any scaling factor greater than 100% to comfortably use the Windows desktop UI, then you are essentially just wasting pixels, and would be better off with a lower resolution screen. It would save on a needlessly expensive screen, a needlessly expensive GPU and on wasted power.

If it weren't for laptop manufacturer "big number go up = sale" marketing resulting in ludicrously over-resolutioned screens being put in laptops that are way too small for the resolution, desktop scaling wouldn't even need to exist. Everyone should be running at 100% all the time.
