MistaSparkul

2[H]4U

- Joined: Jul 5, 2012
- Messages: 3,524

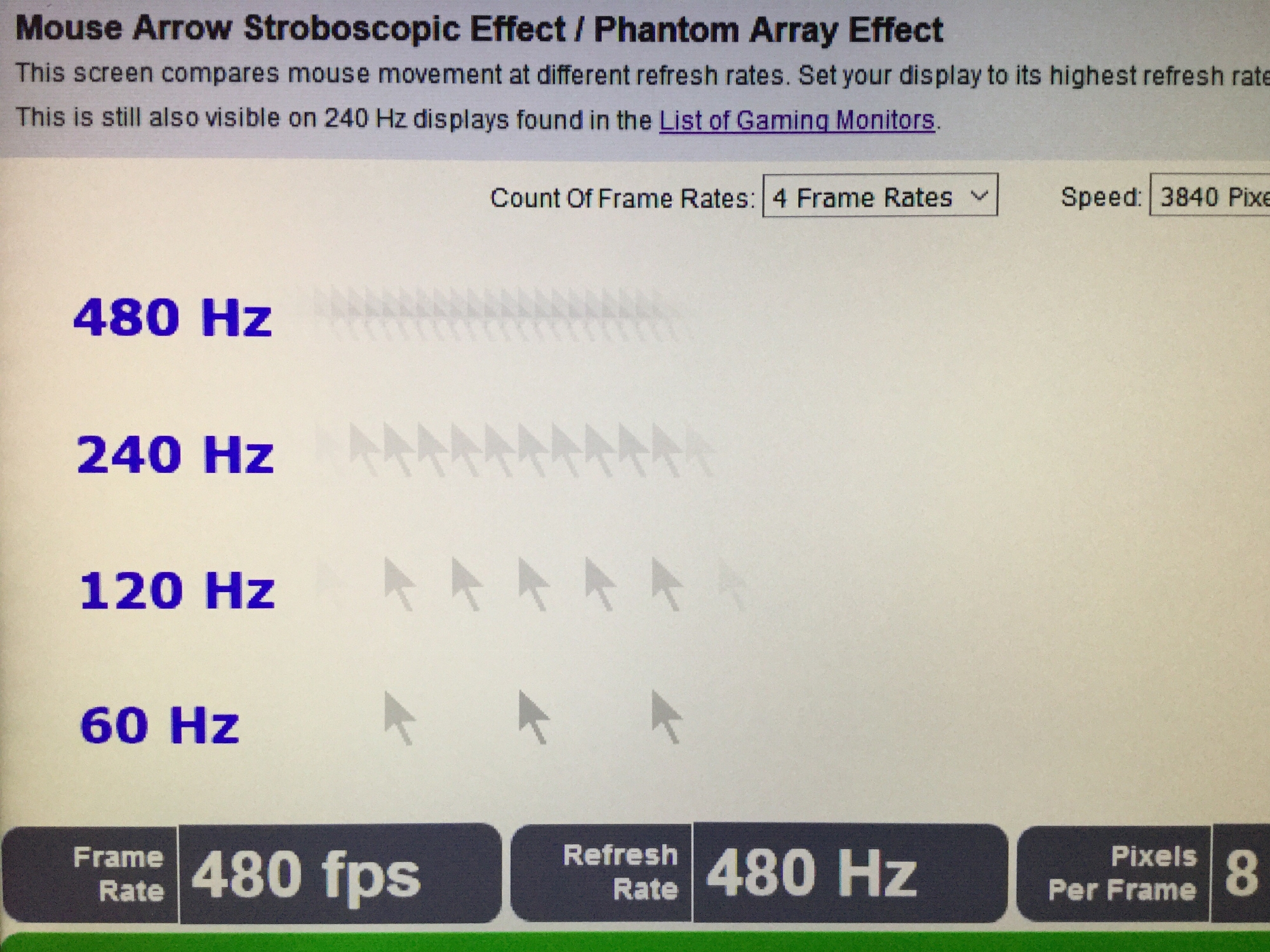

I love higher fpsHz. However, I've always suspected that you'd get diminishing returns locally after 240fpsHz on the motion definition / motion articulation aspect (more dots per dotted line curve, more unique animation pages in an animation flip book that is flipping faster). For the blur reduction aspect, though, the sky is the limit up to 1000fpsHz (or higher, but OLED is theoretically capable of 1000Hz), so the more Hz (with the least tradeoffs) the better.

It seems like a very nice screen, and I can see where people would be happy with it. I just dislike when very high (local) performance is promoted as having a 1:1 relationship to advantage in online gaming systems as they function now. Online games through servers all run at lower rates (some very, very low) and are rubberbanding all of the time; it's just a matter of whether it's a short enough rubberband for you to not notice it so much. Making the rubberband tinier and tinier locally isn't going to change the online latency, tick rates, and biased interpolated results of the game server's rubberband (online rather than LAN competition). Most testing in online reviews that shows the advantages of high fpsHz screens is done locally or on a LAN, and often vs bots. I'm assuming your spheretrack was done locally in the same fashion, with the sphere being analogous to a bot.
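To put rough numbers on the "rubberband" sizes, here's a quick sketch of the arithmetic. The refresh rates are the ones mentioned above; the 64 and 128 tick rates are just illustrative assumptions on my part, not figures for any particular game.

```python
# Illustrative arithmetic only. The tick rates below are assumed example
# values, not measurements of any specific game server.

def interval_ms(rate_hz: float) -> float:
    """Time between consecutive frames (or server ticks) in milliseconds."""
    return 1000.0 / rate_hz

refresh_rates = [240, 480, 1000]  # fpsHz values discussed in this post
tick_rates = [64, 128]            # hypothetical server tick rates

for hz in refresh_rates:
    print(f"{hz:>4} fpsHz -> {interval_ms(hz):.2f} ms per frame")

for tick in tick_rates:
    print(f"{tick:>4} tick  -> {interval_ms(tick):.2f} ms per server update")

# How much shorter each frame gets when going from 240 to 480 fpsHz.
saved_ms = interval_ms(240) - interval_ms(480)
print(f"240 -> 480 fpsHz shortens each frame by {saved_ms:.2f} ms")
```

Under those assumed tick rates, a single server update interval is several local frames long, which is the gap I'm getting at.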

Still, it's pretty cool. Can you run a similar tracker that goes through a remote online game server's interpolation code, and get a report card readout of the % accuracy/cursor-hits delivered back to you? Then compare that to the local/LAN version.

The thing is, in online gaming server systems - when you see your cursor on the ball a certain % at the center of the ball, or how quickly you got your cursor onto the ball - that's not necessarily how the online server is processing the result back to you, or even where the ball exactly "was" in the first place at any given time during its directional changes, according to the server's rates and the server's final process. So even if you are tracking things better locally, that doesn't translate 1:1 to the online clockwork, a.k.a. rubberbanding, temporal shift, etc.
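Here's a toy sketch of what I mean by temporal shift. Everything in it is an assumption for illustration: the target path, the 64 tick rate, the 30 ms one-way latency, and a client that simply draws the latest snapshot that has arrived. Real games add interpolation and lag compensation in their own ways, so treat this as a thought experiment, not a model of any actual server.

```python
import math

def target_pos(t: float) -> float:
    """Made-up 'true' target position on the server at time t (seconds)."""
    return math.sin(2.0 * math.pi * 0.5 * t)

def snapshot_time(t: float, tick_hz: float) -> float:
    """Time of the most recent server tick at or before t."""
    return math.floor(t * tick_hz) / tick_hz

def displayed_pos(t: float, tick_hz: float, one_way_latency_s: float) -> float:
    """Position the client can draw at time t: the newest snapshot that has arrived."""
    return target_pos(snapshot_time(t - one_way_latency_s, tick_hz))

def mean_abs_error(refresh_hz: float, tick_hz: float,
                   one_way_latency_s: float, duration_s: float = 4.0) -> float:
    """Average gap between what is drawn and the server's current truth,
    sampled once per displayed frame."""
    frames = int(duration_s * refresh_hz)
    total = 0.0
    for i in range(frames):
        t = i / refresh_hz
        total += abs(displayed_pos(t, tick_hz, one_way_latency_s) - target_pos(t))
    return total / frames

# Assumed example values: 64 tick server, 30 ms one-way latency.
e240 = mean_abs_error(240, 64, 0.030)
e480 = mean_abs_error(480, 64, 0.030)
print(f"mean offset at 240 fpsHz: {e240:.4f}")
print(f"mean offset at 480 fpsHz: {e480:.4f}")
```

In this toy setup the offset between the drawn ball and the server's ball comes from the tick interval and the latency, so doubling the local refresh rate barely moves it. It says nothing about blur reduction or how the motion feels locally.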

I'm curious what your 240fpsHz vs 480fpsHz blind testing results would be across days of testing, even just locally. But I also wonder what your results would be, over a bunch of testing days, with some system to record how accurate to the ball you were able to be (at least considering what you were seeing vs what the server processed) at a solid 240fpsHz vs 480fpsHz, after the latent round trip and through a typical game server's biased processing.

It would have to be blind testing of the fpsHz while doing each run, so no placebo or bias in your performance.
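Something like this minimal sketch is what I have in mind for the blind part. The function names are hypothetical, and it assumes someone or something other than the player sets the refresh rate for each run, and that the player writes down a guess afterwards.

```python
import random
from math import comb

def blind_schedule(runs_per_condition, conditions=(240, 480), seed=None):
    """Shuffled list of refresh rates, one entry per run, kept hidden from the player."""
    rng = random.Random(seed)
    schedule = list(conditions) * runs_per_condition
    rng.shuffle(schedule)
    return schedule

def p_value_guessing(correct, total):
    """Chance of getting at least `correct` of `total` guesses right by pure
    coin flipping between two conditions (one-sided exact binomial)."""
    return sum(comb(total, k) for k in range(correct, total + 1)) / 2 ** total

# 10 runs at each rate, in an order the player does not know.
schedule = blind_schedule(10, seed=1)
print(schedule)

# Example: the player correctly identifies the rate in 16 of 20 runs.
print(f"p = {p_value_guessing(16, 20):.4f}")  # about 0.006
```

The same shuffled schedule can be reused for the accuracy scores: compare the tracker's % on the hidden 240 runs against the hidden 480 runs only after all the runs are done.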

It might have to involve multiple players, with the ball changing its color to show who is quicker to the ball and then more accurate to the moving ball at any given time, for it to hash out like online gaming would. Servers usually do a balancing act with latency compensation, but how they do it depends on each game's server code design choices.

Not trying to be too argumentative in saying that, I'm genuinely curious about things like that.

If 480fpsHz locally lets you flow better regardless of how in sync it is with the server clockwork, I can understand that, especially from a blur reduction aspect. But I'm curious about the motion definition benefits past a solid 240fpsHz, especially in regard to online gaming dynamics and server code.

The detail provided by 4K for far away objects/opponents in games can be valuable. It might depend on the game's graphics (detailed graphics rather than cartoonish or older games), your graphics settings in that detailed game, and also the size and expanse of the game's arenas (large outdoor areas vs corridor shooters, for example). At 1080p you'd be losing that 4K detail, plus muddying the screen more than a native 1080p screen would.

Are you somehow implying that "server workings" are going to negate how much better I can track targets when I'm playing at higher frame rates? Because I hope not lol.
