KickAssCop

[H]F Junkie

- Joined: Mar 19, 2003
- Messages: 8,330

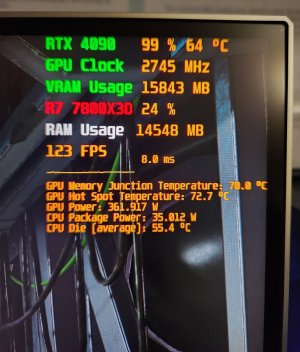

Got everything installed. Also added a 4 TB Gen 4 SSD, for a total of about 8.75 TB now.

Some photos for your viewing pleasure. Everything booted up fine with my old setup. Even my fans and colors are all the same. Just need to fix the RAM colors, maybe. Will get to it after I play some games.

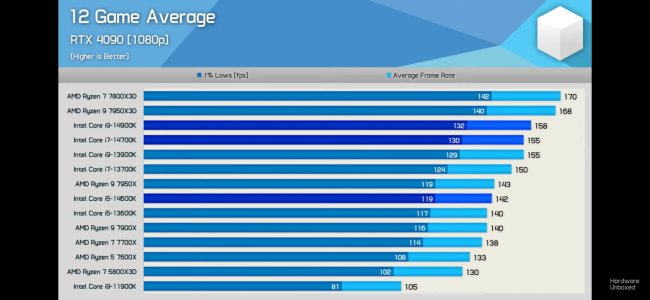

For now, this is right up there as an upgrade I didn’t need but one I deserved after 3 years of running AM4 with a 5900X/5800X3D.

Dope af.

