erek

[H]F Junkie

- Joined: Dec 19, 2005
- Messages: 11,310

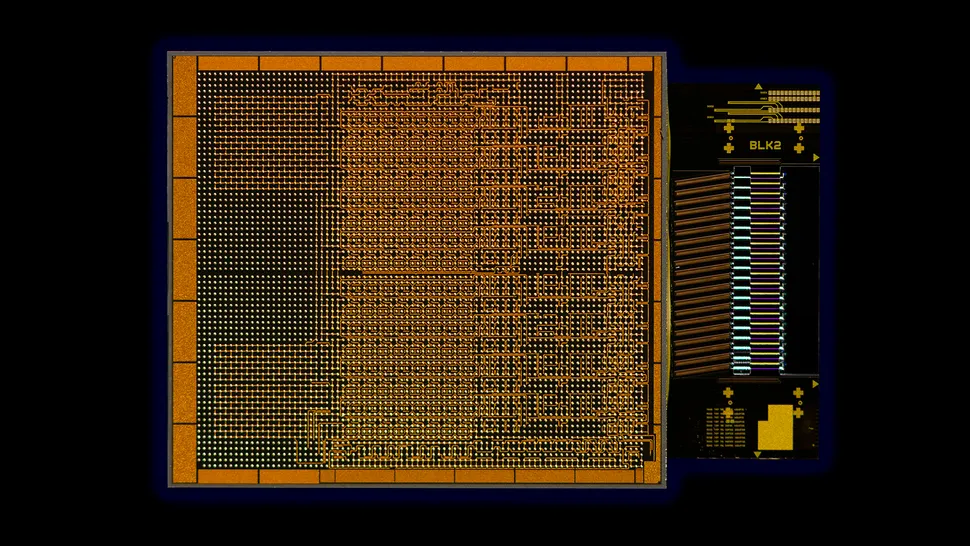

"The current optical I/O chiplet is largely a prototype, and Intel is collaborating with select customers to further develop and integrate this device with next-generation systems-on-chips (SoCs) and system-in-packages (SiPs).

"The ever-increasing movement of data from server to server is straining the capabilities of today’s data center infrastructure, and current solutions are rapidly approaching the practical limits of electrical I/O performance," said Thomas Liljeberg, senior director of product Management and Strategy, Integrated Photonics Solutions (IPS) Group. "However, Intel’s groundbreaking achievement empowers customers to seamlessly integrate co-packaged silicon photonics interconnect solutions into next-generation compute systems. Our OCI chiplet boosts bandwidth, reduces power consumption and increases reach, enabling ML workload acceleration that promises to revolutionize high-performance AI infrastructure."

Intel's silicon photonics initiative is backed by over 25 years of research and extensive deployment in data centers. The company's hybrid laser-on-wafer technology and direct integration approach offer high reliability and cost efficiency, which Intel says sets it apart from competitors.

So far, Intel has shipped over 8 million photonic integrated circuits (PICs) with more than 32 million integrated on-chip lasers. These PICs were integrated into pluggable transceiver modules and used in large data centers at major cloud providers for 100, 200, and 400 Gbps applications. Next-generation PICs, supporting 200G per lane, are being developed for 800 Gbps and 1.6 Tbps applications."

Source: https://www.tomshardware.com/deskto...g-4-tbps-optical-connectivity-to-cpus-or-gpus
