sblantipodi

2[H]4U

- Joined: Aug 29, 2010

- Messages: 3,765

As title.

I'm using an Acer XV273K at 4K, 120Hz, with HDR and G-SYNC enabled.

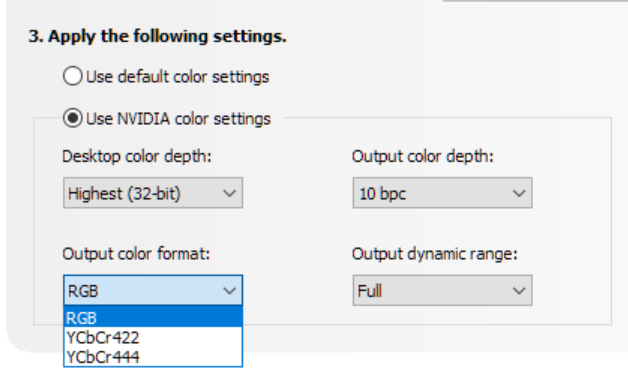

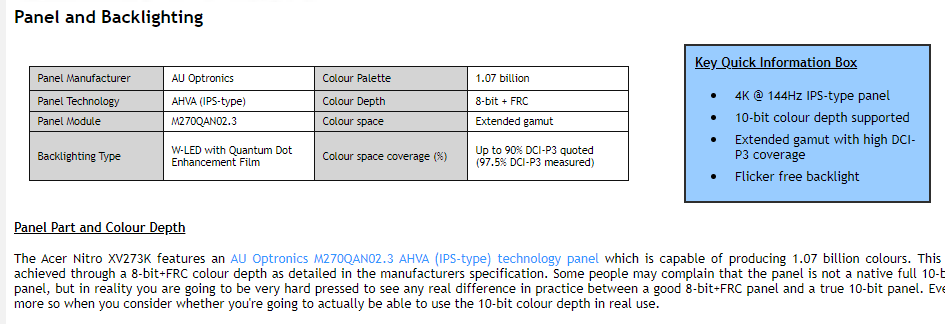

When using all those features, my monitor drops down to 8-bit + FRC.

Isn't this a condition where chroma subsampling should kick in?
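For reference, here is a rough back-of-the-envelope check of the bandwidth involved. It is only a sketch under assumptions that are not confirmed anywhere above: a single DisplayPort 1.4 (HBR3) cable, no DSC, and blanking intervals ignored, so the real requirement is somewhat higher than what it computes. The function names are made up for illustration.

```python
# Rough link-budget sketch. ASSUMPTIONS: single DisplayPort 1.4 (HBR3) link,
# no DSC, blanking intervals ignored (real requirements are somewhat higher).

HBR3_LANE_GBPS = 8.1       # raw bit rate per lane for HBR3
LANES = 4                  # a full DisplayPort main link uses 4 lanes
ENCODING_EFFICIENCY = 0.8  # 8b/10b line coding: 8 payload bits per 10 sent

# Average samples per pixel for each chroma format.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def link_payload_gbps() -> float:
    """Usable payload bandwidth of the assumed link, in Gbit/s."""
    return HBR3_LANE_GBPS * LANES * ENCODING_EFFICIENCY


def active_video_gbps(width: int, height: int, refresh_hz: int,
                      bit_depth: int, chroma: str = "4:4:4") -> float:
    """Bandwidth of the visible pixels only (no blanking), in Gbit/s."""
    bits_per_pixel = bit_depth * SAMPLES_PER_PIXEL[chroma]
    return width * height * refresh_hz * bits_per_pixel / 1e9


def fits(width: int, height: int, refresh_hz: int,
         bit_depth: int, chroma: str = "4:4:4") -> bool:
    """True if the active video alone fits in the assumed link payload."""
    needed = active_video_gbps(width, height, refresh_hz, bit_depth, chroma)
    return needed <= link_payload_gbps()


if __name__ == "__main__":
    for depth, chroma in [(8, "4:4:4"), (10, "4:4:4"), (10, "4:2:2")]:
        needed = active_video_gbps(3840, 2160, 120, depth, chroma)
        verdict = "fits" if fits(3840, 2160, 120, depth, chroma) else "does not fit"
        print(f"{depth}-bit {chroma}: {needed:.2f} Gbit/s, {verdict}")
```

Under these assumptions, 8-bit 4:4:4 at 4K 120Hz needs about 23.9 Gbit/s and fits within the roughly 25.9 Gbit/s payload, while 10-bit 4:4:4 needs about 29.9 Gbit/s and does not. That would be consistent with the monitor dropping to 8-bit + FRC instead of subsampling chroma, but it is not a confirmation of what the driver actually does.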

Is this test image enough to check for chroma subsampling?

I see no "errors" in this image.

Can you help me understand? Thanks
