pututu

[H]ard DC'er of the Year 2021

- Joined: Dec 27, 2015
- Messages: 3,104

Though it is still very early, I thought I'd start this thread where folks can chime in if they find any information on how well these Turing cards perform in the DC world rather than in games. Better still, those lucky [H] members who can afford one or more, please share your DC performance results.

Other than the qualitative discussion on how fast (or just meh) these Turing cards are, it is best to link to BOINC/project/forum sites where actual numbers are being reported, or to sites that give a good indication of how well these RTX cards might perform in DC generally.

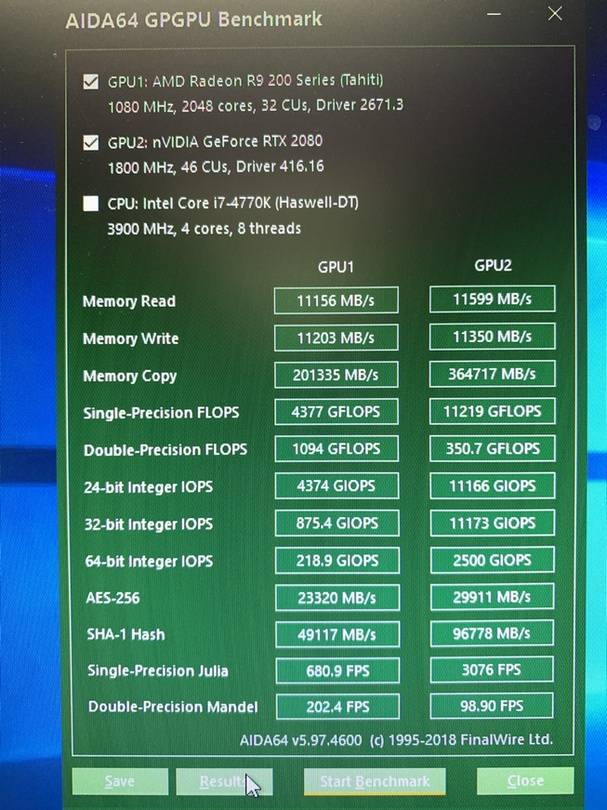

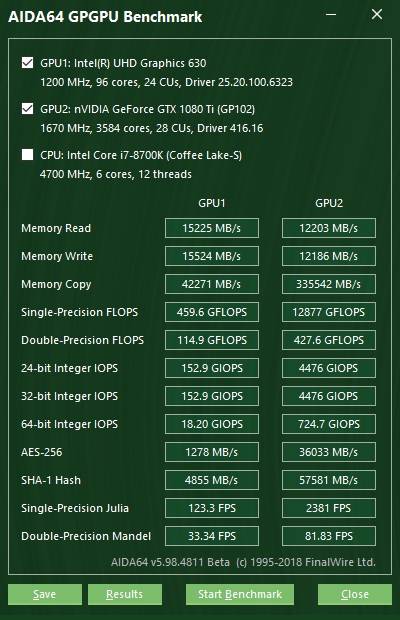

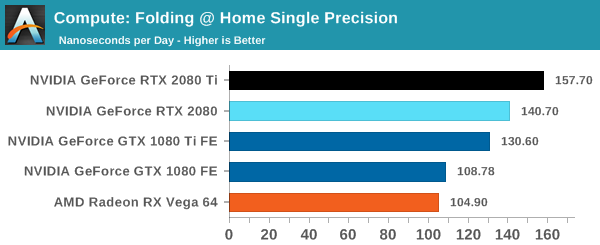

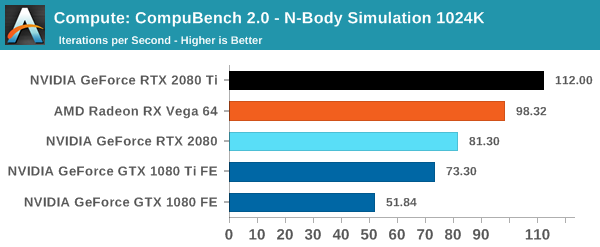

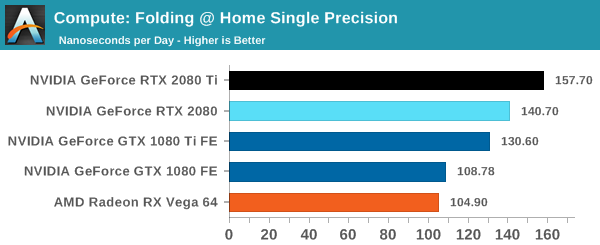

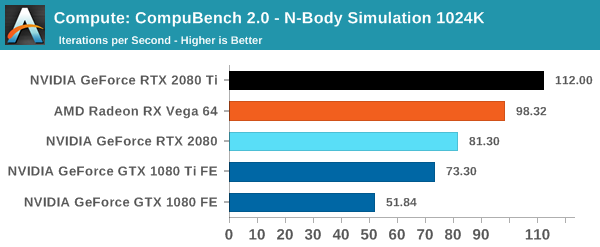

AnandTech has a review page with some DC-like performance comparisons. All cards ran at Nvidia "reference" stock settings.

Some notable ones. Not sure about the rest.

This one is from FAHBench, I'm guessing. Unfortunately, PPD does not scale linearly with ns/day, and I forgot how to convert ns/day to PPD.
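For anyone wanting a rough feel for why PPD is not linear in ns/day, here is a minimal sketch. It assumes the quick return bonus form published in the Folding@home points FAQ, `final_points = base_points * max(1, sqrt(k * deadline_length / elapsed_time))`, and it assumes the work unit covers a fixed amount of simulated time. The function name and every parameter value here are hypothetical placeholders, not numbers from any real project, and elapsed time here ignores download/upload overhead, so treat the result as an illustration rather than a conversion table.

```python
import math

def estimate_ppd(ns_per_day, wu_length_ns, base_points, k_factor, deadline_days):
    """Rough PPD estimate for one work unit type (all inputs are assumptions)."""
    # Days needed to finish one work unit at the benchmarked speed.
    elapsed_days = wu_length_ns / ns_per_day
    # Quick return bonus multiplier; never drops below 1.
    bonus = max(1.0, math.sqrt(k_factor * deadline_days / elapsed_days))
    # Work units completed per day at this speed.
    wus_per_day = 1.0 / elapsed_days
    return base_points * bonus * wus_per_day

# Hypothetical work unit: 10 ns of simulation, 1000 base points, k = 0.75, 3-day deadline.
slow = estimate_ppd(50.0, 10.0, 1000.0, 0.75, 3.0)
fast = estimate_ppd(100.0, 10.0, 1000.0, 0.75, 3.0)
print(f"PPD ratio for 2x ns/day: {fast / slow:.2f}")
```

Under these assumptions, while the bonus is active, PPD grows with ns/day to the power 1.5, so a card with twice the ns/day would earn roughly 2.8 times the PPD on the same work unit.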

N-body simulation (astrophysics). RTX 2080 slower than Vega 64?

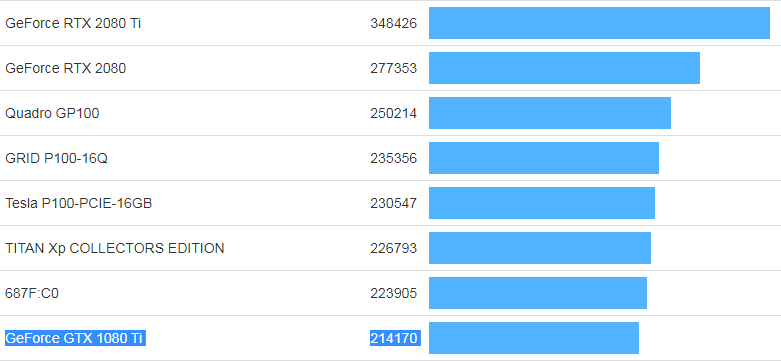

CUDA benchmark from Geekbench. No RTX results in yet.

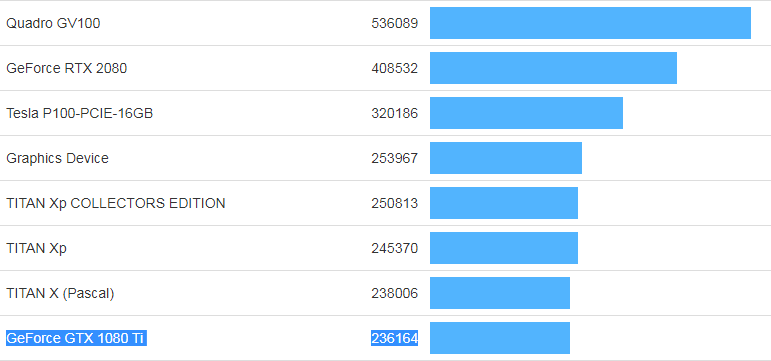

OpenCL benchmark from Geekbench. No RTX results in yet.

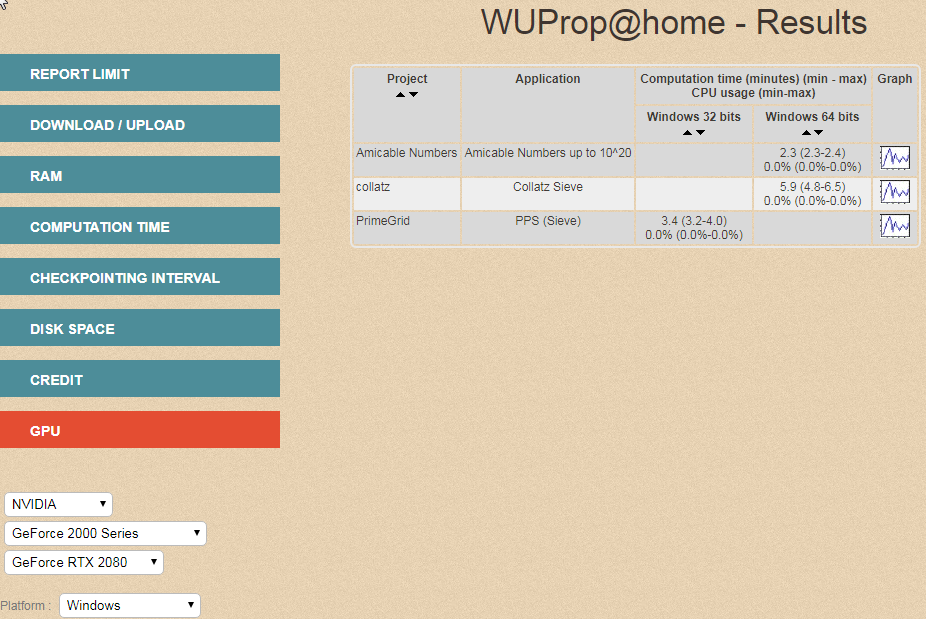

WUProp@home. No RTX results in yet.

I'm sure there are other sites, so please add links or info to this thread for future reference. Please, no game benchmarks.

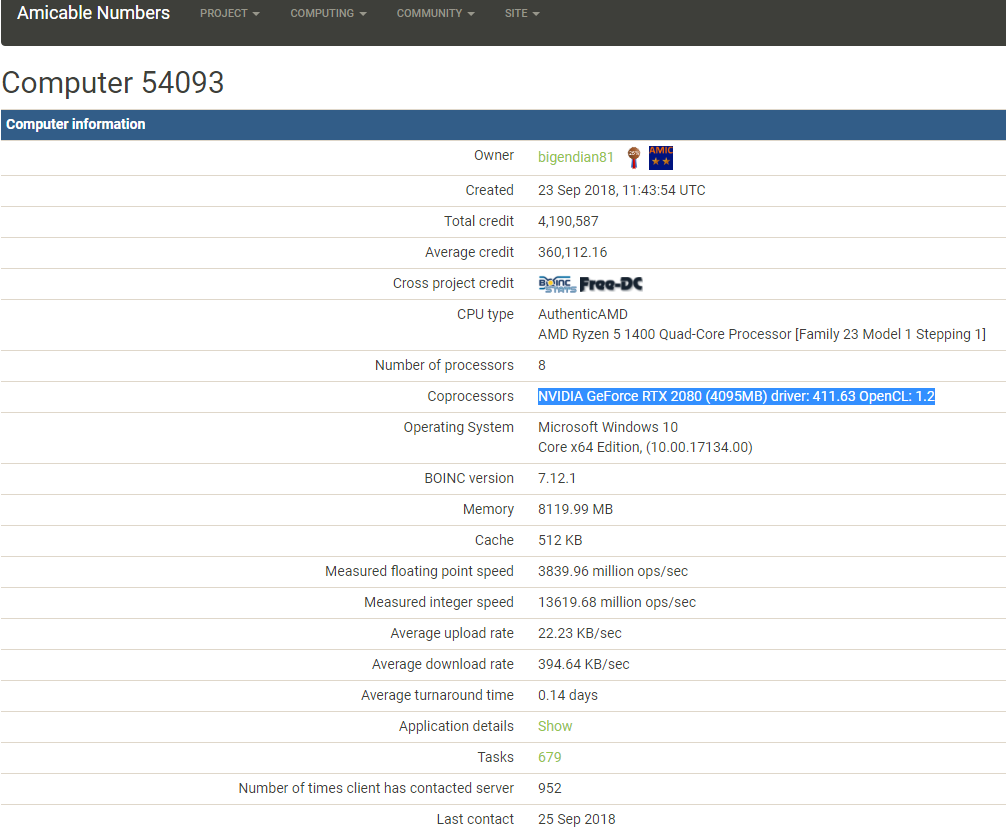

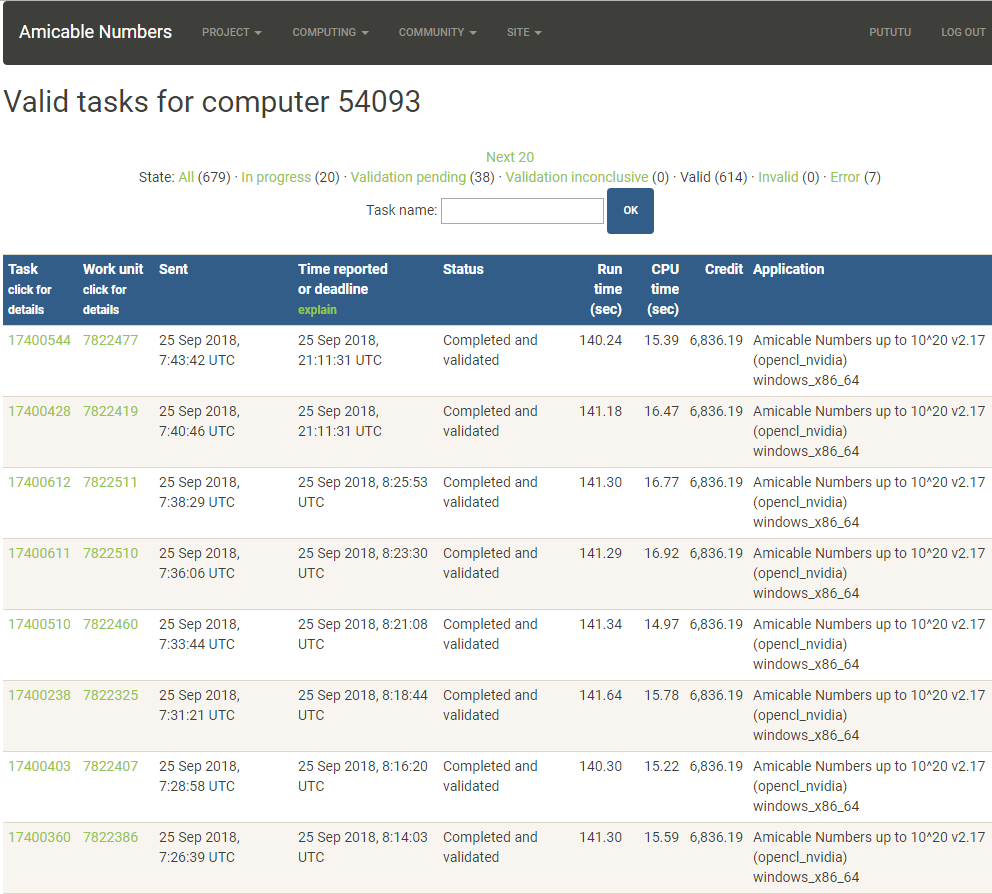
