NVIDIA’s RTX Speed Claims “Fall Short,” Ray Tracing Merely “Hype”

- Thread starter Megalith

- Start date

If the 2080/Ti were a bit better, say 15-30%, and the new AA were able to function with little or no FPS loss (especially at 4K-ish res), then I suspect this will be a win for NV regardless of ray tracing.

We will see.

i would expect them to be in the 25% to 30% range for sure no way its less from what i have seen

M76

[H]F Junkie

- Joined

- Jun 12, 2012

- Messages

- 14,039

> Wow, that is cool. That's how pre-ordering should work

Well that's not actually pre-ordering. That's a reservation.

Anyway it is possible that they created the ray tracing hype to divert attention away from the actual performance gains. Or lack thereof.

M76

[H]F Junkie

- Joined

- Jun 12, 2012

- Messages

- 14,039

> Personally the ray tracing aspect is cool but it's really about the performance increase outside of ray tracing that will determine whether I buy an RTX or not. There's no way in hell I'm going back to *maybe* 60 FPS at 1080p at this point, and I would think that anyone with a 2K or 4K monitor (or multiple monitors) would be thinking the same thing.

It's ridiculous for them to think that anyone would go back to 1080p to get some tacked-on ray tracing in a few games. This seems like they're scrambling. If they had ray tracing planned for a longer time, they should have approached devs to implement it properly, building the game with that in mind, and not in the form of a last-minute patch. Maybe then it would actually have a visual impact that would impress me, because from what I've seen I barely notice it.

RogueTadhg

[H]ard|Gawd

- Joined

- Dec 14, 2011

- Messages

- 1,527

Unfortunately, the games that support SLI are becoming few and far between.

I'm with the group that is going to keep what I have for a while and see how the reviews play out.

I'm not all that optimistic.

This reminds me of when DX 11 and Vista rolled around, it was supposed to be the next big deal, well that was a pile of shit.

I'm sure in a couple of years ray tracing will be commonplace.

$1000 just isn't on my radar.

There's really only one game that I care about. And it supports SLI nicely.

from all that i have read, to say the RTX cards do ray tracing.. is half true.

They do do ray tracing, but its only really shadows/reflections. NOT the entire scene, which is still rendered the normal way, just with the fancy ray traced reflections added on.

Still, should look nice. on your flat monitors.

What i am really thinking as to where it will shine, is in VR. At least thats my hope

DuronBurgerMan

[H]ard|Gawd

- Joined

- Mar 13, 2017

- Messages

- 1,340

Real time ray tracing is cool. But I don't expect it to be all that useful on the first gen. Performance numbers compared to existing gen are more important to me. And as was said earlier, I don't see crushing performance gains based on clockspeed and number of SMs. Not for the 2080 vs 1080 ti, anyway. 2080 ti will crush the 1080 ti, but its pricing is ridiculous. Much higher than the 1080 ti launch price. That's the thing that bugs me. Pricing for each tier is climbing. Not all at once, but a bit here and there, with each generation. We're already at the point where a 2080 ti could easily outweigh the entire rest of the build combined.

It's too high. Yes, yes, I know many will buy it anyway, because latest and greatest. Can't fault that. It's good hardware, but I wish AMD could push Nvidia the way they are pushing Intel. Nvidia, at least, hasn't been lazy on performance like Intel was during the AMD Bulldozer years. But they've been greedy on price. A more competitive AMD could push prices down a lot.

Probably wishful thinking, though.

cptnjarhead

Crossfit Fast Walk Champion Runnerup

- Joined

- Mar 9, 2010

- Messages

- 1,669

My first video card that was purchased with video gaming in mind... was a Rendition V1000 and it came with GL-Quake...i think. The experience was awesome but not many games supported this new 3D rendering chip ... had to browse 3D guru and other sites to find demos that supported it. Fast forward a few years and Microsoft started to push hard with DX3d.. after awhile 3D cards with a proprietary API fell off the map. Isnt this RTX kind of like that? It makes games look better.. ie - lighting but its not like the jump from 2D to 3D...is it? I just do not see that major of a difference.

B00nie

[H]F Junkie

- Joined

- Nov 1, 2012

- Messages

- 9,327

This is going to be like a lot of tech breakthroughs. The first couple of generations are going to be lackluster performance, low support in games and astronomical cost. Early adopters are going to get a bad value for money for sure.

Increased pc performance is not related to price. It’s supply and demand. If pc prices were scaled with performance, they’d be unaffordable. Even avaricious Apple keeps their flagship the same price year after year. Nvidia should be punished for their flagrant greed

TheRealDarkphoX

n00b

- Joined

- Mar 2, 2016

- Messages

- 29

I don't really understand the volume of posts suggesting early adopters are going to get a bad value. Every other post, article, or video reminds me of a teeter totter. In one opinion, it's gonna be great, with RT as an added benefit that will continue to grow. Then in another, it's all the way to the other side where it's going to be the worst thing ever and we should all #occupyNvidia.

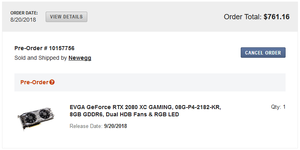

I pre-ordered a 2080, but I'm still on the fence about whether to keep it. Here's the thing though, Newegg doesn't take my money until it ships. So I've got until the 19th to really make up my mind and cancel that ish if I feel like it. But, to go a step further, value is subjective. I'm upgrading an R9 290. Is $760 a lot of money to me? yes, of course. But, I see the potential for improved performance as worth while.

Now if I came from a 1080 or 1080Ti, I might feel differently, but I still wouldn't be bothered by other people getting a 20xx. The fact that so many folks are quick to make that call on behalf of someone else bothers me more than the Nvidia's marketing BS and the lack of benchmarks. I'll worry about the numbers when they get here, but why be concerned about what anyone else is doing with their own money until then, especially if it's not even drawn from the bank yet?

I believe based on what we've seen from the specs, 2080 and 2070 only exist to sell bad 2080 TI cores. By buying them, you are doing NVIDIA a favor for their supply chain.

I also believe 2070 and 2080 will be too slow to EVER use ray tracing. This means everyone buying 2070 and 2080 are paying for a massive core with ray-tracing features they will NEVER at any time be able to use with 1080p/60+ fps.

I don't have a problem with ray tracing being on the top-of-the line 2080 TI, because people have paid to run cool tech demos since the beginning of the GPU, but the other models should be discounted not priced at a premium. That's NVIDIA having its cake and eating theirs/yours too.

SLI / CF have never been in my book. If one card can't do it, then the software is broken, or it's just something I should stay away from.

Granted you can take some product or brand, and have it be your poison of choice, but you should not do that with too many things, personally i just do it with Microsoft and Google and the Danish government, and i would replace all 3 in a heartbeat if there was a viable alternative to me.

> Increased pc performance is not related to price. It’s supply and demand. If pc prices were scaled with performance, they’d be unaffordable. Even avaricious Apple keeps their flagship the same price year after year. Nvidia should be punished for their flagrant greed

Another kiddo that doesn't understand how supply and demand works.

If the new cards are overpriced then the market will decide and prices will come down.

All this temper tantruming about the prices is hilarious. These are flagship videocards, not an entitlement.

D

Deleted member 93354

Guest

Just like I said with AMD, my jury is OUT until the official reviews are in. It's too early to speculate. I called for about a 30%->40% improvement in performance of old titles. I'm sticking to that.

D

Deleted member 93354

Guest

Can't agree. Ray tracing is the future, and has been the future for about 40 years now. I for one am excited that the future is almost here. Once cards are fast enough to run it in real time @ 4k 60 fps, it will be worth the wait. But to get to that point, we first need to take steps to get there. This is one of those steps.

While Ray Tracing is the holy grail, NVIDIA is tackling the simplest least complex demo cases of single hit rays. Case in point: Putting a mirrored surface in front of a mirrored surface would break NVIDIA's hardware.

Sadly there have been no magic bullets to increase speed dramatically. I know as I have been following source code changes for POVRay and looking at things like bounding boxes and caustics, etc... There hasn't been a major code change in years on how these are calculated. They just modify the algorithms to use new hardware resources which helps make them faster, but makes marginal improvements at best. The last major speed boost was threading which appeared with the pentium D's

While I commend NVIDIA for tackling basic ray tracing, don't call this actual ray tracing.

d8lock

Gawd

- Joined

- Feb 12, 2012

- Messages

- 684

> Have you guys checked out the return policy for most (if not all) NVIDIA GeForce RTX 2080 and 2080 Ti GPUs? They are non-refundable. Not to beat a dead horse here, but it would be an excellent idea to order after the reviews come out. No worries, NVIDIA won't run out of these.

Newegg has that policy now too. I tried to get a refund on a broken Vega and they would only do replacements. Lame, but Nvidia isn't the only one doing it.

I don't like the power consumption and the many three-slot cooling solutions that I have seen so far, so I will skip this generation. I'm looking forward to NVIDIA's 7nm refresh. By then the tech will have improved, with more support from developers, not to mention power consumption and heat output. I've been an early adopter too many times; however, usually, I got my money's worth. I don't feel like this is one of those times. These GPUs are overpriced, no matter how one looks at it, and I don't feel like throwing money away.

I've read many of the comments on this forum regarding pricing. Some folks are of the opinion that the price hike is justified because of the increased performance. I disagree. The norm used to be that when a new product came out, it replaced an old one at the same price point. Price hikes used to be minimal and usually you as a consumer got something in return (better quality cooler or something). So this logic that you pay more for more performance with every new release is flawed. In 5 years we'll pay $1500 for a midrange GPU. It is not how things in tech have worked in the past, and I can't see this working in the future. If you want to get consumers to adopt a new product, you have to offer them bang for the buck at every price point/tier.

Wow, that is cool. That's how pre-ordering should work

D

Deleted member 93354

Guest

> Gee I remember back in the day a Lowly Commodore Amiga could do ray tracing. Would that be about the speed of Nvidia's first gen RTS? ha ha ha ha.. you never buy first Gen.....

Sculpt3D for the win.

D

Deleted member 93354

Guest

On a side note, I'm waiting for 7nm myself. I don't expect a big speed bump, but hope for a lower price point due to a smaller die. I used to be able to get a top tier card for $400. Even if I go 60% above $400, ($640) I'm still at middle tier. But I'll consider it if top tier prices come down. Until then I'm staying out of the market. I do NOT have $600/year to throw away on a card. ($1200/2 year upgrade cycle some of you have) I have maybe $125/year.

This is a gross oversimplification and makes far too many assumptions.

Ray tracing does not automatically guarantee better renderings. When I was doing a lot of ray tracing work, I used to run a quick render, just to make sure all the objects I wanted in the scene were there. A quick render took a few minutes to do. It used 1 ray, and 1 bounce for the calculations. It looked like crap, but it showed all the objects.

A really good ray trace needs hundreds of rays and about 16 bounces (the number will depend on the number of lights in a scene) for each pixel. I wonder how many rays NVidia is using? Is it hard coded? Is it dynamic? How many bounces? Hard coded? Dynamic? Are they changing the counts based on frame rates? Or is it up to the developer to do that? How many light sources are supported in a scene? How do they handle that?
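To put rough numbers on the gap between a quick preview and a quality render, here is a back-of-the-envelope sketch in Python. Everything in it is an assumption for illustration: 100 rays per pixel stands in for "hundreds", 1080p at 60 FPS is picked arbitrarily as the target, and the function name is made up. It says nothing about what NVIDIA's hardware actually does, and it treats every ray as taking every bounce, so it is an upper bound.

```python
def ray_segments_per_second(width, height, rays_per_pixel, max_bounces, fps):
    """Upper bound on ray segments traced per second.

    Assumes every ray survives all of its bounces, so each ray costs one
    primary segment plus max_bounces secondary segments.
    """
    pixels = width * height
    segments_per_frame = pixels * rays_per_pixel * (1 + max_bounces)
    return segments_per_frame * fps


# "Quick render" settings from the post: 1 ray, 1 bounce per pixel.
quick = ray_segments_per_second(1920, 1080, rays_per_pixel=1, max_bounces=1, fps=60)

# Hypothetical quality settings: 100 rays (stand-in for "hundreds"), 16 bounces.
quality = ray_segments_per_second(1920, 1080, rays_per_pixel=100, max_bounces=16, fps=60)

print(f"quick:   {quick:,} segments/s")
print(f"quality: {quality:,} segments/s")
print(f"quality costs {quality // quick}x the quick render")
```

Under those assumptions the quality settings cost 850 times the quick render, which is why the ray and bounce counts matter so much.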

The "AI" implies they are doing these things dynamically, which means from frame to frame there could be visual differences based on how many light sources and objects are moving around. The more light sources and moving objects, the less pixel caching you can do. Moving light sources add another level of complexity to a scene.

I have no doubt a dedicated video card with hardware based ray tracing support can beat any CPU doing it. There are just too many questions and not enough answers, right now.

I know one thing. If I do not see dedicated ray tracing programs throwing support at this, then that is all I need to know to

It is a gross oversimplification, but what i said and then reiterated later

AA is basically over rendering 3d-2d then doing a 2d average to produce a pixel.

Hybrid ray-tracing is building each pixel from a top and bottom acceleration structure. It's basically sampling in 3d and averaging in 3d.

Seems wrong to do really expensive 3d calculations, then ruin them with a 2d filter.

should hold true.
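As a rough illustration of the "over render, then do a 2D average" description of AA, here is a minimal Python sketch of a box-filter downsample. It only shows the generic supersampling idea; it is not how any particular driver or DLSS works, and the function name and the tiny 4x4 "image" are made up for the example. It assumes the image dimensions divide evenly by the factor.

```python
def box_downsample(image, factor):
    """Average each factor x factor tile of a 2D grid of floats into one pixel.

    Assumes the image height and width are exact multiples of factor.
    """
    height, width = len(image), len(image[0])
    out = []
    for y in range(0, height, factor):
        row = []
        for x in range(0, width, factor):
            # Collect the over-rendered samples that cover one output pixel.
            tile = [image[y + dy][x + dx]
                    for dy in range(factor)
                    for dx in range(factor)]
            row.append(sum(tile) / len(tile))
        out.append(row)
    return out


# A 4x4 "over-rendered" frame with hard 0/1 edges (hypothetical data).
hi_res = [
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 0.0],
    [1.0, 1.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
]

low_res = box_downsample(hi_res, 2)
print(low_res)  # [[0.75, 0.0], [0.5, 1.0]]
```

The averaging happens purely in screen space, after the 3D work is done, which is the point being made about filtering expensive samples in 2D.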

This is hybrid ray tracing (HRT) more akin to light mapping and anisotropic filtering than casted from camera ray tracing.

When doing AA on a HRT scene, you're basically casting another ray from the camera, doing a slightly skewed calculation, then averaging the results.

With HRT there already is an area for averaging results. the top and bottom acceleration structure. I have no idea how many samples this entity can support, but it is logically the best place to do it.

The AI in this case is a neural network. It optimizes the path from light to surface (or the reverse), but not to camera. I look forward to finding out what the throughput is, but by nVidia's marketing, it's ~7-12 times faster than typical GPU calculations. When we find a trustworthy source, the true value of this product will be known.

check the SIGGRAPH stuff for that looks like all the major CAD/ 3D render software will have support for it and it even looks like the major studios re; Disney and Pixar want it yesterday

one demo i found

Good. That is where I expected this to be supported best. A bit of a no-brainer there. as long as it is better or equal to what they are using now. Games, on the other hand........

Another kiddo that doesn't understand how supply and demand works.

If the new cards are overpriced then the market will decide and prices will come down.

All this temper tantruming about the prices is hilarious. These are flagship videocards, not an entitlement.

Err, isn't each one of the people "temper tantruming" a small piece of the will of the market? I mean they are the target market after all.

I don't know anyone who has never gone to a clothing store, picked up a shirt, pair of pants, shoes, whatever, looked at the price tag and said "That's re-god-damn-diculous" set it down and walked away. I don't see how this is any different.

Night_Hawk-19

Gawd

- Joined

- Jun 20, 2004

- Messages

- 825

I can. Come to Canada. This is ripoff. 1600 bucks for 1080ti doesn't include 12 percent tax. Sorry I'll wait for reasonable prices. Plus its niche thing in my eyes.

I just checked Newegg and every single 2080ti is sold out for pre-order. Sounds like they priced it too low. What the mining craze showed these guys was, they were way undervaluing their products. You can always easily lower price, but raising MSRP is just not good practice.

I don't agree w/ the prices myself, as far as for myself, but alas... supply and demand is at work now.

Nenu

[H]ardened

- Joined

- Apr 28, 2007

- Messages

- 20,315

> I just checked Newegg and every single 2080ti is sold out for pre-order. Sounds like they priced it too low. What the mining craze showed these guys was, they were way undervaluing their products. You can always easily lower price, but raising MSRP is just not good practice.
>
> I don't agree w/ the prices myself, as far as for myself, but alas... supply and demand is at work now.

How many were available for pre-order?

D

Deleted member 93354

Guest

I just checked Newegg and every single 2080ti is sold out for pre-order. Sounds like they priced it too low. What the mining craze showed these guys was, they were way undervaluing their products.

Blah ha ha ha ha ha

God that is so badly concluded it isn't even funny.

I guess you could say Fury and Fury Nano were underpriced too because they all sold out.

It's called early adopter tax. Or as I like to call it more money than brains tax. You just don't know how it will pan out until you get reviews of said units.

I think once miners see you get better value from a 1080ti in terms of mh/watt the market at that price will quickly fall. You'll get maybe 30% better performance with a 50% price boost. So it's not worth it. Miners would pay for circuitry (rtx units) they will never ever use
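The arithmetic behind that, as a small Python sketch. The 30% and 50% figures are the guesses from the post above, not measured numbers, and the function name is made up for the example.

```python
def relative_value(perf_gain, price_increase):
    """Performance per dollar of the new card relative to the old one.

    Both arguments are fractions, e.g. 0.30 for +30%.
    A result below 1.0 means less performance per dollar than before.
    """
    return (1 + perf_gain) / (1 + price_increase)


# Guessed figures from the post: ~30% faster for ~50% more money.
ratio = relative_value(0.30, 0.50)
print(f"performance per dollar vs. old card: {ratio:.2f}x")
```

With those inputs the new card delivers about 0.87x the performance per dollar, roughly 13% worse value, before counting power draw.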

> How many were available for pre-order?

I guess that's a fair point, I don't know how many are actually available to pre-order... there are 19 listings I see, all show out of stock and the auto-notify option. But again, not sure if those could be pre-ordered (?). I don't see a way to do that, so this may not be a good way to measure at all. My apologies.

DG, I only meant that MSRP on their cards in the recent past was several hundreds of dollars less than what the products were actually selling for... I can't imagine that went down well.

Increased pc performance is not related to price. It’s supply and demand. If pc prices were scaled with performance, they’d be unaffordable. Even avaricious Apple keeps their flagship the same price year after year. Nvidia should be punished for their flagrant greed

Once the 1080 series stock dries up I strongly suspect the 2080 series will drop in price.

A less greedy entity would just lower the price on existing stock - I am betting they ramped up production way too far for crypto-miners and it crashed.

from all that i have read, to say the RTX cards do ray tracing.. is half true.

They do do ray tracing, but its only really shadows/reflections. NOT the entire scene, which is still rendered the normal way, just with the fancy ray traced reflections added on.

Still, should look nice. on your flat monitors.

What i am really thinking as to where it will shine, is in VR. At least thats my hope

Its going to suck in VR... its going to be a performance hog. I really doubt many VR developers will even attempt to implement it.

As it is, VR engine developers such as Unity... don't suggest developers use real-time lighting at all, as the performance hit is too great. Ray traced lighting is going to be an even larger hit.

The real issue is VR engines use completely different rendering. Most have moved on from deferred rendering (which is what say Epic used initially for VR as it doesn't allow most forms of AA to work at all or without major performance issues) Clustered Deferred and Forward Shading are the main methods of VR rendering today.

http://advancedgraphics.marries.nl/presentationslides/09_clustered_deferred_and_forward_shading.pdf

I don't claim to be a VR programming genius or anything... but as I understand it, ray tracing light paths + clustered forms of rendering will never work. Perhaps there is some other novel way to pull it off. IMO though VR + ray tracing isn't anything anyone is going to see for real until 3rd+ generation RTX hardware. The performance hit to calculate VR without clustering or deferring rendering so you can calculate even a handful of rays is simply going to be way too high.

For the next few years it's going to be one or the other.... VR with standard (heavily controlled and mapped) lighting sources, or non-VR with higher IQ in the form of RT.

The initial implementation of ray tracing via RTX will be more novelty than anything else. Limited in scope and slow. It was a mistake for Nvidia to price the RTX cards so high. If they really want this to take off it needs wide adoption for developers to take notice. With the high prices not many are going to jump on board which will stunt its overall growth. They should have made RTX as cheap as possible to get as many as they can to buy into it.

D

Deleted member 93354

Guest

Its going to suck in VR... its going to be a performance hog. I really doubt many VR developers will even attempt to implement it.

As it is, VR engine developers such as Unity... don't suggest developers use real-time lighting at all, as the performance hit is too great. Ray traced lighting is going to be an even larger hit.

The real issue is VR engines use completely different rendering. Most have moved on from deferred rendering (which is what say Epic used initially for VR as it doesn't allow most forms of AA to work at all or without major performance issues) Clustered Deferred and Forward Shading are the main methods of VR rendering today.

http://advancedgraphics.marries.nl/presentationslides/09_clustered_deferred_and_forward_shading.pdf

I don't claim to be a VR programming genius or anything... but as I understand it, ray tracing light paths + clustered forms of rendering will never work. Perhaps there is some other novel way to pull it off. IMO though VR + ray tracing isn't anything anyone is going to see for real until 3rd+ generation RTX hardware. The performance hit to calculate VR without clustering or deferring rendering so you can calculate even a handful of rays is simply going to be way too high.

For the next few years it's going to be one or the other.... VR with standard (heavily controlled and mapped) lighting sources, or non-VR with higher IQ in the form of RT.

Unity, as a platform, ports well across multiple platforms and is relatively easy to use. But as far as bleeding edge performance goes, it is severely lacking in terms of speed. So it's well horizontally integrated, but poorly vertically implemented.

But I agree with you, that it is a speed hog. From what I understand RTX will knock your framerates down to 1/4 their previous rates at a similar resolution. I say this because all the demos are at 1080p. (1/4 the resolution) So that 90fps target will be a pipedream with RTX on.
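For reference, the "1/4 the resolution" figure is just pixel counts, sketched below in Python. The leap from a quarter of the pixels to a quarter of the frame rate is the poster's inference, not something this arithmetic proves; the constant and function names are made up for the example.

```python
# Standard resolutions as (width, height) in pixels.
RES_1080P = (1920, 1080)
RES_4K = (3840, 2160)


def pixel_count(resolution):
    """Total pixels for a (width, height) resolution."""
    width, height = resolution
    return width * height


ratio = pixel_count(RES_1080P) / pixel_count(RES_4K)
print(f"1080p has {ratio:.2f}x the pixels of 4K")
```

So a demo running at 1080p is shading a quarter of the pixels a 4K frame would need.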

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 42,143

> How many were available for pre-order?

This. There is a rumor going around that NVIDIA put the squeeze on AIB supply in retaliation for the price increase on Pascal during the mining craze, holding Turing back until they can unload their Pascal inventory.

This. There is a rumor going around that NVIDIA put the squeeze on AIB supply in retaliation for the price increase on Pascal during the mining craze, holding Turing back until they can unload their Pascal inventory.

Ah so the rumor is Nvidia is punishing the bad AIBs... by forcing them to sell all the chips they overproduced.

Lets not make Nvidia sound like a charity here folks. lmao

The mining craze made Nvidia A LOT of money. They are not punishing anyone for selling them A LOT of cards. They are in fact rewarding their AIBs and themselves by selling through overstock at full pop. lol

notarat

2[H]4U

- Joined

- Mar 28, 2010

- Messages

- 2,501

Once the 1080 series stock dries up I strongly suspect the 2080 series will rise in price.

A less greedy entity would just lower the price on existing stock - I am betting they ramped up production way too far for crypto-miners and it crashed.

FTFY

> The initial implementation of ray tracing via RTX will be more novelty than anything else. Limited in scope and slow. It was a mistake for Nvidia to price the RTX cards so high. If they really want this to take off it needs wide adoption for developers to take notice. With the high prices not many are going to jump on board which will stunt its overall growth. They should have made RTX as cheap as possible to get as many as they can to buy into it.

The largest chip, or thereabouts, is kind of hard to ship dirt cheap.

FTFY

You may disagree with me, that is fine.

Please don't misquote me.

I just want 1 thing from these, and that's to drop the price a little on Vega cards. Slash 500 DKK off a Vega 64 and I would be all over it.

Glad I'm not a hardware news writer anymore. Trawling through all those tech sites every day and writing up the best stuff took a lot of time for an unpaid job.

When these finally release there will be reviews left and right, reaching more or less same conclusion depending on games test suite.

How do you like Them taxes now Scandinavian Socialist boy. With your 25% sales tax and ROODGROORRMOOHHHFLOOOTTEEE language.

We use good old American Oil Dollars here made by pure freedom.

But besides that anything else you said I agree with.

/s /jk - just in case nobody picked it up.

> One is lighting the other is post process AA or some type checkerboard rendering sort of thing. I don't see why they couldn't be used at the same time.

Perhaps it is because they are both relying on the tensor cores to function. There are only so many tensor cores. And if they are all being used to perform RTX ray tracing, there will be none left available to perform DLSS AA.
