OutOfPhase

Supreme [H]ardness

- Joined: May 11, 2005
- Messages: 6,580

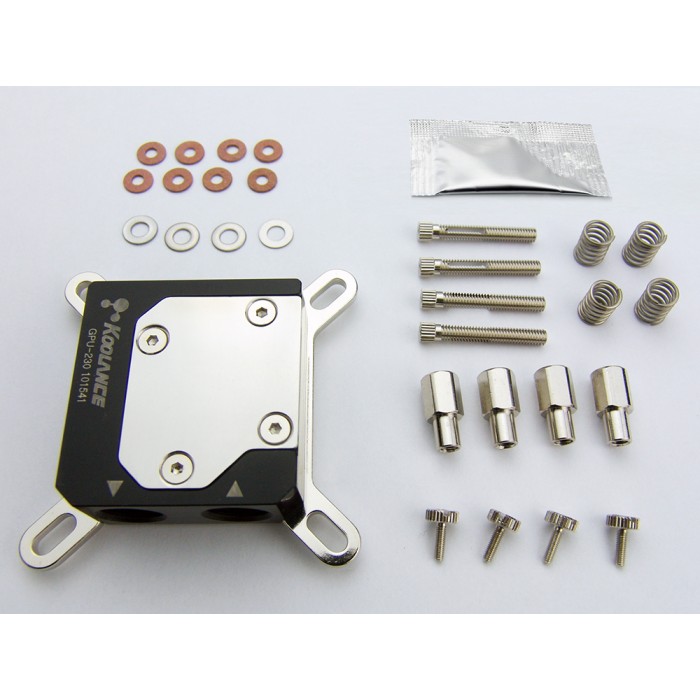

What I am saying is that:

cageymaru said that he uses custom loops because he wants his components to run cooler and consume less power.

If that's truly the case, why would he buy a Radeon RX Vega 64?

It uses more power and generates more heat than the GeForce GTX 1080/1080 Ti.

He could just as easily have put a GeForce GTX 1080/1080 Ti in a custom loop.

It seems to contradict his own stated goal of having his components running cooler and using less power.

No, it doesn't. He wants a Vega specifically, and wants it to run cooler.
