cybereality

[H]F Junkie

- Joined

- Mar 22, 2008

- Messages

- 8,789

OK, I think I may be using TXAA so that is probably the difference. Not saying the review was fake, just that I am getting better performance but lots of variables in the equation.

GTAV has the most extensive graphics tweaking options of any game ever made, so who knows which of your settings could be making the difference lol

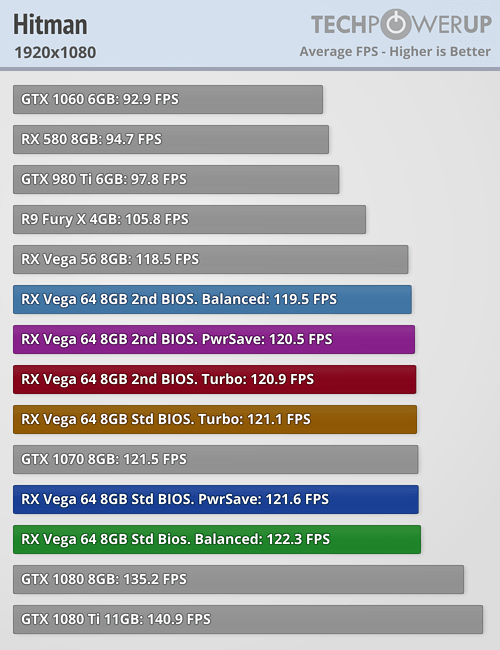

Ummm, I have not seen anything that averages the 56 out to about GTX 1080 performance. If that were the case, reviewers would be going nuts singing its praises.

A couple of discrepancies as well.

I noticed that according to TPU's results, Vega seems to be stuck on 77 fps in FO4:

https://www.techpowerup.com/reviews/AMD/Radeon_RX_Vega_64/17.html

It resoundingly beats the 1080 at 4K, but is still about 10~15% behind the 1080 Ti there.

That's the only major discrepancy, the other being Vega 56 beating the 1080 in certain games, though on average it comes out about equal to the 1080.

That's splitting hairs.

Ha, this must be your first video card launch. The 480s sold out instantly too and were hard to get for a couple of weeks.

Does it matter if we can't even buy the things? This launch is a joke. AMD delayed the release this long just to have cards go out of stock in 10 minutes?

That's a good point. Wonder what GCN support will look like in a few years...

Vega 56 seems like the best of the cards AMD has, Vega 64 is pretty meh. Thankfully this is the last incarnation of GCN and Navi is already done, so Vega should only have a little over a year to exist.

To be frank, none of those numbers are very appealing.

Nearly all reviews were showing abysmal GTAV numbers. Vega seems to suffer in most other DX11 games, but not nearly as badly as in GTAV.

The worst part about Vega is anyone that was dumb enough to buy a Freesync monitor is stuck with 1070/1080 performance for another year...

Such a great post!

https://www.hardocp.com/article/2017/07/26/blind_test_rx_vega_freesync_vs_gtx_1080_ti_gsync

You MUST have missed it. Read it.

Is that supposed to be a bad thing? Not all of us upgrade every year, so the more miles we get the better. I have had my 290 for over 2 years and gave it to the wife, who uses a freesync monitor. I am now getting the WCed Vega 64, which I will use for at least another 2-3 years and which I am mating to a freesync 2440P monitor for that long-lasting experience.

You probably just argued the case for FuryX and Maxwell....

No need to upgrade then if going by that.

Cheers

If only they had used identical panels... Otherwise that blind test leaves a sour impression.

Granted, that is a valid scientific concern, however the equivalent monitors that fit that criteria are few and far between. So at best we can determine that these monitors add greatly to the experience of the user and, again as I stated above, add greatly to the longevity of said GPU.

Here's this dummy's freesync monitor gaming experience, which was butter smooth with a pair of Fury X in crossfire at about 70fps or above on nearly any game. I bought the pair of Fury X at $325 each about a year ago, and bought the three HP Omen freesync 32" monitors new at $300 each as well. A pair of Fury X is as fast in triple A titles as a single 1080ti. I swapped out from Fury X to a pair of 1080ti in the last month and my experience got worse. I'd switch back in a heartbeat, but unfortunately I had already sold my pair of Fury X cards to fund the 1080ti. No freesync is a pretty big detriment. I went from silky smooth to encountering microstutter and noticeable frame drops (when my FPS deviated from 60Hz) and I'm back to vsync lag. I was planning to pick up a liquid cooled Vega and replace my 1080tis to gain back freesync.

It's been a great gaming experience.

What's your monitor experience oh wise one?

(and by the way - this is the first top shelf card that hasn't been within 10% or so of Nvidia's flagship for several generations - so the performance delta hasn't been this wide up until now)

View attachment 33440

Now to apply a bit of balance:

I'm a bit bitter I can't find one single liquid cooled Vega yet. (And I did all the prep! Signing up for notifications via text and email, hitting F5 to the point of obsession, waiting for the darn things to come into stock.) Come on AMD, you said you were delaying to make sure there were plenty at launch and that seems a foul lie. Microcenter in KC got four cards at launch. 4. Two air cooled Gigabyte and two air cooled XFX. No water cooled. Newegg and Amazon and Best Buy sold out in moments.

I'm also a bit annoyed AMD released Vega without working crossfire support because it worked so darn well with the Fury X. (I never had a single issue the entire time I used it). How does that even happen? You promoted the use of crossfire in marketing through rx480 and rx580. Crossfire has been super strong and reliable for several generations...and now it doesn't even work with the launch driver?

I'm also annoyed AMD doesn't offer a different cooling solution for the air cooled models. I agree with Brent's review in that the blower is too loud on AMD cards - making the water cooled variant the only reasonable option for those of us who like a silent PC. Something like EVGA's ICX cooler solution is so much better than the blower that it's unreasonable to only offer the blower at this stage.

Also a little peeved at the MSRP bait and switch. And surprised. Prices were announced by AMD several weeks back. At launch $100 higher. Lame.

I see the disappointment Vega isn't stronger, but it never was supposed to be. Those following along knew it was only a matchup for the 1080. That's all that was ever claimed. The issue was the long delay for launch - not the performance. The parts delay made the performance seem weak. Six months ago people wouldn't have been bitter about the performance. The 1080ti wasn't out yet and AMD would have been competitive with Nvidia 1080, albeit at higher power and heat levels -- so what else is new?

A six month delay changed the whole narrative.

Just get a 1080ti or Titan Xp. You'll spend less and it's just as fast if not faster than crossfired Vega would be.

The worst part about Vega is anyone that was dumb enough to buy a Freesync monitor is stuck with 1070/1080 performance for another year...

R5 [email protected] | DEEPCOOL CAPTAIN 240EX {Push/Pull}

MSI B350 Tomahawk

Corsair LPX@2933

EVGA 1070 FTW@2126/8886

Seasonic 860 Platinum | HYPER X 120GB SSD | Samsung 850 Evo 500GB SSD

Odd thing to say from a guy whose own signature shows he only has a 1070. So you're making fun of people with Vega or getting Vega, yet you have less graphics power than them?

Where is our prophet Anarchist4000 when we need him? Read from the holy scriptures, tell us more about how power consumption will be reduced by 30% etc. God knows, we need it.

500W in Unigine Heaven when overclocked. This is literally half as efficient as a 1080.

Overclocked cards use more power, and you're surprised by this? Drop to power savings, stick a water block on it and you'll get 1080 perf/watt at similar performance. Or did you not follow the reviews? Where lower power modes reduce power with almost no performance hit.

Still need the drivers tuned and enabled everywhere, nothing new there. So no idea wtf you're going on about. Or why you think something has changed since the last time you mentioned it.

I'm still sticking with my 1080ti comparison as that's where current numbers with some new features have it. I'd buy one to test if they weren't sold out instantly because demand is so damn high.

Someone also aptly pointed out that Vega is still bottlenecked by its 4 compute engines (like Fiji) as illustrated in this game:

Anandtech asked AMD about this limitation and they essentially told them that they could have added more but didn't feel like it because it's more work...lol!

LMAO.

So you're saying the card with significantly higher clockspeeds is held back by 4 shader engines? At which point it gets identical performance? Because 4 triangles per clock wouldn't be higher with more clocks? That's 3rd grade math right there.

They simply don't need more than 4SEs. Just think, only 4SEs and already beating Pascal on some titles despite Nvidia having higher clocks. Wow!

I have reported you for contributing to my

When you say beating Pascal, do you refer to the 1070 having 60% higher geometry throughput than Vega 64? 13 tflops vs 7, and the 1070 is still winning lol. Vega should be twice as fast.

But please don't let common sense stop you from making me laugh

Card barely matches a 1080 when overclocked and drawing 350W.

I take it you didn't read H's or any other site's reviews? Where going from Turbo to Balanced simply reduces power consumption. Power saving has very little effect on performance in some cases while further reducing power. Your dissonance isn't my problem though, I gave fair warning this was coming.

Go read the H review. All that geometry throughput and they still aren't 60% ahead in all games. Just a synthetic benchmark nobody plays anymore.

Hell, if 4 tri/clock was a bottleneck, the higher clocked part should be faster. Just compare fury to Vega. Same SE limit with 1GHz vs 1.5GHz clocks and identical performance.
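To put rough numbers on that argument, here is a minimal sketch of the peak geometry math. It assumes one triangle per clock per shader engine (so 4 tri/clock total, as stated above) and the round 1GHz and 1.5GHz clocks from the post. The function name is made up for illustration, and these are theoretical ceilings, not measured results.

```python
def peak_triangle_rate(shader_engines, tris_per_clock_per_engine, clock_ghz):
    """Theoretical peak geometry rate in billions of triangles per second."""
    return shader_engines * tris_per_clock_per_engine * clock_ghz


# Assumed figures taken from the post: 4 SEs on both parts, 1 tri/clock each.
fury_rate = peak_triangle_rate(4, 1, 1.0)   # Fury at roughly 1GHz
vega_rate = peak_triangle_rate(4, 1, 1.5)   # Vega at roughly 1.5GHz

# Relative increase in the theoretical ceiling from clock speed alone.
uplift = vega_rate / fury_rate - 1.0

print(f"Fury peak: {fury_rate:.1f} Gtri/s")
print(f"Vega peak: {vega_rate:.1f} Gtri/s")
print(f"Uplift:    {uplift:.0%}")
```

If the 4 tri/clock limit really were the bottleneck in that title, the ceiling moving up by half should show up as some change in framerate rather than none.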

Why would 60% higher geometry throughput result in 60% higher framerate unless that's the sole bottleneck? The 1070 is outperforming Vega 64 in some titles where this is obviously an issue, and it's still 7 tflops,

Half as powerful as Vega and draws 150W.

ahahahahaha I'm sorry mate but today you've really done it I can no longer take you seriously. I have never seen a more pitiful stream of excuses in the face of such staggering evidence to the contrary.

Card barely matches a 1080 when overclocked and drawing 350W.

Drop the OC, enable power saving and you'll get the same performance for half the power draw. You have to wait for the drivers to be ready ahahahahaha

This is what he tells us while accusing people of having the mental acuity of third graders.

It wouldn't, but the original point was that the graph showed AMD's 4 SEs holding back performance. Despite the obvious fact that Vega would have a 50% higher limit with its higher clockspeed. Increasing throughput on a bottleneck by 50% should produce more than a 0% change. That part is simple.

Cover is a classic CPU bottleneck. On Linux even Further is ahead of 1080ti, albeit 0.3% along with other cards.

View attachment 33449

Not my fault if you don't believe the evidence in front of you. And yes some driver work is still needed as almost every reviewer I've seen has noted. Even Kyle mentioned it with the MSAA tests. Simple driver tuning right there.