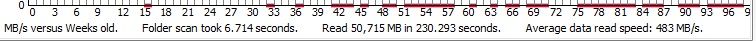

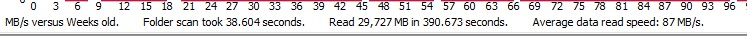

http://www.pcper.com/reviews/Editor...ptible-flash-read-speed-degradation-over-time

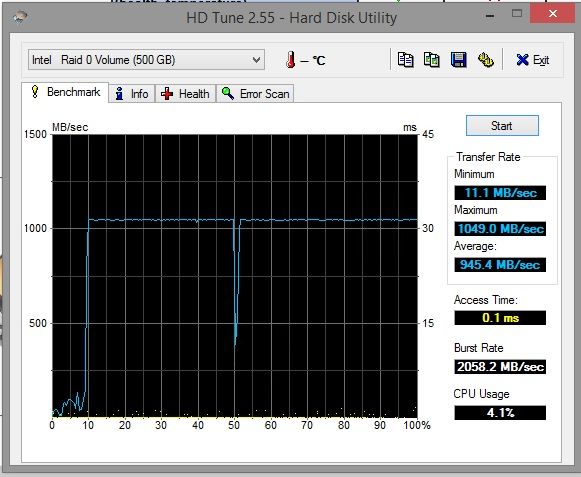

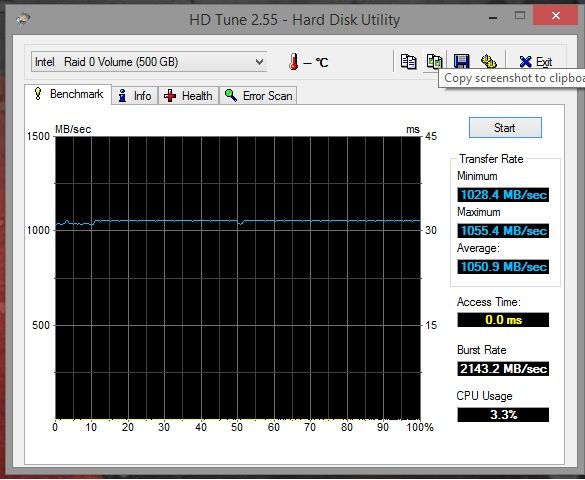

Is anyone here with an 840 or 840 EVO experiencing a drop in speed? I am running two 250 GB drives in RAID 0 in my system and haven't experienced it. Just wondering if this is something I need to be concerned about.