thedude0901

n00b

- Joined

- Oct 17, 2004

- Messages

- 41

Greetings,

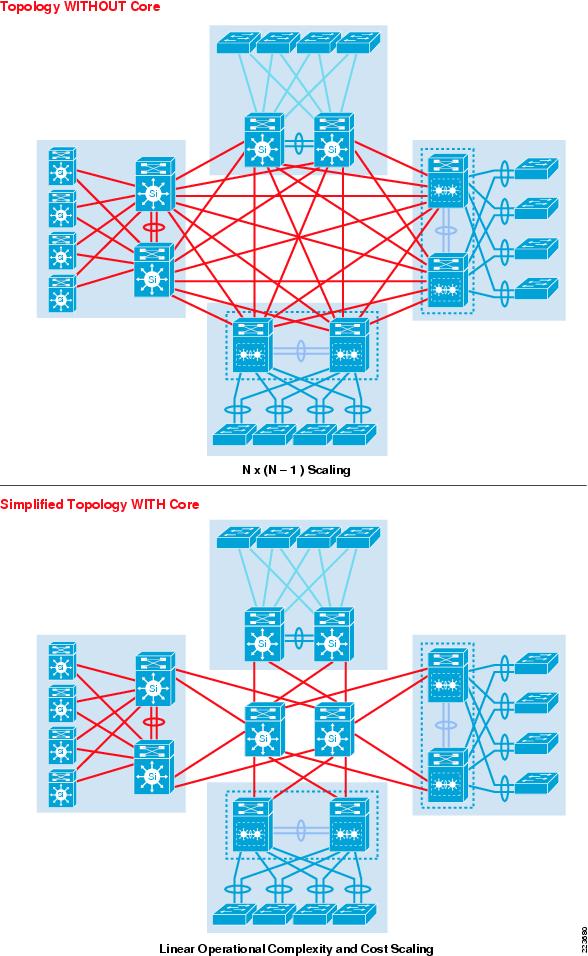

I'm redesigning my network because it's currently a tangled pile of crap. "Best practice" says to use Cisco's three-layer network model, but I'm wondering if I actually need that.

My network is as follows:

8 x 48-port PoE user access switches in 3 different closets.

7 x 48-port access switches in my data center serving about 170 servers.

The three-layer model says I'd have a core switch going to 4 distribution switches (3 closets plus the data center), then on to the respective access switches. While I understand the design, it seems a bit over-engineered. All of the traffic will be from the users to the data center, with 32 remote offices talking to the data center as well. The remote offices hang off an MPLS cloud and combined would do only about 30 Mbps total.

So, to save a hop on the network and a few $$$$$, couldn't I run trunks back from the access switches to a single, large core switch?
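To put rough numbers on the two options, here's a quick back-of-the-envelope sketch. The switch counts come from the inventory above; the single uplink per switch, the function names, and the way hops are counted (switches traversed between a user and a server) are assumptions for illustration only, not a sizing recommendation.

```python
# Switch counts from the post; everything else is an illustrative assumption.
USER_ACCESS_SWITCHES = 8   # PoE switches across the 3 closets
DC_ACCESS_SWITCHES = 7     # data center access switches
DISTRIBUTION_BLOCKS = 4    # 3 closets + data center
UPLINKS_PER_SWITCH = 1     # assumption: no redundant uplinks


def three_tier(access_switches, dist_blocks, uplinks):
    """Core -> distribution -> access."""
    return {
        "extra_switches": 1 + dist_blocks,          # core plus distribution layer
        "core_ports": dist_blocks * uplinks,        # only distribution connects to core
        "dist_ports": access_switches * uplinks,    # access uplinks land on distribution
        "switch_hops": 5,  # access, dist, core, dist, access
    }


def collapsed_core(access_switches, uplinks):
    """Every access switch trunks straight to one core switch."""
    return {
        "extra_switches": 1,                        # just the core
        "core_ports": access_switches * uplinks,    # every access uplink lands on core
        "dist_ports": 0,
        "switch_hops": 3,  # access, core, access
    }


if __name__ == "__main__":
    total_access = USER_ACCESS_SWITCHES + DC_ACCESS_SWITCHES
    print("three-tier:    ", three_tier(total_access, DISTRIBUTION_BLOCKS, UPLINKS_PER_SWITCH))
    print("collapsed core:", collapsed_core(total_access, UPLINKS_PER_SWITCH))
```

With one uplink each, the single core would need 15 trunk ports, versus 4 core ports plus four extra distribution switches in the three-layer layout. Doubling `UPLINKS_PER_SWITCH` for redundancy doubles the port counts in both cases.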

