Why OLED for PC use?

- Thread starter Quiz

- Start date

Zarathustra[H]

Extremely [H]

- Joined

- Oct 29, 2000

- Messages

- 38,878

With the FW900 as my main display at home, a pair of IPS panels at work, I noticed the former's richer, if smaller, picture when I got home, but I didn't perceive any delta in physical effects...

I don't know when these Sony screens were manufactured. I pretty much discontinued using my last CRT in 2005, and it was manufactured in ~2000 (it was an Iiyama Visionmaster Pro 510, based around a 22" Mitsubishi Diamondtron tube), but the "hot red face and ears after too many hours in front of a CRT TV/monitor" experience has kind of been a universal one for any CRT I've ever used. It was a common enough experience that the "CRT Tan" joke in the 90's came out of it.

My guess at why we have differing experiences is that Sony's last CRT's were somehow lower emitting than any CRT's that came before them, and I haven't used those.

Zarathustra[H]

Extremely [H]

- Joined

- Oct 29, 2000

- Messages

- 38,878

2003 for mine. It was the last of them or very nearly so...Sadly.

To each their own. I honestly don't understand the love for these things.

I hated LCD's when they first showed up in PC's in the 90's, but back then they were really bad. Horrid input lag, ghosting, terrible contrast, you name it.

By 2006 or so, I switched to LCD's and never looked back.

I've found LCD's to be sharper and clearer than CRT's for 20 years now, and CRT's lack key modern features like VRR/G-Sync/FreeSync, without which I wouldn't want to run any game these days. As someone who loves ever bigger and better displays and always has, I honestly don't miss CRT's at all.

The GDM-FW900's were amazing for a brief few years from ~2000 (or whenever the first ones were made) to ~2003, but at any time after that I think I would have preferred the top end LCD's of the day.

Anyway, the part I find really surprising is that this "hot, red, flushed face and ears from TV/monitor use" was such a huge part of everyday existence in the 80's and 90's when I started in the hobby. It is absolutely amazing to me that googling it today I can find almost no reference to it.

Ya the clarity was a big selling point to me when I decided to switch. The size was another one: I went from a 22" CRT, which is like 20" in terms of usable, to a 24" widescreen, which was real nice. Also related to the clarity was not having to adjust the geometry. One of my least favorite CRT activities was spending an hour trying to get the image to occupy as much of the screen as possible, but still be geometrically correct and in focus. Of course it couldn't hold that, so every so often you got to do it again.

It was a tradeoff initially for sure, but still one I liked. However as time has gone on LCDs just get more and more and more advantages.

jbltecnicspro

[H]F Junkie

- Joined

- Aug 18, 2006

- Messages

- 9,547

I think they’re great and their motion clarity has not yet been surpassed, except for a select number of VR headsets.

I’ve never heard of the CRT tan until this thread and I’ve been using them since I was a kid. I still have some PVM’s I use for gaming.

I mostly use LCD’s these days. I hope to get an OLED monitor when BFI comes around on them. Until then I’ll keep singing the praises of the Viewsonic XG2431. It’s clearer in motion than my Sony GDMs at 85hz. At 60hz it’s not quite there but much better than plasma. Obviously it falls short in all the other areas, namely contrast, but it’s as close to CRT as I’ve ever seen in an LCD so I’m happy.

I'll refer you to the Digital Foundry video on the FW900. There's a reason one of the last new ones in existence went for the price of a new car.

It's arguably the finest display ever made. And it still is. Clear sharp text, modern aspect ratio, big dynamic range, great blacks, amazing clear motion, etc.

Sure, in the office, I'll take a couple or more LCDs, because it's all about the real estate, but for my enthusiast machine I wanted something that performs. And for years in this display desert of a century there was nothing...

Zarathustra[H]

Extremely [H]

- Joined

- Oct 29, 2000

- Messages

- 38,878

I haven't used a CRT in person in almost 20 years, so I haven't exactly seen them side by side, but I can't imagine picking any CRT ever made over any decent LCD from the G-Sync/FreeSync/VRR era.

Times are better now. I'm typing this on a CX.

I'll actually disagree on cheap LCDs being bad. If they were all that was available sure, but I'm glad that they are there because they bring great display quality to people who don't have tons of money. Cheap LCDs are way better than cheap CRTs were and are cheaper to boot. Cheap CRTs SUCKED. Small, fuzzy, low resolution, etc, etc. They were what most people had to deal with though, most couldn't afford high end CRTs. I can't find historical pricing data, but I seem to recall my no-name 17" monitor back in 1998 was around $300-500. That would be about $570-950 today. That is, at a minimum, midrange monitor money and most people would consider it high end. You can currently spend around $200, which would be like $100 back then, and get a 24" monitor that, while not amazing by today's standards, would completely kick the crap out of that CRT.

I'm happy that more people can get better displays today, and the ultra-high end has survived and there's plenty out there. While, sure, I'd like even better displays than what we have, the high end is really good.
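For anyone who wants to sanity-check the price comparison above, here is a rough sketch in Python. It does not use real CPI data. The multiplier is only what the recalled figures themselves imply ($300 to $570 and $500 to $950), and the function names are made up for illustration.

```python
# Back-of-the-envelope check of the inflation figures quoted in the post.
# ASSUMPTION: the multiplier is derived from the post's own numbers,
# not from official CPI tables.

def implied_multiplier(then_dollars: float, today_dollars: float) -> float:
    """Then-to-today price multiplier implied by one pair of figures."""
    return today_dollars / then_dollars


def to_then(today_dollars: float, multiplier: float) -> float:
    """Convert a present-day price back into 1998 dollars."""
    return today_dollars / multiplier


# Both ends of the recalled $300-500 range map to the quoted $570-950.
low = implied_multiplier(300, 570)
high = implied_multiplier(500, 950)

# What a $200 monitor today would have cost in 1998 dollars.
budget_then = to_then(200, low)

if __name__ == "__main__":
    print(f"implied multiplier: {low:.2f} to {high:.2f}")
    print(f"$200 today is roughly ${budget_then:.0f} in 1998 dollars")
```

Both ends of the range imply the same multiplier of about 1.9, so a $200 monitor today works out to roughly $105 in 1998 dollars, which lines up with the "like $100 back then" estimate.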

Touché.

I also should add that in terms of tech getting killed off other than SED, it was for good reason. SED I don't know, I've heard conflicting things, but CRT and Plasma both had big drawbacks, even at the high end.

For CRT the size and weight were obvious ones, but another was limits to screen size. This was in part because of the size and weight, but it is just difficult to make large tubes that are also high rez and have good focus. Hence why you could find TVs in the 30-39" range, but monitors never got up to those lofty numbers. It was just too hard to make something like that with a good resolution and focus.

For Plasma, it was a whole bunch of little things. The weight was one thing, the power draw was another issue, particularly for large screens. Resolution was a big problem as well, getting that tech to scale to smaller display elements, hence why you often saw 1280x720 screens even for higher end ones and some of the smaller ones went even less than that. Burn in was somewhat of an issue too.

In general I can see why LCDs took them out, particularly as LCDs got better. While early ones competed pretty poorly with Plasma in a bunch of ways, as they improved the advantages of Plasma got less and less and the advantages of LCD grew and grew. Then of course OLED came along and given that you are using a CX, I'm guessing you'd agree that it did everything Plasma did better, and also did a bunch of other things it didn't.

Eventually I think LCD will die to OLED. Right now OLED can't compete on price, brightness, or burn-in resistance. However, those improve by the year. Eventually I imagine we'll hit a tipping point where OLEDs are good enough in all those areas to start being the preference, and LCDs become an economy and/or specialty thing, and then eventually fade away.

Also interesting side note related to SED: We might see it some day after all, in a slightly different form. Quantum Dots don't have to use visible light to stimulate them. We do right now: we put a white or blue backlight behind them and use that to stimulate the dots to make light. However, they could be stimulated using higher energy light, like UV, though you'd have to filter that. Or, and this is only in early testing, electrons directly. Shoot out electrons to stimulate the Quantum Dots to make colorful light, and save on energy. Also sounds an awful lot like the SED idea, but with Quantum Dots instead of phosphors. We'll see if it pans out.

Indeed as you say, CRT was ultimately doomed, because it couldn't scale. It was killed off quite prematurely though, through what I thought was very deceptive marketing, because LCDs had higher margins. I remember seeing this in person in a sense. I was at a Best Buy and saw this salesman trying to sell this elderly woman an LCD, whose screen wasn't any bigger than a CRT, but whose picture was just ghastly. And with a beautiful CRT nearby. I don't remember if I said anything or not that day as that crime was taking place. I'd like to think I did.

Plasma...always the taint of burn-in and never a good enough marketing campaign to dispel that. Panasonic I think got the Kuro IP and made these great and quite superior sets. Though bright rooms could be a problem if I recall. And I guess it wasn't profitable. Or not enough.

I thought FALD was dead for a few years, but maybe I was just paying attention to smaller sets that I could potentially use as a monitor. It all came roaring back though. I think it was Vizio that announced a new line of TVs with every size including some type of FALD. Might have been end of 2013 or in 2014. And with that the dam broke. Or that's how I perceived it anyway. (With, I assume, OLED's arrival helping spur FALD advancement.)

Would be cool to see SED. I think back in the day it got tangled up in some legal morass over the IP. I think that did get sorted, but too late maybe. Or not.

I tried to keep the faith with my FW900 as my main display, but needed some more real estate. Wanted a 4K for work. As I'm sure is apparent, I've not been disappointed. Especially its BFI function, which I have on just always.

t1337duder

Gawd

- Joined

- Sep 7, 2014

- Messages

- 783

Hard to believe, but it's been six years since I started using OLEDs as my PC monitor, and my main PC became an HTPC. I remember back when I first started doing it: almost nobody was doing it! I remember convincing my IT mentor & manager at my tech job to join the OLED train, and I remember him thanking me.

silentcircuit

Limp Gawd

- Joined

- Sep 7, 2023

- Messages

- 143

Yeah, when I bought it second hand in the mid 2000s my HP P1110 (Sony Trinitron rebrand) 21" CRT was like... $650? And again, that was used, from a neighbor down the street.

I was in high school and it was the most money I'd ever spent on any single thing at the time, about $1000 adjusted for inflation. My friends all thought I was insane... And were very jealous.

Indeed as you say, CRT was ultimately doomed, because it couldn't scale

Wasn't the problem rather that it scaled way too much?

I’m with Zarathustra on this: never heard of it or experienced it, and I’m an 80s kid. I’ve parked my face in front of CRTs for extended periods of time, and other than a normal flushed face from dying 800 times in a row from missed jumps in TMNT or a heated game of Doom, I don’t know anyone who ascribed this phenomenon to the CRT.

Darunion

Supreme [H]ardness

- Joined

- Oct 6, 2010

- Messages

- 5,353

I just remember CRT's giving me a ton of eye strain that I never felt with LCD or OLED. Also my screen size was limited by my ability to physically lift it, which isn't the case now lol.

Zarathustra[H]

Extremely [H]

- Joined

- Oct 29, 2000

- Messages

- 38,878

I'll actually disagree on cheap LCDs being bad. If they were all that was available sure, but I'm glad that they are there because they bring great display quality to people who don't have tons of money. Cheap LCDs are way better than cheap CRTs were and are cheaper to boot. Cheap CRTs SUCKED. Small, fuzzy, low resolution, etc, etc. They were what most people had to deal with though, most couldn't afford high end CRTs. I can't find historical pricing data, but I seem to recall my no-name 17" monitor back in 1998 was around $300-500. That would be about $570-950 today. That is, at a minimum, midrange monitor money and most people would consider it high end. You can currently spend around $200, which would be like $100 back then, and get a 24" monitor that, while not amazing by today's standards, would completely kick the crap out of that CRT.

I'm happy that more people can get better displays today, and the ultra-high end has survived and there's plenty out there. While, sure, I'd like even better displays than what we have, the high end is really good.

That's a good point.

The Sony GDM-FW900 which retrospectively has become so popular was over $2000 when it was new, I believe.

My 22" Iiyama Visionmaster Pro 510 cost me about $1000 in 2000.

I thought FALD was dead for a few years, but maybe I was just paying attention to smaller sets that I could potentially use as a monitor. It all came roaring back though. I think it was Vizio that announced a new line of TVs with every size including some type of FALD. Might have been end of 2013 or in 2014. And with that the dam broke. Or that's how I perceived it anyway. (With, I assume, OLED's arrival helping spur FALD advancement.)

FALD never died, it was a tech that just required other tech advancement to become better. Early sets couldn't have a lot of zones, in large part because of the computing power needed to properly control them. I mean, sure, in theory you could develop a massive power-hungry chip to do it, but nobody would pay for that, so what you could get out of the kind of chips we used for control was limited. Smaller sets were simply limited by LED size. Prior to MiniLED getting good you just didn't have small, bright LEDs that you could use on smaller displays to get good results.

It's also the kind of thing where you need something of a feedback cycle: Initially, with little to no HDR content, FALD wasn't that interesting for TVs. Yes, it can increase contrast ratio, but really VA tends to do a good enough job with SDR content for normal viewing conditions. So it's just not something that is a huge selling point to consumers. However, as more HDR stuff becomes available, some people are going to want that experience and you need FALD for it. Then as more TVs support HDR, more content creators see it as worth using, which means more content, so more people want TVs that support it, and so on.

It really hasn't been that long either. Remember that Dolby Vision, which was the first HDR format, only came out in 2014. That's when the Dolby talk about the development of the PQ curve I linked was from.

I had a sample of what might have been the first FALD released, Samsung's initial 40" model tuned to mimic a CRT. (Made quite a splash and I read an interview with its designers.) I remember watching part of "300" on it and literally thinking, "now this TV is the real deal". Someone had dropped it or something and damaged the lower right corner of the panel, so I returned it. And they offered to exchange it instead, but I thought, nah, next year's will be even better. LG, though not Samsung, did offer a 42 or 43" the following year, probably with an IPS though instead of Samsung's outsourced 3000:1 VA panel. And then I think TV manufacturers just retreated from at least the smaller sizes altogether for years.

Someone here or at AVS Forum reported seeing a new patent for something called Edge Lighting. However, I'm not sure we really knew at the time the doom that portended...

EDIT: Was a misspelling.

elvn

Supreme [H]ardness

- Joined

- May 5, 2006

- Messages

- 5,314

One of my FW900 from a relatively long time back but late for the crts. I've always used more than one monitor so that I could enjoy the tradeoffs of two different types.

jbltecnicspro

[H]F Junkie

- Joined

- Aug 18, 2006

- Messages

- 9,547

I like a multi-screen/type approach. It's just I have to have them in different rooms. Such is my psychology...

My CRT was my media monitor on my gaming computer. I never did any work on that machine. I would do the same with OLED.

elvn

Supreme [H]ardness

- Joined

- May 5, 2006

- Messages

- 5,314

I have another rig for home duties with its own screen, plus a laptop that I sometimes hook up to my 77" OLED TV. I wouldn't call that multi-monitor. I have a metal stand I set my Samsung tablet up on in portrait next to my laptop at times though, away from home. That's a mini multi-monitor setup. My mouse can swap between the two with a button on the fly pretty seamlessly.

. . . . . .

In the future it'll be lightweight glasses, extreme rez, high hz anywhere.

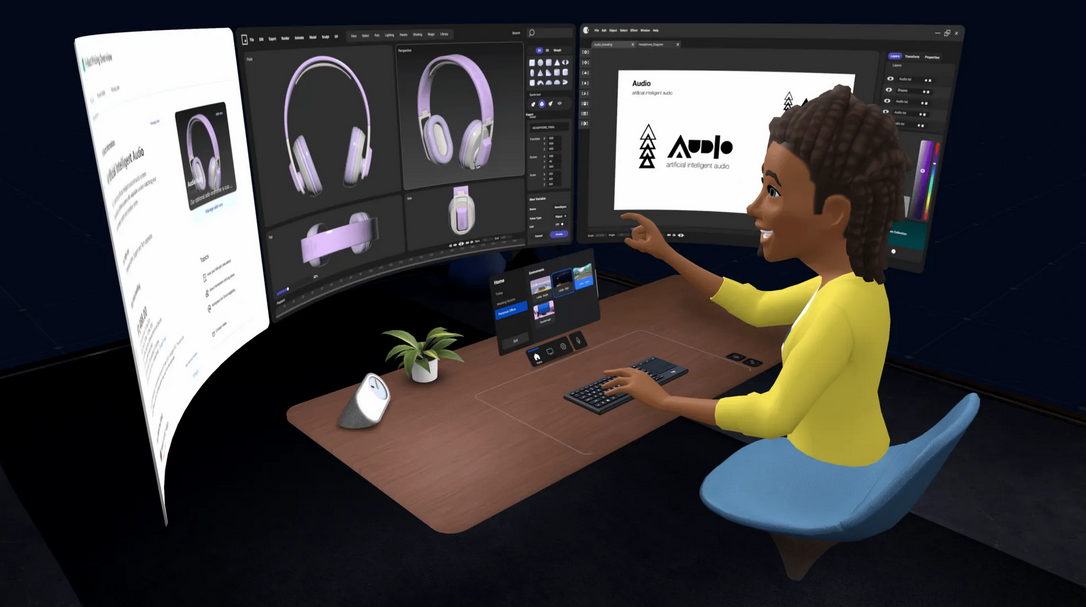

Baby steps so far, quest 3 multiscreen shows a rudimentary version of that kind of thing. It's still on a larger headset but is prob foreshadowing the way things will go in the long run :

https://www.reddit.com/r/OculusQuest/comments/17cuzz8/spiderman_2_with_the_quest_3/

. . . . . . .

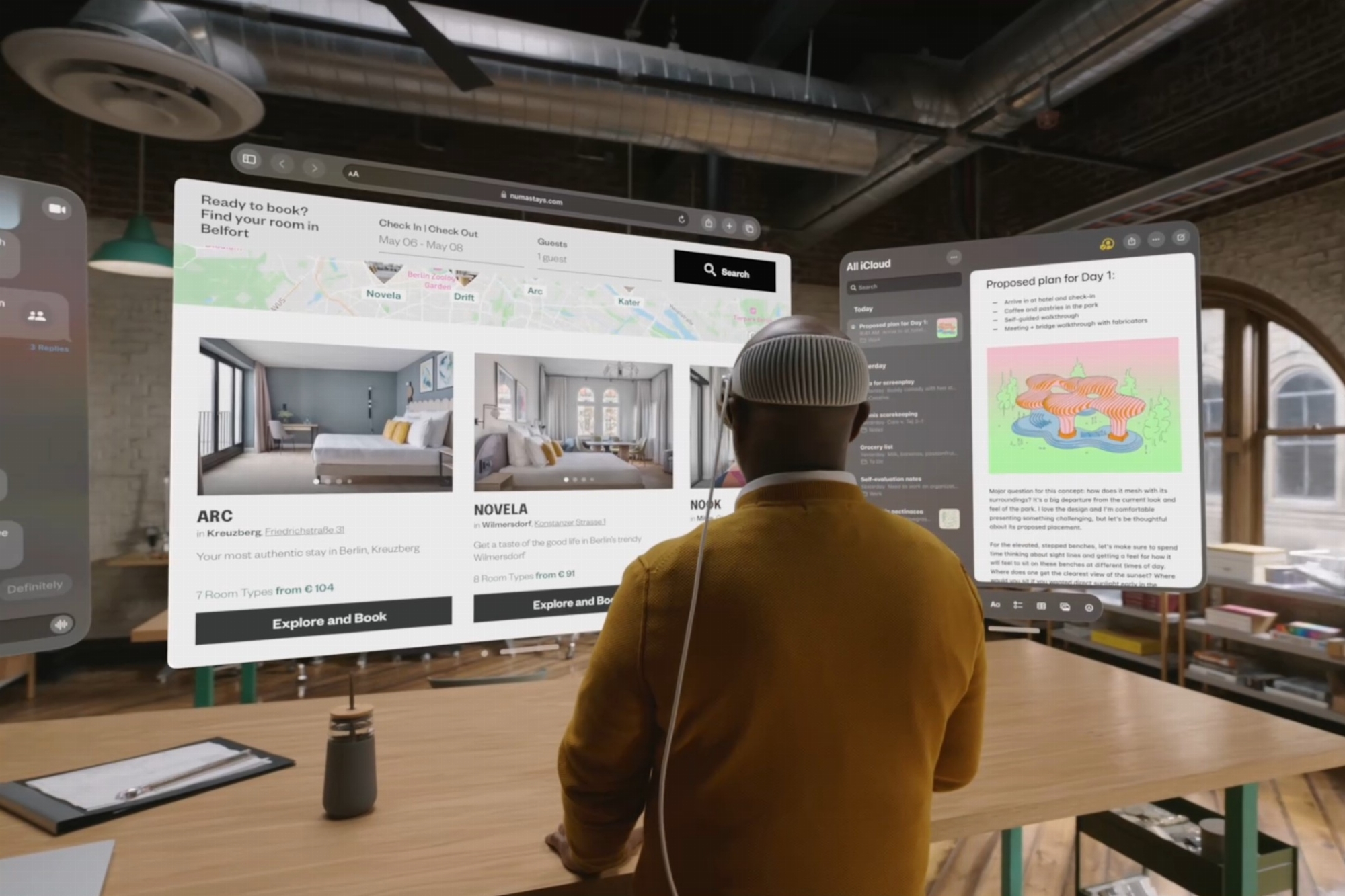

There are some 1080p microoled sunglass style devices that can show a screen in front of you too. Apple pushed their lightweight sunglasses format back to 2027, so might see some greater advancements around then.

meta (still bulky, boxy) VR headset virtual desktop marketing

Apple Vision Pro (still pretty bulky ~ ski goggles) marketing:

XReal AIR / air2 XR glasses. microOLED 60hz. The device's rez is only 1080p on current models so that desktop rez pictured looks like more of a simulation/marketing here. Still it works and can also play games, e.g. act like a floating display for handheld gaming devices.

nreal marketing

. . . .

Personally for physical screens at my main desk I focus on a single screen for gaming (my 48cx for now), close to or within the 60 to 50 degree human central viewing angle. I can glance aside if I want to while gaming to either portrait monitor though, to check another app or whatever. I don't typically use the 48cx for anything besides media and games. I sit back somewhat farther or rotate my desk slightly if I'm doing things on the side screens. Three across using sides in portrait is a setup I enjoy a lot.

I'd love something like that ark setup I linked earlier, but an 8k version of the ark format. In that kind of setup I'd use the central one for desktop/apps more than I do the OLED. I'd decouple the screens from the desk and set them back farther than what is pictured below though, and would prob have to scale the 8k one up a bit considering that.

As it is, that setup is a lot like the virtual ones I put in quotes - but in physical form where you can get a lot higher resolution, hz, HDR, etc for now compared to the different goggles and glasses available but they should advance over the years. Mixed reality can offer a lot more than flat screens too, breaking the games out into the world like holograms eventually. Not that you'd have to run around a park like this guy but I found this vid interesting:

View: https://youtu.be/rfw92ENcoZg?t=148
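On the 50 to 60 degree central viewing angle mentioned above for the 48" screen: the matching seating distance is simple trigonometry. Here is a rough Python sketch of it. The assumptions are mine, not the poster's: a flat 16:9 panel with a 48" diagonal, the viewer centered on the screen, and the angle measured horizontally. The function names are made up for illustration.

```python
import math

# ASSUMPTIONS (not from the post): flat 16:9 panel, 48" diagonal,
# viewer centered, viewing angle measured across the screen width.


def screen_width(diagonal_in: float, aspect_w: float = 16, aspect_h: float = 9) -> float:
    """Width in inches of a flat panel with the given diagonal and aspect ratio."""
    return diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h)


def distance_for_angle(width_in: float, angle_deg: float) -> float:
    """Eye-to-screen distance at which the width spans angle_deg horizontally."""
    return (width_in / 2) / math.tan(math.radians(angle_deg) / 2)


width = screen_width(48)
near = distance_for_angle(width, 60)  # screen fills 60 degrees
far = distance_for_angle(width, 50)   # screen fills 50 degrees

if __name__ == "__main__":
    print(f'width: {width:.1f}"')
    print(f'sit between {near:.1f}" and {far:.1f}" from the panel')
```

Under those assumptions the panel is about 41.8" wide, and the 60 to 50 degree window works out to sitting roughly 36" to 45" back.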

I purchased the Alienware QD-OLED AW3423DW last week from Best Buy while it was on sale. I use it mainly for work and some gaming. So far with cleartype, the text isn't that bad. It just looks a bit blurry to me. I may try that MacType. After 20 hours, no burn in.

I hope it lasts. The faster refresh compared to my older IPS (75hz) and reduction of blurring with fast moving scenes makes it worth it in my opinion. I may change my mind by 2400 hours if/when burn in appears.

It's been about 5 months or so now and I still don't notice any burn in.

Zarathustra[H]

Extremely [H]

- Joined

- Oct 29, 2000

- Messages

- 38,878

My OLED isn't as fortunate. It lives a life of Excel, etc...

Mine (42" LG C3) lives in Word/Excel/Outlook/Corporate Web-App hell. Anywhere from 8-12 hours a day.

And thus far ~5 months in, it has been just fine. Not even a hint of any burn-in.

That said, I work in a basement office with no windows, and as such I keep the brightness down (because it is not needed, AND because I don't like my eye balls scorched) and I think this helps a lot.

I got the 5 year Best Buy coverage plan on it, so if anything ever does happen, I'll just have them take care of it. (provided they don't go out of business or something).

Only issue I've had is one stubborn intermittent "dead" pixel. It comes and it goes. I suspect it might be screen temperature related, visible when cold, and gone when warmed up, but I am not 100% sure. Thus far I am living with it, but if it gets worse or more appear, I may start talking to the Best Buy plan people.

jbltecnicspro

[H]F Junkie

- Joined

- Aug 18, 2006

- Messages

- 9,547

Best damn looking spreadsheets ever though, right?

Senn

Limp Gawd

- Joined

- Jul 29, 2021

- Messages

- 402

That said, I work in a basement office with no windows, and as such I keep the brightness down (because it is not needed, AND because I don't like my eye balls scorched) and I think this helps a lot.

Nah you're doing it wrong. You don't have better images if you're not staring at 1000+ nit HDR all day long.

Best damn looking spreadsheets ever though, right?

Well, they're definitely bigger on this thing.

The true blacks are great. And mostly I use a dark mode. However, for Excel, there doesn't seem to be one. At least when I looked before.

Mine (42" LG C3) lives in Word/Excel/Outlook/Corporate Web-App hell. Anywhere from 8-12 hours a day.

And thus far ~5 months in, it has been just fine. Not even a hint of any burn-in.

That said, I work in a basement office with no windows, and as such I keep the brightness down (because it is not needed, AND because I don't like my eye balls scorched) and I think this helps a lot.

I got the 5 year Best Buy coverage plan on it, so if anything ever does happen, I'll just have them take care of it. (provided they don't go out of business or something).

Only issue I've had is one stubborn intermittent "dead" pixel. It comes and it goes. I suspect it might be screen temperature related, visible when cold, and gone when warmed up, but I am not 100% sure. Thus far I am living with it, but if it gets worse or more appear, I may start talking to the Best Buy plan people.

Sweet! I also keep the brightness low when connected to my work machine. Minimal really. However, with RTX HDR this may no longer be the case when it's connected to my personal machine.

Mine arrived with some bad pixels along the edges. No big deal so far. Crossing fingers....

I run in HDR mode 100% of the time on my C2 but the SDR brightness slider in Windows is set to 5% (frankly leaving it at 0 is fine) as anything brighter is just too bright for the dark room I use it in.

Mine's on zero for my personal machine. Still debating on whether to leave it on all the time. Maybe though...

Sweet! I also keep the brightness low when connected to my work machine. Minimal really. However, with RTX HDR this may no longer be the case when it's connected to my personal machine.

So, I have the TV set up for ideal HDR performance, so the brightness on the TV itself is high, as it should be. However, since a large majority of the time I'm viewing my SDR desktop, the SDR content brightness slider is very low (as I explained above). This means that I don't have to touch anything when moving between SDR and HDR content; it's just handled automatically by Windows. The SDR desktop and UI elements are all low brightness for ease of viewing and burn-in prevention, but when I play an HDR video it's at full brightness, as expected of HDR content.

This works for me as it's the best of both worlds without having to turn something on and off depending on what I'm doing. I'm extremely grateful for that SDR brightness slider in Windows.

Zarathustra[H]

Extremely [H]

- Joined

- Oct 29, 2000

- Messages

- 38,878

Nah you're doing it wrong. You don't have better images if you're not staring at 1000+ nit HDR all day long.

I know you are being facetious, but I have to say, my deeply held opinion is that absolute brightness doesn't matter much (as long as you can control room brightness). Just set it to what is comfortable for your eyes. It is dynamic range that really makes HDR the killer feature that improves appearance. Small reflections and other parts of the screen can have sky-high peak brightness, while dark shadows can be close to true black. THAT is what makes HDR look good. Not blasting the shit out of your eyeballs with photons by cranking up the brightness.

Sweet! I also keep the brightness low when connected to my work machine. Minimal really. However, with RTX HDR this may no longer be the case when it's connected to my personal machine.

Mine arrived with some bad pixels along the edges. No big deal so far. Crossing fingers....

Personally I followed this guide to get some great settings for both SDR and HDR mode:

View: https://www.youtube.com/watch?v=jK_pchCK-5I

I think it is an excellent guide.

I see no need for HDR on the desktop for things like productivity work (Excel, Word, Solidworks, Minitab, Outlook, Web-Apps, stuff like that), web browsing, YouTube videos, etc. For that I keep it in SDR mode, as configured in the guide above, but with a minor tweak of turning down OLED Brightness to 25, as that's where my eyes are comfortable.

I turn on HDR for gaming only. I'd probably turn it on for movies/tv shows as well, but I don't use the PC this screen is hooked up to for that. My HDR mode is set pretty much exactly per the guide above.
