Navigation

Install the app

How to install the app on iOS

Follow along with the video below to see how to install our site as a web app on your home screen.

Note: This feature may not be available in some browsers.

More options

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

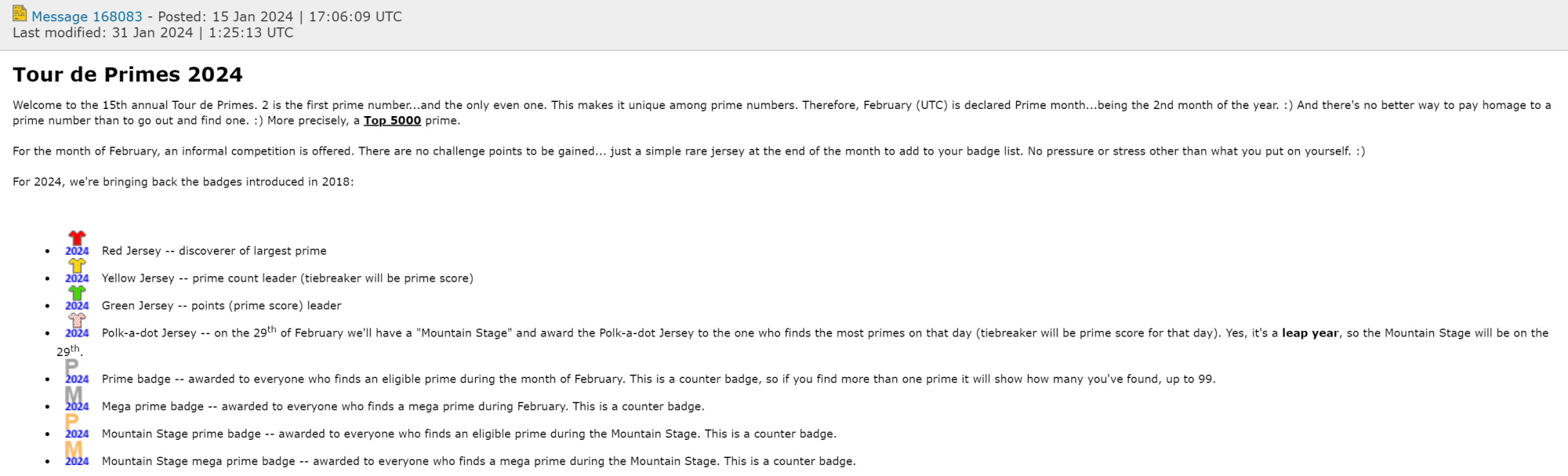

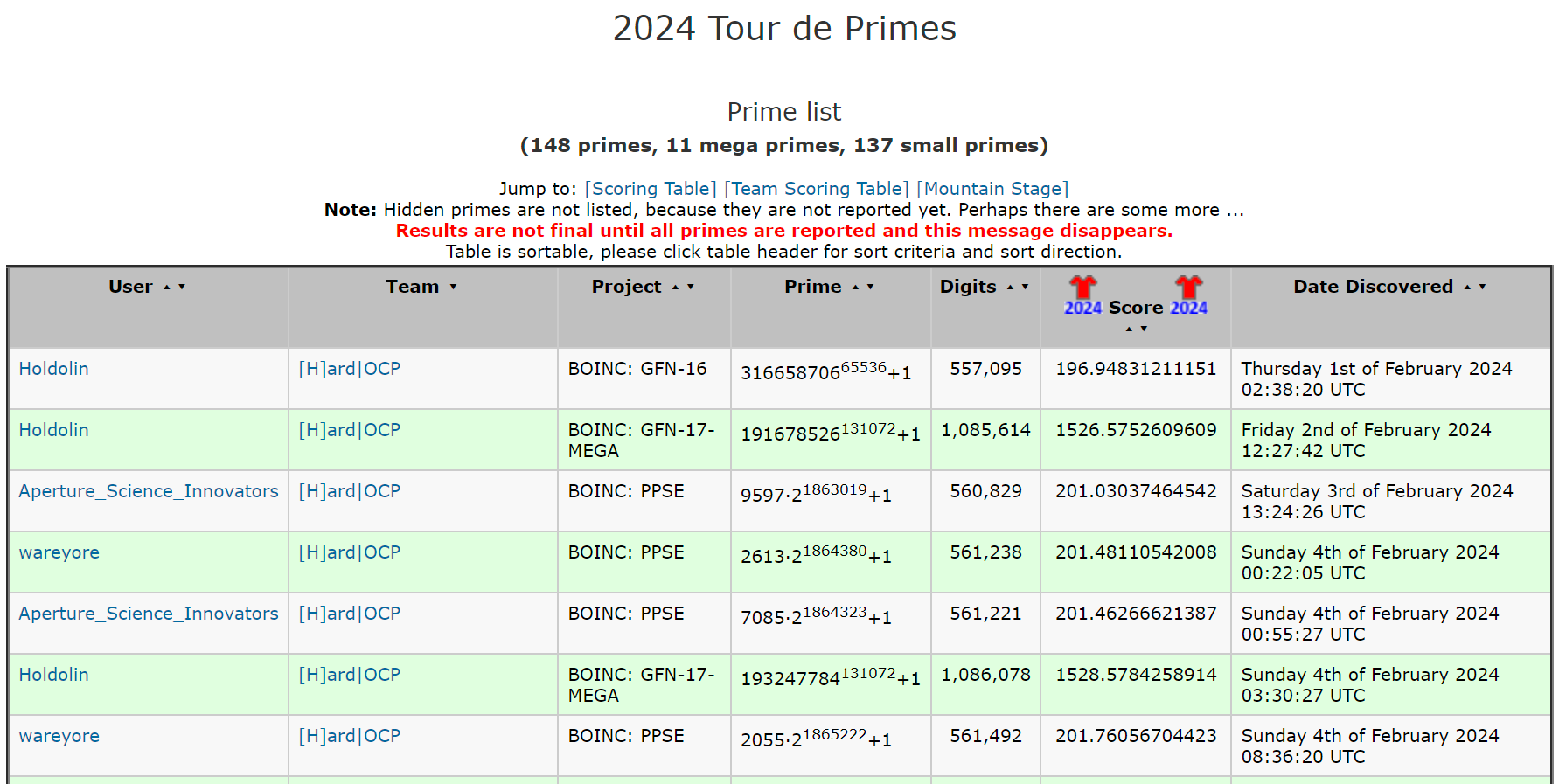

Tour de prime 2024

- Thread starter Holdolin

- Start date

wareyore

HDCOTY 2023

- Joined

- Jan 1, 2014

- Messages

- 1,890

Not many on [H] are crunching PG these days. You can see that in our overall participation in the PG Challenge series events. But there are a few regularly crunching it monthly, and there are only a few projects that won't qualify. So this is a good reminder of this event that runs through February. Thank you!

Not wanting to go off topic in the PG challenge series thread (which TdP is not included in): anybody else gonna chase more badges this year?

More info here and event specific stats here.

If you are participating in this, or any other BOINC projects, may as well sign up at Boincgames.com, too and double your fun.

Good luck everyone. I will do my usual: find the smallest prime for my P badge first (PPSE and GFN16), then if I am lucky move to a mega prime (GFN17 or PPS).

Then if I am lucky I will go for a large mega (SR5, GCW, TRP). Will see how it goes; on Mountain Stage day I'll give it all I've got. That's the plan anyway.

wareyore

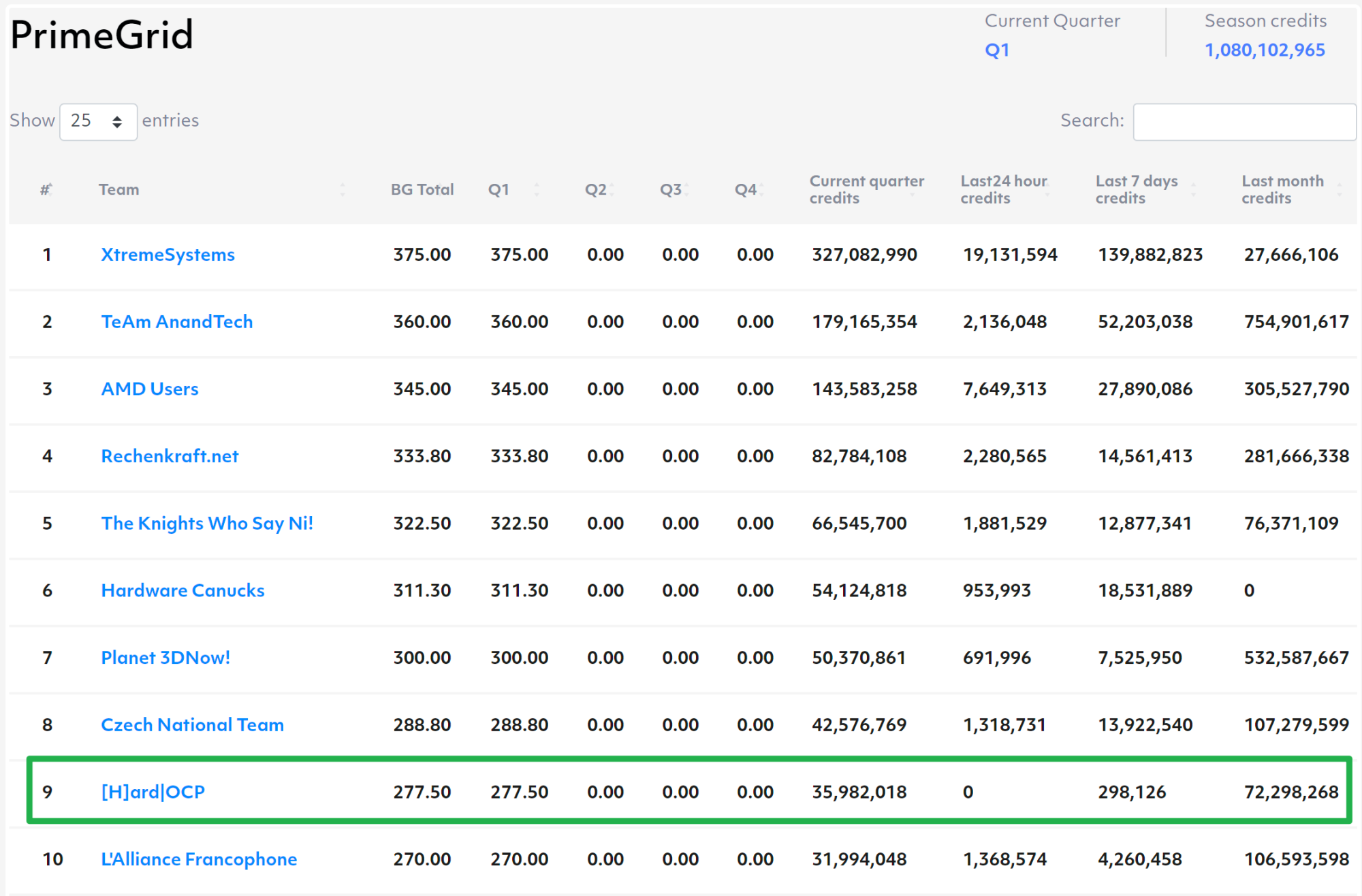

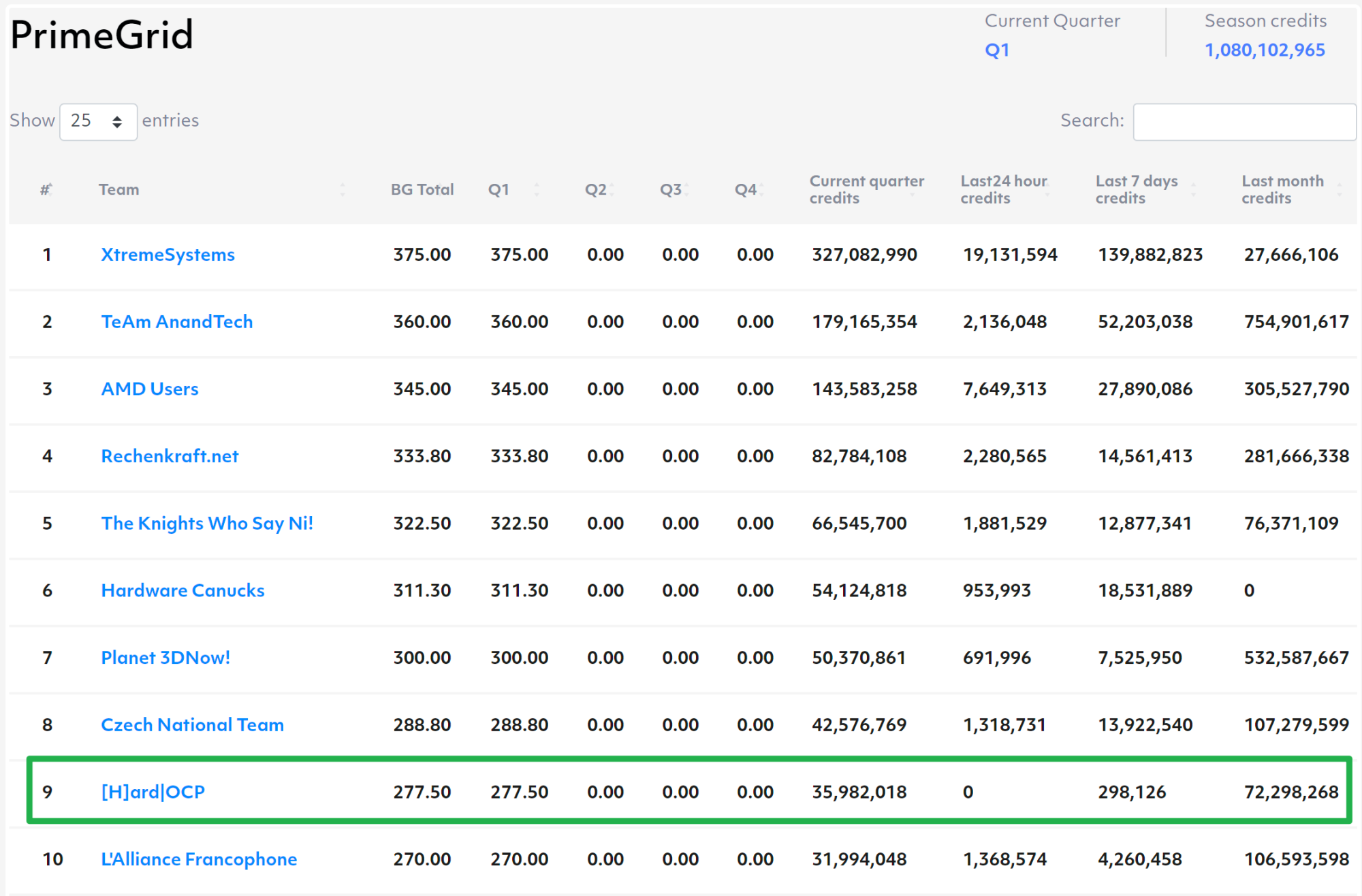

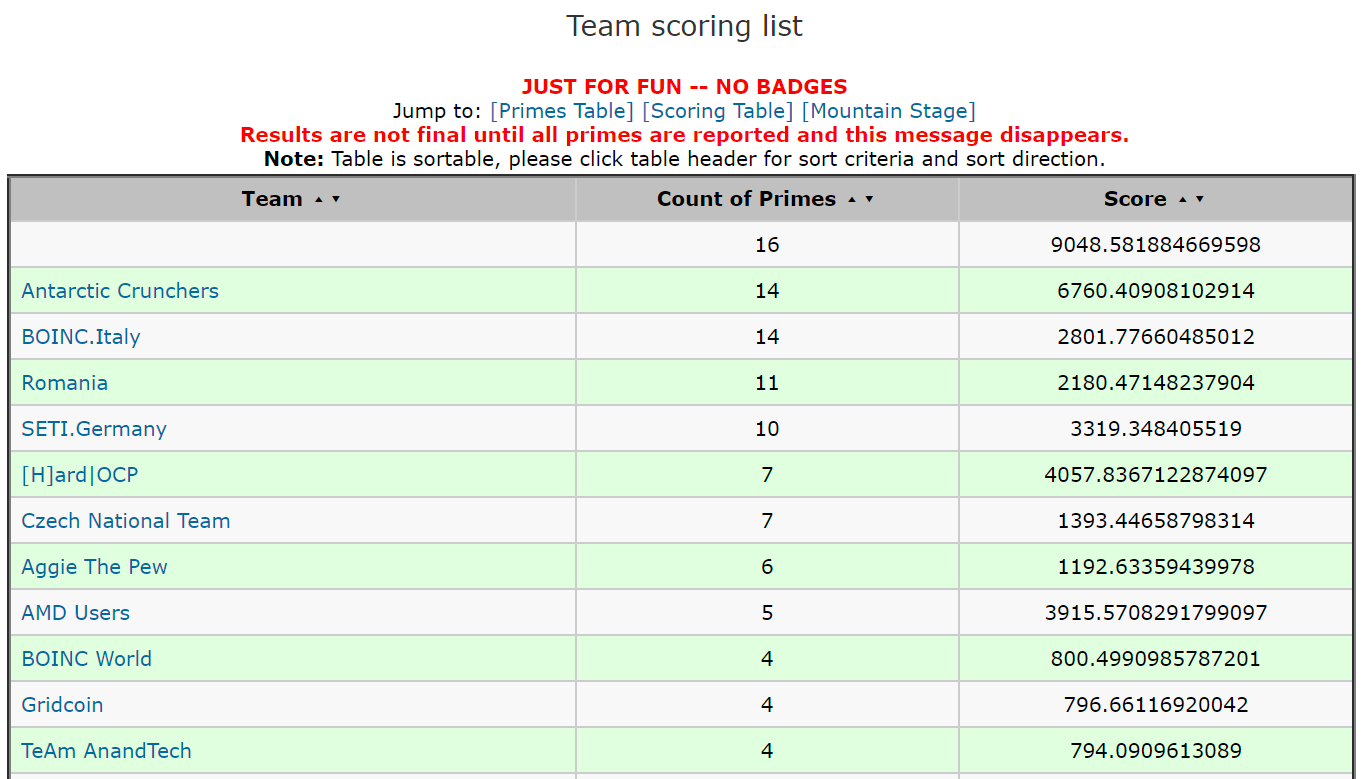

A good effort next month on the Tour could help bump us up in BG, too.

Provided everyone is registered there. I think 5th place is doable.

wareyore

Off and running.

wareyore

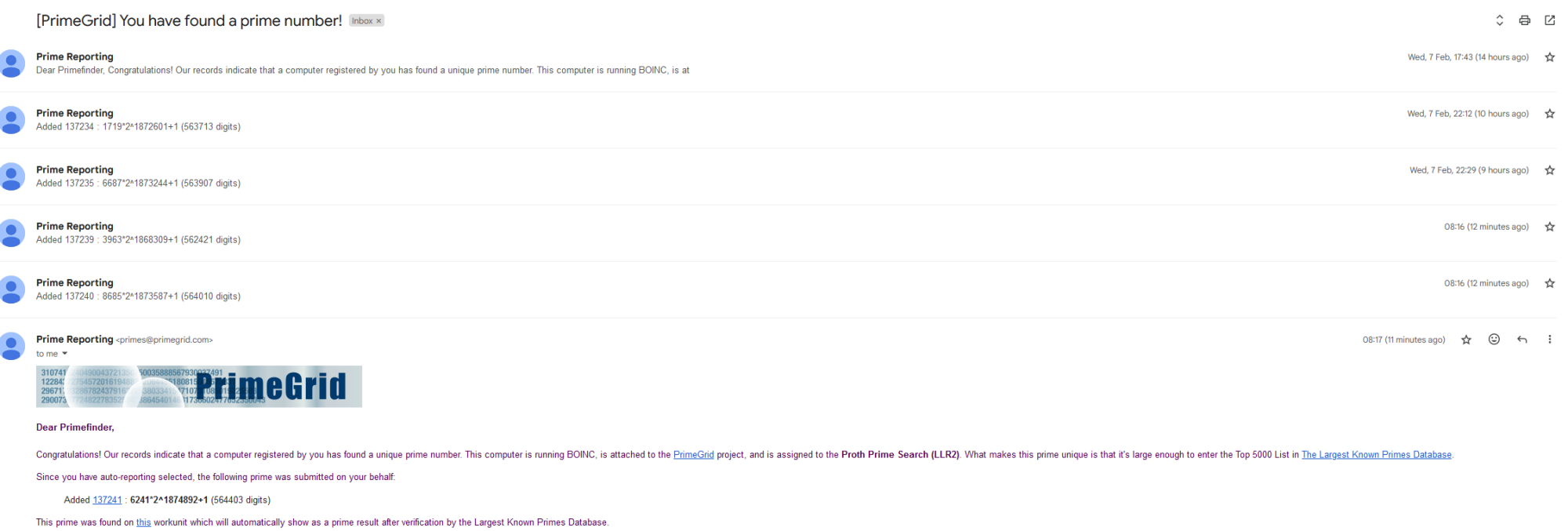

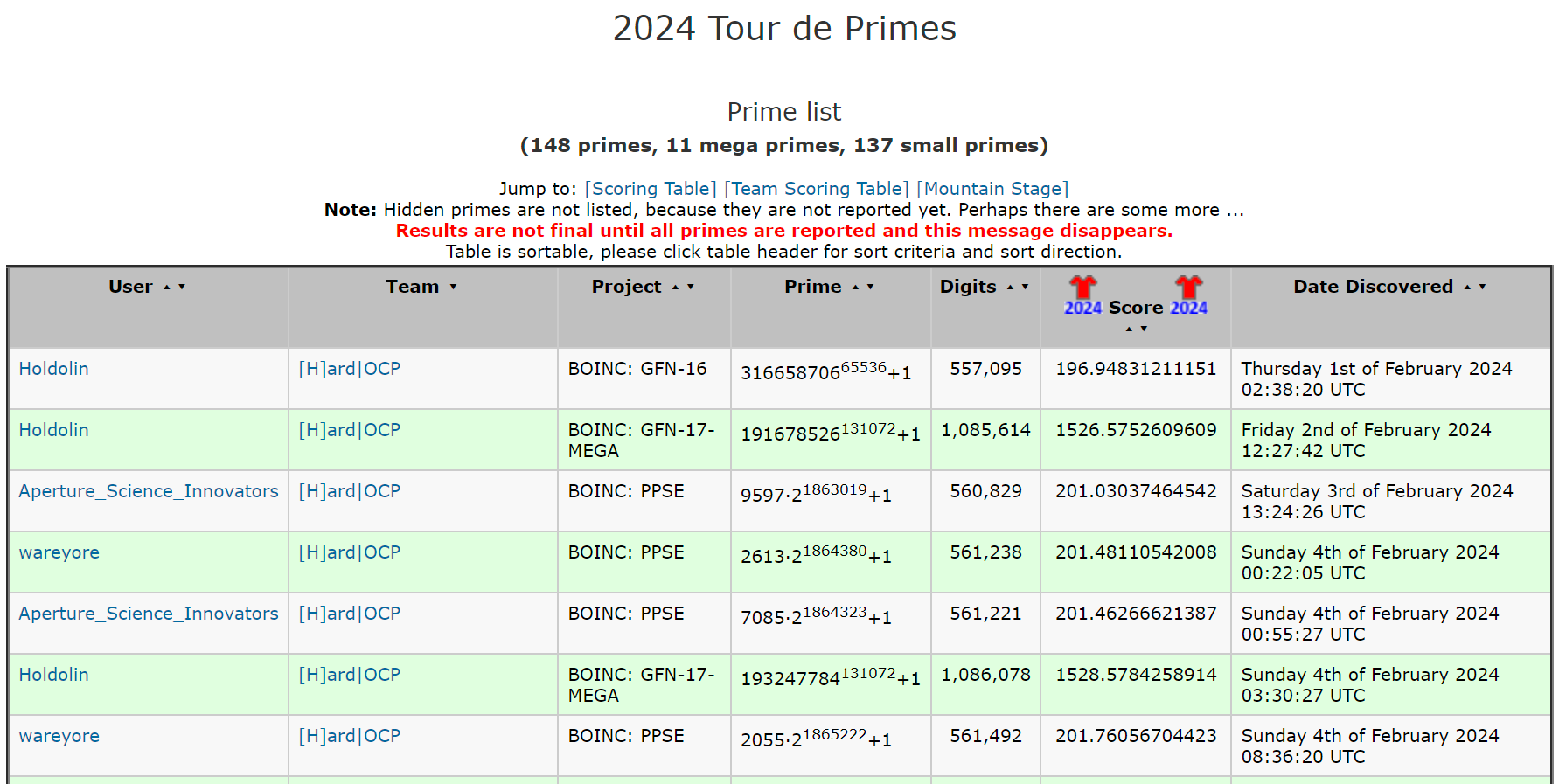

Somebody already got one:

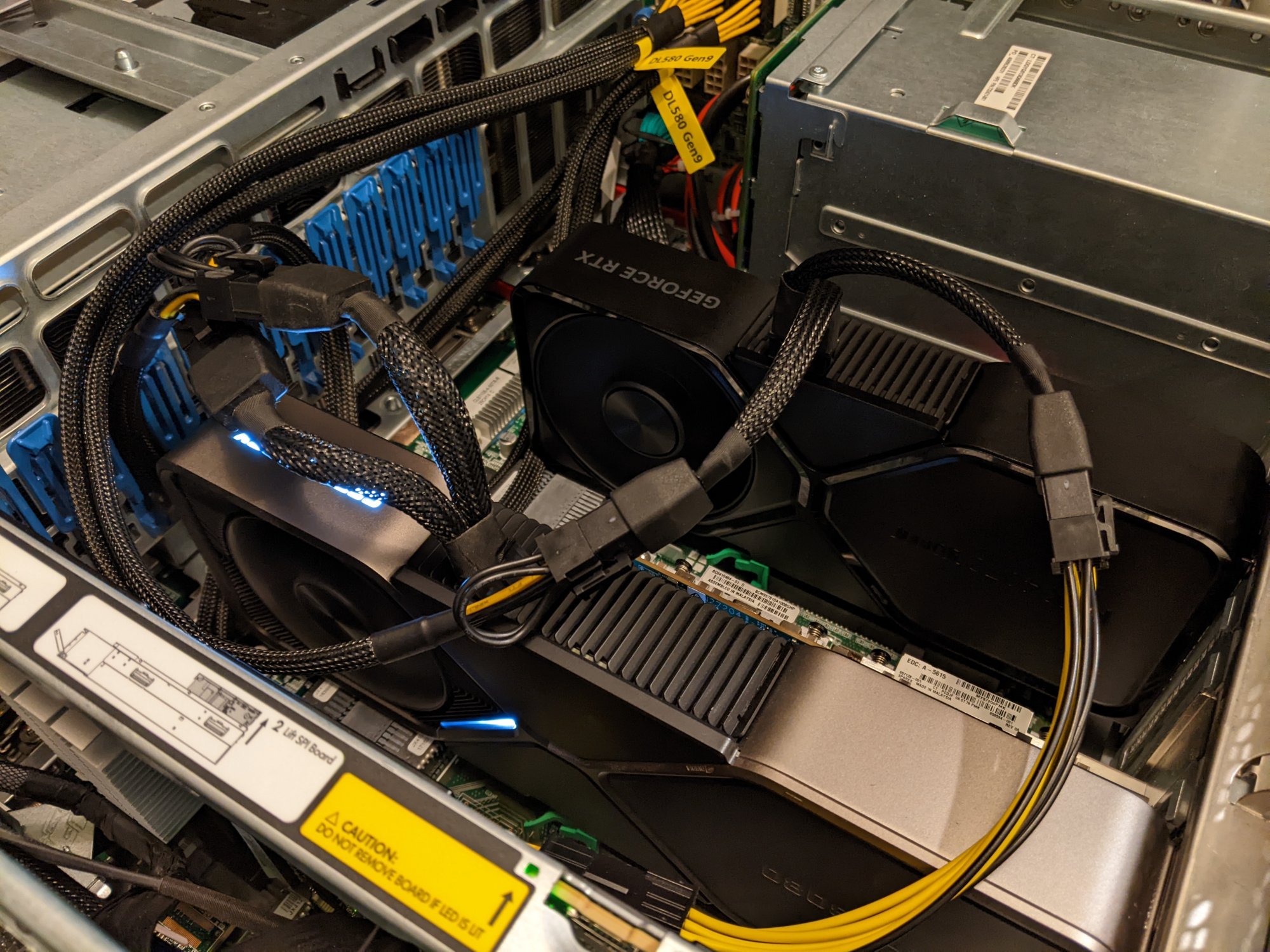

I need a dozen 4090's.

Silly me, I forgot to set my cache to keep as few WUs as possible so I had hours of work. That find was straight luck lol. Now I'm runnin the GFN-17s to let those 4090s stretch their legs. Hopin to snag a few mega's before all is said and done.

wareyore

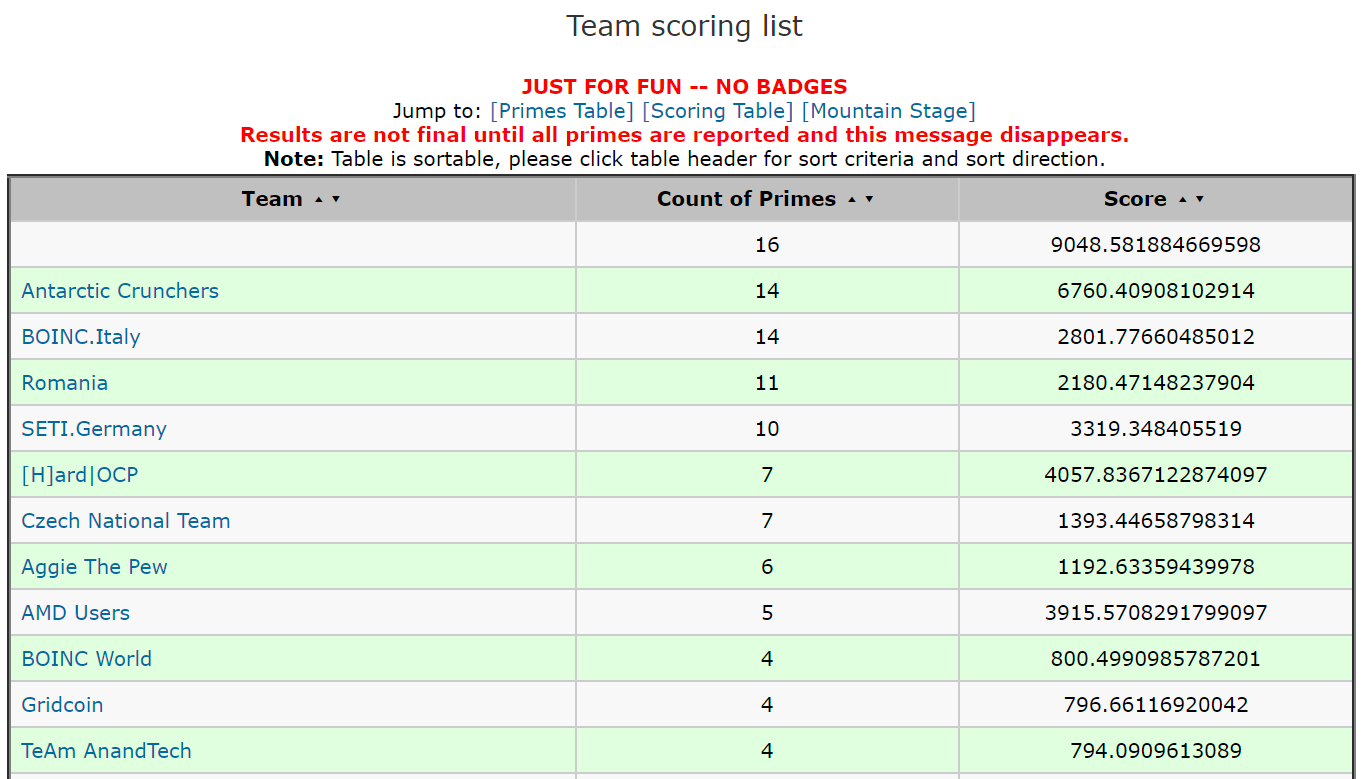

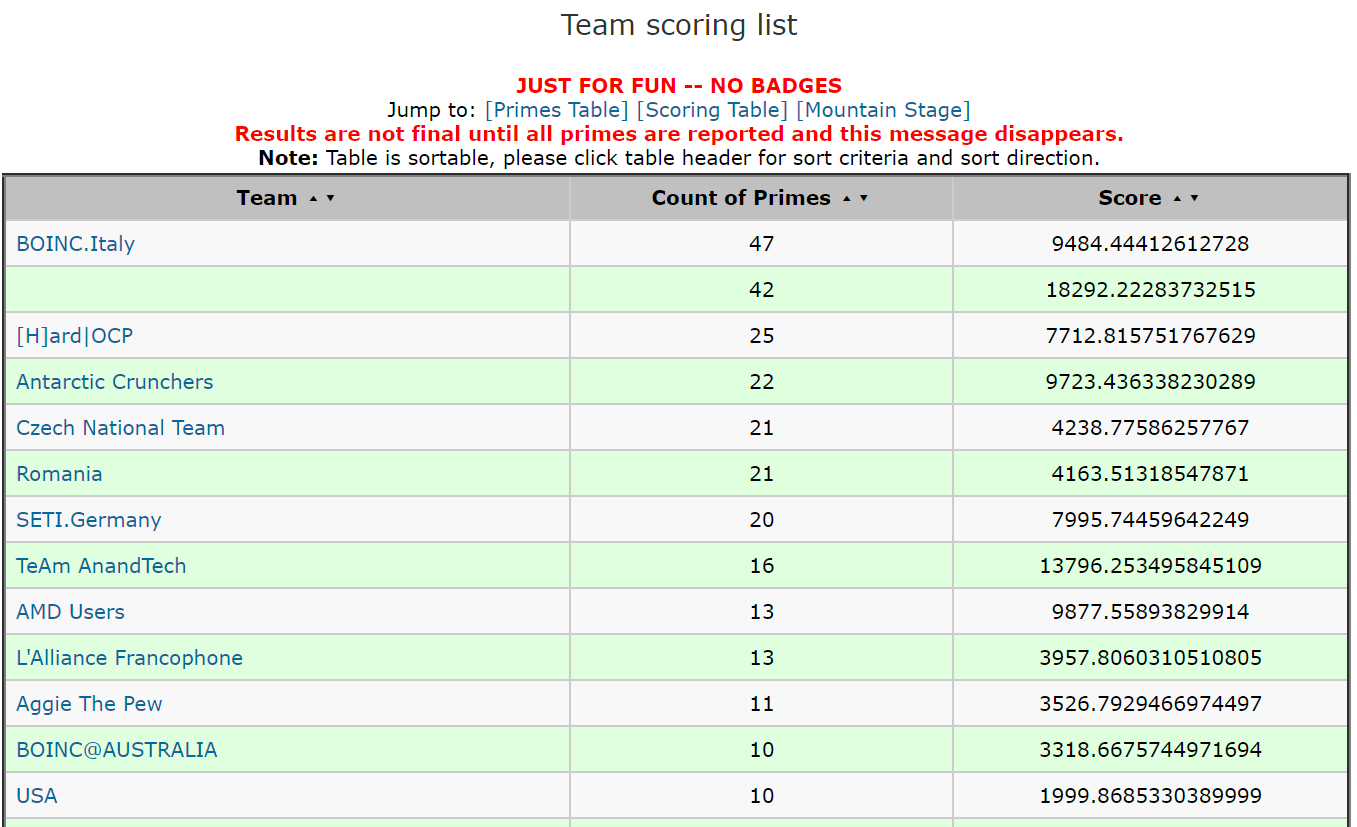

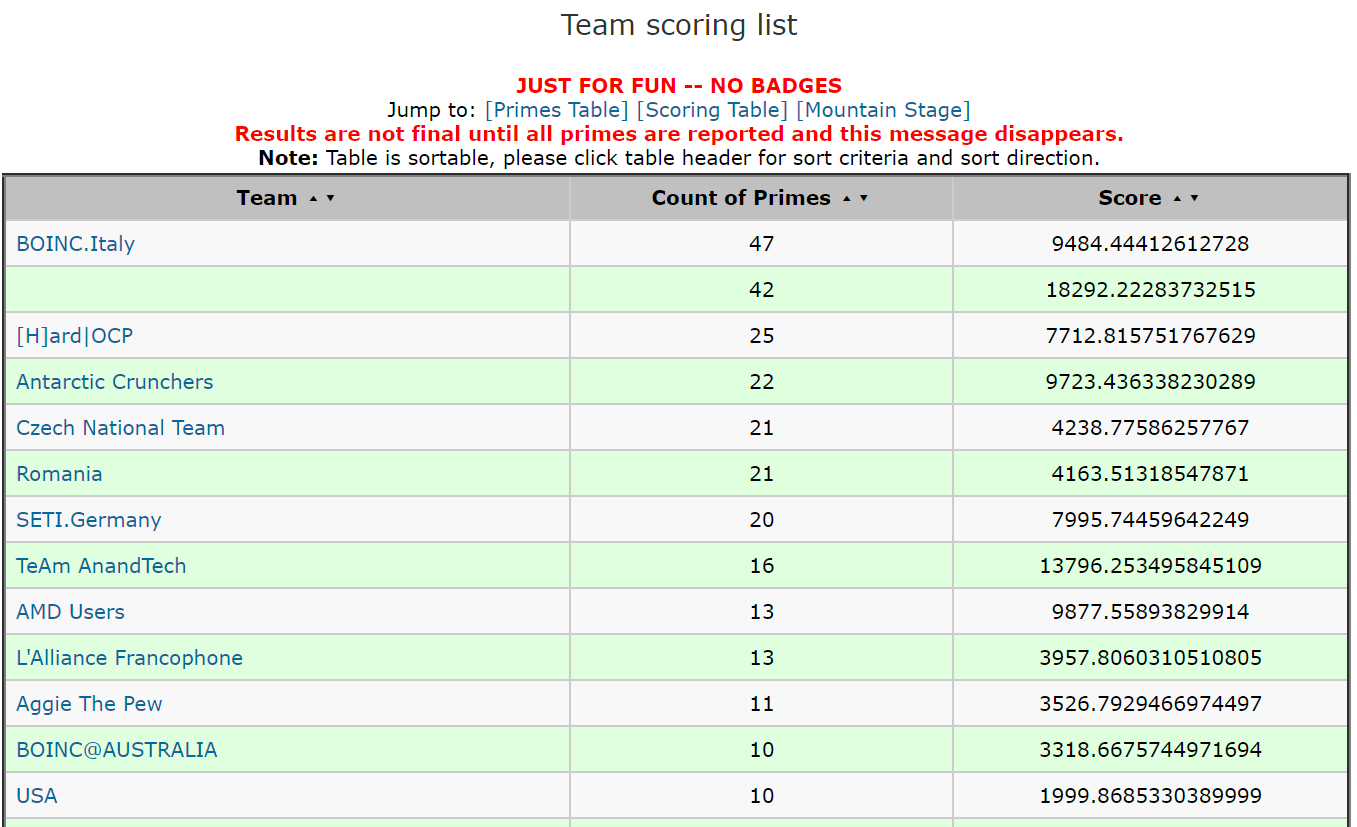

Not a competition or challenge, but they are keeping some stats.

They sure are. They're a great way to encourage participation as well, IMO.

Good work all around.

Holdolin I'm coming for you though, got another 7950X and a 4070S on the way. These monthly challenges are fun!

And unlike other DC projects I've done, it is awesome being able to see that you're finding primes and actually doing something, rather than making amorphous progress where it's not clear what you're accomplishing.

May or may not have made some bad choices inspired by Holdolin. Found a "cheap" 4090 and have that on the way too. We'll see how it chews through tasks when it gets here.

OMG, just don't tell my wife. She already calls me a bad influence.

wareyore

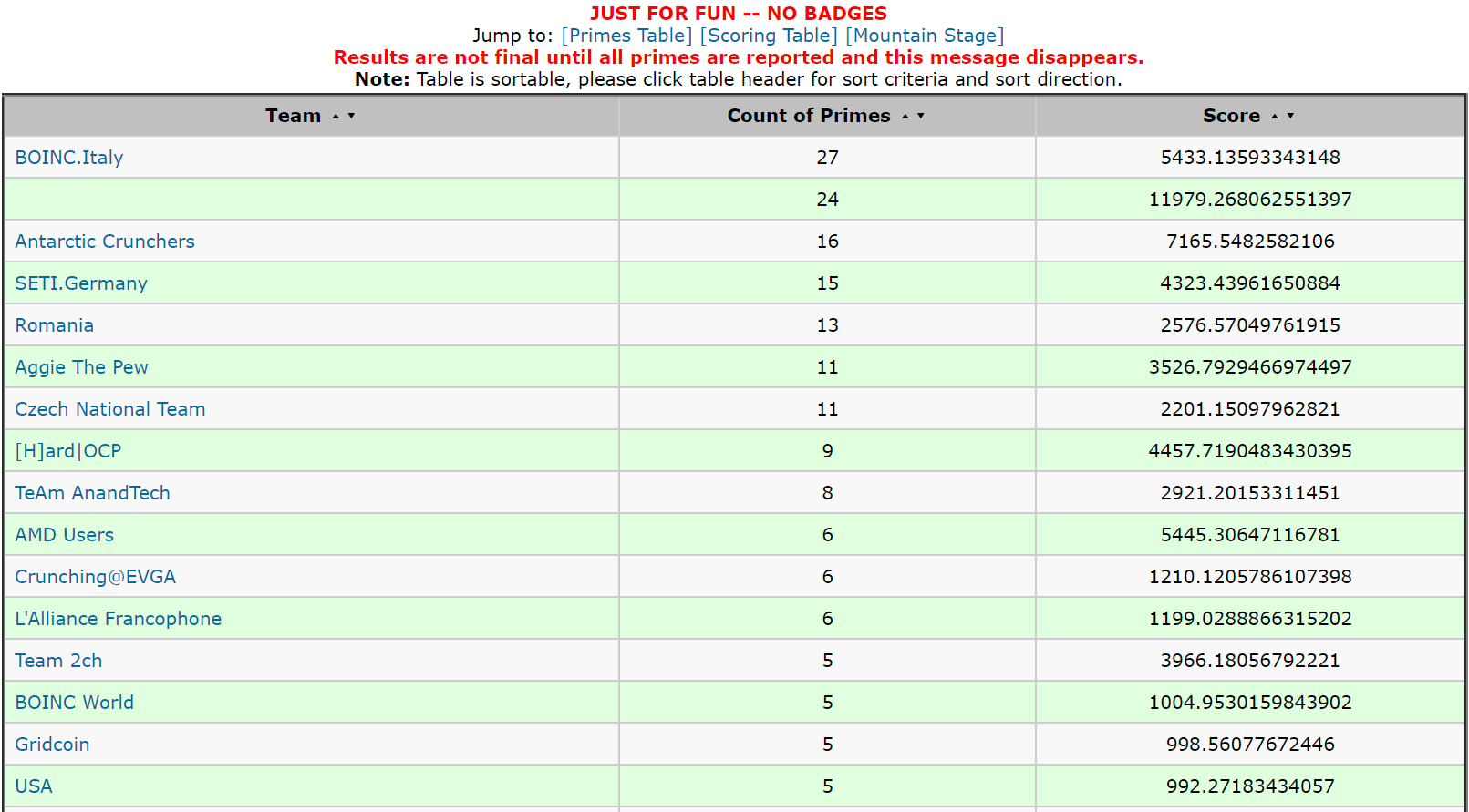

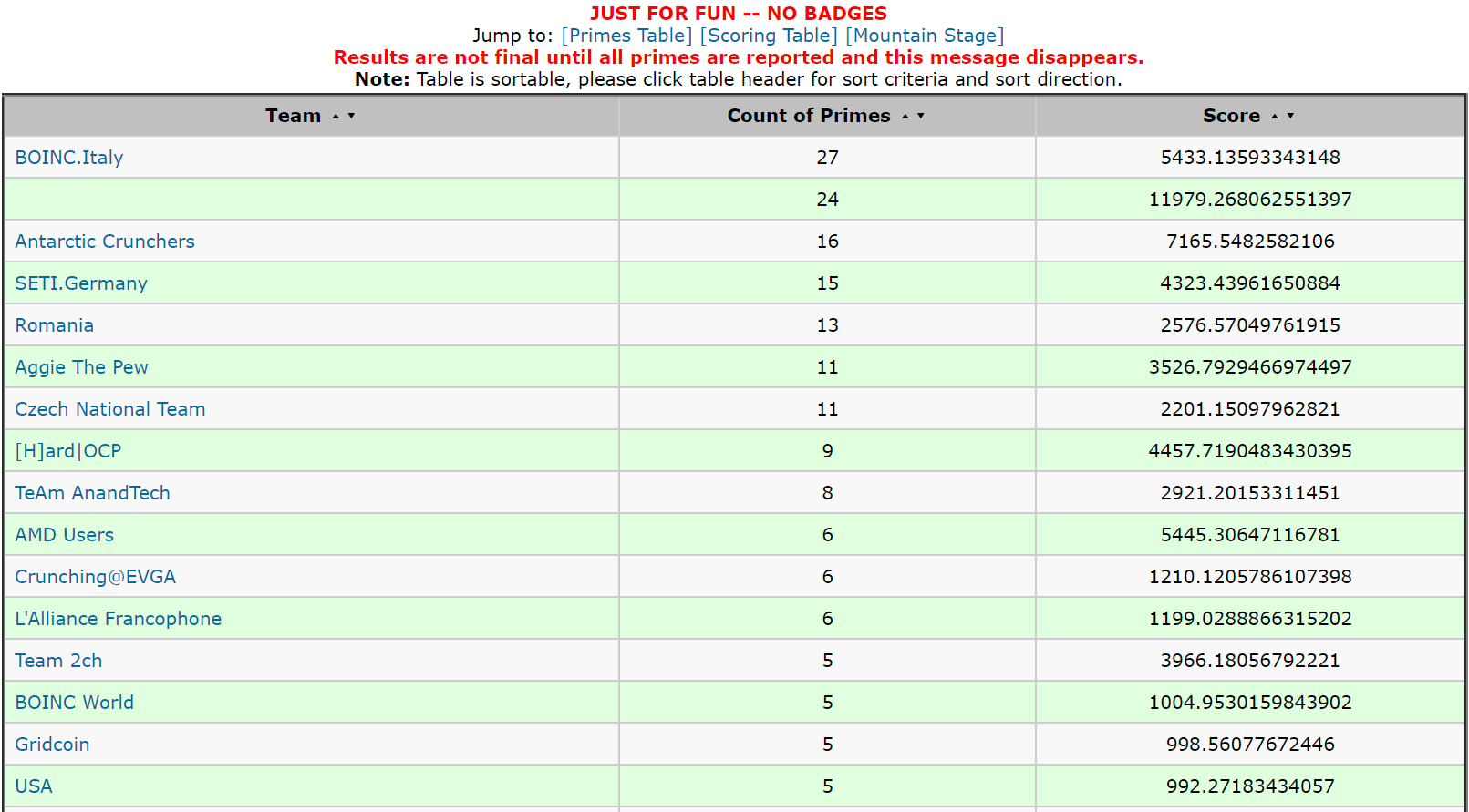

Those Italians ate their Wheaties this week.

4070S is here and dropped in next to the 3080. Nice card. Faster than the 3080, 2/3 the power draw, and much smaller. Got it running under the desk until tomorrow to make sure no issues crop up, and then down to the basement. Interestingly, at least on Linux, the nV drivers seem not sure what to make of it:

+---------------------------------------------------------------------------------------+
| NVIDIA-SMI 545.29.06              Driver Version: 545.29.06    CUDA Version: 12.3     |
|-----------------------------------------+----------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |         Memory-Usage | GPU-Util  Compute M. |
|                                         |                      |               MIG M. |
|=========================================+======================+======================|
|   0  NVIDIA GeForce RTX 3080        Off | 00000000:44:00.0  On |                  N/A |
| 50%   72C    P2             316W / 320W |    957MiB / 10240MiB |    100%      Default |
|                                         |                      |                  N/A |
+-----------------------------------------+----------------------+----------------------+
|   1  NVIDIA Graphics Device         Off | 00000000:C4:00.0 Off |                  N/A |
| 41%   69C    P2             204W / 220W |    216MiB / 12282MiB |    100%      Default |
|                                         |                      |                  N/A |
+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+
| Processes:                                                                            |
|  GPU   GI   CI        PID   Type   Process name                            GPU Memory |
|        ID   ID                                                             Usage      |
|=======================================================================================|
|    0   N/A  N/A      1811      G   /usr/lib/xorg/Xorg                          100MiB |
|    0   N/A  N/A      2439      G   xfwm4                                         4MiB |
|    0   N/A  N/A      4443      C   ...rid.com/genefer22g_linux64_23.07.00      418MiB |
|    0   N/A  N/A      4640      C   ...rid.com/genefer22g_linux64_23.07.00      418MiB |
|    1   N/A  N/A      1811      G   /usr/lib/xorg/Xorg                            4MiB |
|    1   N/A  N/A      4499      C   ...rid.com/genefer22g_linux64_23.07.00      200MiB |
+---------------------------------------------------------------------------------------+

"NVIDIA Graphics Device"...weird. But BOINC still seems content with it. Already updated from the 535 to the 545 drivers tonight, so I'll wait a bit and see if it finds a more updated one in a week or two.

Yeah, it's interesting the way that it goes in waves like that. I got a couple a day for a few days (all PPSE), then nothing at all for a few days, and then a batch again. Naturally started a dry spell right when TdP started, but seem to have been making up for it since then.

wareyore

I'm split 50/50 PPSE and GFN 16 at 2 each.

Wrapping up a system on F@H today. May move it to GFN 17, maybe. What's the best shot at a Mega?

For a GPU, I would think GFN-17. Meets the 1M digit cutoff for a mega, but a "smaller" mega so odds of finding one seem better. For a CPU, I'd expect PPS for the same reason.
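For anyone curious where that 1M-digit line falls: a GFN-n candidate has the form b^(2^n) + 1, so the digit count depends only on the base b. Below is a rough Python sketch of that arithmetic. The function names are made up for illustration (nothing here comes from PrimeGrid), and it leans on floating-point logarithms, so treat results right at a digit boundary as approximate.

```python
import math

MEGA_DIGITS = 1_000_000  # a "mega" prime has at least one million decimal digits


def gfn_digits(b: int, n: int) -> int:
    """Approximate decimal digit count of b**(2**n) + 1."""
    # digits(x) = floor(log10(x)) + 1; the trailing "+ 1" in the GFN form
    # does not change the digit count for the bases considered here.
    return math.floor((2 ** n) * math.log10(b)) + 1


def min_mega_base(n: int) -> int:
    """Smallest base b for which b**(2**n) + 1 reaches one million digits."""
    b = math.ceil(10 ** ((MEGA_DIGITS - 1) / 2 ** n))
    while gfn_digits(b, n) < MEGA_DIGITS:  # guard against float rounding
        b += 1
    return b
```

With these definitions, `min_mega_base(17)` comes out at roughly 42.6 million, while `min_mega_base(16)` is on the order of 10^15, so a GFN-16 would need a vastly larger base to count as a mega.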

Ya, that's what I have my systems hunting. Damn, those are big numbers lol.

wareyore

Those Italians are still chewing through it.

I dunno what's up with my GPUs, but I'm just having (what seems like) unbelievably bad luck finding anything with them. 19.5k GFN-16 tasks, 17k GFN-17 tasks, and not a single prime from them. Every find so far is still from the PPSE tasks.

I feel ya. Got half a dozen 4090s runnin GFN-17 and I ain't hit a prime in a week. Just bad luck.

I think it's because wareyore is stealing all of our primes...

I'm still ahead of him by 3 right now, but losing ground. The 4090 is supposed to be here this afternoon, and then it can start earning its keep.

Holdolin What subprojects are you running on the 4090s/how do you have them configured? I am running a mix of GFN-16 and GFN-17 and struggling to keep it busy. I have tried running everywhere from 1 WU at a time to 12 at a time (I tried 1 at a time, 2, 4, 8, and 12), and the most I've managed to get out of it is ~55% of TDP (240W or so) and a claimed 90% GPU utilisation (both as reported by GPU-Z and by nvidia-smi). It seems like if it's barely cracking 200W (216 right now as I type this up) that it's probably leaving the GPU far more idle than it's reporting, and I'm not getting good utilisation out of it. Are you running bigger GFN projects? Are you seeing a higher apparent load? I (very briefly) had it running a mix of GFN-16/17 & PPS-Sieve last night until the PPS-Sieve tasks ran out, and in that configuration it was doing somewhere around 420-440W, so I know that PG is capable of forcing more out of it.
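For comparing configurations like the ones described above (1, 2, 4, 8, or 12 WUs at a time), it can help to log power draw against the card's power limit instead of eyeballing it. A minimal Python sketch, assuming `nvidia-smi` is on the PATH and that the card reports `power.draw` and `power.limit` (some cards return "[N/A]" for these, which this sketch does not handle). The helper names are hypothetical.

```python
import subprocess

QUERY_FIELDS = "name,power.draw,power.limit,utilization.gpu"


def parse_gpu_csv(text: str) -> list[dict]:
    """Parse `--format=csv,noheader,nounits` output into one dict per GPU."""
    gpus = []
    for line in text.strip().splitlines():
        name, draw, limit, util = [field.strip() for field in line.split(",")]
        draw_w, limit_w = float(draw), float(limit)
        gpus.append({
            "name": name,
            "power_w": draw_w,
            "limit_w": limit_w,
            # Share of the power limit actually being drawn, in percent.
            "power_pct": round(100 * draw_w / limit_w, 1),
            "util_pct": float(util),
        })
    return gpus


def read_gpus() -> list[dict]:
    """Run nvidia-smi once and return the parsed readings."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_csv(out)
```

Calling `read_gpus()` every few seconds while switching between task counts gives a rough picture of which setup keeps the card closest to its limit. A high reported utilisation alongside a low `power_pct` is the mismatch described in the post.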

I'm just running straight GFN-17s. I started TdP on the 16s, but my cards spent way too much time doing nothing. The 17s seem to keep the cards busy most of the time, and in scouting my peers it seems few are running 4090s, so my theory is that if I get handed a prime to crunch, I should be the finder and somebody else the checker. See, IMO, since this is TdP, the object of the game is to get the prime first, which to some extent means taking a, how shall I say, less-than-ideal approach to computing. Thus, I have my cache set to next to nothing, with the idea that as soon as a given number is handed to me, I'm ready to crunch it. While it might be a bit more efficient to run 2 concurrent 17s, for the here and now it's about speed more than efficiency. If this is a little less than clear, please ask for any needed clarification, as this is a pre-coffee post.

Wow, apparently the game has changed since I been gone. It looks like now, instead of a double checker they're using 'proof tasks' so every find is a first. So small caches and inefficient crunching are no longer needed. Guess that's what I get for taking a couple years off lol. Lemmie do some research on optimizations for 4090-based crunchers and we can compare notes

I believe it doesn't work like that. I am pretty sure that with the proof tasks (which I was asking about in the main forums: https://www.primegrid.com/forum_thread.php?id=10463) everyone is "first" -- it just depends on whether you're lucky enough to get the main tasks or the proof tasks. The proof tasks, as you said though, do run the GPUs very nearly idle (they're so short that by the time the GPU has gotten fired up and running, they're done -- I am quite literally seeing runtimes of 1-4 seconds for the GFN16/17 on the 4090).

lol yep. I was JUST reading that on their forums and made a second post. Now at least I know what these super short jobs my CPU is getting are. I do PPS on my daily driver's CPU when I'm not actually working on it.

FWIW I've found that running PPS/PPSE on my main systems makes no noticeable impact on overall responsiveness. The "bigger" tasks (like SoB/ESP) seem to be hammering the CPU in a way that does make things noticeably laggy, but PPS fades into the background fine. At least on Windows. Harder to tell on the Linux boxes since I use them over SSH & don't feel responsiveness hits in quite the same way.

I'm feelin the same. About the only thing I've noticed is if I start doing heavier work, my crunching slows down a bit, as it should. Even then, I'm only talking a few seconds per WU. Not a big deal in my book. I've also spent a day testing the effectiveness of enabling SMT. After about a thousand WUs each way, I've seen a ~4.5% output increase with SMT enabled. While not exactly thunderous gains, I'm looking at what happens when you start adding that to multiple systems, especially if I were to start building Threadripper-based systems. The 64-core systems with that 3D cache have enough cache to run 64 PPS/PPSE concurrently without a prob. Why? Because according to Michael over on their site, they have a 10-year cache of PPS work. Sounds like a challenge to me.

Edited to add:

I didn't wanna say much about a challenge in their forums, as I'm not getting the sense they enjoy a little trash talk like we do 'round here hehe.

I don't have any of the 3D-cache EPYC CPUs because I'm not made of that much money, but I do have a couple of older EPYC-based systems. This one has been chewing through 128 PPSE tasks at a time:

Architecture:        x86_64
CPU op-mode(s):      32-bit, 64-bit
Address sizes:       43 bits physical, 48 bits virtual
Byte Order:          Little Endian
CPU(s):              128
On-line CPU(s) list: 0-127
Vendor ID:           AuthenticAMD
Model name:          AMD EPYC 7H12 64-Core Processor
CPU family:          23
Model:               49
Thread(s) per core:  1
Core(s) per socket:  64
Socket(s):           2

The SM board is not entirely happy with how hard it runs the CPUs... I've got a pair of fans on the VRMs, a box fan pointed at the system, and even so, if the room is much above 60C, I have to power-limit the CPUs in the BIOS so it doesn't thermal throttle. But it is quick.

Interesting. I wonder if my systems have just been too badly starved for memory bandwidth, but I've mostly found that disabling SMT seems to result in better output (half as many tasks, but each one takes less than half the time). Now that I have a second 7950X system, I am hoping to do some more apples-to-apples comparison between one with HT on and the other with it off.
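The SMT question comes down to simple throughput arithmetic: tasks finished per day is the number of tasks running side by side divided by how long each one takes. A small Python sketch of that comparison follows; the function names and the runtimes in the usage note are invented for illustration, not measurements from this thread.

```python
SECONDS_PER_DAY = 86_400


def tasks_per_day(concurrent: int, seconds_per_task: float) -> float:
    """Steady-state throughput when `concurrent` tasks run side by side."""
    return concurrent * SECONDS_PER_DAY / seconds_per_task


def smt_gain_pct(threads_on: int, secs_on: float,
                 threads_off: int, secs_off: float) -> float:
    """Percent change in output from enabling SMT (negative means SMT hurts)."""
    on = tasks_per_day(threads_on, secs_on)
    off = tasks_per_day(threads_off, secs_off)
    return 100 * (on - off) / off
```

As a made-up example, 32 threads at 1000 s per task against 16 threads at 450 s per task gives `smt_gain_pct(32, 1000, 16, 450)` of about -10%, the "less than half the time" case where SMT off wins. If the SMT-off runtime were 522.5 s instead, the same call would show about +4.5% in favour of SMT.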

That would be my thinking. Not having the 3D cache, you're pushing some of the work out into RAM, which is much slower. This is why I spend so much of my day perusing forums and doing general research. When I started back with PG, I spent days going through the forums. It's great they give so much info to us as donors, such as how much cache a given WU requires. That lets us plan our builds and configs accordingly. A great example is the thread on ARM crunching. It's actually simpler on the Rapi than the Opi, as with the Rapi you just set the thread count to 4 and walk away. If you do that with the Opi, you end up in the mud, as the system sees 8 cores and will try to do 2 concurrent WUs, and that just chokes things. You also have to create/edit an app_config.xml to limit it to 1 concurrent task. Sorry, I know I get long-winded, but I like not only the science we're crunching for, but the science of how to get the most we can out of each system we have. I'll hush now hehe.
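For reference, an app_config.xml that caps an app at one concurrent task is only a few lines. Here is a hedged Python sketch that generates one using BOINC's `<app>` / `<max_concurrent>` elements. The app name `example_app` is a placeholder, since the real short name depends on the project and subproject, and the exact settings recommended in the ARM thread are not reproduced here.

```python
import xml.etree.ElementTree as ET


def build_app_config(app_name: str, max_concurrent: int) -> str:
    """Return app_config.xml text that caps one app at `max_concurrent` tasks."""
    root = ET.Element("app_config")
    app = ET.SubElement(root, "app")
    ET.SubElement(app, "name").text = app_name
    ET.SubElement(app, "max_concurrent").text = str(max_concurrent)
    ET.indent(root)  # pretty-print; requires Python 3.9+
    return ET.tostring(root, encoding="unicode")


# "example_app" is a placeholder: substitute the app's short name
# as the project reports it.
print(build_app_config("example_app", 1))
```

The resulting file goes in that project's folder under the BOINC data directory, and the client picks it up after a restart or after re-reading its config files.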

In my days of running WCG I discovered that memory bandwidth was pretty much irrelevant there. I had a couple of systems running 64 or 80 WUs (2x 16-20c CPUs + HT) on 1 stick of RAM per CPU, or even one stick of RAM shared for both CPUs. PG does not like that, lol. It does make it interesting when there is more to optimise and try to min-max.

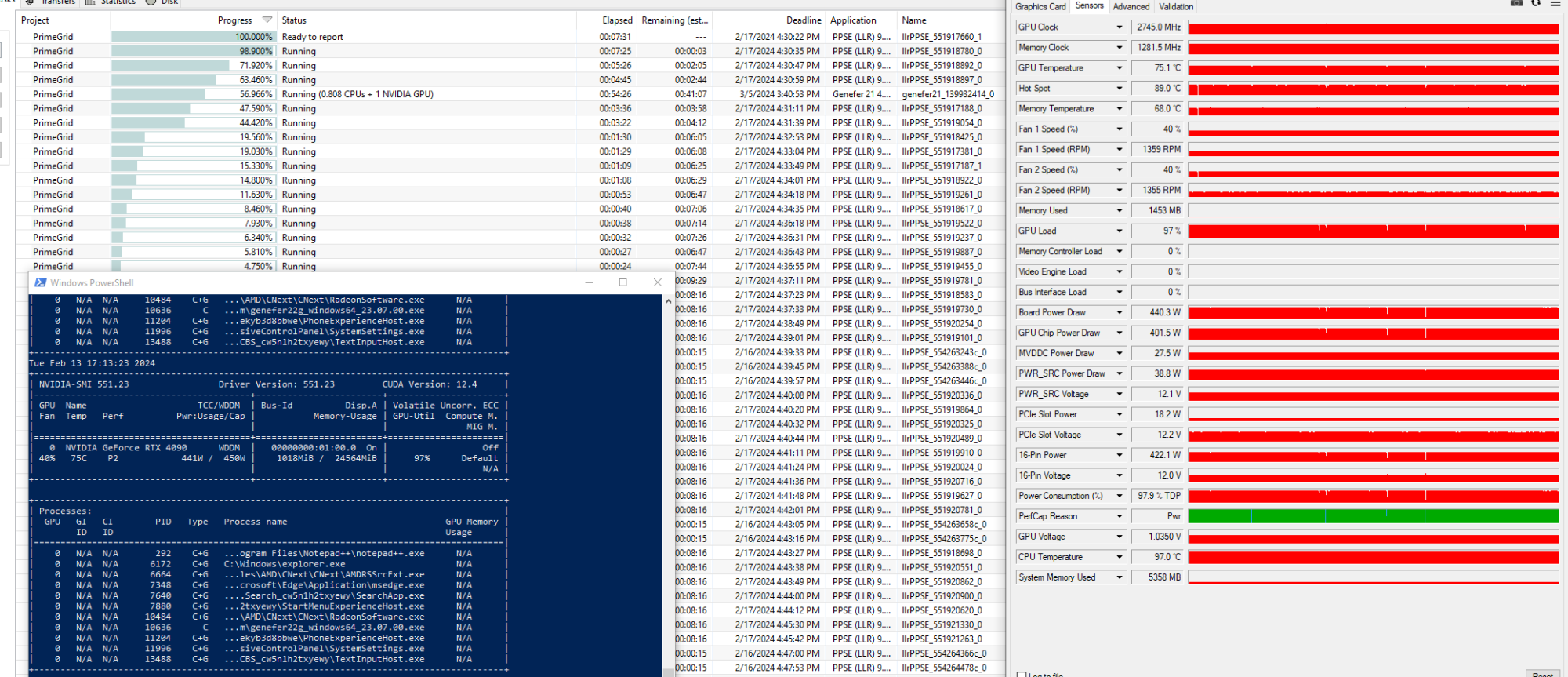

To answer my own question...the larger tasks do a better job keeping the GPU full. GFN-21 task is running it at nearly 100% of TDP indefinitely:I'm just running straight GFN-17's. I started TdP on the 16's, but my cards spent waay to much time doing nothing. The 17's seem to keep the cards busy most of the time and in scouting my peers it seems few are running 4090s so my theory is if I get handed a prime to crunch I should be the finder and somebody else the checker. See, IMO being the fact this is TdP the object of the game is to get the prime first, which to some extent means taking a, how shall I say, less than ideal approach to computing. Thus, I have my cache set to next to nothing with the idea as soon as a given number is handed to me, I'm ready to crunch it. While it might be a bit more efficient to run 2 concurrent 17's, for the here and now it's about speed more than efficiency. If this is a little less than clear please ask for any needed clarification, as this is a pre-coffee post

I'm still putting it back on GFN16/17 for now because I'd rather have a shot at more primes for the duration of TdP, but after the contest finishes I'll play around a bit with the AP27 & otherwise toss it on larger GFN tasks.

Thanks for posting the info. When TdP is over I'll prolly give my GPUs a bit meatier set of tasks as well, but for now imma just let things sail as-is.
