erek ([H]F Junkie, joined Dec 19, 2005, 10,918 messages)

The GeForce 256 was a great card for its time, no doubt, but in what way could it possibly lay claim to being "the first gaming GPU"? Honestly, that's a pretty stupid statement. I had an Nvidia TNT2 Ultra prior to getting a GeForce 256, and while the GeForce 256 was certainly an upgrade, it's not as if the TNT2 Ultra wasn't also a GPU...

Seems like a click-bait article aimed at those who weren't even alive back then.

> If you read the article

I'm just going to stop you right there.

> If it was the first GPU overall, then why specify gaming GPU? Still seems like clickbait, as the Voodoo Banshee I had was a video card (GPU) that came out before the GeForce 256.

Technically it was a display adapter and 3D accelerator. Buzzwords.

> I have my two GeForce 256s, an SDR and a DDR model. Neither seemed that great at the time, and they were not supported for very long. My GeForce 2s, on the other hand, got used a lot, and I have them in several of my retro systems. Much better and more useful cards.

Not great at the time? I had the SDR and it slayed Quake 3 when it released; IIRC it was a huge upgrade over the TNT.

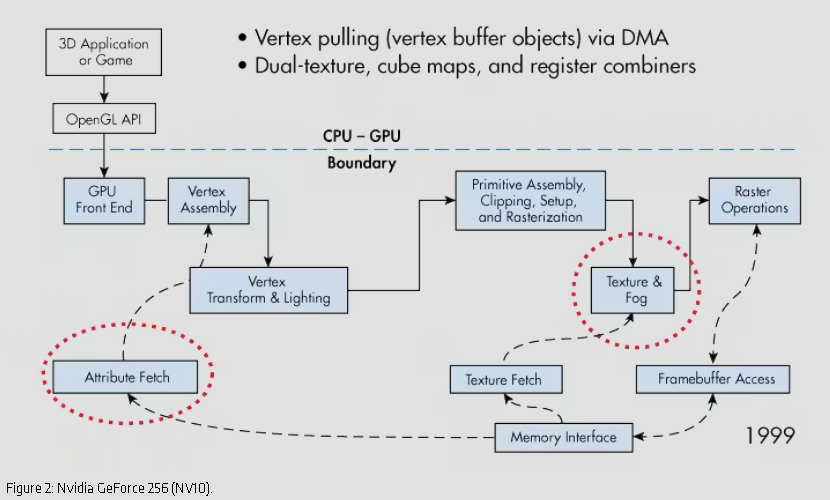

> The GeForce 256 was a great card for its time, no doubt, but in what way could it possibly lay claim to being "the first gaming GPU"? Honestly, that's a pretty stupid statement. I had an Nvidia TNT2 Ultra prior to getting a GeForce 256, and while the GeForce 256 was certainly an upgrade, it's not as if the TNT2 Ultra wasn't also a GPU...
>
> Seems like a click-bait article aimed at those who weren't even alive back then.

It's the first GPU with hardware T&L (transform and lighting), which was the game-changing aspect of it. I think you can make an argument for the claim of "first gaming GPU" using this standard. Before this, the main CPU still had to do the T&L calculations. The GeForce 256 eliminated that.

> It's the first GPU with hardware T&L, which was the game-changing aspect of it. I think that you can make an argument for the claim of "first gaming GPU" using this standard. Before this the main CPU still had to do the T&L calculations. GeForce 256 eliminated that.

I mean it comes down to semantics, really. I would consider the Voodoo series to be gaming GPUs: they are a unit that processes graphics, and I used them for games.

> I mean it comes down to semantics, really. I would consider the Voodoo series to be gaming GPUs, they are a unit that processes graphics, and I used them for games.

Interestingly, I have my Voodoo 3 3000 paired with an AMD Athlon XP 2800+, and it performs worlds better than it did when I actually used it on a Pentium II and III back in the day. It's an unrealistic CPU to use with it, but it absolutely frees the card from any CPU limitations. I run it overclocked at 183 MHz, and it runs the UT99 timedemo at 74 FPS at 1024x768 and at about 36 FPS at 1280x1024. That's way above what benchmarks of the time got on that card with period-correct hardware.

> Not great at the time? I had the SDR and it slayed Quake 3 when it released; IIRC it was a huge upgrade over the TNT.

Sure, it beat some of the old cards when it came out, but it was quickly outdated. Plus, while it worked well on the OpenGL shooters, that was about all it was good at. If you didn't play shooters, it didn't do much for you. The GeForce 2 was an all-around better card.

> Not great at the time? I had the SDR and it slayed Quake 3 when it released; IIRC it was a huge upgrade over the TNT.

It was awesome, but at the time it was severely starved of memory bandwidth (even with DDR). The GF2 opened the floodgates with crappy compression but was still starved. I think that's why it had more longevity, but it's not like the GF3 didn't destroy the GF2 as well. We're talking about a time with tighter upgrade cycles.

> GeForce 256 was when the term "GPU" was first introduced by Nvidia. Yes, it was really more a marketing thing than anything else, but that was the card first given that description.

"GPU" was technically first used by Sony in the original PlayStation, though it was a loose definition at the time. It still relied on a coprocessor in the CPU for the geometry transforms.

> I mean it comes down to semantics, really. I would consider the Voodoo series to be gaming GPUs, they are a unit that processes graphics, and I used them for games.

The Voodoo cards still leaned on the CPU for the geometry stage (transform and lighting), which projects the 3D elements into 2D screen space before the card rasterizes them. The GeForce 256 was the first consumer video card to take that stage off the CPU, and it set the standard going forward. 3dfx never added hardware T&L to their video cards, which is one of the reasons they fell behind the competition. They were adamant that the CPU would always be faster at processing the geometry stage as technology advanced.

> 100 years from now: Remembering the first PC gaming GPU, the RTX 4090.

The card with more compute power than the whole planet 50 years ago.

> I mean it comes down to semantics, really. I would consider the Voodoo series to be gaming GPUs, they are a unit that processes graphics, and I used them for games.

I get what you're saying and I respect that. I do, however, feel that being the first GPU to include T&L sets it apart from its forefathers. Before this inclusion, the CPU was still doing some of the heavy lifting. Not all of it, obviously, and that's where the old graphics accelerators saved the day, but shifting the geometry transformations away from the CPU, so that the card could do the transforms, the lighting, and the pixel filling, opened up a whole world of possibilities.
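For anyone wondering what "T&L" actually covers, here is a minimal sketch of the per-vertex work that the posts above describe moving from the CPU to the card. It is only an illustration under simple assumptions (one vertex, one directional light, a bare-bones perspective matrix); every function and variable name is hypothetical and not taken from any real driver or API.

```python
# Hypothetical sketch of fixed-function "transform and lighting" for one vertex.
# All names here are made up for illustration.

def mat_vec(m, v):
    """Multiply a 4x4 matrix (list of rows) by a 4-component vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]

def translation(tx, ty, tz):
    """Build a 4x4 translation matrix."""
    return [[1, 0, 0, tx],
            [0, 1, 0, ty],
            [0, 0, 1, tz],
            [0, 0, 0, 1]]

# Assumed minimal perspective matrix: camera looks down -z, so w becomes -z.
SIMPLE_PROJECTION = [[1, 0, 0, 0],
                     [0, 1, 0, 0],
                     [0, 0, 1, 0],
                     [0, 0, -1, 0]]

def diffuse(normal, light_dir):
    """Lambert diffuse term: max(0, N . L), assuming both are unit vectors."""
    return max(0.0, sum(n * l for n, l in zip(normal, light_dir)))

def transform_and_light(vertex, normal, model_view, projection, light_dir):
    """Transform one vertex to normalized device coordinates and light it."""
    eye = mat_vec(model_view, [vertex[0], vertex[1], vertex[2], 1.0])
    x, y, z, w = mat_vec(projection, eye)
    ndc = (x / w, y / w, z / w)  # perspective divide
    return ndc, diffuse(normal, light_dir)

result = transform_and_light(
    vertex=(1.0, 1.0, 0.0),
    normal=(0.0, 0.0, 1.0),
    model_view=translation(0.0, 0.0, -2.0),  # push the vertex 2 units away
    projection=SIMPLE_PROJECTION,
    light_dir=(0.0, 0.0, 1.0),               # light pointing at the surface
)
print(result)
```

A game runs this kind of math for every vertex in every frame, which is why doing it in dedicated hardware freed up so much CPU time.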

> I get what you're saying and I respect that. I do, however, feel that the first GPU to include T&L sets it apart from its forefathers. Before this inclusion, the CPU was still doing some of the heavy lifting. Not all of it obviously, and that's where the old graphics accelerators saved the day, but shifting the geometry transformations away from the CPU so that the card could do the transforms, lighting, and the pixel filling; opened up a whole world of possibilities.

Yeah, I understand the distinction, and it's fair. I just think it's kind of silly to presume that a unit that processes graphics can't be called a GPU because it doesn't process "all" of the graphics. I dunno. It's not a serious issue to me, it's just sort of goofy.

> Yeah I understand the distinction, and it's fair, I just think it's kind of silly to presume that a unit that processes graphics can't be called a GPU because it doesn't process "all" of the graphics? I dunno. It's not a serious issue to me, it's just sort of goofy.

Yep, I get that. I agree. I mean, the old Tseng cards can be considered gaming GPUs too, right? I think they had the fastest DOS 2D performance.

> I get what you're saying and I respect that. I do, however, feel that the first GPU to include T&L sets it apart from its forefathers. Before this inclusion, the CPU was still doing some of the heavy lifting. Not all of it obviously, and that's where the old graphics accelerators saved the day, but shifting the geometry transformations away from the CPU so that the card could do the transforms, lighting, and the pixel filling; opened up a whole world of possibilities.

By that same token, one could argue the GeForce 3 was more important because it introduced programmable shaders. The GeForce 256 with its fixed-function T&L would seem like a dinosaur in comparison.

> There are many "firsts" when it comes to the history of Nvidia.

There are even more firsts (and earlier ones) when you leave the Nvidia name out. But this is [H], so I'm not sure if we're allowed to talk about that.

> My friend got one of these on release and played a lot of Half-Life on it. I wasn't that impressed with it, tbh. Another friend had a Voodoo2 SLI system which I thought looked a little better, but the 256 was faster for sure. I loved my Riva TNT in '98, and the TNT2 when that dropped in '99. I finally got a GeForce2 GTS after the TNT2, which I thought was a great card too; I still have it. I have a GeForce2 Ultra in my collection now too; that was a pricey card back in the day! The 460 MHz memory clock on a 128-bit bus meant insane memory bandwidth for the time. The Ultra was pretty badass.
>
> EDIT: Stugots - yes, the Riva 128 did pre-date the 256. It did Direct3D OK but had no OpenGL support. The TNT pre-dates the 256 also, and I loved that card: full OpenGL support, and it slayed Quake 2 with my Abit BH-6 and celery 450 combo (which ran closer to 460 with a bus clock tweak; some of them ran at 512!). The TNT2 Ultra also pre-dates the 256 by a few months, and that was a great card. I felt no need to get a 256 with my TNT2 Ultra on the same Abit system shredding Quake 2 frames. It was so fast!

The Riva 128 was originally Direct3D only, but it did get full OpenGL support. I know because I bought one when they first came out.
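To put the GeForce2 Ultra figures quoted above (460 MHz memory clock, 128-bit bus) into numbers, here is a rough back-of-the-envelope calculation. It assumes the 460 MHz figure is the effective (DDR) transfer rate and ignores any real-world overhead, so treat the result as a theoretical peak; the function name is made up for this sketch.

```python
# Rough peak memory bandwidth estimate (theoretical, no overhead modeled).
# Assumption: the quoted clock is the *effective* transfer rate in MHz.

def peak_bandwidth_gb_s(effective_clock_mhz, bus_width_bits):
    """Return peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return effective_clock_mhz * 1e6 * bytes_per_transfer / 1e9

# 460 million transfers per second, 16 bytes each: roughly 7.36 GB/s
print(peak_bandwidth_gb_s(460, 128))
```

Plugging in other cards' clocks and bus widths makes it easy to see why memory bandwidth kept coming up in reviews of that era.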

> The Riva 128 was originally only Direct3d, but it did get full opengl support. I know because I bought one when they first came out.

Interesting. I remember it was a bummer for Quake 2 because of that OpenGL thing. Maybe it was only software support?

> the GeForce 256 was the first time I questioned my alliance to 3DFX, the GeForce2 was when I switched... still miss 3DFX dearly though

Nvidia bought the 3dfx patents and hired their engineers and some execs. There's still some 3dfx magic in the Nvidia cards.

> the GeForce 256 was the first time I questioned my alliance to 3DFX, the GeForce2 was when I switched... still miss 3DFX dearly though

I wish Nvidia would sell its gaming GPUs under the 3dfx brand and keep the current branding for all the rest.

GeForce 256 was when the term "GPU" was first introduced by Nvidia. Yes, it was really more a marketing thing than anything else, but that was the card first given that description.

> 'GPU' terminology aside, wouldn't something like the Riva 128 pre-date this? I'm sure there were some predating the Riva 128 too.

The first “3D accelerators” that I remember were the S3 Virge, Rendition Verite, PowerVR (?), and a little later the 3dfx Voodoo. I was big into Mechwarrior 2, and the Verite was the one I dreamed about at the time. I would have wanted the Voodoo, but my PC didn’t have an add-in video card, so I didn’t want to shell out 300 bucks for a 3D accelerator and still have to buy a 2D video card. I was too broke to get any of them, though, and ended up waiting a few years for a Pentium 2 with a Riva TNT as my first VPU (video processing unit as some people called them at the time). I could run Thief and Half-Life at 1280 x 960 resolution, and couldn’t imagine anything looking more realistic than that.

> The first “3D accelerators” that I remember were the S3 Virge, Rendition Verite, PowerVR (?), and a little later the 3dfx Voodoo. I was big into Mechwarrior 2, and the Verite was the one I dreamed about at the time. I would have wanted the Voodoo, but my PC didn’t have an add-in video card, so I didn’t want to shell out 300 bucks for a 3D accelerator and still have to buy a 2D video card. I was too broke to get any of them, though, and ended up waiting a few years for a Pentium 2 with a Riva TNT as my first VPU (video processing unit as some people called them at the time). I could run Thief and Half-Life at 1280 x 960 resolution, and couldn’t imagine anything looking more realistic than that.

Oh, my sweet summer child.

I never had the original GeForce, got a Radeon LE instead, but I jumped back onto the NVIDIA bandwagon with the GeForce 3 and kept that for years until a discounted Radeon 9800 Pro tempted me back to team red.

> The term 'gpu' was floating about long before the geforce, nvidia didn't introduce it at all.

Nvidia was the first to create a GPU in the sense we use the term today, and as far as I know that is the only claim regarding 'GPU' that's been made. The term "graphics processing unit" had been used before, but those chips performed only a limited portion of the work. They were first to use the term, but they cannot make the same claim to fame that Nvidia can.

> That's like Apple laying claim to the term 'MHz myth' back when they were doing little vids showing how the P4's long pipeline affected its performance. That was a term long before Apple latched on to it, despite what Jobs says in this vid: 'we've given it the name MHz myth'.

Yeah, and they didn't explain the term very well either. Clock speed does matter when comparing CPUs of the same family (design), where the only difference is clock speed. It's clock speed between different designs that cannot be compared.