So we've had some abstract convos about this in the past, but I'm finally ready for a GPU upgrade (from my 1080 Ti), and I'm hoping for either affirmation of my conclusion, or some input on an alternative if I'm missing something.

Before I mention the conclusion I've come to, some backstory for those who don't know, to explain my criteria/caveats: I live completely off-grid in a remote part of the Appalachian mountains, 40 or so minutes from the nearest gas station, let alone a grocery store. I don't have electric service. I produce my own, either from solar or from a generator when solar isn't sufficient, since this is also one of the highest-precipitation areas of the country. So optimizing electrical consumption is way up there in terms of my priorities. Pure FPS per input watt isn't necessarily king here so much as balancing bang-for-buck against minimizing unnecessary power consumption, and that's specifically why I'm seeking a second opinion: I know this can be more complex than looking at the raw TDP at a given target FPS.

Final caveat: I'm trying to stay below 320W, since that's the largest DC-powered Pico-style ATX PSU I can find that can be fed directly from my 48V battery bank. I'll be using one dedicated 320W-max PSU for the GPU only, with another powering my MB/CPU/etc. I'm mentioning this only so there isn't any confusion, not asking for input on whether it works or is a good idea (it does, and it is, for me). That said, I don't really trust these PSUs to their "rated" wattage, at least not continuously, so leaving some overhead feels prudent.
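To put rough numbers on the "overhead" idea, here's a quick sketch. It assumes you treat the PSU as safe for about 80% of its label continuously (that derating factor is my own guess, not a spec for any particular unit) and uses approximate reference board-power figures for the cards in question. Partner cards and transient spikes can exceed these, so check the specific model you'd actually buy.

```python
# Rough headroom check for a GPU-only 320W DC PSU.
# ASSUMPTION: DERATE is a guess at a safe continuous load, not a manufacturer spec.
PSU_RATED_W = 320
DERATE = 0.80

# Approximate reference board power in watts; partner cards may differ.
CARDS = {
    "RTX 4070 Super": 220,
    "RX 7800 XT": 263,
    "RTX 4070 Ti": 285,
    "RTX 4070 Ti Super": 285,
    "RX 7900 XTX": 355,
}


def continuous_budget(rated_w: float, derate: float = DERATE) -> float:
    """Watts we're willing to pull continuously from a PSU with this label."""
    return rated_w * derate


def headroom(board_w: float, rated_w: float = PSU_RATED_W, derate: float = DERATE) -> float:
    """Positive = spare watts under the derated budget; negative = over budget."""
    return continuous_budget(rated_w, derate) - board_w


if __name__ == "__main__":
    print(f"Derated continuous budget: {continuous_budget(PSU_RATED_W):.0f} W")
    for name, watts in CARDS.items():
        spare = headroom(watts)
        verdict = "fits" if spare >= 0 else "over budget"
        print(f"{name:18s} {watts:4d} W  headroom {spare:+6.0f} W  ({verdict})")
```

With an 80% derate (256 W) only the 4070 Super fits. At a 90% derate (288 W) the 7800 XT and the 285 W cards squeak in, the latter with about 3 W to spare, while the 7900 XTX is over either way.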

I also recently picked up an LG OLED (B2) for a screaming good deal, which affects the decision, since previously I wouldn't have been fixated on 4K performance. I still likely won't game primarily on this TV, since it's a big wattage consumer, but if I'm going to upgrade the GPU, I'd like to be able to use it reasonably well.

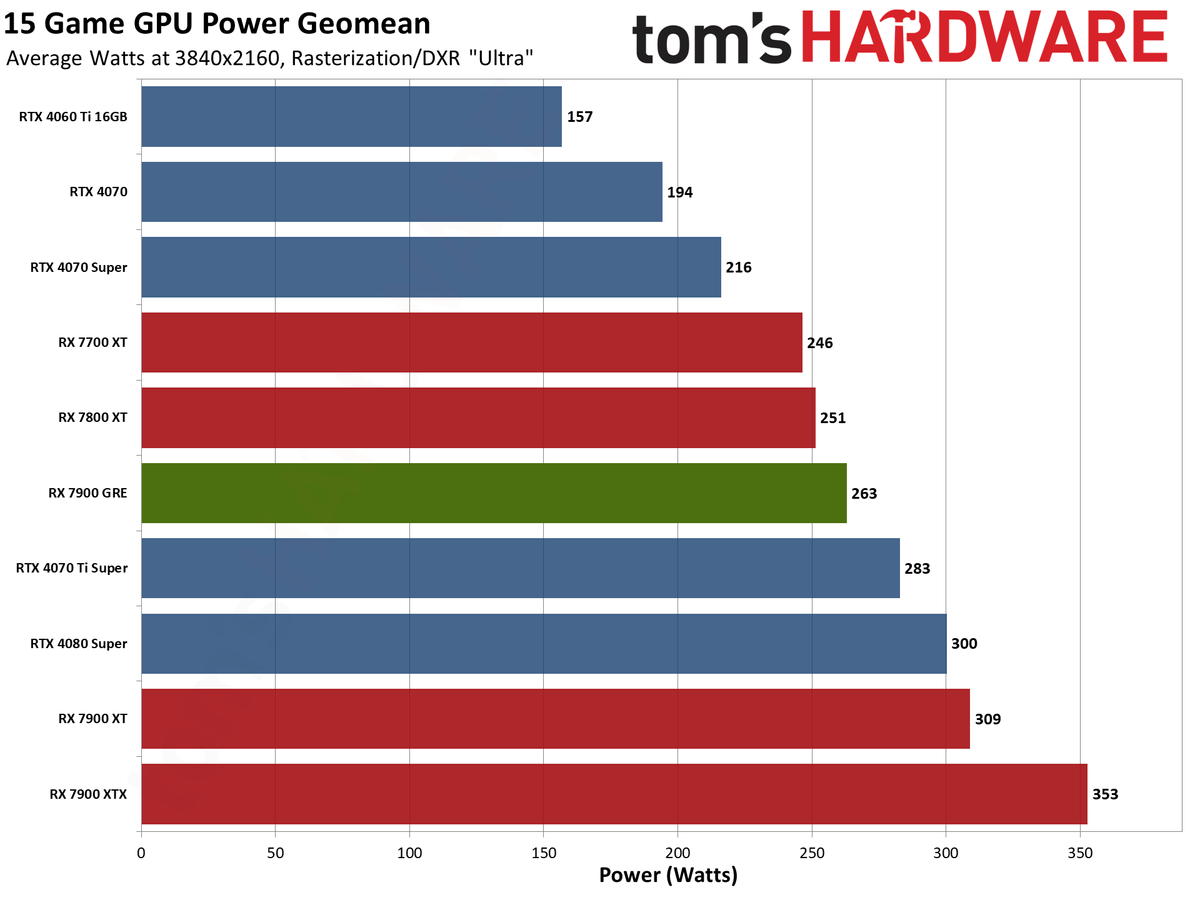

I was previously considering a 7800 XT, but given the new TV and the recently released models, and looking at raw numbers, the 4070 Super looks unbeatable in terms of TDP (220W) for FPS at 4K. Am I missing something? I could stretch my budget to higher SKUs (definitely not over $1k, and realistically anything over $800 would be very difficult to rationalize), but I'm not seeing the payoff in the raw numbers. More FPS is nice and more headroom is great, and I know there's a scenario where a higher-end card could in theory use less power for the same FPS, which is part of why I'm asking. I'm just not sure how easily that can be objectively quantified.
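One way to quantify the "bigger card, less power at the same FPS" scenario: cap both cards to the same frame rate in the same game at the same settings, log average board power with a monitoring tool (HWiNFO or similar), and compare energy per frame, i.e. watts divided by FPS. The sketch below only does that arithmetic; the sample readings are made-up placeholders, not measurements.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    card: str
    avg_board_w: float  # average GPU board power while capped (you measure this)
    fps: float          # frame rate actually sustained at that cap


def joules_per_frame(r: Reading) -> float:
    """Energy per rendered frame: W = J/s, so W / (frames/s) = J/frame."""
    return r.avg_board_w / r.fps


def most_efficient(readings: list[Reading]) -> Reading:
    """Pick the reading with the lowest energy per frame."""
    return min(readings, key=joules_per_frame)


if __name__ == "__main__":
    # HYPOTHETICAL numbers for illustration only, not benchmarks.
    readings = [
        Reading("card A (mid-tier, near its limit)", avg_board_w=210, fps=60),
        Reading("card B (bigger, loafing at the cap)", avg_board_w=180, fps=60),
    ]
    for r in readings:
        print(f"{r.card}: {joules_per_frame(r):.2f} J/frame")
    print("Lower energy per frame:", most_efficient(readings).card)
```

At an identical FPS cap, the card with the lower J/frame is the one that actually costs fewer watt-hours. Whether a bigger card wins depends on the game and how far below its limit it's running, which is why it has to be measured rather than read off the TDP.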

I will say that I give absolutely zero shits about any of the Nvidia vs. Radeon features atm. More power to you if you do, but I've lived without RT and DLSS just fine for many years. I'm sure if I go with Nvidia this time I'll try those features, and maybe enjoy them, but my primary metric is getting performance for as few watt-hours as possible, while landing in comfortable territory to make use of the new 4K OLED or push a 1440p ultrawide at 120 Hz on high settings. I definitely don't give a shit about non-gaming features, other than perhaps CAD performance (but I'm not going out and buying a Quadro or whatever for that alone; I do most of my design work in FreeCAD on a T14 (AMD) laptop, lol).

So: 220W, able to roughly handle 4K ultra at 60 fps (at least on paper), for approx. $600? Seems like a no-brainer, but admittedly it's going to be pushing the whole 220W. Can any other card under $1k offer me better power consumption in that scenario? Obviously the "gamer" part of my brain wants to find a way to justify a 7900 XTX, and I could probably swing it price-wise, especially used, but I'm not sure that's actually a smart move. A 4070 Ti or 4070 Ti Super could be an option, perhaps.
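Since the real constraint is watt-hours out of the bank, here's a back-of-envelope sketch that turns GPU draw into energy per gaming session and amp-hours at 48 V. The 90% PSU efficiency, the 3-hour session, and the 160 W "lighter load" case are all hypothetical values for illustration, and it ignores the CPU/board side and the display.

```python
# Back-of-envelope battery cost of a gaming session, GPU only.
# ASSUMPTIONS: PSU_EFFICIENCY, session length, and the 160 W case are illustrative guesses.
BANK_VOLTS = 48.0
PSU_EFFICIENCY = 0.90  # fraction of battery-side power that reaches the card


def session_wh(gpu_w: float, hours: float, efficiency: float = PSU_EFFICIENCY) -> float:
    """Watt-hours pulled from the battery: card energy divided by conversion efficiency."""
    return gpu_w * hours / efficiency


def session_ah(gpu_w: float, hours: float, volts: float = BANK_VOLTS,
               efficiency: float = PSU_EFFICIENCY) -> float:
    """The same energy expressed as amp-hours at the bank's nominal voltage."""
    return session_wh(gpu_w, hours, efficiency) / volts


if __name__ == "__main__":
    hours = 3.0
    for label, watts in [("220 W (pinned at TDP)", 220), ("160 W (hypothetical lighter load)", 160)]:
        print(f"{label}: {session_wh(watts, hours):.0f} Wh from the bank, "
              f"{session_ah(watts, hours):.1f} Ah at 48 V")
```

Under these assumptions a 3-hour session at the full 220W is roughly 733 Wh (about 15.3 Ah at 48 V), versus roughly 533 Wh at 160 W, which makes it easy to compare against what the bank and generator can actually deliver on a cloudy week.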

Anyway, I appreciate any insight, legitimate criticism, or just affirmation anyone may be willing to share, thanks!
