
OpenSolaris derived ZFS NAS/ SAN (OmniOS, OpenIndiana, Solaris and napp-it)

- Thread starter _Gea


_Gea, for some reason napp-it has stopped running AUTO Service Jobs since Dec 2022!

I can't remember if I updated napp-it or OmniOS back in December, but I think it was one or the other. Before then, AUTO Service Jobs were running just fine!

napp-it v: 21.06a8

OmniOS: r151044

Disable auto, then re-enable it, e.g. every 5 minutes in Jobs > Auto Service > every 5min.

This sets a normal cronjob for root:

0,5,10,15,20,25,30,35,40,45,50,55 * * * * perl /var/web-gui/data/napp-it/zfsos/_lib/scripts/auto.pl

You can check the setting (e.g. via PuTTY as root):

crontab -l root
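A minimal sketch for checking this from a root shell, assuming a POSIX sh. The helper names `has_auto_job` and `check_auto_job` are hypothetical; the script path and the every-5-minutes schedule are the ones quoted above.

```sh
#!/bin/sh
# Path of the napp-it auto-service script (as quoted in the post above).
AUTO_PL=/var/web-gui/data/napp-it/zfsos/_lib/scripts/auto.pl

# has_auto_job (hypothetical helper): reads crontab text on stdin and
# succeeds if a line runs auto.pl at minutes 0,5,...,55 of every hour.
has_auto_job() {
  grep -q "^0,5,10,15,20,25,30,35,40,45,50,55 \* \* \* \* perl $AUTO_PL"
}

# check_auto_job (hypothetical helper): inspects root's crontab, then runs
# the script once in the foreground so any Perl error is printed directly.
check_auto_job() {
  if crontab -l root | has_auto_job; then
    echo "auto.pl cronjob found"
  else
    echo "auto.pl cronjob missing: re-enable it in Jobs > Auto Service" >&2
    return 1
  fi
  perl "$AUTO_PL"
}
```

Usage: run `check_auto_job` as root. If the cron entry is present but jobs still do not run, an error printed by the manual `perl` call is the more likely culprit.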

What happens if you call:

perl /var/web-gui/data/napp-it/zfsos/_lib/scripts/auto.pl

(It should run without any message or error.)

Try:

Reset the root password via passwd root.

How can I make sure that beadm loader writes to both my mirrors?

What do you want to achieve?

Besides bootadm, which installs a bootloader, no other tool cares about the member disks of a vdev. They write to a mirror and ZFS does the rest.

I want to make sure that if one rpool mirror disk fails, the other will boot, i.e. the bootloader is on both disks. Maybe there is nothing for me to do?

If you want to mirror your boot disk, you only need to install a bootloader on all disks of the mirror:

zpool attach -f rpool currentdisk newdisk && bootadm install-bootloader -P rpool

see https://omnios.org/info/migrate_rpool.html

Do not reboot until resilver is finished!

Set the BIOS boot order to boot from the first disk, then from the second.
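The rpool mirror steps above can be wrapped in a small shell function. This is a hedged sketch: the function names are hypothetical, the disk names in the usage line are placeholders, and waiting for the text "resilver in progress" to disappear from `zpool status` is an assumption about the status output, not a napp-it feature.

```sh
#!/bin/sh
# resilver_running (hypothetical helper): reads `zpool status` output on
# stdin and succeeds while a resilver is still in progress.
resilver_running() {
  grep -q "resilver in progress"
}

# mirror_rpool (hypothetical helper): attach a second disk to rpool,
# install the bootloader on all rpool disks, then poll once a minute
# until the resilver has finished. $1 = current disk, $2 = new disk.
mirror_rpool() {
  current=$1
  new=$2
  zpool attach -f rpool "$current" "$new" &&
    bootadm install-bootloader -P rpool || return 1
  while zpool status rpool | resilver_running; do
    sleep 60
  done
  echo "resilver finished, safe to reboot"
}
```

Usage (placeholder disk names): `mirror_rpool c1t0d0 c1t1d0`. Only reboot after the final message appears.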


_Gea, I tried all of your suggestions, but to no avail!

As for the perl command, I got an error message:

Code:

Tty.c: loadable library and perl binaries are mismatched (got first handshake key 12200080, needed 12280080)

I'm not familiar with Perl, so any idea what that error means, or how to resolve it?

P.S. I can run all the JOBS manually, they just won't run on the given schedule!

Tty is a binary that is part of Expect.pm, a tool to run interactive console programs from a script. I assume you get the same error in menu Users. Napp-it includes a Tty binary for all supported OmniOS and Perl releases.

Can you add:

- OmniOS release

- napp-it release

- Perl version (output of): perl -v


I know what Perl is, I just don't know much about how to write scripts with it! But more importantly, I don't know anything about that 'Tty' error. BTW, I updated OmniOS to the latest release yesterday.

OmniOS: r151046-latest patch

napp-it: v21.06a8

PERL: v5.36.1

Can you update to the newest 21.06a12?

(About > Update > Download 21.06)

A newer OmniOS requires a newer napp-it that supports its Perl release.

_Gea, v21.06a12 doesn't show up as an option for me. I only see v18.12 & v21.06.

Did you release v21.06a12 to the general public?

If you download 21.06 free, you always get the newest 21.06 (a12) with all backported bug and security fixes.

There is no option to download older releases, only the newest (but you can activate the last version in About > Update).

With an evalkey you can even update to newer/dev releases.

The update drop-down list only shows v21.06, but doesn't list a12, so I was confused!

Anyway, I have updated to the latest release (a12), and now the command runs without any error:

Code:

perl /var/web-gui/data/napp-it/zfsos/_lib/scripts/auto.pl

I'm still not sure whether the AUTO jobs are running properly, but I'll monitor this for the next few days, and if the problem persists I'll post back here.

Thanks for all the help.

BTW, I have a suggestion for the About > Update page:

Maybe add/display the minor release/revision on that page, so users can see that a newer revision is available.

Currently it shows:

running version : 21.06a8

free updates : 18.12, 21.06

pro updates : 22.01, 22.02, 22.03, 22.06, 23.01, 23.06, 23.dev

wget updates : 21.06

Maybe show something like this:

running version : 21.06a8

free updates : 18.12, 21.06a12

pro updates : 22.01, 22.02, 22.03, 22.06, 23.01, 23.06, 23.dev

wget updates : 21.06a12

Possibly even add it to the 'Please select' drop down list.

update:

Current napp-it releases show newest release numbers in About > Update

more https://www.napp-it.org/downloads/changelog_en.html


Hello, it's been a long time since I last said hello, as I'm too busy working and my server just runs fine. One thing I've always put off is encryption. I don't have super sensitive data, so I figured I didn't need it, and I'm worried I might do more harm than good if something goes wrong. So, is there a way to back up encryption keys? From my understanding there is a master key, but we never have access to the actual encryption key, so is there a mechanism to still have a backup?

Even without encryption, if my array went down and couldn't self-repair, even with disks that are, let's say, 99.9% readable, I would be at a loss to reconstitute the data...

ZFS encryption works with a key per filesystem. The key can be a simple passphrase (min. 8 characters) or a file containing the key. Both can be saved elsewhere. The internal master key is stored in the pool, wrapped (encrypted) with this user key, so a backup of the passphrase or keyfile is all you need. Napp-it additionally supports a web-based keyserver, keysplit (half of the key in each of two locations) and lock/unlock via SMB shares.

Encrypted ZFS filesystems are as safe as unencrypted ones, as long as you have the key. You can replicate encrypted filesystems in raw mode (Open-ZFS, not Oracle Solaris ZFS).
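A minimal sketch of the keyfile variant, assuming `keyformat=raw`, which expects exactly 32 bytes of key material. Function names are hypothetical, and pool, filesystem and key paths are placeholders. The point is that the keyfile lives outside the pool, so it can simply be copied to a second location as a backup.

```sh
#!/bin/sh
# make_keyfile (hypothetical helper): write 32 random bytes, the size
# keyformat=raw expects, to $1 with root-only permissions.
make_keyfile() {
  (umask 077 && dd if=/dev/urandom of="$1" bs=32 count=1 2>/dev/null)
}

# create_encrypted_fs (hypothetical helper): $1 = new filesystem,
# $2 = absolute path of the keyfile that ZFS reads when the key is loaded.
create_encrypted_fs() {
  fs=$1
  keyfile=$2
  zfs create -o encryption=aes-256-gcm -o keyformat=raw \
    -o "keylocation=file://$keyfile" "$fs"
}

# replicate_raw (hypothetical helper): raw send (-w) keeps the blocks
# encrypted, so the receiving side never needs the key.
replicate_raw() {
  snap=$1
  target=$2
  zfs send -w "$snap" | zfs receive "$target"
}
```

Usage, with placeholder names: `make_keyfile /root/keys/secure.key && create_encrypted_fs tank/secure /root/keys/secure.key`, then copy `/root/keys/secure.key` to a USB stick or another host. After a reboot, `zfs load-key tank/secure && zfs mount tank/secure` unlocks it again.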


Security and feature update OmniOS r151046h (Solaris fork, 2023-06-20)

https://omnios.org/releasenotes.html

Weekly release for w/c 19th of June 2023.

This update requires a reboot

Security Fixes

Python has been updated to version 3.11.4;

Vim has been updated to version 9.0.1443.

Other Changes

SMB NetLogon Client Seal support;

Windows clients could get disconnected when copying files to an SMB share;

%ymm registers were not correctly restored after signal handler;

The svccfg command now supports a -z flag to manage services within zones;

The startup timeout for the system/zones service has been increased to resolve problems when starting a large number of bhyve zones in parallel in conjunction with a memory reservoir configuration;

Use automatic IBRS when available;

blkdev and lofi did not properly initialise cmlb minor nodes;

The ping command would fail when invoked with -I 0.01;

In exceptional circumstances, a zone could become stuck during halt due to lingering IP references;

An issue with resolving DNS names which have only multiple AAAA records has been resolved;

Improvements within the nvme driver to resolve a race and allow it to bind to devices that are under a legacy PCI root;

In exceptional circumstances, the system could panic when dumping a userland process core.


Security and feature update OmniOS r151046l LTS (2023-07-20)

https://omnios.org/releasenotes.html

Weekly release for w/c 17th of July 2023.

This update requires a reboot

Security Fixes

OpenSSH updated to version 9.3p2, fixing CVE-2023-38408.

The prgetsecflags() interface leaked a small (4 byte) portion of kernel stack memory - illumos 15788.

OpenJDK packages have been updated to 11.0.20+8 and 17.0.8+7.

Other Changes

Various improvements to the SMB idmap service have been backported:

illumos 14306

illumos 15556

illumos 15564

Most notably, it was previously possible to get flurries of log messages of the form

Can't get SID for ID=0 type=0

and this is now resolved.

The UUID generation library could produce invalid V4 UUIDs.

An issue with python header files that could cause some third party software to fail compilation has been resolved.


Critical security update OmniOS r151038dm (2023-07-25)

https://omnios.org/releasenotes.html

To update, run pkg update

To undo the update: boot into the former boot environment.

Weekly release for w/c 24th of July 2023.

This update requires a reboot

Changes

AMD CPU microcode updated to 20230719, mitigating CVE-2023-20593 on some Zen2 processors.

Intel CPU microcode updated to 20230512, refer to Intel's release notes for details.

Actions you need to take:

If you are not running the affected AMD parts, then there is nothing you need to do.

If you are running the affected AMD parts then you will need to update the AMD microcode.

Note, only Zen 2 based products are impacted. These include AMD products known as:

* AMD EPYC 7XX2 Rome (Family 17h, model 31h)

* AMD Threadripper 3000 series Castle Peak (Family 17h, model 31h)

* AMD Ryzen 3000 Series Matisse

* AMD Ryzen 4000 Series Renoir (family 17h, model 60h)

* AMD Ryzen 5000 Series Lucienne (family 17h, model 68h)

* AMD Ryzen 7020 Series Mendocino (Family 17h, model a0h)

We have pushed an initial commit which provides a microcode fix for this issue for the following processor families:

* Family 17h, model 31h (Rome / Castle Peak)

* Family 17h, model a0h (Mendocino)

https://www.theverge.com/2023/7/25/...-processor-zenbleed-vulnerability-exploit-bug
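The "run pkg update" and "boot in former bootenvironment" steps above could look roughly like this. It is a sketch: the helper names are hypothetical, the boot environment name is a placeholder you would take from `beadm list`, and setting `DRYRUN=1` only prints the commands instead of running them.

```sh
#!/bin/sh
# run: execute a command, or only print it when DRYRUN is set.
run() {
  if [ -n "${DRYRUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Show existing boot environments (the previous one stays available).
list_bes() {
  run beadm list
}

# Update the installed release; pkg creates a new boot environment
# when the update requires a reboot.
update_system() {
  run pkg update
}

# rollback_to (hypothetical helper): $1 = former boot environment name
# from `beadm list`; activate it and reboot into it.
rollback_to() {
  run beadm activate "$1" && run init 6
}
```

Usage: `update_system`, reboot, and if something is wrong, `list_bes` followed by `rollback_to <former-be>`.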


Security update OmniOSce v11 r151046n (2023-08-03)

https://omnios.org/releasenotes.html

Weekly release for w/c 31st of July 2023.

This is a non-reboot update

Security Fixes

OpenSSL packages updated to versions 3.0.10 / 1.1.1v / 1.0.2u-1, resolving CVE-2023-3817, CVE-2023-3446, CVE-2023-2975.

OpenJDK 8 has been updated to version 1.8.0u382-b05.

To update, run "pkg update"

To undo the update: boot into the former boot environment.


Multithreaded Solaris/Illumos SMB server with NFS4 ACL and Windows SID

Solaris Unix is the origin of ZFS and still offers the best ZFS integration into the OS with the lowest resource needs for ZFS. While on Linux ZFS is just another filesystem among many others, Sun developed OpenSolaris together with and on top of ZFS as the primary filesystem (Oracle Solaris 11.4 and Illumos/OI/OmniOS are descendants), with many advanced features like DTrace, Service Management or container-based VMs. All of these features and ideas found their way into BSD or Linux, but without the deep integration of NFS or SMB into ZFS. The multithreaded SMB server that is part of Solaris-based systems in particular is the most common reason to use this Unix.

The most important use case of storage is SMB, the file sharing protocol from Microsoft, which introduced superior fine-grained ACL permissions with inheritance into the NTFS filesystem and SMB shares. While traditional Linux/Unix permissions or Posix ACL only offer simple read/write/execute based on a user id like 101, NTFS ACL added additional permissions to create/extend files or folders, modify or read attributes or take ownership, based on a unique id like S-1-5-21-3623811015-3361044348-30300820-1013.

ACL permissions

The kernel-based Solaris/Illumos SMB server is the only one that fully integrates NFS4 ACL (a superset of Windows NTFS ACL, Posix ACL and simple Unix permissions) with Windows SIDs (owner/user reference) stored as a ZFS attribute, although ZFS as a Unix filesystem normally only accepts Unix uid/gid as a user/owner reference. The main advantage is that you can move/restore a ZFS filesystem with all Windows permissions intact. When you use SAMBA instead, which relies only on Unix uid/gid, you must use complicated id mappings, which differ from server to server, to assign a Unix uid to a Windows SID.

https://docs.oracle.com/cd/E23824_01/html/821-1448/gbacb.html

https://en.wikipedia.org/wiki/Access-control_list

https://en.wikipedia.org/wiki/Security_Identifier

http://wiki.linux-nfs.org/wiki/index.php/ACLs

https://wiki.samba.org/index.php/NFS4_ACL_overview

SMB groups

The kernel-based Solaris/Illumos SMB server is the only one that additionally offers local SMB groups. Unlike Unix groups, Windows-like SMB groups allow groups in groups in ACL settings. The group id is a Windows SID, just like a user id.

Windows previous versions

The kernel-based Solaris/Illumos SMB server is the only one with a strict relation between a ZFS filesystem and a share. This is important when you want to use ZFS snaps as Windows "Previous Versions". As ZFS snaps are assigned to a filesystem, it can be quite confusing when you use SAMBA instead: SAMBA only sees data folders and knows nothing about ZFS, so you must carefully configure and organize your shares to get this working, especially with nested ZFS filesystems, while on Solaris "Previous Versions" just works without any settings.

Setup

In general the Solaris/Illumos SMB server is much simpler to configure and set up than SAMBA, which is an option on Solaris too. There is no smb.conf with server settings; just set the sharesmb property of a ZFS filesystem to on. SMB server behaviour can be set or shown with the admin tool smbadm, https://docs.oracle.com/cd/E86824_01/html/E54764/smbadm-1m.html, or via ZFS properties like aclmode and aclinherit, or in general via NFS4 file or share ACLs.
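A minimal sketch of that setup, assuming a filesystem such as `tank/data` (placeholder). The helper name is hypothetical, the passthrough values for `aclinherit` and `aclmode` are an example choice rather than a requirement, and `DRYRUN=1` only prints the commands.

```sh
#!/bin/sh
# run: execute a command, or only print it when DRYRUN is set.
run() { if [ -n "${DRYRUN:-}" ]; then echo "$*"; else "$@"; fi; }

# share_smb (hypothetical helper): $1 = ZFS filesystem, $2 = share name.
# Enables the kernel SMB service (with its dependencies), shares the
# filesystem and sets example ACL-related ZFS properties.
share_smb() {
  fs=$1
  share=$2
  run svcadm enable -r smb/server &&
    run zfs set "sharesmb=name=$share" "$fs" &&
    run zfs set aclinherit=passthrough "$fs" &&
    run zfs set aclmode=passthrough "$fs"
}
```

Usage: `share_smb tank/data data`. There is no config file to edit; everything lives in SMF and in ZFS properties.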

Multithreaded

While the single-threaded SAMBA wants the best single-core CPU performance, the kernel-based SMB server is better optimized for multicore CPUs and many parallel requests.

Cons

The kernel-based SMB server has fewer options than SAMBA, and out of the box it supports only Windows AD member mode.


Vengance_01


Hey _Gea, have you managed to make a commercial product out of all your hard work?

Which Slog besides Intel Optane to protect the ZFS RAM-based write cache?

In recent years the situation was quite easy. When you wanted to protect data in the ZFS RAM-based write cache, e.g. for databases or VM storage, you just enabled sync write. To limit the performance degradation with disk-based pools, you simply added an affordable low-capacity Intel Optane from the 800, 90x, 1600 or 4801 series as an Slog. Models differ mainly in guaranteed powerloss protection (every Optane is quite OK regarding PLP) and max write endurance.

Nowadays they are getting harder and harder to find, so what to do?

Disk-based pools:

If you still use disk-based pools for VMs or databases, you really need an Slog. Without one, a ZFS pool offers no more than maybe 10-50 MB/s sync write performance. In such a situation I would try to get one of the Optanes, either new or used. As an Slog needs only around 8GB, you may also look for a used DRAM-based RMS-200 or RMS-300 from Radian Memory Systems, more or less the only real alternative to Intel Optane.

What I would consider:

Use a large disk-based pool only for filer use or backup, where you do not need sync write. The ZFS pool is then fast enough for most use cases. Add a second smaller, faster pool of NVMe/SSDs for your VM storage or databases and simply enable sync without an extra dedicated Slog. The ZIL of the pool (a fast pool area without fragmentation) then protects sync writes. Just take care of NVMe/SSD powerloss protection (a must for sync write), low latency and high 4k write IOPS. As a rule of thumb, look for NVMe/SSDs with PLP and more than, say, 80k write IOPS at 4k. Prefer NVMe over SSD, and 2x 12G/24G SAS like WD SS 530/540 or Seagate Nitro (nearly as fast as NVMe) over 6G SATA SSDs.
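Both options from this post as a hedged sketch. Helper names are hypothetical, pool and device names are placeholders, and `DRYRUN=1` only prints the commands. `sync=always` forces sync writes for the VM filesystem regardless of what the client requests.

```sh
#!/bin/sh
# run: execute a command, or only print it when DRYRUN is set.
run() { if [ -n "${DRYRUN:-}" ]; then echo "$*"; else "$@"; fi; }

# create_vm_pool (hypothetical helper): separate NVMe/SSD mirror pool for
# VMs or databases; sync=always, protected by the pool's own ZIL, no Slog.
# $1 = pool name, $2/$3 = devices.
create_vm_pool() {
  pool=$1
  run zpool create "$pool" mirror "$2" "$3" &&
    run zfs create -o sync=always "$pool/vm"
}

# add_slog (hypothetical helper): disk-based pool that still needs sync
# writes gets a dedicated Slog device. $1 = pool, $2 = device.
add_slog() {
  run zpool add "$1" log "$2"
}
```

With the Slog variant you would still set `sync=always` (or keep `sync=standard` for clients that request sync) on the VM filesystem of the disk-based pool.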

Special vdev mirror

As an option you can also use data tiering on a hybrid pool of disks plus an NVMe/SSD mirror. In such a case performance-critical data like small io, metadata, dedup tables or complete filesystems for VMs or databases with a recsize <= a settable threshold lands on the faster part of the pool, based on the physical data structures. This is often more efficient than classic tiering methods for hot/last data, where data must be moved between SSD and disks.
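A sketch of the special vdev approach, assuming a pool `tank` and two NVMe/SSD devices (placeholders; helper names hypothetical). The settable threshold is the ZFS property `special_small_blocks`: setting it to at least the filesystem's `recordsize` sends all data blocks of that filesystem, not just small ones, to the special vdev. Only data written after the change lands there.

```sh
#!/bin/sh
# run: execute a command, or only print it when DRYRUN is set.
run() { if [ -n "${DRYRUN:-}" ]; then echo "$*"; else "$@"; fi; }

# add_special_mirror (hypothetical helper): $1 = pool, $2/$3 = NVMe/SSDs.
add_special_mirror() {
  run zpool add "$1" special mirror "$2" "$3"
}

# pin_fs_to_special (hypothetical helper): with recordsize no larger than
# special_small_blocks, every data block of filesystem $1 qualifies for
# the special vdev. $2 = block size, default 64K.
pin_fs_to_special() {
  fs=$1
  size=${2:-64K}
  run zfs set "recordsize=$size" "$fs" &&
    run zfs set "special_small_blocks=$size" "$fs"
}
```

On a pool built from raidz vdevs, `zpool add` may refuse a mirror special vdev because of the differing redundancy level unless forced with `-f`; check the redundancy before forcing, since losing the special vdev means losing the pool.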


There are many new SMB server features and fixes, not least because of recent security concerns and bugs.

If you want to know what's going on with the free Illumos kernel-based SMB server, check

https://www.illumos.org/issues?utf8...&c[]=updated_on&c[]=done_ratio&group_by=&t[]=

You can switch to "open tickets" to list only open issues.


On the 6th of November 2023, the OmniOSce Association has released a new stable version of OmniOS – The Open Source Enterprise Server OS. The release comes with many tool updates, brand-new features and additional hardware support.

https://github.com/omniosorg/omnios-build/blob/r151048/doc/ReleaseNotes.md

Unlike Oracle Solaris with native ZFS, OmniOS stable is based on Open-ZFS with a dedicated software repository per release.

This means that a simple 'pkg update' gives the newest state of the installed OmniOS release.

To update to a newer release, you must switch the publisher setting to the newer release.

A 'pkg update' then initiates a release update.

An update to 151048 stable is possible from 151046 LTS.

To update an earlier release, you must update in steps over the LTS versions.

OmniOS 151044 stable is EoL with no further updates.
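Switching to a newer release as described above might look like this. The helper name is hypothetical, and the repository URL pattern `https://pkg.omnios.org/<release>/core` and the `pkg update -f -r --be-name=...` call follow the pattern of the OmniOS upgrade instructions; verify them against the release notes linked above before running. If the `extra.omnios` publisher is configured, it needs the same switch. `DRYRUN=1` only prints the commands.

```sh
#!/bin/sh
# run: execute a command, or only print it when DRYRUN is set.
run() { if [ -n "${DRYRUN:-}" ]; then echo "$*"; else "$@"; fi; }

# upgrade_release (hypothetical helper): $1 = target release, e.g. r151048.
# Points the omnios publisher at the new release repository, then updates
# into a new boot environment named after the release.
upgrade_release() {
  rel=$1
  run pkg set-publisher -r -O "https://pkg.omnios.org/$rel/core" omnios &&
    run pkg update -f -r --be-name="$rel"
}
```

Usage from 151046 LTS: `upgrade_release r151048`, then reboot into the new boot environment. The old one stays available for a rollback.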


Real file-based data tiering on ZFS

I'm currently working on the topic of data tiering.

With the special vdev, ZFS offers a very intelligent approach for hybrid pools made up of large but slow disks and expensive and fast SSD/NVMe. The basic idea with ZFS is: Particularly performance-critical data is stored on the fast special vdev due to its physical data structure (small io, metadata, Dedup tables), all other data is stored on the slow pool vdev.

The main advantage is that you don't have to set anything or copy data between the fast and slow vdevs. Just set and forget. This is a perfect approach for use cases with a lot of small, volatile data from many users (e.g. a university mail server) and provides a significant performance boost.

But for a normal office or VM server, this is practically quite useless. The classic tiering approach of storing data specifically in the fast or slower part of the pool would be more suitable. There is no support for this in Open-ZFS, but it could certainly be achieved, see https://illumos.topicbox.com/groups...on-a-special-vdev-and-rule-based-data-tiering

I'm planning a Pool > Tiering menu in napp-it to make this more convenient. Until then, everyone can try it out manually.


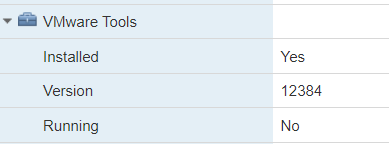

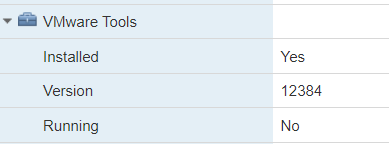

Hi Gea, I'm running ESXi 6.5.0 and I just updated to the latest OmniOS, but I noticed that my VMtools are not running even though they're installed. How do I make them run?

I enabled it, but it didn't seem to run:

root@omnios:~# svcadm enable open-vm-tools

root@omnios:~# svcs -x open-vm-tools

svc:/system/virtualization/open-vm-tools:default (Open Virtual Machine Tools)

State: offline since Sun Nov 12 17:50:30 2023

Reason: Unknown.

See: http://illumos.org/msg/SMF-8000-AR

Impact: This service is not running.


I can only say that I have had no problems after updating from r151046 to r151048 on ESXi 8.

What you can try:

- uninstall and reinstall open-vm-tools

- try a new install of an OmniOS VM (the tools are installed by default)

- update ESXi (I assume this will not solve the problem)
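A sketch of the first suggestion on the command line, plus a few checks before reinstalling. An SMF service that stays `offline` is usually waiting for a dependency, so its dependencies and log may show the real reason. The package name `system/virtualization/open-vm-tools` is an assumption based on the service FMRI, and `pkg` may refuse the uninstall if another package depends on it.

```shell
#!/bin/sh
FMRI=svc:/system/virtualization/open-vm-tools:default
PKG=system/virtualization/open-vm-tools   # assumed package name

run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

diagnose_vmtools() {
    run svcs -xv "$FMRI"   # detailed reason for the current state
    run svcs -d "$FMRI"    # services this one depends on, with their states
    run svcs -L "$FMRI"    # path of the service log file
}

reinstall_vmtools() {
    run pkg uninstall "$PKG"
    run pkg install "$PKG"
    run svcadm enable "$FMRI"
}
```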


Problems with Open-ZFS 2.2

During a discussion about adding Open-ZFS features to Illumos, data-loss problems with Open-ZFS 2.2 came up.

If you use Open-ZFS 2.2, you may want to follow the discussion as well:

https://illumos.topicbox.com/groups/developer/T3938863282d879c9-Mad80187a2775599fdda57b58

https://github.com/openzfs/zfs/issues


Hi Gea,

After a long time, I've finally started my old server that I built 10 years ago under ESXi/OpenIndiana and napp-it.

It's a simple NAS with 3 pools, shared via SMB with (almost) all defaults.

My server consists of:

- SuperMicro X8SIL-F-O

- Xeon X3430

- 16 GB DDR3 ECC

- 2 x IBM BR10i (LSI IT firmware)

- 1 pool Raid-Z2 (8 x 1 TB)

- 1 pool Raid-Z2 (4 x 2 TB)

- 1 pool Raid-Z2 (4 x 2 TB)

But after booting, I can't see my pools from my PC.

The monitor I hooked up just shows the VMware ESXi 4.1.0 console with an IP address that I cannot access. The same goes for the napp-it IP!

Plus, I don't remember my passwords...

So what can I do?


You can try to solve the network and ESXi 4 problems to regain OS or napp-it IP access. Then you can reset/overwrite the root and napp-it passwords. This is not straightforward and requires time and some outdated knowledge.

I would simply reinstall a current OmniOS 151048 stable or OpenIndiana 2023.10. If you do not need the old ESXi, do a barebone install; otherwise set it up under a working ESXi (under a more current ESXi you can use my ready-to-use .ova ESXi template). Add napp-it via wget and import the pools. Then set a root password via 'passwd root' to create the SMB password hash. Finally, enable SMB shares in napp-it and access the shares from Windows as user root.

If you want other users, create them in the napp-it menu Users.
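A sketch of the steps after a fresh OmniOS/OpenIndiana install. It assumes the napp-it online installer is still started with the wget one-liner published on napp-it.org (check the site for the current command) and uses a pool name such as `Datas01` as an example. `zpool import -f` is needed because the pools were last used by another system.

```shell
#!/bin/sh
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# setup_filer POOL...: install napp-it, import the pools, set the root password
setup_filer() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "wget -O - www.napp-it.org/nappit | perl"
    else
        wget -O - www.napp-it.org/nappit | perl
    fi
    run zpool import                # list importable pools on the disks
    for p in "$@"; do
        run zpool import -f "$p"    # -f: pool was last used by another host
    done
    run passwd root                 # with napp-it, also creates the SMB hash
}
```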


OmniOS r151048b (2023-11-15)

https://omnios.org/releasenotes.html

Weekly release for w/c 13th of November 2023.

To update from an older OmniOS release, update in steps via the LTS versions.
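A sketch of one upgrade step, following the OmniOS upgrade procedure as I understand it: point the `omnios` publisher at the target release, then update into a new boot environment named after it. Run it once per LTS hop (for example r151038, then r151046, then r151048; check the release notes for the actual path from your version) and reboot between steps. If the `extra.omnios` publisher is configured, it needs the same switch.

```shell
#!/bin/sh
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# upgrade_to RELEASE: switch the omnios publisher to RELEASE and update
# into a new boot environment named RELEASE. Reboot afterwards.
upgrade_to() {
    rel=$1
    run pkg set-publisher -r -O "https://pkg.omnios.org/$rel/core" omnios
    run pkg update -f -r --be-name="$rel"
}
```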

To downgrade, boot a former boot environment (one is created automatically on each update).
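Besides choosing the older boot environment in the boot menu, it can be activated from the running system. A minimal sketch; the BE name is whatever `beadm list` shows, and the `r151046` in the test is only a hypothetical example.

```shell
#!/bin/sh
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# rollback_to BE: make BE the default boot environment and reboot into it
rollback_to() {
    run beadm list           # show available boot environments
    run beadm activate "$1"  # BE used on next boot
    run reboot
}
```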


Security Fixes

This update requires a reboot.

- Intel CPU microcode updated to 20231114, including a security update for INTEL-SA-00950.

- AMD CPU microcode updated to 20231019.

- The UUID of a bhyve VM was changing on every zone restart. For VMs using cloud-init, this caused them to be considered a new host on each cold boot.



Do you have a guide that shows how to upgrade ESXi?

- Boot the installer of a newer version and choose upgrade (preserve the datastore)

- Online update, see https://tinkertry.com/easy-update-to-latest-esxi

- New install, preserving the datastore. Then import the VMs via the ESXi datastore browser (right-click the .vmx file in the VM folder)


Thank you for your quick answer.

So I've installed the latest OpenIndiana on another boot disk and added napp-it, but I can't import my pools because I get an error:

"Could not proceed due to an error. Please try again later or ask your sysadmin.

Maybe a reboot after power-off may help.

167

cannot import 'Datas01': pool is formatted using an unsupported ZFS version"

Can I downgrade the ZFS version? And how?

Have you installed the 10-year-old OpenIndiana release 151a (no longer supported; the original fork of Sun OpenSolaris) or the current OpenIndiana Hipster (ongoing Illumos)? Such a message appears only when you try to open a newer pool on an older OS that lacks the needed feature support.

Use OpenIndiana Hipster 2023.10 (if you need server + desktop) or OmniOS 151048 stable (Nov 2023) for filer-only use.

Both support any newer Illumos ZFS version.

You cannot downgrade the ZFS version of a pool. The only option is to create a pool with the older version and replicate or copy the filesystems over.
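A sketch of that route with hypothetical pool and disk names: `zpool create -o version=28` creates a pool without feature flags (version 28 is the last legacy version), and `zfs send -R` piped into `zfs receive` replicates a filesystem tree with its snapshots. The receive can still fail if the data relies on features the older pool lacks, so this is a starting point, not a guaranteed path.

```shell
#!/bin/sh
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# make_v28_pool POOL VDEV-SPEC...: create a pool with legacy version 28
make_v28_pool() {
    pool=$1; shift
    run zpool create -o version=28 "$pool" "$@"
}

# copy_tree SRC DST [SNAP]: snapshot SRC recursively and replicate it to DST
copy_tree() {
    src=$1 dst=$2 snap=${3:-migrate}
    run zfs snapshot -r "$src@$snap"
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "zfs send -R $src@$snap | zfs receive -F $dst"
    else
        zfs send -R "$src@$snap" | zfs receive -F "$dst"
    fi
}
```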

If you only need to read the data, a workaround that works with some missing features is to import the pool read-only.
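A minimal sketch, using the pool name `Datas01` from the error message above. A read-only import only succeeds if the missing features are marked as read-only compatible; otherwise the import is still refused, and `-f` may be needed if the pool was last used by another system.

```shell
#!/bin/sh
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# import_ro POOL: import POOL read-only, so nothing is written to it
import_ro() {
    run zpool import -o readonly=on "$1"
}
```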


Some information about ZFS hybrid pools and data tiering:

www.napp-it.org/doc/downloads/hybrid_pools.pdf

