Spoonie_G

Limp Gawd

- Joined

- Jan 10, 2007

- Messages

- 285

I did a search but I couldn't find anything. Please no flame war.

The ATI vs nVidia image quality question was put to bed with the 7 series GeForce cards. You can't tell em apart.

I asked the same question not too long ago, and NLW (3 posts down) linked some interesting articles regarding this ATI/Nvidia image quality thing. If you feel like it, check it out.

http://www.hardforum.com/showthread.php?t=1318153

The ATI vs nVidia image quality question was put to bed with the 7 series GeForce cards. You can't tell em apart.

Then why are the guys over on the ATI side claiming that the ATI cards have better image quality?

Nvidia G8x/G9x/G200 filtering is better than ATI's.

I don't know what the heck I'm going to get (gtx280 or 4870).

Then why are the guys over on the ATI side claiming that the ATI cards have better image quality?

Oddly, the NVIDIA shots appear "richer" to me, but that's likely because of a slight difference in gamma, though I'd also venture to guess that the 9800's displaying slightly more dynamic range than the 4850 (hunch). Neither output looks 'better' or 'worse' than the other to me, though. Obviously the most notable difference between the two cards is how AA affects transparent sprites (leaves), and the 4850 looks a bit more pleasing in that regard.

To me, ATI's colors are much richer. The Nvidia colors seem brighter and washed out.

This link seems to show clear differences in image quality:

http://www.xbitlabs.com/articles/video/display/ati-radeon-hd4850_19.html#sect0

We can only trust that both images were taken with the same settings. To me, ATI's colors are much richer. The Nvidia colors seem brighter and washed out. This may be due to a lighting difference in the scene.

To me, ATI's colors are much richer. The Nvidia colors seem brighter and washed out. This may be due to a lighting difference in the scene.

Switching between the ATI / Nvidia images, there is no difference in gamma, lighting or colour.

The main difference is down to the shadows.

NVidia are not drawing the darker shadows which unfortunately makes some of the leaves disappear in the trees and removes some of the ambience where dark places are still well lit.

All parts of the images are identical apart from the dark shadows, with one exception...

ATI's anti aliasing loses a whole side of the chain fence!

The apartment at the background shows up as a complete white blob.
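For anyone who wants to check this kind of claim with numbers rather than by flipping between two tabs, here is a minimal sketch of a per-pixel comparison. It assumes the two screenshots have already been decoded into same-sized nested lists of 8-bit RGB tuples; the function name `compare_images` and the `threshold` parameter are made up for illustration and are not from any tool used in this thread.

```python
def compare_images(img_a, img_b, threshold=0):
    """Compare two images given as rows of (r, g, b) tuples with 0-255 channels.

    Returns the fraction of pixels whose largest channel difference exceeds
    `threshold`, plus the mean signed difference (a minus b) per channel.
    """
    if len(img_a) != len(img_b) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(img_a, img_b)
    ):
        raise ValueError("images must have the same dimensions")

    total = 0
    changed = 0
    channel_sums = [0, 0, 0]
    for row_a, row_b in zip(img_a, img_b):
        for pixel_a, pixel_b in zip(row_a, row_b):
            total += 1
            diffs = [a - b for a, b in zip(pixel_a, pixel_b)]
            if max(abs(d) for d in diffs) > threshold:
                changed += 1
            for i, d in enumerate(diffs):
                channel_sums[i] += d

    if total == 0:
        return {"changed_fraction": 0.0, "mean_diff": (0.0, 0.0, 0.0)}
    return {
        "changed_fraction": changed / total,
        "mean_diff": tuple(s / total for s in channel_sums),
    }
```

As a rough reading of the output: a similar offset across nearly every pixel would point toward a global gamma or colour difference, while a small fraction of changed pixels clustered in one area would fit a localized rendering difference such as the shadows or the fence. This is only a sketch and it cannot say which card is "right".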

Much richer colors? Seriously people.

Monitors have to be calibrated differently when used with different sources because not all sources output the exact same curve. Review sites /never/ recalibrate between shots and don't take shots with cameras, so yes, different cards will produce shots with different curves. Get over it. So long as you, on your personal setup, calibrate things properly there will be no appreciable difference in color.
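To put a rough number on the "different curve" point, here is a minimal sketch that pushes an 8-bit channel value through a simple power-law curve. The exponents 0.9 and 1.1 are arbitrary illustrative values, not measurements of any ATI or NVIDIA card, and `apply_gamma` is a hypothetical helper name.

```python
def apply_gamma(value, gamma):
    """Map an 8-bit channel value (0-255) through a power-law curve."""
    normalized = value / 255.0
    return round(255.0 * normalized ** gamma)


def apply_gamma_to_pixel(pixel, gamma):
    """Apply the same curve to each channel of an (r, g, b) tuple."""
    return tuple(apply_gamma(channel, gamma) for channel in pixel)


if __name__ == "__main__":
    mid_grey = (128, 128, 128)
    # Assumed exponents, purely for illustration.
    for gamma in (0.9, 1.0, 1.1):
        print(gamma, apply_gamma_to_pixel(mid_grey, gamma))
```

Black and white stay put under this curve while the mid-tones shift, so two screenshots of an identical scene can look "richer" or "washed out" relative to each other without either card rendering anything differently.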

Look at the HL2 screenshots. The chain-link on the right side is missing entirely on ATI cards. That's not cool.

This looks like a potential Source engine fog issue to me. What would the D3D9 reference rasterizer have to say, I wonder?

Like I said before, this may just be a bad example, perhaps the game had some kind of dynamic lighting that caused the scene to change so much.

To be able to notice a difference you would have to take still shots, blow them up, and examine them slowly.

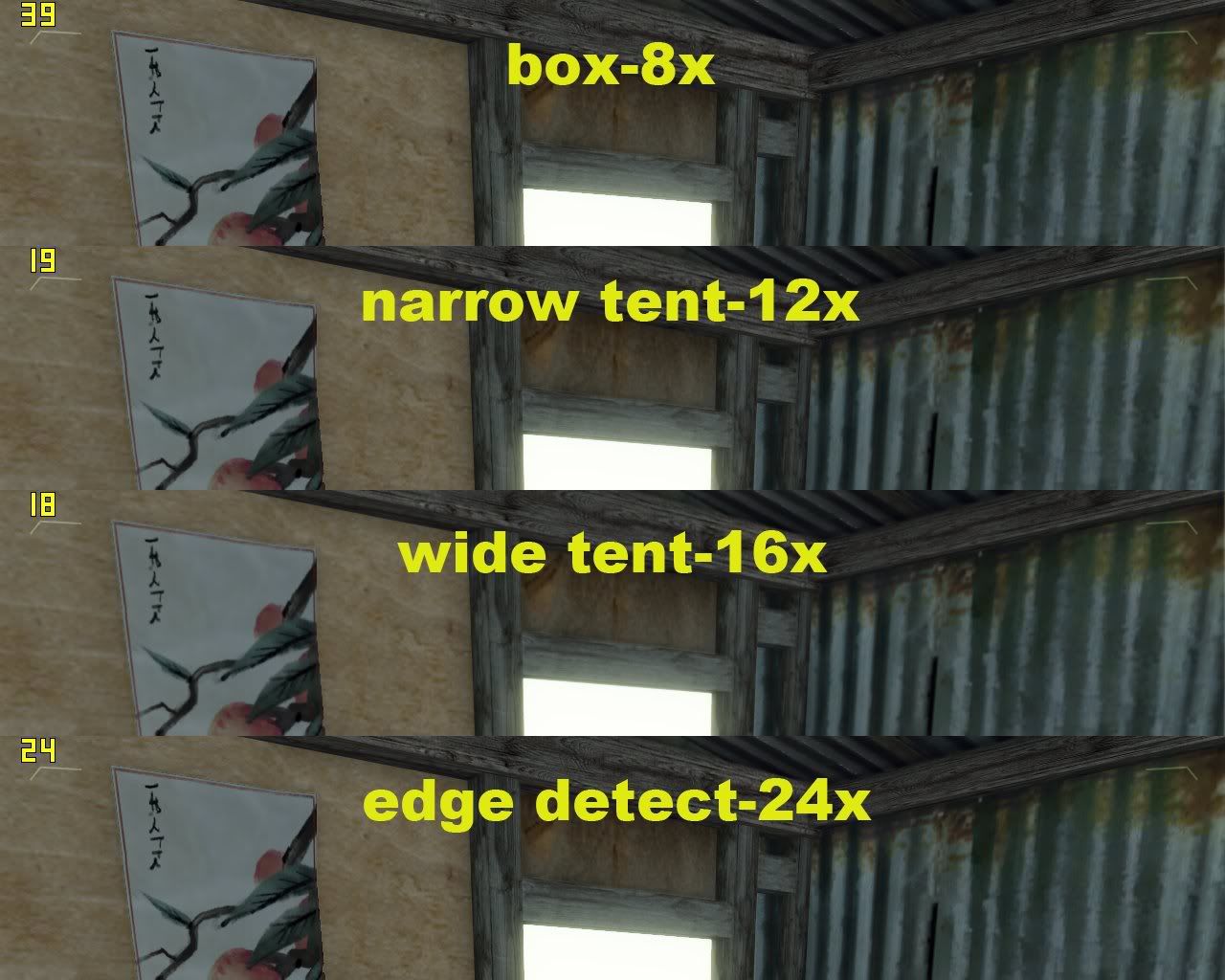

But if you do so, I have felt lately that NVIDIA has drawn more objects on screen since G80 and has had a longer draw distance in certain games (HL2), as well as rendering things like plants fuller than ATI. IMO NVIDIA also gives better filtering quality.

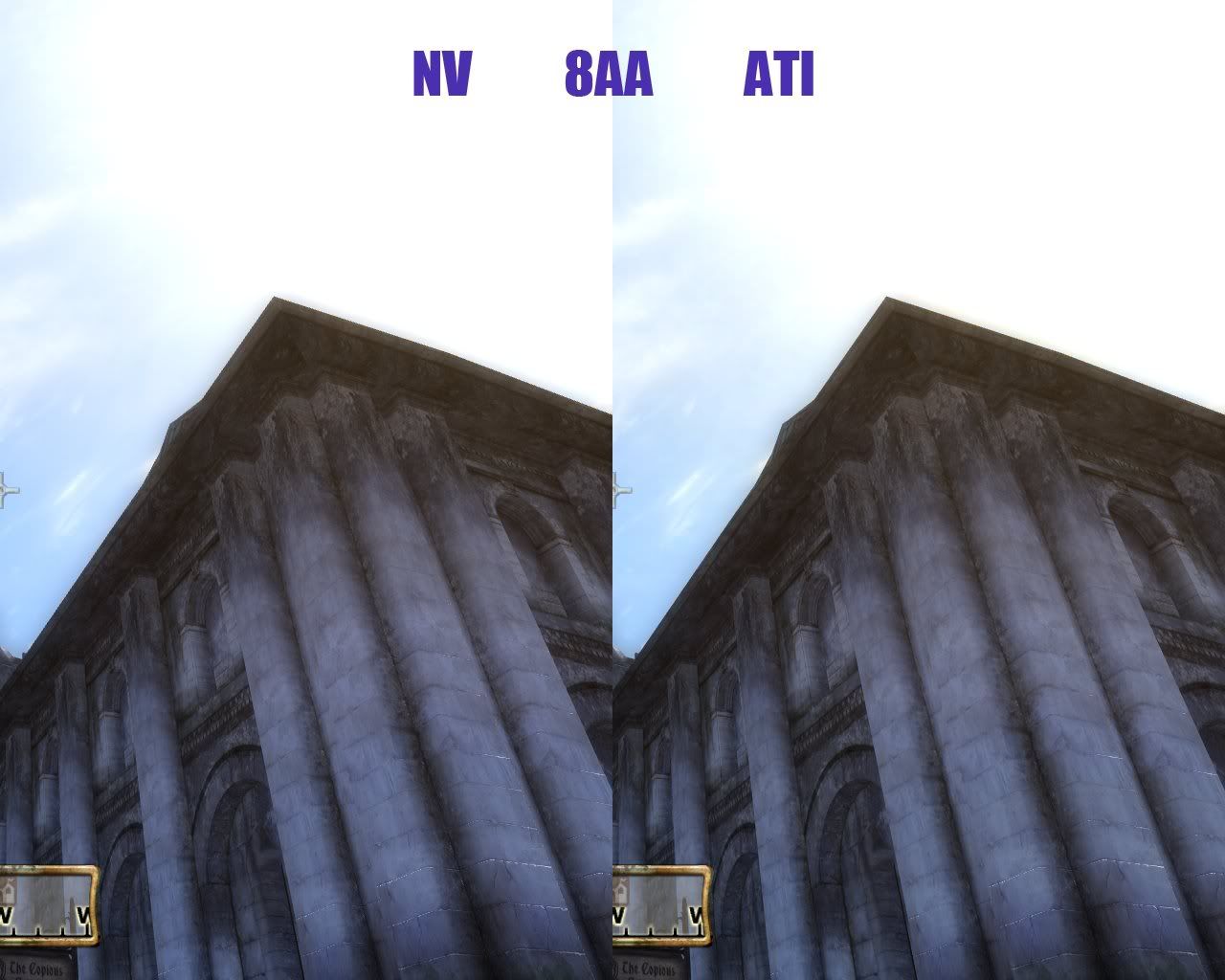

However, ATI has usually had better AA quality, and IMO nothing has changed with this generation.

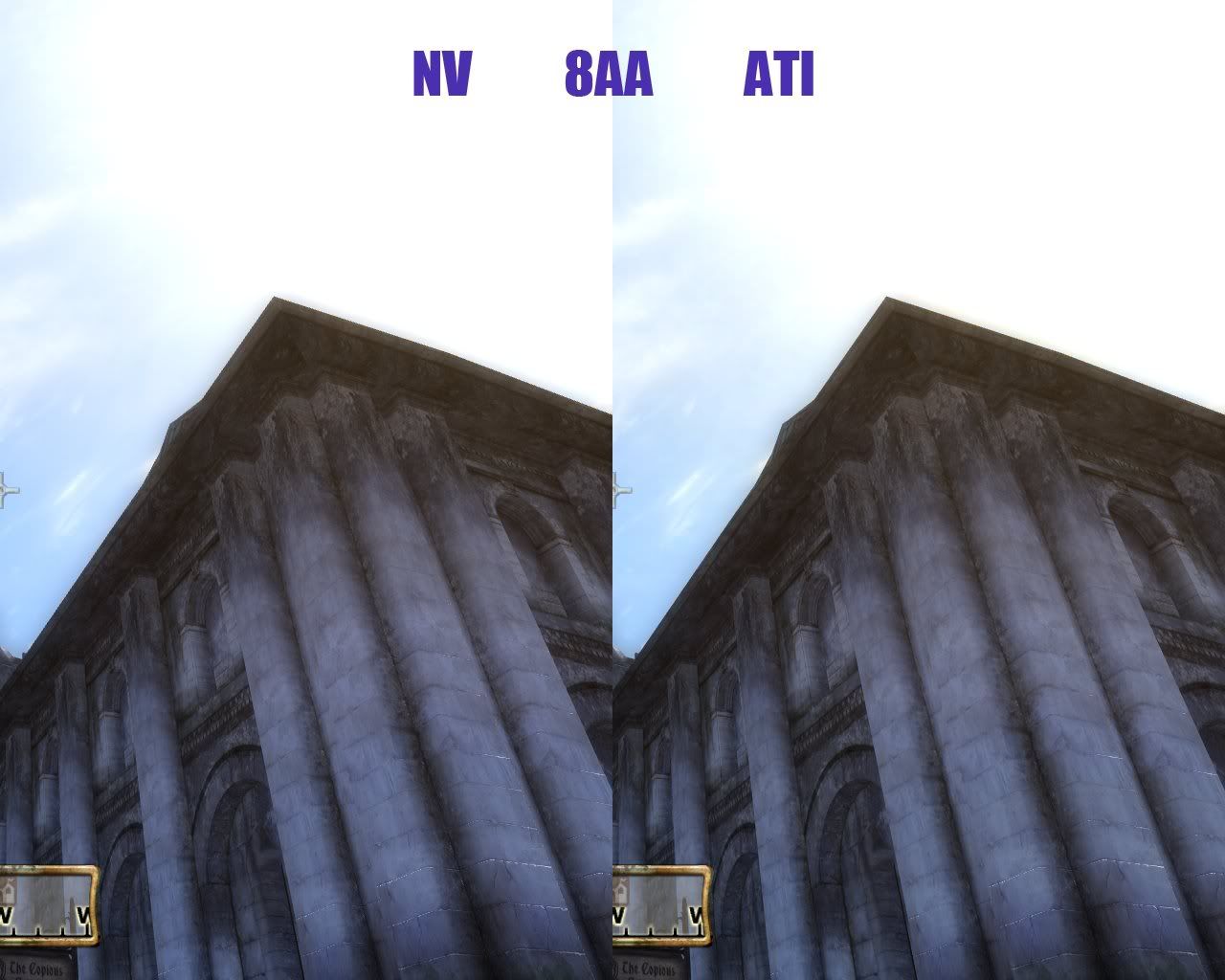

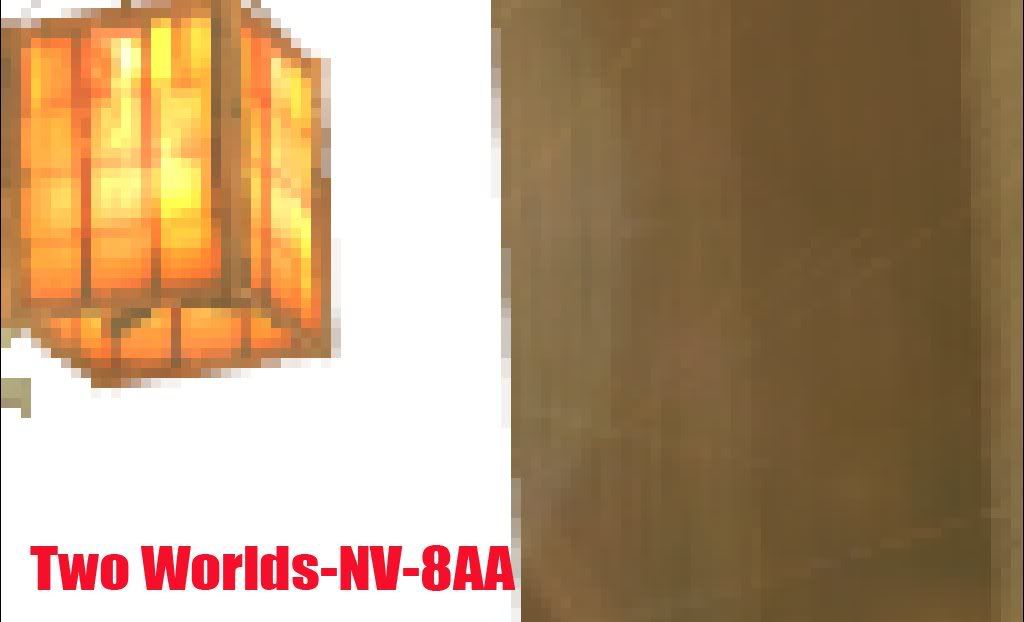

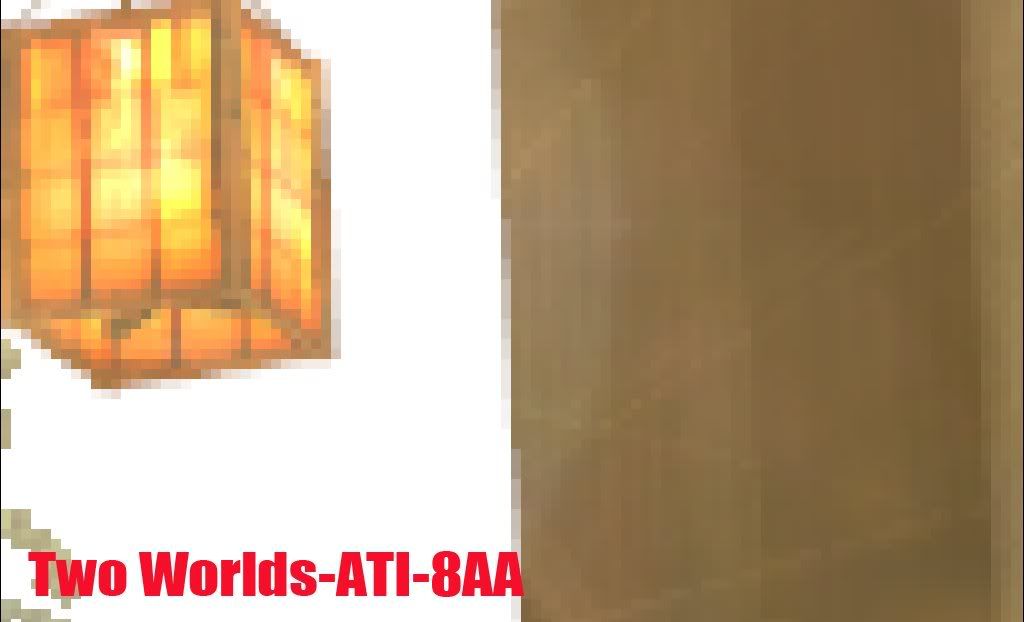

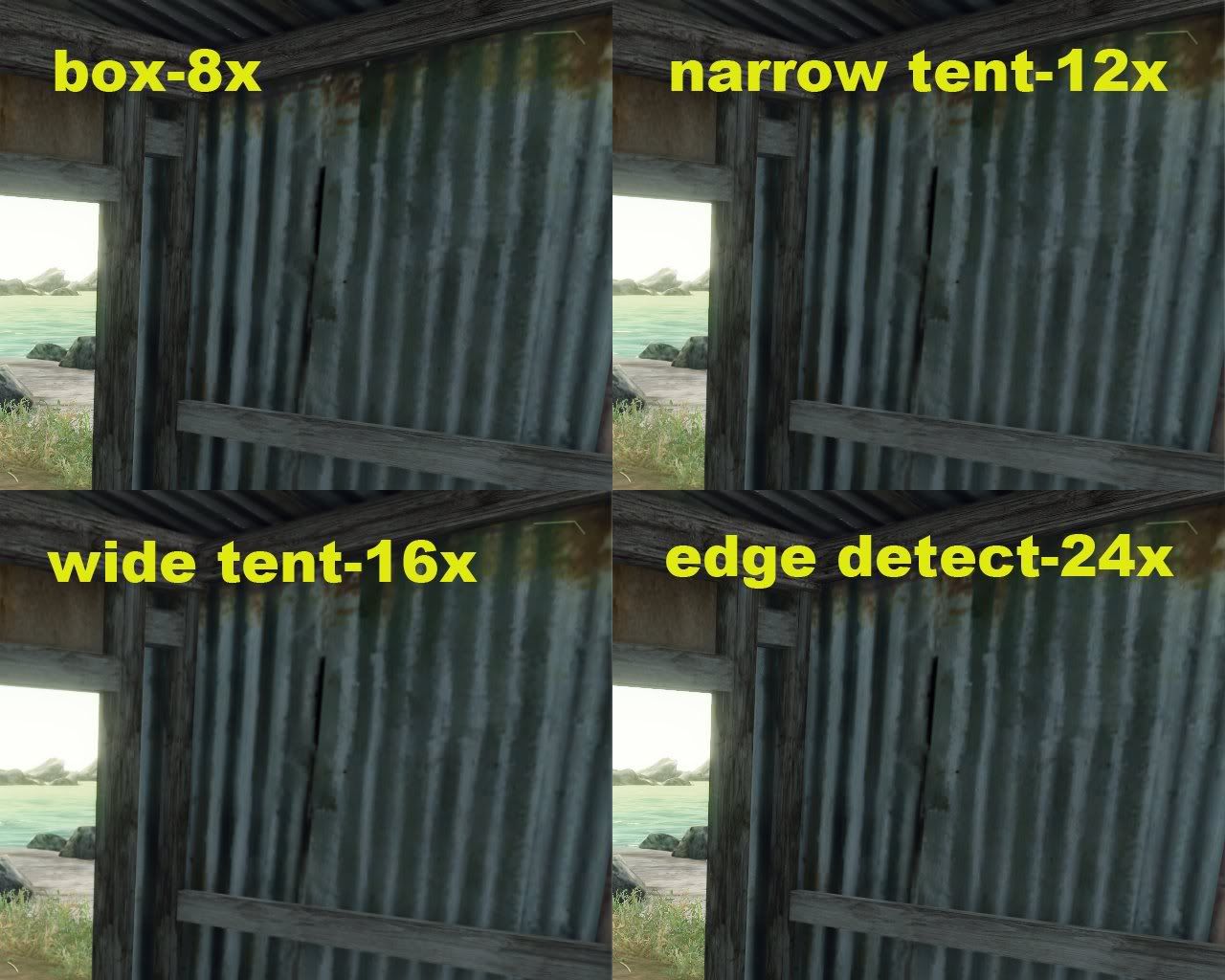

AA comparison of ATI and NV in games

I find that ATI's AA is better than NV's,

but while enjoying a game you may not notice the difference.

Generally speaking, above the 4x AA setting in a game it is hard to spot the difference, but if you have the patience to take a close look, you will find they are not always the same!

I use two computers to test the AA quality of ATI and NV,

but with the same monitor (BenQ FP737s).

computer 1:

E6300, 2GB RAM, HD4850

computer 2:

E8400, 2GB RAM, MSI 8800GT OC

The games I tested were TES4:OBLIVION and Two Worlds,

AF was set to 8x in the driver, and AA was set to 2x, 4x, and 8x,

so I can compare the difference.

Although the drivers of ATI and NV both have advanced settings in AA,

I ignore them. I only test the general settings of AA that everybody may use in game,

and many benchmarks found on the web may do the same!

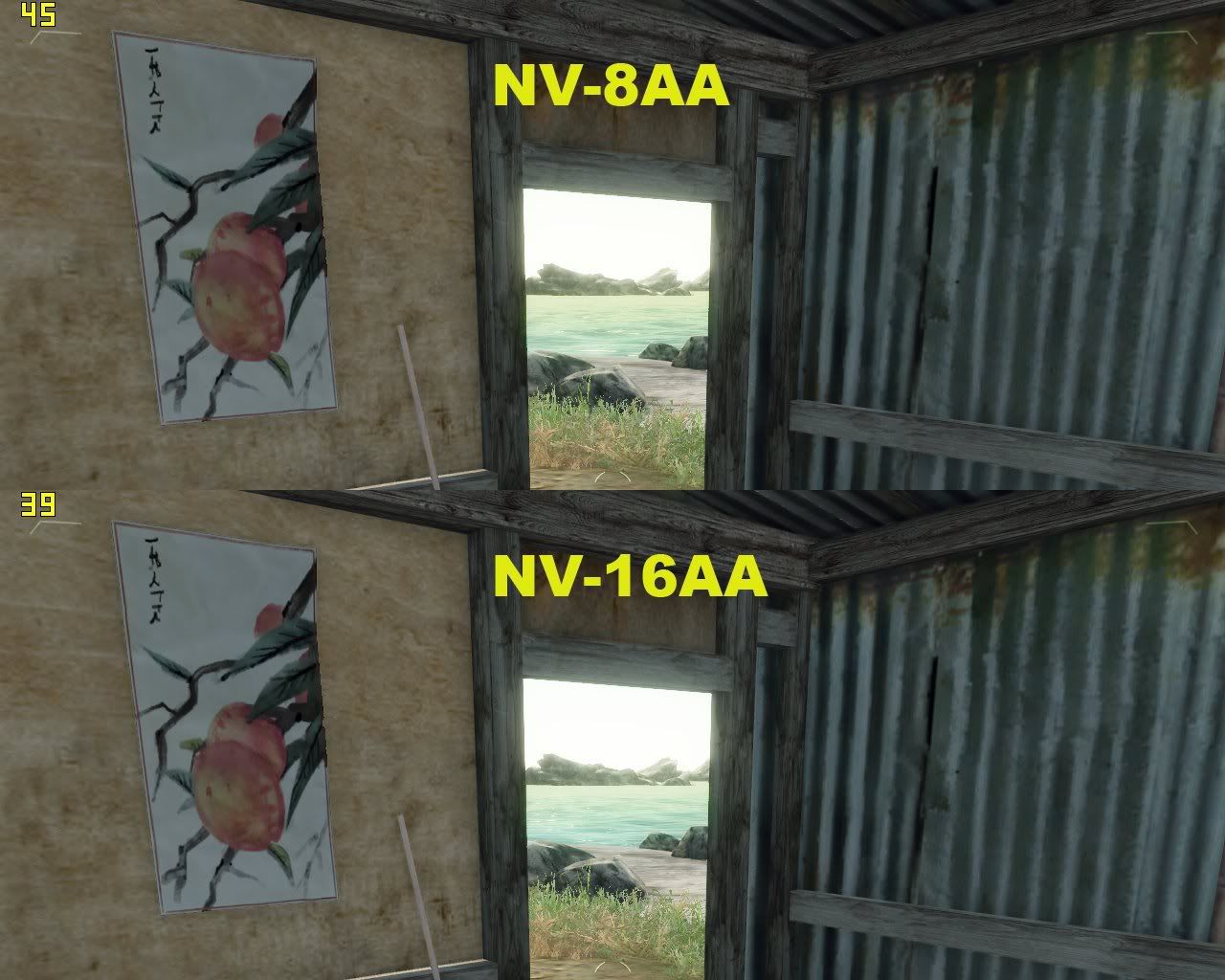

oblivion

8aa

oblivion