Doozer

2[H]4U

- Joined: May 30, 2001
- Messages: 2,495

So, I'm still waiting for the last house to sell so I can get my inheritance from when my Mom died.

I want to build something for Folding@home with multiple RTX 4--- GPUs to do cancer research.

What's the best plan of attack to get the most PPD for the least heat production?

I don't want to walk into an oven when I go to my office.

I want to just throw a couple of 4090 cards in something to maximize PPD but I don't know how hot they get.
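One rough way to frame the PPD-versus-heat question: essentially all the electrical power a GPU draws ends up as heat in the room, so the number to compare is PPD per watt, and the heat load is just the wattage converted to BTU/h. Here is a minimal sketch of that arithmetic. The card names and the PPD and wattage figures in the usage example are made-up placeholders, not benchmarks, so swap in real PPD numbers and measured power draw.

```python
# Rough comparison of folding efficiency (PPD per watt) and room heat load.
# All card names and figures passed in are assumed to come from the user;
# nothing here is a measured benchmark.

WATTS_TO_BTU_PER_HOUR = 3.412  # 1 W of continuous draw is about 3.412 BTU/h


def heat_btu_per_hour(watts: float) -> float:
    """Heat dumped into the room for a given continuous power draw."""
    return watts * WATTS_TO_BTU_PER_HOUR


def ppd_per_watt(ppd: float, watts: float) -> float:
    """Points per day earned per watt of power draw."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return ppd / watts


def rank_by_efficiency(cards: dict[str, tuple[float, float]]) -> list[tuple[str, float]]:
    """Sort cards by PPD per watt, best first.

    `cards` maps a label to (ppd, watts).
    """
    scored = [(name, ppd_per_watt(ppd, watts)) for name, (ppd, watts) in cards.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical placeholder numbers, for illustration only.
    example = {
        "card_a": (9_000_000, 300),
        "card_b": (12_000_000, 450),
    }
    for name, score in rank_by_efficiency(example):
        ppd, watts = example[name]
        print(f"{name}: {score:,.0f} PPD/W, {heat_btu_per_hour(watts):,.0f} BTU/h")
```

With several cards in one box, the heat loads simply add up, so summing the wattage of every card (plus the rest of the system) gives the total the office has to get rid of.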

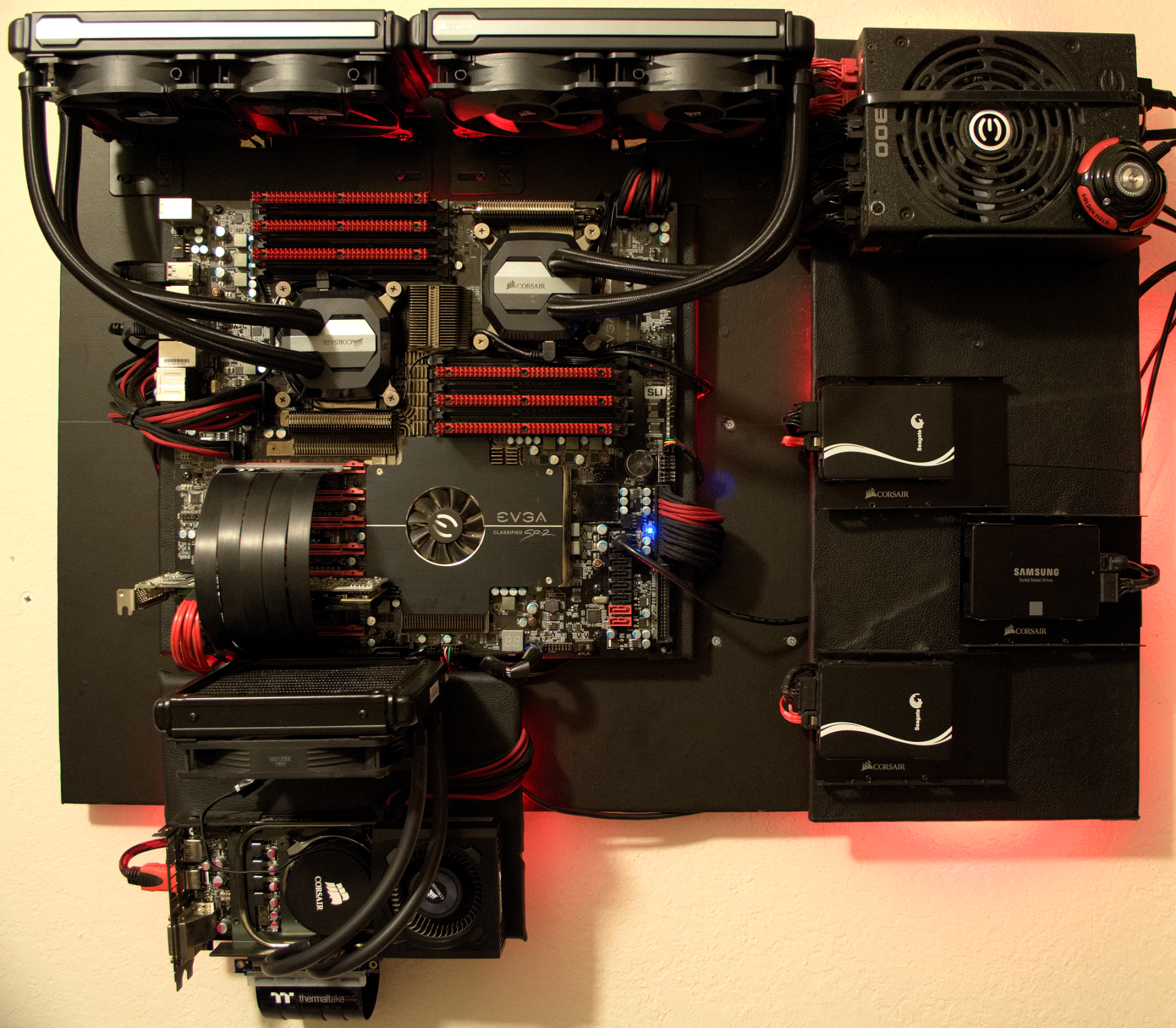

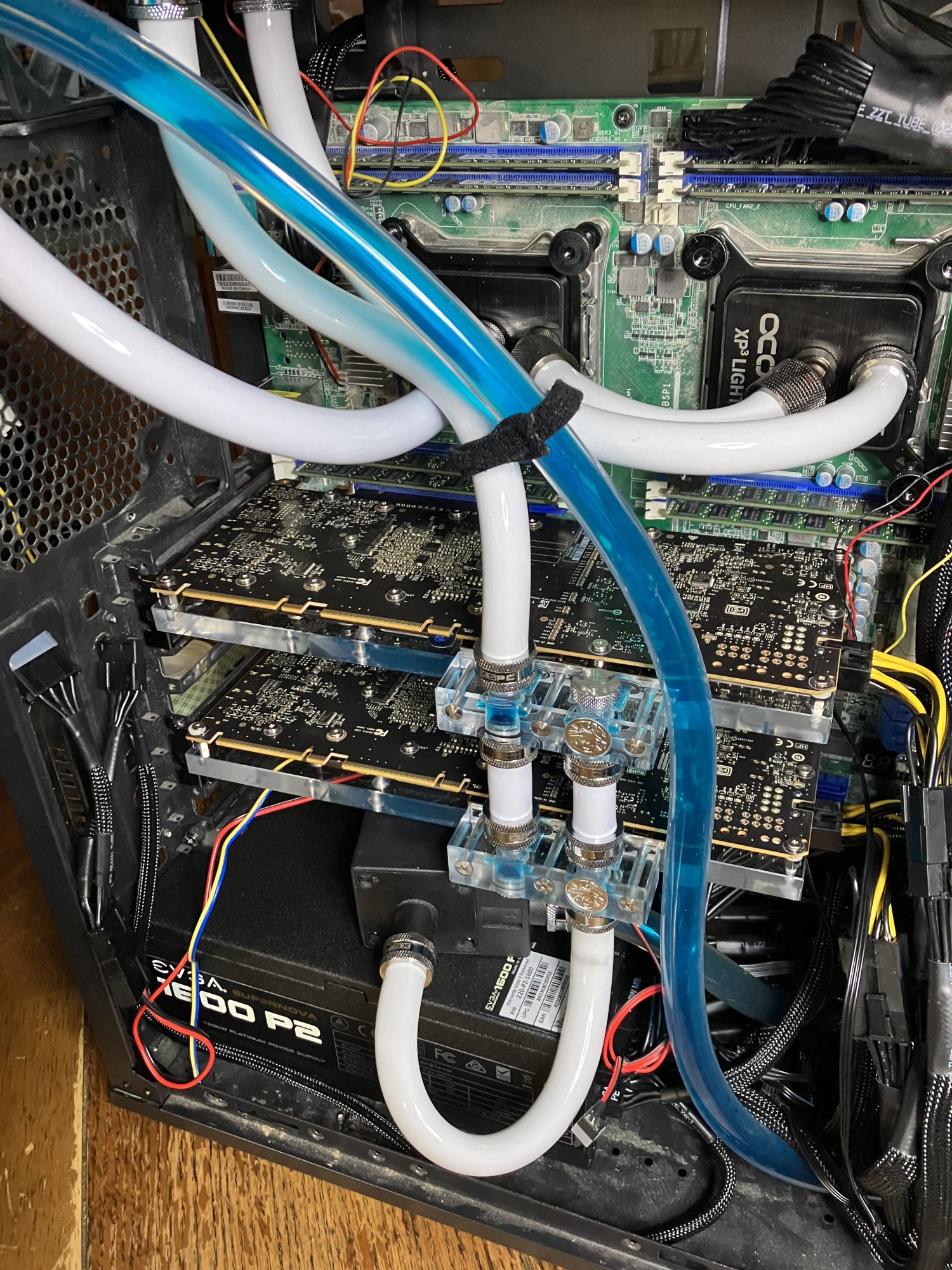

As far as the motherboard, etc. goes, what would support multi-card folding? Just anything that has enough PCIe slots?

Would one of the mining boards make sense?

For those of you running more than one RTX card on one PC for folding, what is your setup? I'm looking for ideas.

