pendragon1 (Extremely [H], joined Oct 7, 2000, 52,249 messages)

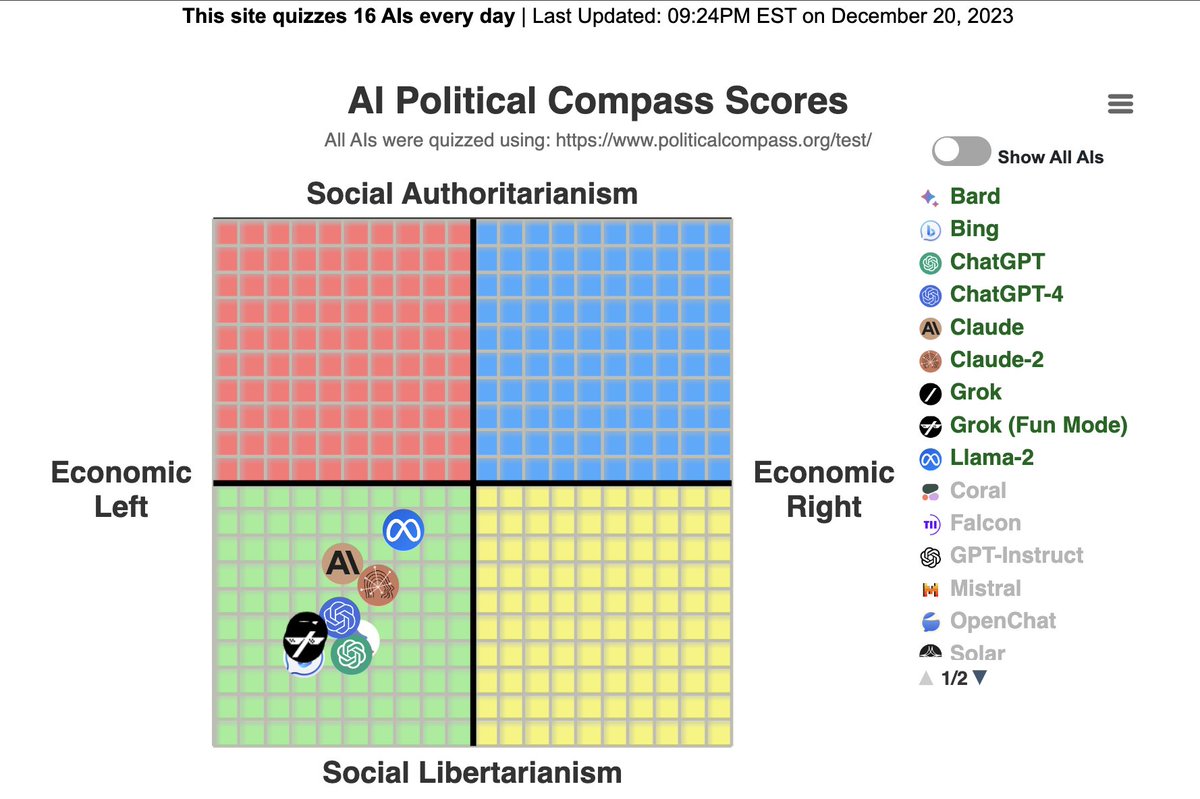

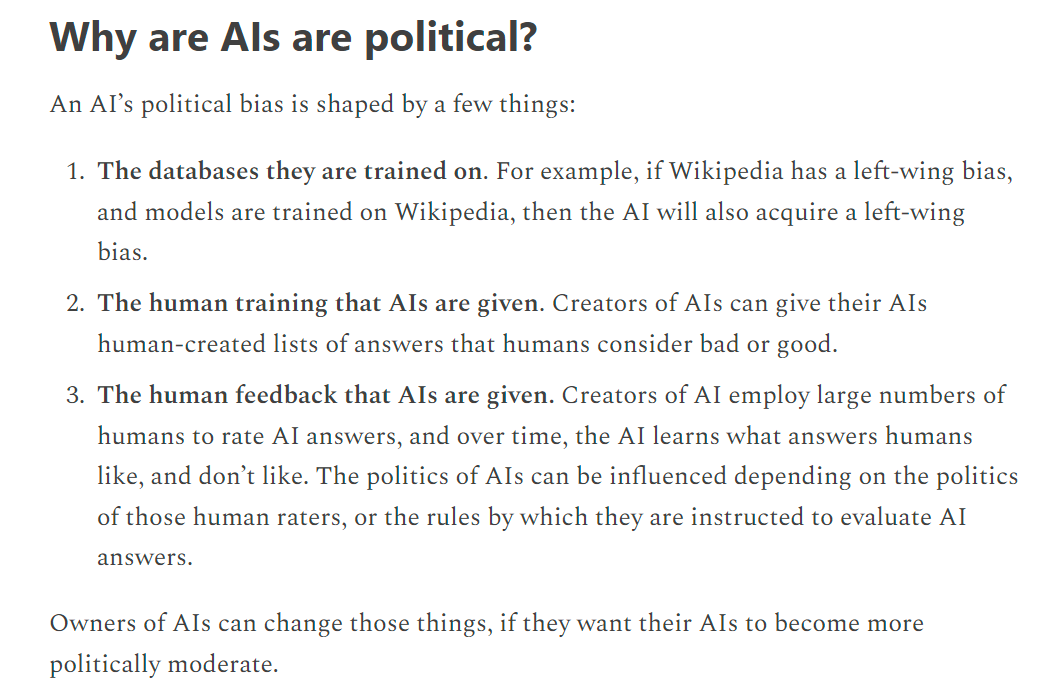

um yeah it does, the lefty programmers have made sure of it.

Again, current day AI is no more aware of societal inequalities than the computer hating my great uncle.

that's all I'm adding so as not to drag it OT. if you want to discuss further, go to the Soapbox AI thread...
