JHefile

Necrophilia Makes Me [H]ard

- Joined: Jun 22, 2003
- Messages: 1,180

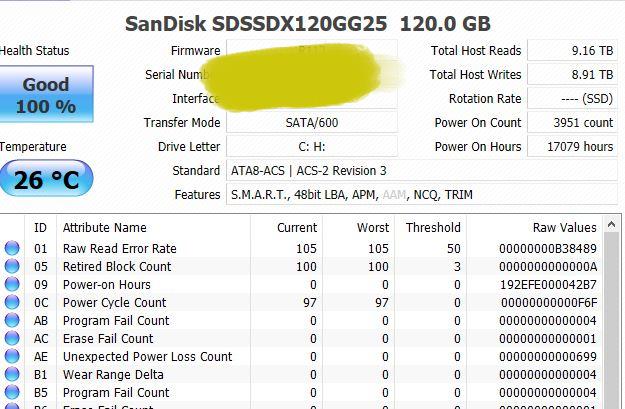

Well, mine never did. I used it like I abused it.

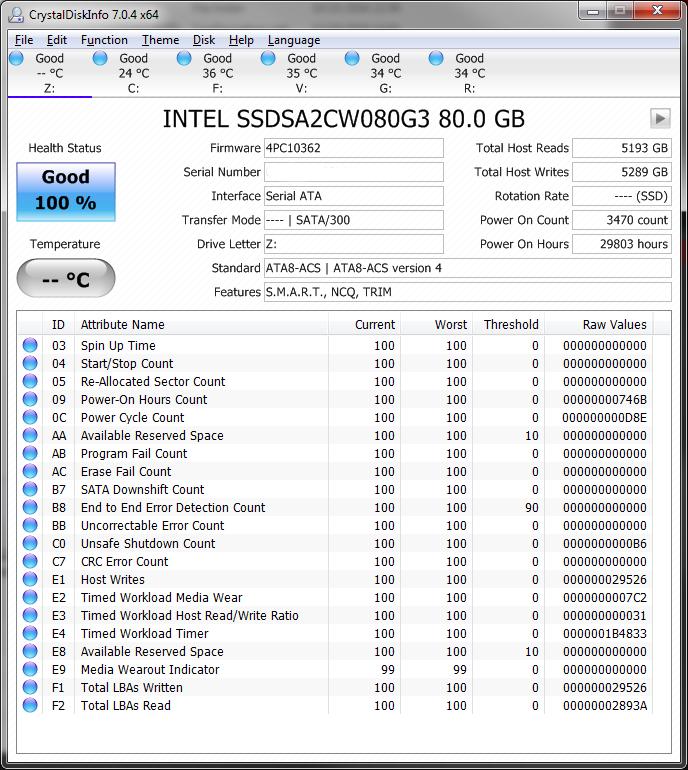

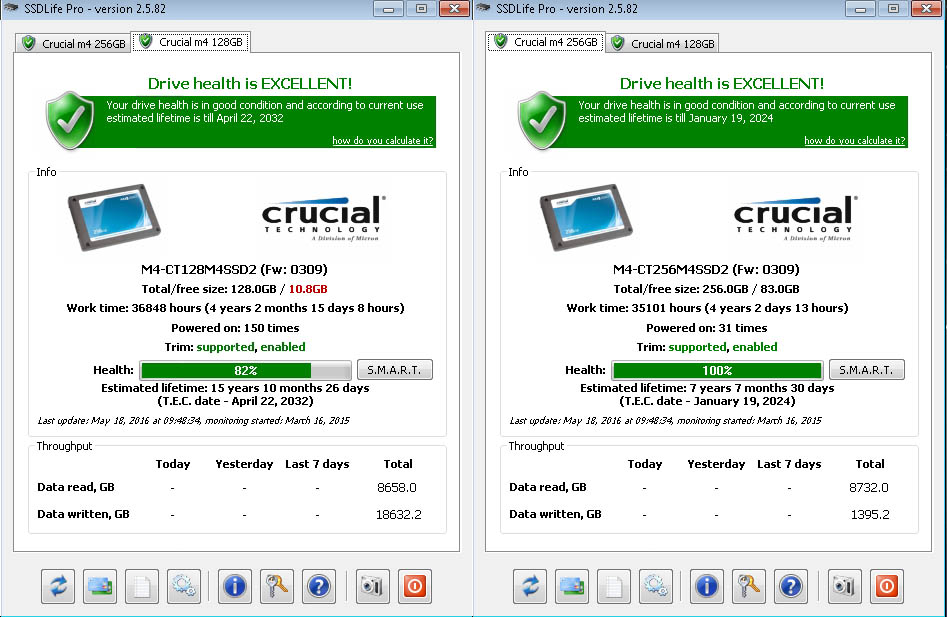

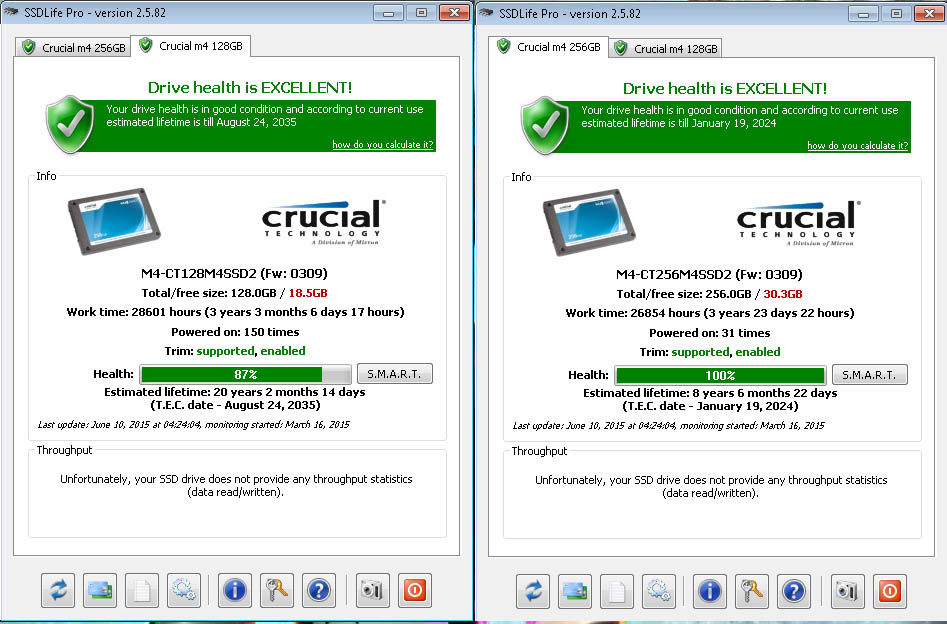

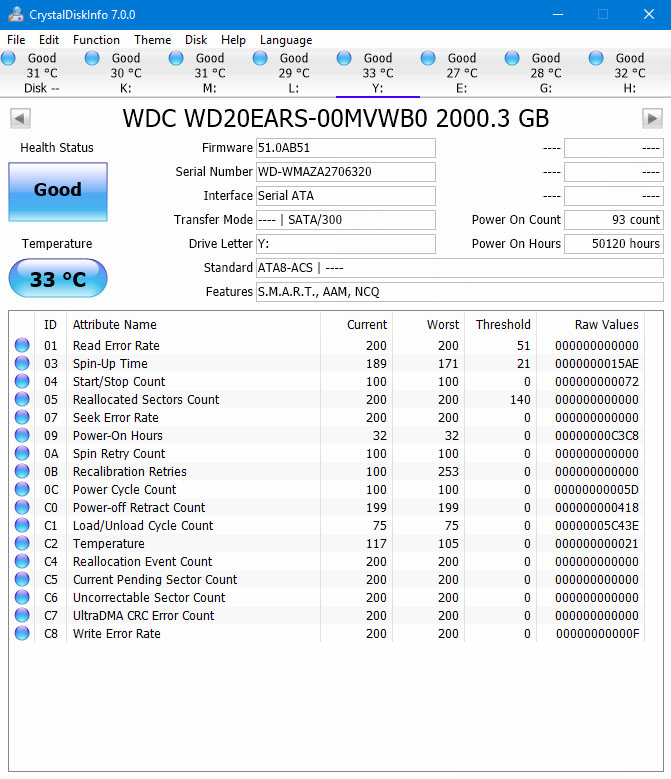

Look how many hours and how many turn-ons I have on my SSD, and you tell me I can't use my SSD because it's as tender as a baby deer.
