Hello!

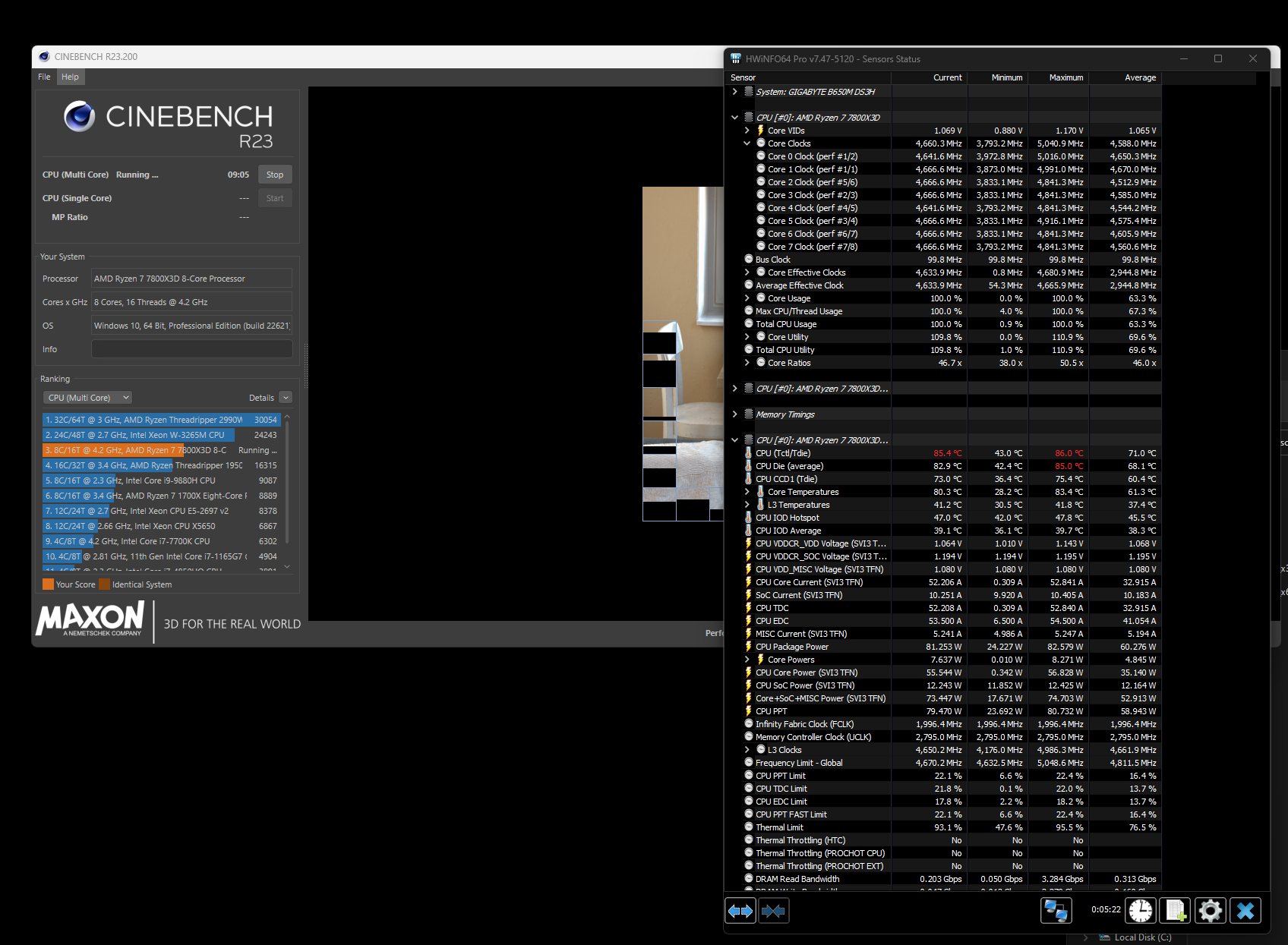

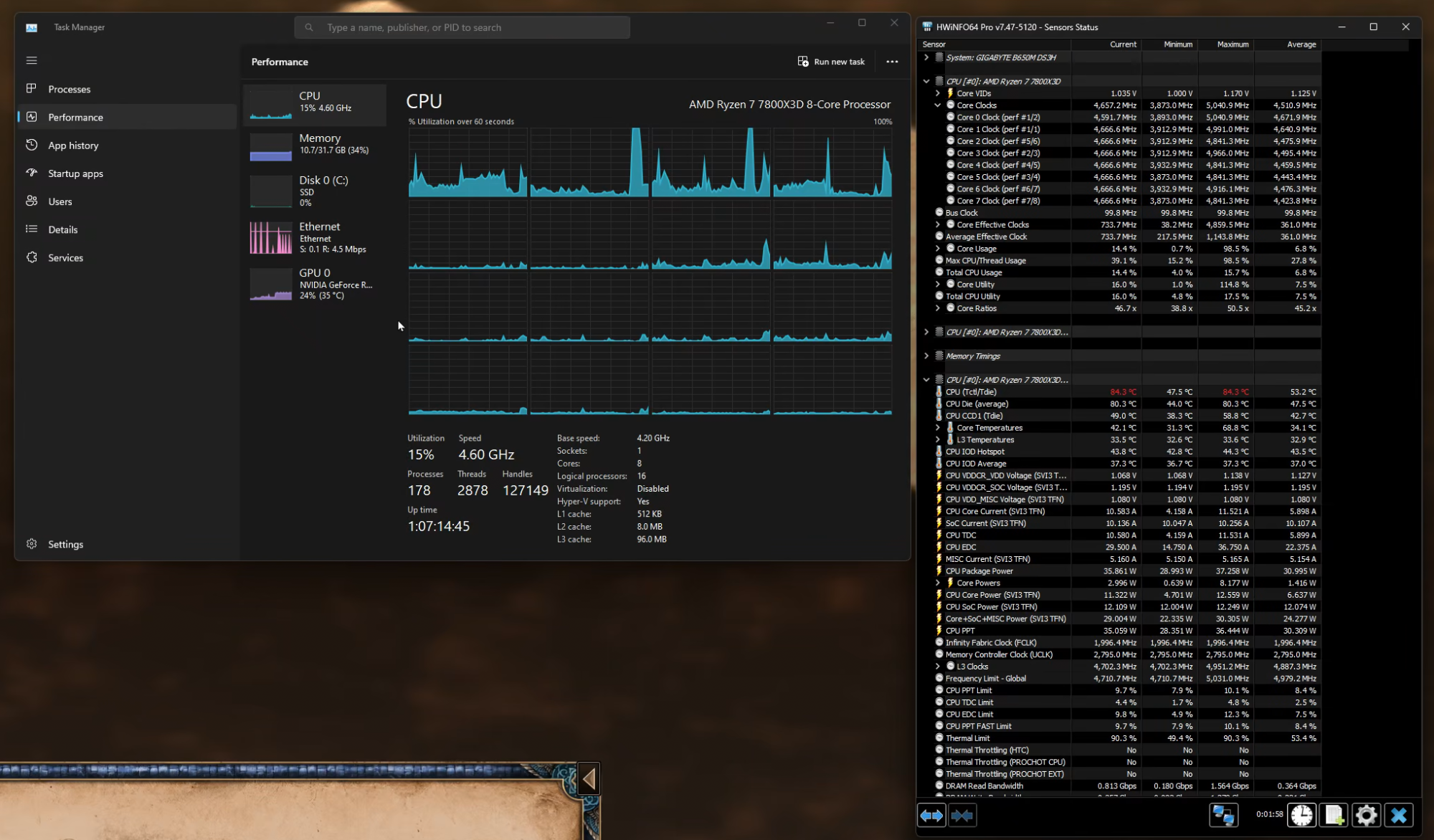

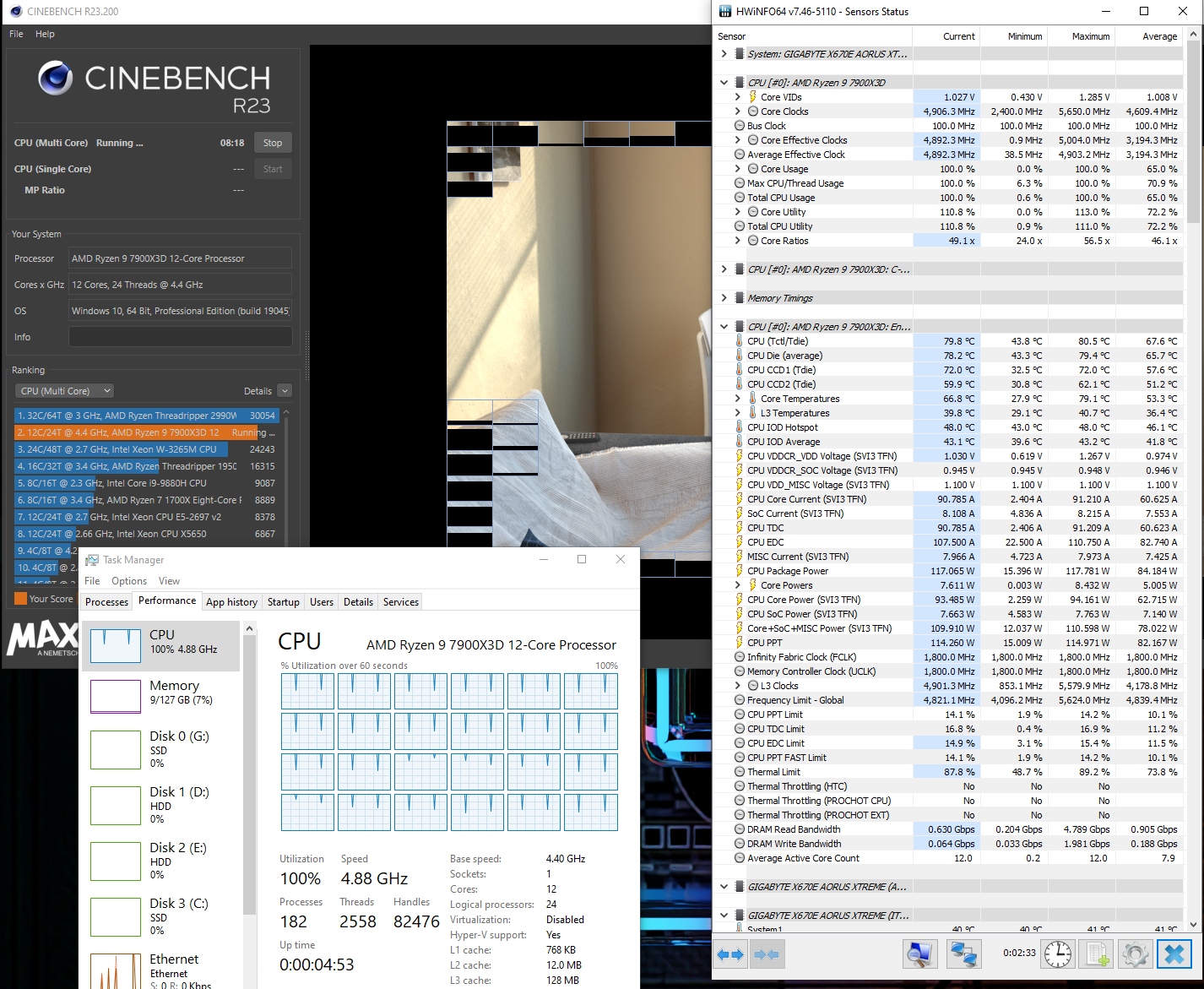

I accidentally stumbled upon an interesting X3D torture scenario: a single-threaded game loading screen that, for me, pushes temperatures as high as or higher than the CR23 multi-core test (which until now has been the hottest test for a 7800X3D).

What you need: Age of Empires 2 DE (I have all DLCs and was testing this in Return of Rome, but I highly doubt that matters) and an X3D CPU (well, any CPU I guess, but nothing special happens on my Intel rigs).

Setup:

- Enable and install the free UHD graphics DLC

- Host a single-player or multiplayer skirmish match with a random map and standard settings

- Fill ALL slots with a random AI

- Set difficulty to Extreme

- Pick a random map type at maximum size (Megarandom + Ludicrous is perfect for this)

Then press Start and watch your temps and fan speeds; the spike only lasts a few seconds. I'm running the game off a Gen 4 NVMe drive, but I'm pretty sure that doesn't matter one bit.

I don't know how this works, but it ramps my CPU fans up to practically 100% and HWiNFO records CPU temperatures around 86 °C. (Fractal Torrent case, PA120 SE cooler, 5600 MT/s CL28 RAM with SoC voltage at 1.2 V.)

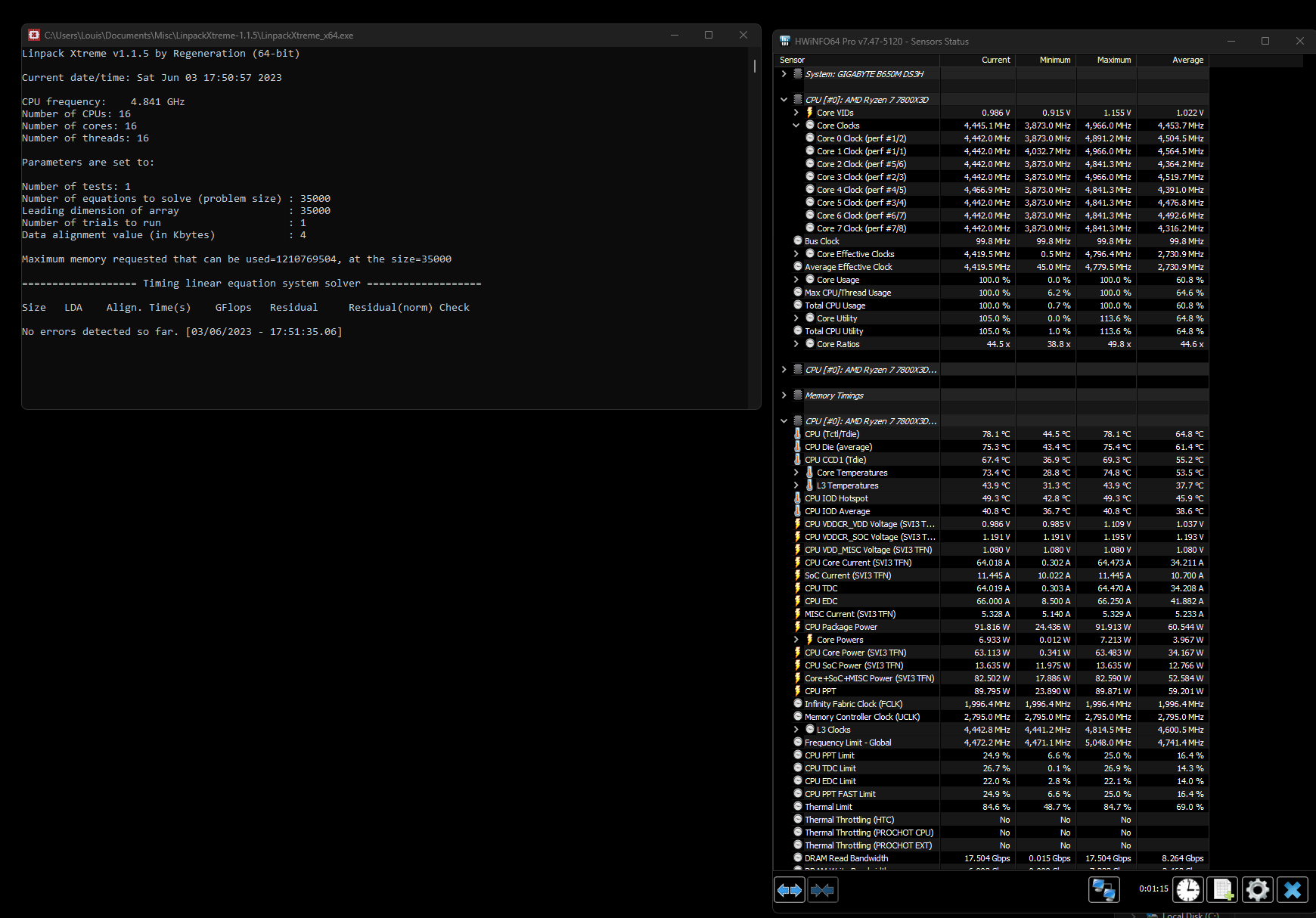

Fan speed and temperature will of course vary depending on your silicon and your preferred fan curve, but you get the idea. The really crazy part is that power draw is very low, around 35 W, and Task Manager shows one core pegged at 100% and nothing else!

I'm not getting any crashes or errors; I'm just baffled by this unique behaviour and would love to hear results from other people. I also really wonder how this is even possible. Is the V-Cache just getting absolutely thrashed in this test, or something else?!

Thanks!
