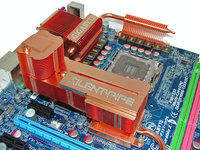

Overclocker der8auer contends that X299 boards have bad VRM coolers: temperatures reached as high as 100°C on the board he tested. He estimates that the VRMs are probably at Tjunction max, and says the single 8-pin connectors on PSUs can hit 60°C when overclocking the 10-core models. “If I take off the heatsink and just put a fan over it, there's no problem whatsoever.”
