I've always wondered why AMD cards look so great on paper but can't take the crown.

I know architectures are not directly comparable, but the Radeon VII looks like a monster (as did Vega, for that matter). Spec-wise it looks like it could pretty much kill the RTX 2080, but it only manages to keep up with it.

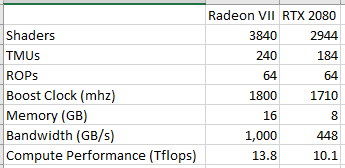

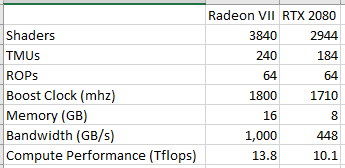

Just take a look at the specs.

I mean the Radeon VII has better specs on all counts but ROPs. It even has twice the memory size, more than twice the bandwidth and 38% more compute power.

Then again, it draws more power: 300 W, compared to 225 W for the RTX 2080 and even 260 W for the RTX 2080 Ti.
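
As a rough back-of-the-envelope check, here is a small sketch that uses only the figures quoted in this post (the 38% compute advantage and the 300 W vs 225 W power numbers). It assumes those are board power ratings rather than measured draw, and that "compute power" means peak on-paper throughput. The function name is just made up for illustration.

```python
# Figures quoted in the post; assumed to be board power ratings, not measured draw.
RADEON_VII_WATTS = 300
RTX_2080_WATTS = 225
COMPUTE_RATIO = 1.38  # "38% more compute power", as stated in the post


def paper_perf_per_watt_ratio(compute_ratio, watts_a, watts_b):
    """On-paper compute per watt of card A relative to card B."""
    power_ratio = watts_a / watts_b
    return compute_ratio / power_ratio


power_ratio = RADEON_VII_WATTS / RTX_2080_WATTS
advantage = paper_perf_per_watt_ratio(COMPUTE_RATIO, RADEON_VII_WATTS, RTX_2080_WATTS)

print(f"power ratio: {power_ratio:.2f}x")
print(f"paper compute per watt: {advantage:.3f}x")
```

By these numbers the Radeon VII is rated for about a third more power, so its 38% on-paper compute lead shrinks to only a few percent per watt, and that is before looking at actual game performance.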

AMD still has a lot to learn on performance and efficiency.
