Gottfried Leibnizzle

Limp Gawd

- Joined

- Apr 29, 2015

- Messages

- 253

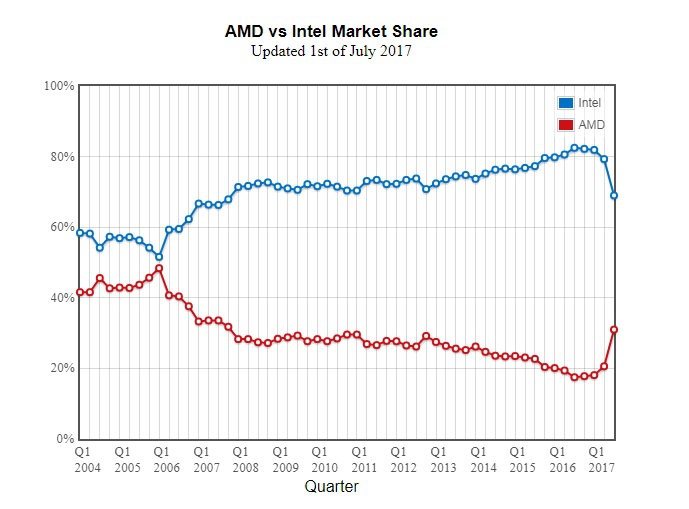

For MOST of the single-core era, AMD's CPUs were faster than Intel's, and when netburst hit, they were STUPID faster.

Okay, chief. 'Tever ya say.



I dunno if they will be able to do 8 Ryzen cores, for heat reasons. I could see 6. Definitely 4.

“From a gaming perspective, do we really need octo-core processors with insanely high clocks?” Eurogamer believes so, and not only for more obvious reasons such as overhead and running games on high-refresh displays: next-gen consoles are expected to get huge CPU upgrades, which will reset the baseline.

Most console ports can easily run at higher than 60fps on PC, even factoring in the oppressive overhead introduced by Windows, but with next-gen likely to feature Ryzen and possibly two quad-core modules for eight cores in total, games will inevitably become much more complex and the PC versions will see system requirements rise significantly for the top-end experience.

developers also asked that the hardware use no more than eight CPU cores because "the consensus was that any more than eight, and special techniques would be needed to use them, to get efficiency."

To answer the question:

Because we were set back 5 years by meltdown / spectre.

P4EE was competitive with A64

All for the low, low price of $999.99!

AMD whooped Intel's butt with Athlon 64. Intel's response was to take illegal actions to keep AMD's products from being bought. This irreparably affected AMD's bottom line and also the way they were perceived by vendors. It also left them without the money to properly fund R&D for future products, leaving them to settle for relatively lower-performing solutions by the time Sandy Bridge came out. AMD is only just now starting to appreciably rebound.

Former [AMD] CFO [Fran] Barton is not convinced that the company has much of a future. “[Even without the lawsuit against Intel,] it wouldn’t have mattered,” he said. “[Sanders] took his shot, and the game’s been played.”

The K8 architecture was successful on the desktop in the form of the Athlon 64 lineup, but it was the Opteron server variants that brought AMD real success in the high-margin market.

[...]

Despite technical successes, AMD's financial situation had become precarious. Processor unit sales were falling, and margins on most chips dropped quickly after 2000. AMD also had problems with producing too much inventory; in the second half of 2002, AMD actually had "to limit shipments and to accept receipt of product returns from certain customers," it announced, because the chips it made weren't selling fast enough. The company had a net loss of $61 million in 2001, $1.3 billion in 2002, and $274 million in 2003.

What was sucking away the company's money? It was those darned fabs, just as Raza had feared. In the company's 2001 10-K, AMD estimated, "construction and facilitation costs of Dresden Fab 30 will be approximately $2.3 billion when the facility is fully equipped by the end of 2003." There was also a $410 million to AMD Saxony, the joint venture and wholly owned subsidiary that managed the Dresden fab.

By the following year, AMD upped its estimated costs to fund Dresden to $2.5 billion and added that by the end of 2001, it had invested $1.8 billion. The estimated costs continued to rise, as per the 2003 10-K: "We currently estimate that the construction and facilitation costs of Fab 30 will be $2.6 billion when it is fully equipped by the end of 2005. As of December 29, 2002, we had invested $2.1 billion in AMD Saxony." That same year, AMD plowed ahead with a new Dresden fab ("Fab 36"), investing $440 million into it by the end of the year.

The money for these huge investments all relied on AMD's ability to sell chips, and AMD's ability to sell chips was made easier by its competitive edge over Intel. Unluckily for AMD, Intel didn't take this challenge lying down.

As Raza tells the story today, his boss insisted on building a fab in Dresden, Germany, over Raza's objections. (That fab, which still operates today as part of AMD spin-off GlobalFoundries, was completed in the spring of 2000.)

"The trouble in the entire economic model was that AMD did not have enough capital to be able to fund fabs the way they were funding fabs," Raza said. "The point at which I had my final conflict was that [Sanders] started the process of building a new fab with borrowed money prematurely. We didn't need a fab for at least another year. If we had done it a year later, we would have accumulated enough profits to afford the fab in Germany. He laid the foundation for a fundamentally inefficient capital structure that AMD never recovered from. I told him: don't do it. I put the [purchase orders] on hold. He didn't tell me and accelerated the entire process."

Intel still owes money to AMD, from the resulting legal actions.

This is a myth that has been debunked by AMD people.

What is he mentioning? He is mentioning the bad decisions taken by AMD managers, including the building of fabs.

It is time to stop repeating old myths again and again. What Intel did was both unfair and illegal, no one can deny that, but AMD's finances were more impacted by the dozens of mistakes made by AMD managers and engineers than by Intel's illegal practices.

Also, it is interesting how this illegal action by Intel is mentioned again and again, but none of AMD's illegal actions are mentioned. What about when AMD used illegal reverse engineering practices to copy Intel chips? What about when AMD violated the x86 agreement with Intel?

Another myth spread by the suspect media.

Intel did pay all the money to AMD. What Intel didn't pay was the EU fine to the EU. Intel appealed the EU ruling and won the right to get the whole issue reviewed.

https://www.ft.com/content/f460ef98-930f-11e7-bdfa-eda243196c2c

I repeat: this is a dispute between the EU and Intel, not between AMD and Intel.

Always the same song:

- Bulldozer will bring eight cores to mainstream. Soon games will require eight cores.

- Piledriver will bring eight cores to mainstream. Soon games will require eight cores.

- Next gen consoles (PS4 and Xbox1) will have eight cores. Soon games will require eight cores.

- Mantle will free multithreading API limits. Soon games will require eight cores.

- DX12 will free multithreading API limits. Soon games will require eight cores.

- Vulkan will free multithreading API limits. Soon games will require eight cores.

- Zen will bring eight cores to mainstream. Soon games will require eight cores.

- Zen+ will bring eight cores to mainstream. Soon games will require eight cores.

- Next gen consoles will have eight cores. Soon games will require eight cores.

I'm sure you want to believe that. But AMD was not struggling to survive, they were fighting and striving to win. If Intel hadn't brought out their "mother of all programs" that was deemed illegal, AMD would have taken market dominance.

Intel was contractually REQUIRED to give AMD their designs for creating x86 processors as per Intel's agreement with IBM. By IBM's contract, Intel had to allow AMD to duplicate the chips as a second source provider. Intel violated this contract, and did not share designs with AMD, and AMD in-turn, reverse-engineered Intel's tech, and actually made it faster.

Source

The lawsuit is between the EU and Intel, over Intel's anti-competitive actions. You left that part out.

And in a way it changed gaming. When it came out it was far behind a 2500. By the end of those few years, it had closed the gap to almost nothing. Windows 10 had a lot to do with this.

8 cores is mainstream. As much as 4 and 6 are. Even if games use fewer cores, side applications, streaming and robust chat rooms make it preferable.

The implication was inside of a Playstation.

Ryzen 7 2700x 8 cores/16 threads. Available everywhere CPUs are sold for approx. $300.

Now continue to the part that says how Intel sued AMD in 1990 for cloning and finally won in 1996 the right to stop AMD copying its designs. The Am5x86, an Intel 486 clone, was the last clone by AMD. The next chip, the K5, was an in-house design at AMD. And what is curious is that the clones were fast and popular in the market, but the first all-AMD design failed because it "was late to market and did not meet performance expectations" and never gained the acceptance among large computer manufacturers that the 486 clones had.

1968--Intel is founded by Bob Noyce and Gordon Moore.

1969--AMD is founded by Jerry Sanders along with a team of former Fairchild Semiconductor employees.

Early 1980s--IBM chooses Intel's so-called x86 chip architecture and the DOS software operating system built by Microsoft. To avoid overdependence on Intel as its sole source of chips, IBM demands that Intel finds it a second supplier.

1982--Intel and AMD sign a technology exchange agreement making AMD a second supplier. The deal gives AMD access to Intel's so-called second-generation "286" chip technology.

1984--Intel seeks to go it alone with its third-generation "386" chips using tactics that AMD asserts were part of a "secret plan" to create a PC chip monopoly.

1987--AMD files legal papers to settle the 386 chip dispute.

1991--AMD files an antitrust complaint in Northern California claiming that Intel engaged in unlawful acts designed to secure and maintain a monopoly.

1992--A court rules against Intel and awards AMD $10 million plus a royalty-free license to any Intel patents used in AMD's own 386-style processor.

1995--AMD settles all outstanding legal disputes with Intel in a deal that gives AMD a shared interest in the x86 chip design, which remains to this day the basic architecture of chips used to make personal computers.

1999--Required by the 1995 agreement to develop its own way of implementing x86 designs, AMD creates its own version of the x86, the Athlon chip.

2000--AMD complains to the European Commission that Intel is violating European anti-competition laws through "abusive" marketing programs. AMD uses legal means to try to get access to documents produced in another Intel antitrust case, this one filed by Intergraph. The Intergraph case is eventually settled.

2003--AMD's big technology breakthrough comes when it introduces a 64-bit version of its x86 chips designed to run on Windows, beating Intel, which for the first time has to chase AMD to develop equivalent technology. AMD introduces its Opteron line of chips for powerful computer server machines and its Athlon line for desktops and mobile computers.

If the new consoles are going to have an 8 core, 16 thread CPU, then the new 8 core 8 thread Intel i7 is just not going to cut it, IMO. I'm not quite sure what Intel is thinking with that move. Maybe it's because they don't have a CEO right now? It's a bone headed move. And $450 is too expensive for the new i9. I don't mind I guess. It makes my 8700k relevant for one more year because Intel is getting greedy.

Gaming didn't change in the way that fans claimed. Back then fans claimed that the FX-8350 was the future and that the i5-2500k would soon be obsolete for gaming. Even today the i5-2500k is better for gaming in AMD-favorable titles such as BF1.

We are discussing games.

Not even close to what I said. Things can be ahead of their time and the industry does not turn on a dime. It evolves. But if you want your little cookie: yes, the 2500 is still better. My point, which you seem to have misconstrued, is that the gap closed significantly!

No, it was not - and lord were those expensive (not in a competitive way) CPUs for what they were.

P4EE was competitive with A64

You see the world through odd color glasses. Any improvement for any CPU is good. The inertia is there. Even if AMD leads from below, the 8 core segment will grow at some rate.


Eurogamer seems to forget that when the PS4 and the Xbox1 were in the blackboard phase, game programmers were asked about the core configuration and

The implication was inside of a Playstation.

*Ryzen 2 isn't a large enough advancement to squeeze 8 cores into a playstation. Heat/power/and price are all working against that.

**I think they will probably shoot for a six core. Albeit cut down/modified, since it will be an APU. And probably clocked around 3 ghz, to keep the power and heat down.

It's important to point out that even though the current generation of consoles have an 8-core APU, only 6 of them are available to games. The PS4 unlocked the ability to use a seventh a couple years ago. I'm not sure if the Xbox One followed suit. Seems to me that the 6C/12T 8700K is the real sweet spot if that is the case.

I'm not convinced.

For years now we have heard fanboys decrying all those lazy developers, and claiming that if they would only do their job, and if there were only enough widespread adoption of many core systems, we'd see games and engines that with perfect efficiency could spread across limitless numbers of CPU cores.

Unless we have some major breakthrough in technology (sub instruction processing, chiplets, etc.) I just don't think this will be the case.

True multithreaded coding is simply not possible on a majority of code, no matter how hard you try. It results in thread locks, performance degradation and crashes. There are some tasks for which it works very well, when you have many small repeatable tasks, like rendering or encoding type activities. In games, graphics rendering is a good example of this, but the GPU is already doing this way more effectively than any CPU could. Physics processing is also an area which could be multithreaded very well. This is why we have seen so many GPU physics implementations (PhysX, OpenCL based, etc.). The rest of the tasks a game engine does are trickier, because they have dependencies and could result in thread locks. DX12 also does a good job of multithreading the CPU tasks responsible for feeding the GPU with the data it needs in order to render. The main game engine, NPC AI, audio processing, etc., however, are in most cases not going to be that easy, or even possible, to multithread at all.
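To make the distinction above concrete, here is a minimal sketch in Python. Everything in it is hypothetical (the function names, the toy particle model, the made-up event rule), and it only illustrates the structure of the two kinds of work, not real engine code. Note also that CPython threads won't actually speed up CPU-bound work like this; the point is which work can be handed out at all.

```python
from concurrent.futures import ThreadPoolExecutor


def integrate_particle(particle, dt=0.016):
    """Independent work: each particle only reads its own state."""
    position, velocity = particle
    return (position + velocity * dt, velocity)


def step_particles_parallel(particles, workers=4):
    """Many small, independent tasks: safe to hand out to a pool of workers."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in input order, whichever worker ran them.
        return list(pool.map(integrate_particle, particles))


def run_game_logic(events, state=0):
    """Dependent work: every event reads the state left by the previous one,
    so the steps have to run in order on a single thread."""
    for event in events:
        # Made-up rule: odd events double the state and add, even ones subtract.
        state = state * 2 + event if event % 2 else state - event
    return state
```

The particle updates give the same answer no matter how they are split across workers, while `run_game_logic([1, 2, 3])` and `run_game_logic([3, 2, 1])` give different results, which is exactly why that kind of loop can't simply be chopped up and run out of order.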

Of course, there will be some exceptions. Civilization V and VI, for instance, are able to effectively multithread the end of turn times, because these can be effectively broken down into subtasks that don't strongly depend on each other (but even then, the engine sometimes locks, and you can tell it sits there a while not really sure what to do until - presumably - a watchdog timer kicks in, kills the tasks that have been running too long, and restarts them, hoping it doesn't lock this time).

This is why you see the current patterns in CPU loads in most games. You usually have one core either pinned or with very high CPU load. This is the main game thread. It usually can't be multithreaded due to all the state dependencies. Then you have one or two additional threads with a smaller (20-30%) amount of load, which are handling other spun-off tasks that are single threaded, but separate from the main process. This could be audio processing, AI processing, or poorly coded physics. Then you have a few more cores with low 5-10% CPU loads. These cores are running the code that can be properly multithreaded, like properly coded physics, DX12 draw calls, etc. It's not a significant portion of the load. Sometimes in some titles, these will spread out over all the cores in a system. Other times, depending on how the code is written, there will be some sort of max. Either way, this is generally not where the bulk of the CPU load comes from, and thus spreading it out over more cores is unlikely to make a huge difference.
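As a rough back-of-the-envelope version of that load pattern, here is a small Python sketch. The numbers are assumed midpoints of the ranges in the post (100% main thread, two helpers at 25%, remaining cores at 7.5%), not measurements from any game, and the helper names are made up for illustration.

```python
def load_profile(total_cores, main=100.0, helpers=(25.0, 25.0), background=7.5):
    """Per-core load (percent) for the pattern described above.

    The defaults are assumed midpoints: one pinned main thread, two helper
    threads at 20-30%, and the remaining cores at 5-10%.
    """
    used = 1 + len(helpers)
    if total_cores < used:
        raise ValueError("need at least one core per main/helper thread")
    return [main, *helpers] + [background] * (total_cores - used)


def summarize(loads):
    """Return (average utilization %, share of all work done by the busiest core)."""
    total = sum(loads)
    return total / len(loads), max(loads) / total


if __name__ == "__main__":
    for cores in (4, 6, 8):
        average, main_share = summarize(load_profile(cores))
        print(f"{cores} cores: {average:.1f}% average load, "
              f"main thread does {main_share:.0%} of the work")
```

Under these assumptions an 8-core chip sits at roughly 23% average utilization while the single main thread still carries a bit over half of the total work, which is the point being made: the extra cores mostly have nothing to do.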

The thing is, many of these limitations above are fundamental to computer science. They are unlikely to change even over the long term. This is why I think for gaming purposes 4-6 big cores are going to be better than a larger quantity of smaller cores indefinitely. I really don't see this changing much. Also I'm not sure we would want it to.

An example is DX12 and Civilization VI, as mentioned above. On a lower-end system with small cores, DX12 brings a distinct improvement: it is able to better spread the draw calls over all cores and better balance the system. On a high-end big-core system, however, enabling DX12 actually kills performance, because the big cores are capable of handling the draw calls on their own without multithreading. All you are doing with multithreading at that point is thrashing the CPU caches and forcing the system to go back to RAM for data much more often, which reduces performance.

I know opinions will differ on this, but I feel fairly certain that no, the great multithreaded gaming renaissance is not upon us, and this is due to computing fundamentals. It simply can't get much better than it is today. It doesn't matter if 8 cores gain higher adoption.

The many core chips will still be awesome for rendering and encoding though.

The right tool for the right job.

You mean, "assert that it's wrong but can't be arsed to back it up"?

Well get to it, then. I'll be interested to know how I'm wrong.

https://www.logicallyfallacious.com/tools/lp/Bo/LogicalFallacies/150/Red-Herring

"(also known as: beside the point, misdirection [form of], changing the subject, false emphasis, the Chewbacca defense, irrelevant conclusion, irrelevant thesis, clouding the issue, ignorance of refutation)"

More specifically: no one here claimed that any improvement for any CPU is not good. No one claimed that the 8 core segment will not grow at some rate...


Indeed. There are algorithms that are just serial, so they cannot be programmed in parallel. A game has one or two master threads, so single core performance will be the limit.

And even with parallel algorithms, getting efficient parallel code is hard. I just read "Why Parallelization Is So Hard". It is not about gaming, but it highlights the main problems with parallel programming: synchronization, overheads, Amdahl’s Law...
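Since Amdahl's Law comes up here, a quick sketch of what it implies for core counts. The formula is the standard one, speedup = 1 / ((1 - p) + p / n), but the parallel fractions below are made-up illustrations, not measurements from any game or engine.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup from Amdahl's Law: 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical workloads: 30%, 60% and 90% of the frame time parallelizable.
    for p in (0.3, 0.6, 0.9):
        row = ", ".join(
            f"{n} cores: {amdahl_speedup(p, n):.2f}x" for n in (2, 4, 6, 8, 16)
        )
        print(f"p={p:.1f} -> {row}")
```

If, say, 60% of the work could be parallelized, going from 4 to 8 cores would only move the best-case speedup from about 1.82x to about 2.11x, and no number of cores could get past 2.5x. That is before counting any synchronization overhead.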

For MOST of the single-core era, AMD's CPUs were faster than Intel's, and when netburst hit, they were STUPID faster.

Intel had to pay 1 billion dollars per year to some OEMs to prevent them from using the Opteron chips in their server SKUs, and that isn't a made up number: Intel literally gave quarterly payments of 250 million to Dell and HP during the later years of "the mother of all programs".

Because Intel was losing in just about every category against the Athlon and Opteron, they paid literally billions of dollars to OEMs in order to keep AMD's logo off of products.

Wow, I didn't know 8 core CPUs were a thing of the future. I've been using AMD FX 8 core CPUs for several years now. I didn't know I was FutureMan!

As someone who has done it many times: future-proofing is dumb, a waste of money, most of the time.

I'm not going to buy an eight-core CPU just because AMD or Intel made one, I'll buy one when the game I want to play actually performs better on one.

That's just not accurate at all.

In the 8086, 8088 and 286 era, they performed more or less identically, because AMD was just manufacturing Intel's designs. IBM required Intel to have a second-source supplier in order to get the contract to supply chips for the original IBM PC.

By the time the 386 came around Intel no longer needed to abide by IBM's second source agreements, as the "IBM Compatible" market was booming. Intel cut AMD off and didn't allow them to manufacture their 386's. There was a protracted legal battle, and AMD eventually designed their own AM386, which WAS faster than Intel's 386, but by this time Intel had already launched the 486, so it was moot.

In the Pentium and Pentium II era, AMD's 586, 686, K6, K6-2 and K6-3 chips always trailed Intel in most workloads due - in large part - to their slower FPU units. There were some specialized tasks in which they could tie and sometimes even beat Intel's performance during this era, but in more general tests, they fell significantly behind.

Then when K7 (Athlon / Duron) came along, AMD finally gained rough performance parity with Intel's Pentium 3. Clock for clock they were fairly even. Intel wasn't able to scale up the clocks on the Pentium 3 much beyond 1 GHz though. We all remember the 1.13 GHz Pentium 3 fail, when they released a chip that wasn't stable at stock clocks, and how Kyle and Tom Pabst of Tom's Hardware collaborated to compare their samples.

As I recall, AMD only briefly had the performance crown after this, as Intel was able to compete with the higher clocked early P4s. Intel got made fun of a lot, as it would take a P4 a much higher clock to tie an Athlon, but my memory is that they were fairly equivalent at first. Then in ~2003 or so, Intel hit the 2.8 GHz wall on the P4, and AMD took the performance crown and held it until 2006 when Intel released the Core2 chips.

Ever since 2006, AMD has been behind Intel performance-wise. The first Phenom had that TLB bug that sabotaged performance vs Intel's chips. Phenom II fixed it, but never performed quite up to speed with Intel's chips, and Intel's performance lead only grew as time went on. Right before the Bulldozer launch, the Phenom IIs were being thoroughly outclassed by Intel's Core chips. The Bulldozer launch was a fiasco and didn't do much to help. (In fact, in many workloads, the FX-8150 was actually SLOWER than their previous Phenom II X6 chips.)

Ryzen has been a welcome addition. AMD has caught up most of the way with Intel at this point. Intel still has a small lead in per core performance, but AMD has mostly closed it. In highly threaded HEDT workloads, though, AMD is actually very competitive now.

I can't wait to see what happens next. With Intel's 10nm woes, it will be interesting to see if AMD can close the per core performance gap in the next gen.

You are correct. But this was only for a short time. During most of AMD's history in making CPU's they have been behind Intel in performance, usually by a not so small margin.

The above was for client CPU's as well. Their illicit backroom deals to exclude AMD (and their compiler manipulations) eventually resulted in a $1B settlement to AMD, but that - IMHO - was only a fraction of the damage they did to AMD. If AMD had been able to compete freely during ~1999-2003 when they had performance parity and ~2003-2006 when they had the outright dominant lead, they would likely have had a larger budget for R&D and the Bulldozer/FX fiasco would never have happened.

And you are totally pulling all of this straight out of your a**. Not buying a single word.

I'm not, really.

Due to heat and power, an 8 core Ryzen 2 is not going to work in something the size of a Playstation, unless it is severely underclocked. A couple less cores, with about 3ghz is probably doable. And makes more sense for individual thread performance.

Also, I doubt AMD is going to put a CPU that would cost roughly $250-$300 at retail (if it were a standalone chip) in a console that is only supposed to cost $300-$400 at launch. A chip anywhere near that expensive has only been used in a console once before: Sony's Cell processor, which according to early speculation cost between $150 and $230. But the days of selling consoles at huge losses are probably behind us. A big goal with PS4 and Xbone was to sell them at cost or even for some profit, from the start.

It will be an APU. Probably with some trimmed down caches to save some cost. and I'm betting 4 - 6 cores.

Console games are all highly-threaded, and while I don't doubt that it will be an APU or based on Ryzen, it will most likely be either a little-core variant (like Jaguar on the current consoles) or much lower-clocked version of Ryzen due to heat, power consumption for the form-factor, and TDP.

I don't think it will be a "little-core" variant, as that would not be a very big upgrade for IPC. And right now, the largest performance issue in consoles is the weak Jaguar CPUs. I mean, a dual core i3 would spank them, with 6 fewer cores and 4 fewer threads available.

This obviously is speculation at this point, but the one thing that would surprise me is if they went backwards on core-count, unless of course the cores had multi-threading like the current Ryzen processors do.

The system RAM is also going to need to be increased to at least 12-16GB, more so for the GPU if they are truly going to start pushing native or near-native 4K resolutions without additional graphical techniques (checker-boarding, super/sub-sampling, upscaling, etc.).

It will be interesting to see what they come up with, but the cost, even for a bulk-discount of millions of components, will still need to at least break-even, as the others above (and you) have stated, since Sony and Microsoft announced years ago that they are no longer interested in selling their consoles at a loss (loss-leader), and including a full-version of Ryzen would be very surprising.