Vengance_01

Supreme [H]ardness

- Joined

- Dec 23, 2001

- Messages

- 7,215

Wow, I thought I was bad with my 2500K... Very happy with it so far, but I upgraded from an old X58 system with an i7 950, so my jump was huge.

memtest86 can be booted directly from a live disk or flash drive.

If you're going to really push your RAM, that's a far safer and more logical way to do it than putting your HDD at risk of data corruption.

The 3700X in multi-threaded tasks (which is my main priority) is over 70% faster than the 6800K was.

Where do you notice this the most? I wouldn't have expected there would be much difference.

Everywhere; the whole system feels more responsive. Apps load faster, and I can work much faster in 3D editing software and Resolve.

I went from a 2500K to an R5 1600X, and now have a 3600. The jump to the 3600 is way bigger. I feel it was well worth the $200 cost to replace the 1600X, and over time the electricity savings will help offset some of that.

I have a custom loop with an old Swiftech block that used to cool the 2500K. Well, the temps I get with the 3600 are insane. It uses so little power that it idles just above ambient, at about 30C, and peak load temps are in the low 50C range.

So, considering the low power consumption, it's hard for me to wrap my mind around how fast it is. FPS is up everywhere I wasn't badly GPU-bound, and even then I see improvements. There is less stuttering.

Finally, my memory actually works at the rated speed. I set the XMP profile and haven't had to touch it. With the 1600X, I could never get my memory faster than 2933 MHz. I'm sure this only accounts for a small part of the speed difference, but it is nice to get the performance out of my RAM that I paid for. That stuff was expensive when I bought it!

I'll wrap up by adding that the old 1600X lives on as a server (domain controller, Hyper-V host, and Plex server). When I bought the 3600 I added an open-box B350 motherboard for $20 more (love Micro Center), and grabbed 16GB of 2400MHz DDR4 for another $40 from Newegg. So it was $60 out of pocket, and it blows away the G3248 / Z97 combo I used to have in the basement.

Glad you like your 3600, but just to clarify, you'll never realistically see cost savings in electricity over the useful life of the chip unless you run it 24/7/365 at 100% load for YEARS. The difference is 30W at load (at idle it's pretty much a wash). 95W at 24/7 load is 2.28 kWh a day = $0.23, and 65W at 24/7 load is 1.56 kWh a day = $0.16, so that's a $0.07 a day difference. At $0.07 a day it would take 2,857 days, or 7.83 years, to make up $200. The reality is you probably aren't going to run it 24/7/365 at 100% load, so you will likely NEVER see the cost offset. This is calculated at $0.10 per kWh, so the math changes a little depending on your rates.

Long story short - electricity is such a small factor for home users it's almost negligible.

You're quoting lower pricing than I get, for sure. Here in the Northeast we can expect about 22¢ to 25¢ per kWh, including delivery charges etc. That would bring your estimate down to roughly 3 to 3.5 years. Still quite some time... but within reach.
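For anyone who wants to rerun the payback math with their own rates, here's a minimal Python sketch. It assumes, like the posts above, that the 95W and 65W figures are the actual full-load draw and that the chip sits at that load 24 hours a day; real wall power will differ. The function names are just for illustration. Without rounding the daily saving to $0.07, the $0.10/kWh case comes out to about 2,778 days (7.6 years), and the 22¢ to 25¢ range lands between roughly 3.0 and 3.5 years.

```python
def daily_kwh(watts: float, hours: float = 24.0) -> float:
    """Energy used in one day at a constant draw, in kWh."""
    return watts * hours / 1000.0


def payback_days(old_watts: float, new_watts: float,
                 price_per_kwh: float, upgrade_cost: float,
                 hours_per_day: float = 24.0) -> float:
    """Days of operation until the electricity saved equals the upgrade cost."""
    saved_per_day = (daily_kwh(old_watts, hours_per_day)
                     - daily_kwh(new_watts, hours_per_day)) * price_per_kwh
    if saved_per_day <= 0:
        return float("inf")  # the new chip never pays for itself
    return upgrade_cost / saved_per_day


# 95W vs 65W, $200 upgrade, full load around the clock, at three electricity rates
for rate in (0.10, 0.22, 0.25):
    days = payback_days(95, 65, rate, 200)
    print(f"${rate:.2f}/kWh: {days:,.0f} days ({days / 365:.1f} years)")
```

Drop `hours_per_day` to something realistic for gaming-only use and the payback period stretches out accordingly, which is the point the original post was making.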

This is a lesson in making assumptions. I pay about $0.15 per kWh, and I do in fact run the CPU at 100% load around the clock. When I'm not playing games, my PC is used for mining crypto. This was a significant consideration for upgrading, because both the hashrate and the hashes per watt are much higher for the 3000 series Ryzen chips.

Correcting your numbers for my electricity cost, I'm saving $0.108 per day. I can tell you I will absolutely notice the $3 a month my bill drops. That's also the difference between mining at a virtual break-even point vs. mining for a profit at current prices.

Another key point is I said "over time the electricity savings will help offset some of that" referring to the $200 cost of the 3600. Notice I did not say ALL, but SOME of the cost. If I mine for a year on it I'll have saved $36, or 18% of the purchase price. I can't say for sure how long I'll be mining on the CPU but it should be at least a year, maybe more. I'll probably be playing games on this processor for 6 or 7 years barring a miracle breakthrough in tech.
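The mining case works out the same way. This sketch keeps the same assumptions (a constant 30W difference, 24/7 load) at $0.15/kWh and prints the daily, 30-day, and yearly savings. The post's $36 comes from rounding to $3 a month; unrounded, a full year is closer to $39.42, or roughly 20% of a $200 CPU.

```python
def savings(old_watts: float, new_watts: float, price_per_kwh: float,
            days: float, hours_per_day: float = 24.0) -> float:
    """Dollars saved over `days` at a constant power difference."""
    kwh_saved = (old_watts - new_watts) * hours_per_day * days / 1000.0
    return kwh_saved * price_per_kwh


RATE = 0.15        # $/kWh, from the post
CPU_PRICE = 200.0  # cost of the 3600

daily = savings(95, 65, RATE, 1)
monthly = savings(95, 65, RATE, 30)
yearly = savings(95, 65, RATE, 365)
print(f"per day ${daily:.3f}, per 30 days ${monthly:.2f}, per year ${yearly:.2f} "
      f"({yearly / CPU_PRICE:.0%} of the CPU price)")
```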

And finally, even if I wasn't using the CPU at 100% load around the clock what I said initially is absolutely correct. Using less electricity saves money. It may not be a lot, or significant to you, but even someone who only plays games on their PC and pays less for power than I do is going to save some money on their electric bill by upgrading from a 1600x to a 3600.

Wish the games I play used the AMD chips more effectively. I only play CSGO and DCS Combat Sim. Frequency is the priority in these games, and my Intel 8086K @ 5GHz is faster. Once AMD can match Intel in my games I'll jump ship, but I'm not going to spend $ on an "upgrade" and lose speed. I am very impressed with the benches, and for people who play more typical games and have workloads that scale across cores, AMD has an advantage IMO, especially considering bang for the $. If I were starting from scratch and didn't have this PC, I would buy a new AMD, though. Glad they are bringing some competition back to the game.

What are you talking about? CSGO?

The 3900X outruns the 9900K, overclocked or not...

You haven't tested it one stick at a time to see if it's a real hardware fault vs just some bad settings being auto-chosen by the system?

4 sticks of new ram are always 4 potential points of failure in a new build regardless of what you're putting them in.

I'd test each stick one at a time: once at stock, then at 3600, then at 4000, all at the rated voltage it's supposed to run at (not manually pushing it higher), and all in the same RAM slot. If they all fail, or your first stick begins to fail, try another slot and repeat.

You can narrow down the issue and remove the most variables that way. If you have a stick passing in a slot and another failing, you know the slot isn't the problem, the stick is. If they all fail in a given slot but not in another, you may have a bad motherboard. If they all fail in all the slots, then it could be many things ...
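The slot-versus-stick reasoning above can be written down as a small decision helper. This is a hypothetical sketch, not the output format of memtest86 or any other tool: you record pass/fail by hand for each (stick, slot) pair at one speed and voltage setting, and it applies the same rules as the post.

```python
from typing import Dict, List, Tuple

# (stick, slot) -> True if that stick passed on its own in that slot
Results = Dict[Tuple[str, str], bool]


def diagnose(results: Results) -> List[str]:
    """Apply the one-stick-at-a-time reasoning to recorded pass/fail results,
    all taken at the same speed and voltage settings."""
    if not results:
        raise ValueError("no test results recorded")
    if all(results.values()):
        return ["every stick passes alone: look at settings or a mismatched kit"]
    if not any(results.values()):
        return ["everything fails in every slot: could be many things"]

    sticks = sorted({stick for stick, _ in results})
    slots = sorted({slot for _, slot in results})
    findings: List[str] = []
    for slot in slots:
        tested = {s: results[(s, slot)] for s in sticks if (s, slot) in results}
        if any(tested.values()):
            # Some stick passes in this slot, so the slot works: blame the failing sticks.
            for stick, passed in tested.items():
                if not passed:
                    findings.append(f"{stick} fails in {slot} where another stick passes: suspect the stick")
        elif any(passed for (_, other), passed in results.items() if other != slot):
            # Nothing passes here but something passes elsewhere: suspect the slot/board.
            findings.append(f"every tested stick fails in {slot} but passes elsewhere: suspect the slot/board")
    return findings


results = {
    ("stick1", "A2"): True, ("stick2", "A2"): False,
    ("stick1", "B2"): True, ("stick2", "B2"): False,
}
for line in diagnose(results):
    print(line)
```

Run it once per speed (stock, 3600, 4000) so a stick that only falls over at higher speeds shows up separately from one that is outright bad.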

I'd go in that direction first before giving up or getting buyer's remorse. Also, when doing these RAM tests, I'd use default core voltages to remove any other external variables.

edit: Also, after booting with a single stick, pay attention to the detected timings and DRAM settings. There's always the chance that a stick from a different set of RAM got mixed in with the rest at the factory or reseller, and the mixed RAM is the source of the problem. In that case they'd all pass individually but not play well together.
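Spotting a stick from a different batch comes down to comparing what the board detects for each stick on its own. A minimal sketch, assuming you've written the detected values down per stick; the setting names and numbers below are made up for illustration, and values need to be hashable (numbers or strings).

```python
from typing import Any, Dict


def mismatched_settings(detected: Dict[str, Dict[str, Any]]) -> Dict[str, Dict[str, Any]]:
    """Map each setting whose detected value differs between sticks to {stick: value}.
    A stick that lacks a setting shows up as None."""
    keys: set = set()
    for settings in detected.values():
        keys.update(settings)
    mismatches: Dict[str, Dict[str, Any]] = {}
    for key in sorted(keys):
        values = {stick: settings.get(key) for stick, settings in detected.items()}
        if len(set(values.values())) > 1:
            mismatches[key] = values
    return mismatches


# Hypothetical values noted after booting each stick alone
detected = {
    "stick1": {"speed_mhz": 2133, "cas": 15, "trcd": 15, "voltage": 1.20},
    "stick2": {"speed_mhz": 2133, "cas": 15, "trcd": 16, "voltage": 1.20},
}
print(mismatched_settings(detected))  # {'trcd': {'stick1': 15, 'stick2': 16}}
```

An empty result doesn't prove the sticks are from the same batch, but any mismatch at default settings is a strong hint they aren't.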

Thanks for the reply. It's a two-stick kit, 2 x 16GB. I did run a test of each stick alone in slot A2. Both failed, but I wasn't running stock speed. Like I said, the system will pass with all settings at stock, but it sets the RAM to 2133 CAS 15 at 1.20V. Maybe I'll try each stick alone at default and see if one defaults faster. The fact that I made it through with all 32GB at 3600 16 16 16 16 32 is what befuddled me. That would seem to indicate that the RAM is fine; it just doesn't want to work with my board, CPU, and BIOS any faster. At this point, though, I'm open to trying anything. This was fairly expensive RAM, and I'm pissed that I can't run it faster than RAM at half the price. I know that dual-rank sticks are tough on the system, though.

You are reminding me of the issues I had with two different Micron D/E-die based 32GB kits... They would boot and even OC to 3600 CL16, and I could run things fine, but any kind of RAM stress test would fail with a reboot 30 minutes to 3 hours in... No Windows hardware errors, just a generic BSOD.

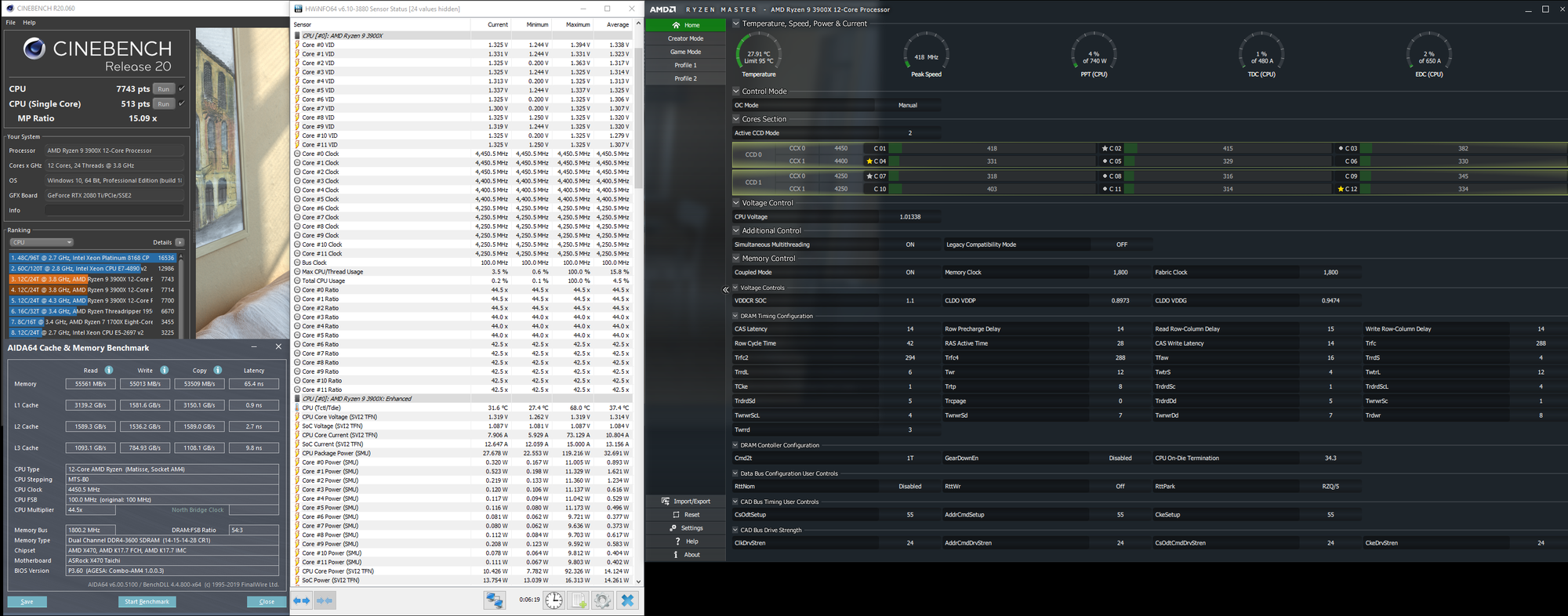

You mentioned you are running SoC at 1.1V... Have you monitored the actual SoC voltage with something like HWiNFO64? The reason I ask is that mine was set at 1.1V, but it took increasing the SoC LLC to level 2 to keep it there under load. It would dip to 1.087V otherwise.

It's just a thought in case you hadn't considered it... I really feel for you. I know you spent some decent coin on 4000-rated B-die, and to not have it work properly really sucks.

I'm running 64GB of 3200MHz 14-14-14 G.Skill RAM at the XMP settings (no manual DRAM settings), and it's working _great_.
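To check for the kind of droop described here, compare the logged readings against the set value. A small sketch, assuming you've copied SoC voltage readings from a monitoring log (such as HWiNFO64's) into a list by hand; the 10 mV tolerance is an arbitrary example, not a recommended limit.

```python
from typing import Sequence


def voltage_droop(setpoint: float, readings: Sequence[float]) -> float:
    """Largest drop below the set voltage seen in the readings, in volts."""
    if not readings:
        raise ValueError("need at least one reading")
    return max(0.0, setpoint - min(readings))


def droops_too_far(setpoint: float, readings: Sequence[float],
                   tolerance: float = 0.010) -> bool:
    """True if any reading falls more than `tolerance` volts below the setpoint."""
    return voltage_droop(setpoint, readings) > tolerance


# Illustrative readings under load with SoC set to 1.1V
soc_readings = [1.100, 1.094, 1.087, 1.100]
droop = voltage_droop(1.1, soc_readings)
print(f"worst droop: {droop * 1000:.0f} mV, "
      f"exceeds tolerance: {droops_too_far(1.1, soc_readings)}")
```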

You mention it passed at 3600 but then failed to boot after rebooting. Does your motherboard have a DRAM boot voltage setting? Asus tends to separate its DRAM voltage setting from its DRAM boot voltage setting. If you need 1.42 volts to run at 3600 and never adjusted the boot voltage, the boot voltage may still be at the stock DRAM boot voltage of around 1.25 volts, not even the 1.35 volts common to most speeds above 2133. You'd think the XMP profile would handle that stuff, but that'll vary from motherboard to motherboard, I'm sure. It might also be good to give yourself DRAM current headroom and load-line calibration boosts over stock when running at these really high frequencies that need more than 1.35 volts to be stable.

DRAM chips also have temperature sensors in them. These probably aren't visible in memtest86, but you should be able to see them in monitoring software in your regular OS during stress tests, just to make sure your RAM isn't overheating.

edit: I always go through the digital power controls on my motherboard and boost load-line calibration and current limits for basically everything... that's why there are those big heatsinks on these premium boards.

I looked in BIOS for DRAM boot voltage, but couldn't find it. I've set all the other LLC and duty cycle stuff per the calculator. Hopefully, I can find that setting tonight.

Some Asus boards do not have it... That is why, on the early 1.0.0.7 BIOS, we had issues with XMP not working: the board set 1.2V as the boot voltage with no way to change it. You had to set the voltage to 1.4V manually, reboot, and then set the XMP speed and timings manually. I still do not have it on my board, using the latest BIOS (AFAIK), released on August 1st.

Odd, I wasn't aware that so many of the boards in their lineup lacked that setting. Very odd choice on their part to make it appear only on certain ones. It's not like it requires anything physically special.

You mean the other way around?

Did you visit the link that shows the graph? It was graciously posted by someone under your post. I know graphs can be hard to understand sometimes.

You also have to cool the heat from your PC / run the AC more. (In Alabama here with an 8320/290X and a 1700/RX 580 system, I would know...)

What? You mean for the Intel chip? Yes, the AMD chip will save on your AC bill too in this case; he said it uses fewer watts, so it generates fewer therms overall.
