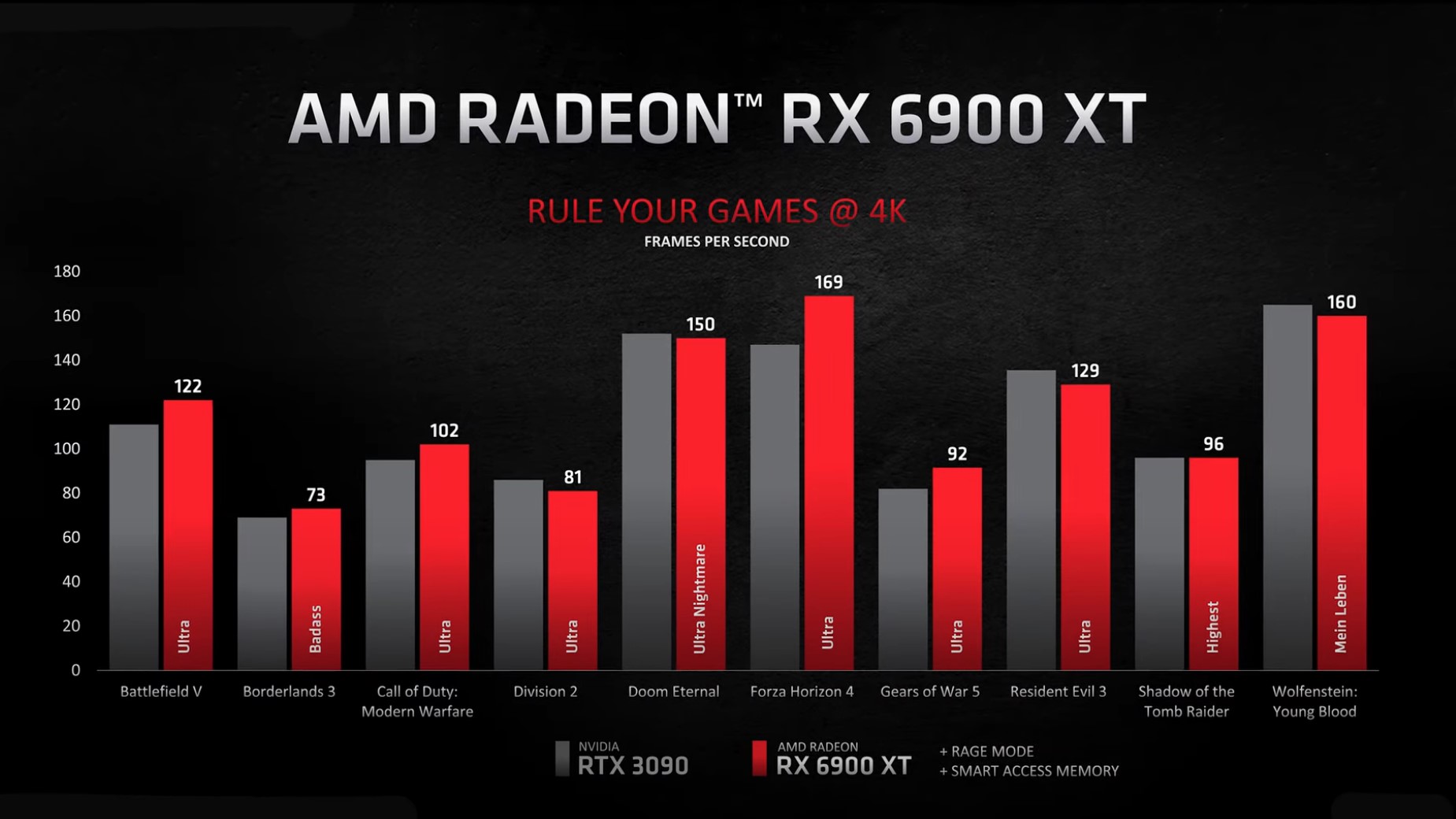

They'll probably keep the price as it is, as there are folks out there who still need 24GB of RAM, and it probably offers better ray tracing.

Oof. 6900XT trading blows with the 3090 for $500 less. It's going to be interesting to see how Nvidia reacts.


Where Gaming Begins: Ep. 2 | AMD Radeon™ RX 6000 Series Graphics Cards - 11am CDT 10/28/20

- Thread starter: FrgMstr

WorldExclusive

[H]F Junkie

- Joined: Apr 26, 2009
- Messages: 11,548

Why are we talking about proprietary DLSS? A resolution scaler that can take a 1440p image and make it 4K to increase frames? Why would we make such a comparison with an AMD card rendering at native resolution?

And where does it say DLSS is enabled in those limited titles? AMD would definitely show that they are beating a 3080 with DLSS enabled if that was the case. They wouldn't waste an opportunity like that.

Also, how does it make sense that AMD would beat Nvidia in games where DLSS is supported but trading blows in DLSS unsupported games?

You are spreading completely made up, unsupported fud.

Ok, Nvidia doesn't have that AMD 5000 boost which beats the 3080 in everything. Should we now ask Nvidia to release their own CPUs to do the same thing?

Well, there are tens of thousands of PC games that do not support DLSS. Perhaps the question should be how many future games will, and/or whether other similar technology will be brought out. The industry is not standing still on one proprietary technology: TAA is being improved, and AMD has non-proprietary tech that enhances IQ and works with Nvidia hardware as well, how nice of them. I wonder if they expanded Radeon Boost game support, which would allow you to run a game at a much higher resolution and better quality and still get great frame rates when in action? As for RT, we will probably only really know the performance with newer titles that use DXR 1.1 or higher and are optimized for AMD hardware. Current RT games are optimized for Nvidia RTX for the most part.

Now, for those who care less about 4K resolution and want higher frame rates on 240Hz+ monitors, it looks like AMD extends its lead over Nvidia. That will probably be talked about a lot.

I just can't base my gaming card purchase on some magic supercomputer fluff like DLSS when there is so little support for it across the gaming spectrum. Oh mercy me, 14 games support DLSS or whatever. But just like you said, there are thousands of gaming titles that don't have DLSS or give no shits about it, and that is where a pure raster monster is the best choice. Until DLSS is in every damn game out there, it's moot to waste any more time arguing over the internet about it.

Good point mate!

And I guarantee that AMD will have an answer for DLSS. Hell, they are releasing (maybe already have released) their El Capitan exascale supercomputer to research and government entities. What's to stop them from using such tech to do exactly the same spell casting as Nvidia's DLSS resolution buffoonery?

It was being discussed because tangoseal made up unsupported claims that AMD beats Ampere cards in games even when DLSS is enabled. That’s the only reason it was brought up.

Why are we talking about proprietary DLSS? A resolution scaler that can take a 1440p image and make it 4K to increase frames? Why would we make such a comparison with an AMD card rendering at native resolution?

Ok, Nvidia doesn't have that AMD 5000 boost which beats the 3080 in everything. Should we now ask Nvidia to release their own CPUs to do the same thing?

I'm interested in ray tracing for Cyberpunk 2077 and the Witcher 3 patch that will add it, but aside from that I think we're still a generation away, maybe two, before it's ubiquitous. At the same price I'd probably opt for a better RT card, but the 6900XT is $500 cheaper than the 3090 and looks to offer better performance than the 3080, so it's not so easy to compare.

Right now, if you ask me, DLSS and RT are useless features. I say in 2-3 generations they will be worth it.

But, as of right now in my eyes they are useless. Just like the Nvidia latency BS they have, all marketing bullpoop.

I will probably decide between the 6900XT or a 3080, although that decision will be made for me if I can't actually find a 3080 for sale.

Digital Viper-X-

[H]F Junkie

- Joined: Dec 9, 2000
- Messages: 15,115

The 6900XT had both "Rage Mode" and "SAM" (which requires a Ryzen 5000 CPU) enabled, so it will likely be slightly slower than a 3090 without those on, and require some OCing to catch up.

My best guess is we'll see RT catch on in SP games first, where 60fps is just fine and people can appreciate the visual goodies. Multiplayer games will take longer, especially the competitive ones, as that's where 120Hz+ really shines.

Correct. There aren't enough games, performance, or overall visual impact for it to matter. Once we can get it to run beyond 120Hz, it will catch on big time.

The 6900XT had both "Rage Mode" and "SAM" (which requires a Ryzen 5000 CPU) enabled, so it will likely be slightly slower than a 3090 without those on, and require some OCing to catch up.

At 5 to 700 bones less, too! You can keep the 3090, Jensen. Drop the price and then you'll have people's attention again. For productivity, though, you can't shake that sweet 24GiB of VRAM. That's a good amount for doing real work.


I agree, for development work the 3090 still has a place. That amount of memory will matter. But for gaming? At that price? Naw.

At 5 to 700 bones less, too! You can keep the 3090, Jensen. Drop the price and then you'll have people's attention again. For productivity, though, you can't shake that sweet 24GiB of VRAM. That's a good amount for doing real work.

WorldExclusive

[H]F Junkie

- Joined: Apr 26, 2009
- Messages: 11,548

Ok I see now.

It was being discussed because tangoseal made up unsupported claims that AMD beats Ampere cards in games even when DLSS is enabled. That’s the only reason it was brought up.

That was at 4K; at 1440p, ultrawide resolutions and so on, it will be even faster. We still don't know how much further past Rage settings these cards will go. In any case, it wins more games than not in this AMD-picked selection. We just need reviews to find out how it performs in RT and so on, as well as how much headroom it has. Since no RT performance numbers were presented, we might have to wait for the reviews if that is important to one's buying decision.

The 6900XT had both "Rage Mode" and "SAM" (which requires a Ryzen 5000 CPU) enabled, so it will likely be slightly slower than a 3090 without those on, and require some OCing to catch up.

It is always best practice to wait for reviews. That being said, I am just glad no one has to wait a long time for a 3080/3090 competitor, unlike the previous release.

That was at 4K; at 1440p, ultrawide resolutions and so on, it will be even faster. We still don't know how much further past Rage settings these cards will go. In any case, it wins more games than not in this AMD-picked selection. We just need reviews to find out how it performs in RT and so on, as well as how much headroom it has. Since no RT performance numbers were presented, we might have to wait for the reviews if that is important to one's buying decision.


Nvidia could extend support for the RTX 3090 with Titan-class drivers, which would unlock features for professional applications. I expect AMD to slowly dish out more card info as time goes on, depending on how Nvidia responds. For example, a Radeon RX 6800 8GB version, 18% faster than a 2080 Ti, for $499 if Nvidia reduces the price of the RTX 3070.

I agree, for development work the 3090 still has a place. That amount of memory will matter. But for gaming? At that price? Naw.

As to how Nvidia responds to the RX 6900XT: lol, a 3080 Ti 12GB with less performance and RAM than a 6900XT, for $899? Still, Nvidia has stuff that some folks will want, so it's not all bad. AMD also has room to drop the price on the RX 6900XT at any point in time, baiting Nvidia again?


Teenyman45

2[H]4U

- Joined: Nov 29, 2010
- Messages: 3,239

The 3080 (not the 3090) brought prices somewhat back down to a more normal level after the 10XX series and especially the extreme overcharging of the 20XX series.

AMD's 6900XT seems competitively if not "reasonably" priced mainly because the 3090 is super over-priced for the performance, notwithstanding the 24GB of VRAM, in this generation.

The 6800XT has a plus mainly for having extra VRAM over a 3080, and a small plus for the board power savings (we'll see how it overclocks). Conversely, when using the same CPU platform, the 3080 likely slightly outperforms the 6800XT, and because nobody at AMD is extolling RDNA2's ray tracing prowess at length, Nvidia almost certainly has a big lead in that department. Even though ray tracing is still more of a gimmick that is not ready for prime time in the home consumer market, Nvidia is almost certain to trumpet its superiority. Then there is Nvidia's better mind share, especially in the area of driver support, and its success in convincing people that DLSS with its "zoom and enhance"-like ability is a good thing. All told, the 6800XT is a good $50-$100 overpriced if more 3080s ever show up around the $700 price point.

The regular 6800, even with its extra VRAM over the 3070, seems grossly overpriced for an upper-midrange card. I'd expect Nvidia was originally planning on pricing the 3070 at $600 or just under that. Presuming the 3070 is ever actually in stock at roughly $500, there is very little reason to buy the 6800 unless you are really worried about 1080p or 1440p using a lot more VRAM than the 3070 presently offers.


So I am strongly considering a 6900XT. But I need to know the following before making the jump:

1) How does it perform without Rage and Smart Access Memory enabled?

2) What is the RT performance like?

AMD was clever making an exclusive feature for Ryzen 5000 CPU/X570 owners. I wonder what its overall performance gains are, as that is enticing for me as someone who will soon purchase a 5900X.


dave343

[H]ard|Gawd

- Joined: Oct 17, 2000
- Messages: 1,869

It’s odd they didn’t show ANY ray tracing performance numbers... I wonder if this is a situation like back with the 5000 series, where with first-gen tessellation hardware AMD couldn’t compete. I still want the 6800xt, but it would have been nice to see some RT performance numbers.

Didn't they reveal that in the presentation? Rage offers 3-9% or something like that. A little more than the nvidia cards seem to be able to overclock.

So I am strongly considering a 6900XT. But I need to know the following before making the jump:

1) How does it perform without Rage and Smart Access Memory enabled?

2) What is the RT performance like?

AMD was clever making an exclusive feature for Ryzen 5000 CPU/X570 owners. I wonder what its overall performance gains are, as that is enticing for me as someone who will soon purchase a 5900X.

I believe that was Rage + SAM.

Didn't they reveal that in the presentation? Rage offers 3-9% or something like that. A little more than the nvidia cards seem to be able to overclock.

The 6800XT seems like the best bargain for price and performance. 3090 IS overpriced, but I also feel the 3090 is not a gaming card but a productivity card given it's just barely better than a 3080.

It also seems that getting 3090-beating performance requires a CPU upgrade to Zen 3. Reviews in the coming months will confirm. This makes the 6800XT more enticing, as its benchmarks were without Rage + SAM.


It definitely adds an interesting twist. It means I'll be more inclined to upgrade my CPU if I go the AMD route. I think I'm still leaning 3080, but if the reviews for the 6800 XT and/or 6900 XT are compelling, I could be swayed.

AMD was clever making an exclusive feature for Ryzen 5000 CPU/X570 owners. I wonder what its overall performance gains are, as that is enticing for me as someone who will soon purchase a 5900X.

Rumor had it that RT performance was around the 2080 Ti level (not sure which card, possibly the 6800XT), but waiting for reviews appears to be the only way to find out.

It’s odd they didn’t show ANY ray tracing performance numbers... I wonder if this is a situation like back with the 5000 series, where with first-gen tessellation hardware AMD couldn’t compete. I still want the 6800xt, but it would have been nice to see some RT performance numbers.

You just know that if RT performance were at the same or near level as rasterisation performance compared to the 3080/3090, they'd have included some sort of numbers in the launch. There's no way that they wouldn't have.

chameleoneel

Supreme [H]ardness

- Joined: Aug 15, 2005
- Messages: 7,573

Dang, they brought us console-like exclusive memory access between CPU and GPU. That's so cool!

I expect those sorts of synergistic hardware features to be even better, with their next motherboard platform.



Maybe it was the implementation, but the RT game demos they showed didn't look very impressive. I think it will be interesting to compare Nvidia and AMD on both RT performance and quality.

Rumor had it that RT performance was around the 2080 Ti level (not sure which card, possibly the 6800XT), but waiting for reviews appears to be the only way to find out.

You just know that if RT performance were at the same or near level as rasterisation performance compared to the 3080/3090, they'd have included some sort of numbers in the launch. There's no way that they wouldn't have.

Don't blame me; if I didn't mention it, 50 other people would have.

Ok I see now.

You are not going to be able to discern fidelity on a YouTube stream.

Maybe it was the implementation, but the RT game demos they showed didn't look very impressive. I think it will be interesting to compare Nvidia and AMD on both RT performance and quality.

Kalabalana

[H]ard|Gawd

- Joined: Aug 18, 2005
- Messages: 1,313

Super Resolution is their answer to DLSS and, as I understand from the data out there, they do it via dynamically loaded shaders (not AI-based).

I am also wondering if "Full API" means that the benchmarks actually had ray tracing on for both nVidia and AMD.

Imagine that.

(Edit: it was "Best API", and that is not a setting, as I've come to find.)


Dayaks

[H]F Junkie

- Joined: Feb 22, 2012
- Messages: 9,773

I am simply blown away. The performance they showed is a breath of fresh air. I have had ATi/AMD cards for a long time. Only this last year did I switch to nVidia, after my aging RX 480 was starting to push up daisies and the new AMD offerings were not worth the price. I currently have an nVidia RTX 2070 Super and it runs peachy keen. AMD is now showing more competitive cards, and it brings back the nostalgia of past ATi/AMD releases before nVidia started to wipe the floor with them for a while there. Before my 2070 Super, my last nVidia card was the GeForce MX 200. Looks like I may go back to AMD. According to what I have seen/read, it needs a 5000 series CPU for best performance? Is this Dragon all over again, but with real performance uplifts? I might pick up a 6800 XT or even save up for the 6900 XT.

Another thing to note is the power usage needed to achieve that performance. This is a big one for me. I am still using my faithful OCZ Fatal1ty 750 Watt semi-modular PSU, currently powering its 4th PC. I had seen the power usage of the 3080/3090 and my power socket shit its pants. AMD's cards show way better power usage, which means there could be headroom for OC. I may get a new PSU just so I can run a 6800xt/6900xt and my 2070 Super in tandem for mixed GPU rendering in Ashes.

Isn’t a 6800XT 300 watts and a 3080 320 watts?

It was actually called "Best API" if my memory serves me well (and it often doesn't). I gathered that they were talking about DX12 vs Vulkan.

Super Resolution is their answer to DLSS and, as I understand from the data out there, they do it via dynamically loaded shaders (not AI-based).

I am also wondering if "Full API" means that the benchmarks actually had ray tracing on for both nVidia and AMD.

Imagine that.

Kalabalana

[H]ard|Gawd

- Joined: Aug 18, 2005
- Messages: 1,313

Oh shoot, I totally misinterpreted in both regards then. I appreciate

It was actually called "Best API" if my memory serves me well (and it often doesn't). I gathered that they were talking about DX12 vs Vulkan.

Kalabalana

[H]ard|Gawd

- Joined: Aug 18, 2005
- Messages: 1,313

Yep, and the 6900XT is also 300 watts. The 3090 spikes to 450 watts. Huge difference! Of course, we'll have to wait and see what the 6900XT spikes to with Rage enabled, but AMD's performance per watt (PPW) seems way better than nVidia's this gen.

Isn’t a 6800XT 300 watts and a 3080 320 watts?

Dayaks

[H]F Junkie

- Joined: Feb 22, 2012
- Messages: 9,773

It was actually called "Best API" if my memory serves me well (and it often doesn't). I gathered that they were talking about DX12 vs Vulkan.

Best API for who?

AMD has done super shady things with benchmarks in the past but I think they’ve been pretty straight since Raja was sacked.

Yep, and the 6900XT is also 300 watts. The 3090 spikes to 450 watts. Huge difference! Of course, we'll have to wait and see what the 6900XT spikes to with Rage enabled, but the PPW for AMD is way better than nVidia this gen it seems.

Yeah no one compares stock to OC TDP... 3090 stock is 350W iirc.

Hell I got my Titan X Maxwell to 400W. I wouldn’t use that number to compare against a 290x lol.

AMD looks to finally put a little shit in nvidia's pants.

The 6800 XT looks like a very nice card......but......

1) the game choice was a little weak. AMD really didn't choose any games that make GPUs sweat.

2) they were really vague on the use/ability of RT, though they did mention their RDNA2 fully supports DX12 RT....but how well?

Now, I still am not sold on RT and there aren't that many choices in games I'd play, so does it matter?

I could give a shit about automatic overclocking, that's why God made Afterburner.

I don't understand what their memory sharing thing does, but I don't think it would be enough to get me to trade in my 3700X quite yet.

Still if the real review sites give me reason to believe....I'm on a 6800 XT day one IF AMD has product to sell me and the bots don't beat me to it.


Maybe. Maybe not. We always have screenshots and reviewer impressions. If it's close enough in quality that you can't discern the difference in a YouTube video, that's a big win for AMD IMO.

You are not going to be able to discern fidelity on a YouTube stream.

I'm not trying to nitpick pixels. Just thought that their RT demos looked more like raster than RT.

Revenant_Knight

Gawd

- Joined: Nov 18, 2011
- Messages: 696

I wonder how the 6800XT will perform in Adobe work loads compared to Ampere. My system does double duty.

The way it was said, I felt like the B550 would also have it; from memory it was the 500-series motherboards. I could be wrong there, but I would imagine it was included, otherwise that would make the B550 a really bad product.

So I am strongly considering a 6900XT. But I need to know the following before making the jump:

1) How does it perform without Rage and Smart Access Memory enabled?

2) What is the RT performance like?

AMD was clever making an exclusive feature for Ryzen 5000 CPU/X570 owners. I wonder what its overall performance gains are, as that is enticing for me as someone who will soon purchase a 5900X.

One thing that seems interesting, if I believe those screenshots:

https://www.theverge.com/2020/10/28...pecs-release-date-price-big-navi-gpu-graphics

The 6900xt vs 3090 had Rage Mode and Smart Access Memory,

the 6800 vs 2080 ti has only the smart access memory on

And the 6800xt nothing

At least according to the screenshots. Maybe it was so they could say that the 6800xt used less power, even if just by 20 watts, and that was worth more than what they would have gained; or maybe that card does not profit much from Rage Mode. But maybe there is still some performance left on the table in those 6800xt graphs with nothing enabled.

Rage Mode and Smart Access Memory, what is it?

https://www.wepc.com/news/what-is-amd-smart-access-memory/


crazycuz20

Limp Gawd

- Joined: Oct 3, 2017
- Messages: 219

The 6800 doesn't compete with the 3070; it competes with a future 3070 Ti/Super. It's 15% faster than the 3070 and 15% more expensive, so for a frames/dollar ratio it's equivalent.

- In a couple of months, when they can ramp up manufacturing and demand slows down for the 6800+, they'll release the 6600/6700XT variants that'll compete with Nvidia at the true midrange.

- $579 for a graphics card is not "mid-range" IMHO, especially when it's more expensive than the consoles.

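A quick back-of-the-envelope check of the frames-per-dollar argument about the 6800 vs the 3070, as a minimal Python sketch. It assumes the RX 6800's $579 price quoted in the post and the RTX 3070's $499 launch price, and it takes the post's "15% faster" figure at face value rather than from any measured benchmark; the helper name `perf_per_dollar` is purely illustrative.

```python
# Back-of-the-envelope frames-per-dollar comparison.
# Assumptions: RX 6800 at $579, RTX 3070 at $499 (launch prices), and the
# post's claimed 15% performance advantage for the 6800 (not a measured figure).

def perf_per_dollar(relative_perf: float, price_usd: float) -> float:
    """Relative performance units per dollar spent."""
    return relative_perf / price_usd


rtx_3070 = perf_per_dollar(1.00, 499)  # baseline: 3070 performance = 1.0
rx_6800 = perf_per_dollar(1.15, 579)   # claimed: 15% faster than the 3070

price_premium = 579 / 499 - 1          # fraction more expensive than the 3070
ratio = rx_6800 / rtx_3070             # 6800 perf/$ relative to the 3070

print(f"price premium: {price_premium:.1%}")
print(f"6800 perf/$ relative to 3070: {ratio:.3f}")
```

Under these assumptions the 6800 is closer to 16% more expensive, and its frames per dollar land within about 1% of the 3070's, which is consistent with calling the two roughly equivalent on value.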
