You know repeating nonsense over and over doesn’t make it true right?

You do realize that AMD was beating 3080s with DLSS enabled, and that AMD doesn't use such voodoo, right?


Where Gaming Begins: Ep. 2 | AMD Radeon™ RX 6000 Series Graphics Cards - 11am CDT 10/28/20

- Thread starter FrgMstr

- Start date

Teenyman45

2[H]4U

- Joined

- Nov 29, 2010

- Messages

- 3,244

The 6800 doesn't compete with the 3070, it competes with a future 3070 Ti/Super. It's 15% faster than the 3070 and 15% more expensive, so for a frames/dollar ratio it's equivalent.

- In a couple of months when they can ramp up manufacturing and demand slows down for the 6800+ they'll release the 6600/6700XT variants that'll compete with Nvidia at the true midrange.

- $579 for a graphics card is not "mid-range" IMHO, especially when it's more expensive than the consoles.
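A quick way to sanity-check the frames/dollar claim above is to divide relative performance by price. This is only a sketch: the $579 price and the 15% uplift come from the post, while the $499 price for the 3070 is an assumption (its launch MSRP, not stated in the thread), and real street prices and review numbers may differ.

```python
# Perf-per-dollar check for the "15% faster, 15% more expensive" claim.
# Assumption: RTX 3070 at $499 (not stated in the thread).
# The RX 6800 price ($579) and the 15% uplift are the figures from the post.
PRICE_3070 = 499.0          # assumed launch MSRP
PRICE_6800 = 579.0          # from the post
RELATIVE_PERF_6800 = 1.15   # 3070 normalised to 1.00


def perf_per_dollar(relative_perf: float, price: float) -> float:
    """Relative performance bought per dollar spent."""
    return relative_perf / price


price_premium = PRICE_6800 / PRICE_3070 - 1
ratio = perf_per_dollar(RELATIVE_PERF_6800, PRICE_6800) / perf_per_dollar(1.0, PRICE_3070)

print(f"6800 price premium over 3070: {price_premium:.1%}")
print(f"6800 perf/dollar relative to 3070: {ratio:.3f}")
```

Under those assumptions the premium is closer to 16% than 15%, so the two cards land within about one percent of each other on frames per dollar, which is consistent with calling them equivalent.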

The 3070 is pretty much a drop in replacement for a 2080ti at less than half the price (and nearly the same power envelope) if your only metric is frame rates. We'll see how the 3070 does with a Zen 3 chip compared to a 6800 on Zen 3, but from the slides the 6800 seems closer to the 3070 than a future ti card.

You know repeating nonsense over and over doesn’t make it true right?

You know quoting me and saying that doesn't make you right either.

You guys said that Zen 3 would never amount to Intel. Well, how's the crow soup? Because Zen 3 is 10% faster than Intel's fastest product on the market.

I'm not going to argue over the internet any further than this reply. Wait till reviews drop. You're gonna have tasty crow sandwiches for a week.

When you get called out for making one thing up, PIVOT and make something else up instead!

You guys said that Zen 3 would never amount to Intel

Kalabalana

[H]ard|Gawd

- Joined

- Aug 18, 2005

- Messages

- 1,313

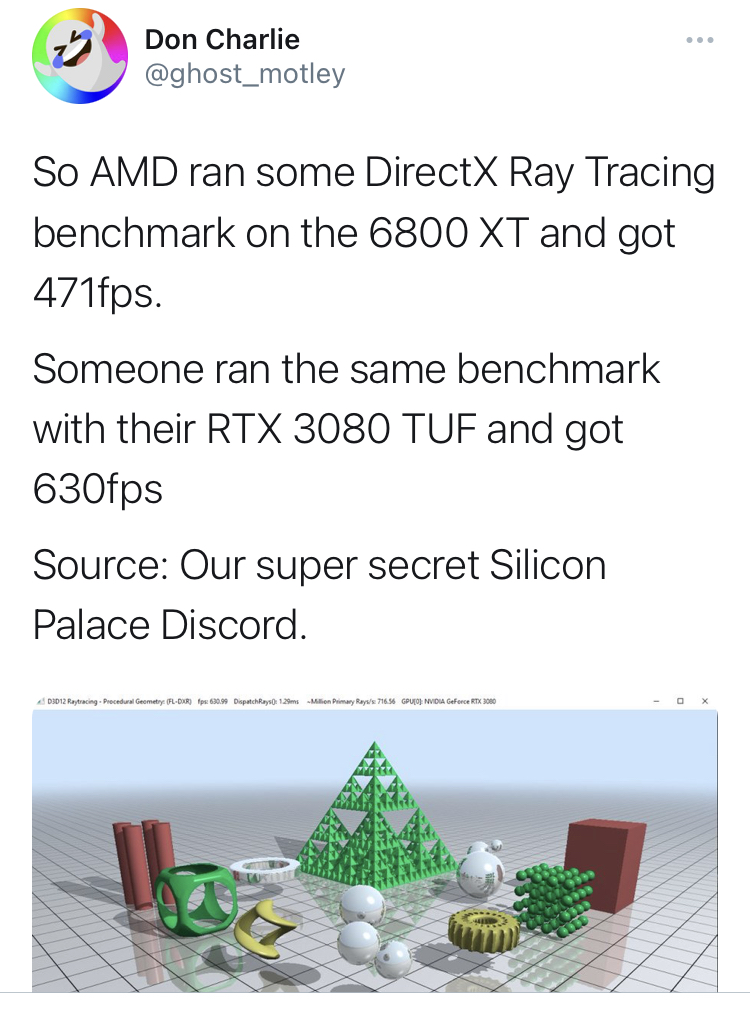

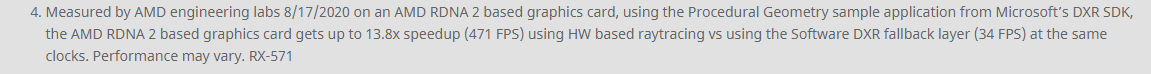

Ouch, what's the context behind this post, satire? Or is this based on an actual review that I am not familiar with? I am trying to gauge where the AMD RT performance is at, and I can't find the source data for the above.


Looks like it's some Microsoft sample app.

https://www.amd.com/en/technologies/rdna-2

Teenyman45

2[H]4U

- Joined

- Nov 29, 2010

- Messages

- 3,244

I just ran Control on my 3080 at 4K, max settings, DLSS (1440p render), and it was a stable 50-60 FPS.

If the AMD 6900 XT can do this, I will be impressed... but I bet it can't.

So you ran it at 720p with some post processing?

I said 1440p upscaled to 4K with DLSS, but sure. You can twist my words however you like.

So you ran it at 720p with some post processing?

Revenant_Knight

Gawd

- Joined

- Nov 18, 2011

- Messages

- 696

I just ran Control on my 3080 at 4K, max settings, DLSS (1440p render), and it was a stable 50-60 FPS.

If the AMD 6900 XT can do this, I will be impressed... but I bet it can't.

I believe, and I may be wrong, that AMD is working on something similar to DLSS, but it’s on the developer side. I hope they are and we can see more competition.


Yes they are and its called Super Resolution.

Kalabalana

[H]ard|Gawd

- Joined

- Aug 18, 2005

- Messages

- 1,313

Oh shoot, I'm hoping they've improved since then, otherwise, the 3080 edges out in value I think.

Looks like it's some Microsoft sample app.

https://www.amd.com/en/technologies/rdna-2

View attachment 293580

Yeah super resolution, and unlike DLSS 2.0, it's not dev sided and does not use an AI neural network. Though its performance remains to be evaluated.

I believe, and I may be wrong, that AMD is working on something similar to DLSS, but it’s on the developer side. I hope they are and we can see more competition.

Nvidia's magic happens because they are able to utilize the Tensor cores to intelligently upscale. If AMD is doing software trickery to compete, there is ZERO chance it will work as well as DLSS. AMD has to find a way to implement a hardware-based solution, or Nvidia will walk all over them.

Oh shoot, I'm hoping they've improved since then, otherwise, the 3080 edges out in value I think.

Yeah super resolution, and unlike DLSS 2.0, it's not dev sided and does not use an AI neural network. Though its performance remains to be evaluated.

Revenant_Knight

Gawd

- Joined

- Nov 18, 2011

- Messages

- 696

Oh shoot, I'm hoping they've improved since then, otherwise, the 3080 edges out in value I think.

Yeah super resolution, and unlike DLSS 2.0, it's not dev sided and does not use an AI neural network. Though its performance remains to be evaluated.

So some sort of hardware/shader upscaling and reconstruction with maybe a next gen TAA? That could be pretty cool. Makes sense as the consoles will need it as well.

Nvidia's magic happens because they are able to utilize the Tensor cores to intelligently upscale. If AMD is doing software trickery to compete, there is ZERO chance it will work as well as DLSS. AMD has to find a way to implement a hardware-based solution, or Nvidia will walk all over them.

Math is math. IF you can make it run as fast as it needs to on something generic (eg, software), then you don't need a hardware solution to do so - especially since most things have very capable hardware to accelerate certain mathematical functions as-is anyway. Not saying this is true in this case, but we thought at one point it would always take hardware decoders to play DVDs, for instance - and then we got fast enough CPUs with enough extensions to do it there. It's all math in the end - we'll just have to wait and see what they did.

It's all cyclical too - hardware comes out, software finds a way of doing it just as well, then dedicated hardware to accelerate certain portions, and so on. Thus is the wheel of computing.

Viper87227

Fully [H]

- Joined

- Jun 2, 2004

- Messages

- 18,017

I don't think so.

Yes they are and its called Super Resolution.

By AMD's own definition, Super Resolution is their version of DSR. In their example, say you game on a 1080p display but have extra GPU horsepower, you could use Super Resolution to render the game at 4K and then output at 1080p, giving a better image than native 1080p. This is not what DLSS is, which renders at a lower resolution and then uses AI to upscale the image. They also have Radeon Image Sharpening, which, best I can tell, is literally just a sharpening filter. Neither of these technologies is intended to give a 4K-like picture without actually rendering at 4K. Thus, neither is comparable to DLSS.

Unless AMD has made claims that they intend to update the technology to do that?
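The difference between the two approaches described above (render high and downsample, versus render low and upscale) comes down to how many pixels are actually rendered relative to what the display shows. The sketch below just compares pixel counts for the resolutions mentioned in the thread; the `RESOLUTIONS` table and the `pixel_ratio` helper are illustrative names, and pixel count is only a rough proxy for rendering cost.

```python
# Rendered pixels relative to output pixels for the cases discussed in the thread.
# > 1.0 means supersampling (render high, output low);
# < 1.0 means upscaling (render low, output high).
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_ratio(render: str, output: str) -> float:
    """Pixels rendered divided by pixels displayed."""
    rw, rh = RESOLUTIONS[render]
    ow, oh = RESOLUTIONS[output]
    return (rw * rh) / (ow * oh)


supersample = pixel_ratio("4K", "1080p")      # downsampling example from the post
upscale_1440 = pixel_ratio("1440p", "4K")     # DLSS case: 1440p render, 4K output
upscale_720 = pixel_ratio("720p", "4K")       # the "720p" jab from earlier

print(f"4K render -> 1080p output: {supersample:.2f}x the output pixels")
print(f"1440p render -> 4K output: {upscale_1440:.1%} of the output pixels")
print(f"720p render -> 4K output:  {upscale_720:.1%} of the output pixels")
```

So the downsampling case renders four times the pixels it displays, while a 1440p-to-4K upscale renders a bit under half of them, which is why the two features are not interchangeable.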

VirtualMirage

Limp Gawd

- Joined

- Nov 29, 2011

- Messages

- 470

Well, the RTX 2080 Ti was getting at least 390 fps in that sample application (CPU and other system info unknown). It would be interesting to know how much better the 3080 and 3090 get in it as well to be able to use as a comparison. I know with other benchmarks, the 3080 was roughly 35-45% faster in ray tracing alone over the 2080 Ti and 85-100% faster than a standard 2080. If that performance bump can be equally translated to the Procedural Geometry sample, then that would put the 3080 at around 526-565 fps. That's around 11.6-20% faster than what AMD lists if all things were equal.

Looks like it's some Microsoft sample app.
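The extrapolation in that post can be reproduced in a few lines. This is a sketch of the poster's arithmetic only: the 390 fps baseline and the 35-45% uplift are the post's figures, and the AMD number is not stated anywhere in the thread, so it is back-computed here from the quoted "11.6-20% faster" range and should be treated as implied, not sourced.

```python
# Reproduce the 3080 estimate: scale the 2080 Ti result by the claimed RT uplift.
BASE_2080TI_FPS = 390            # from the post
UPLIFT_RANGE = (0.35, 0.45)      # 3080 over 2080 Ti in RT, per the post

est_low, est_high = (BASE_2080TI_FPS * (1 + u) for u in UPLIFT_RANGE)

# The post says this is 11.6-20% faster than AMD's listed figure.
# Back out the AMD figure those percentages imply (an inference, not a quote).
LEAD_RANGE = (0.116, 0.20)
implied_amd_low = est_low / (1 + LEAD_RANGE[0])
implied_amd_high = est_high / (1 + LEAD_RANGE[1])

print(f"Estimated 3080: {est_low:.1f} to {est_high:.1f} fps")
print(f"Implied AMD figure: {implied_amd_low:.0f} to {implied_amd_high:.0f} fps")
```

Both ends of the range point at roughly the same AMD figure, so the post's percentages are at least internally consistent. Whether a 35-45% uplift from other benchmarks carries over to this particular sample is the untested part.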

https://www.amd.com/en/technologies/rdna-2

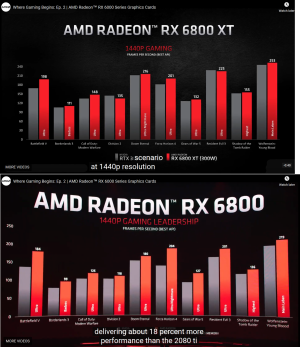

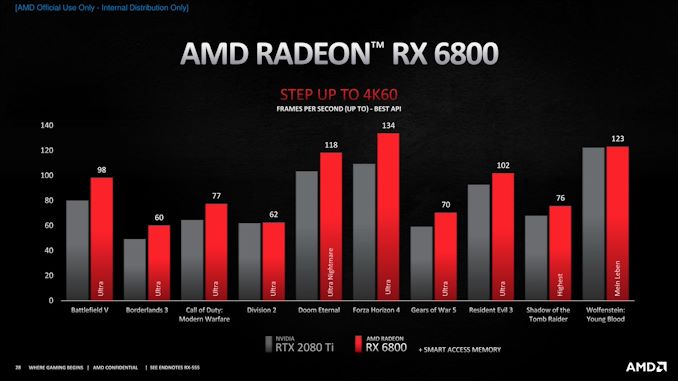

View attachment 293580

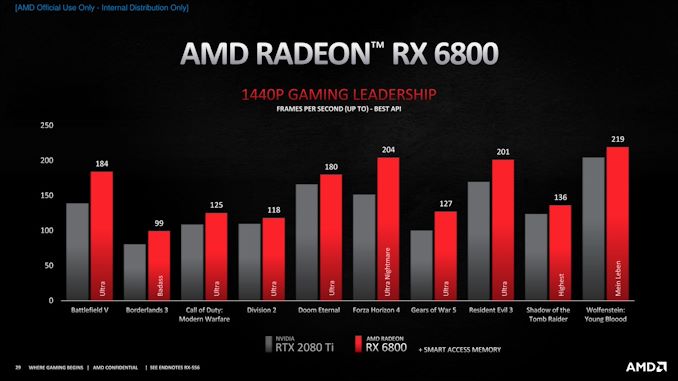

Do people have different eyes than me or something? The 6800 is pretty far ahead of a 3070. This is at 4K.

The 3070 is pretty much a drop in replacement for a 2080ti at less than half the price (and nearly the same power envelope) if your only metric is frame rates. We'll see how the 3070 does with a Zen 3 chip compared to a 6800 on Zen 3, but from the slides the 6800 seems closer to the 3070 than a future ti card.

This is at 2K:

Saying these two cards are close is nonsense.

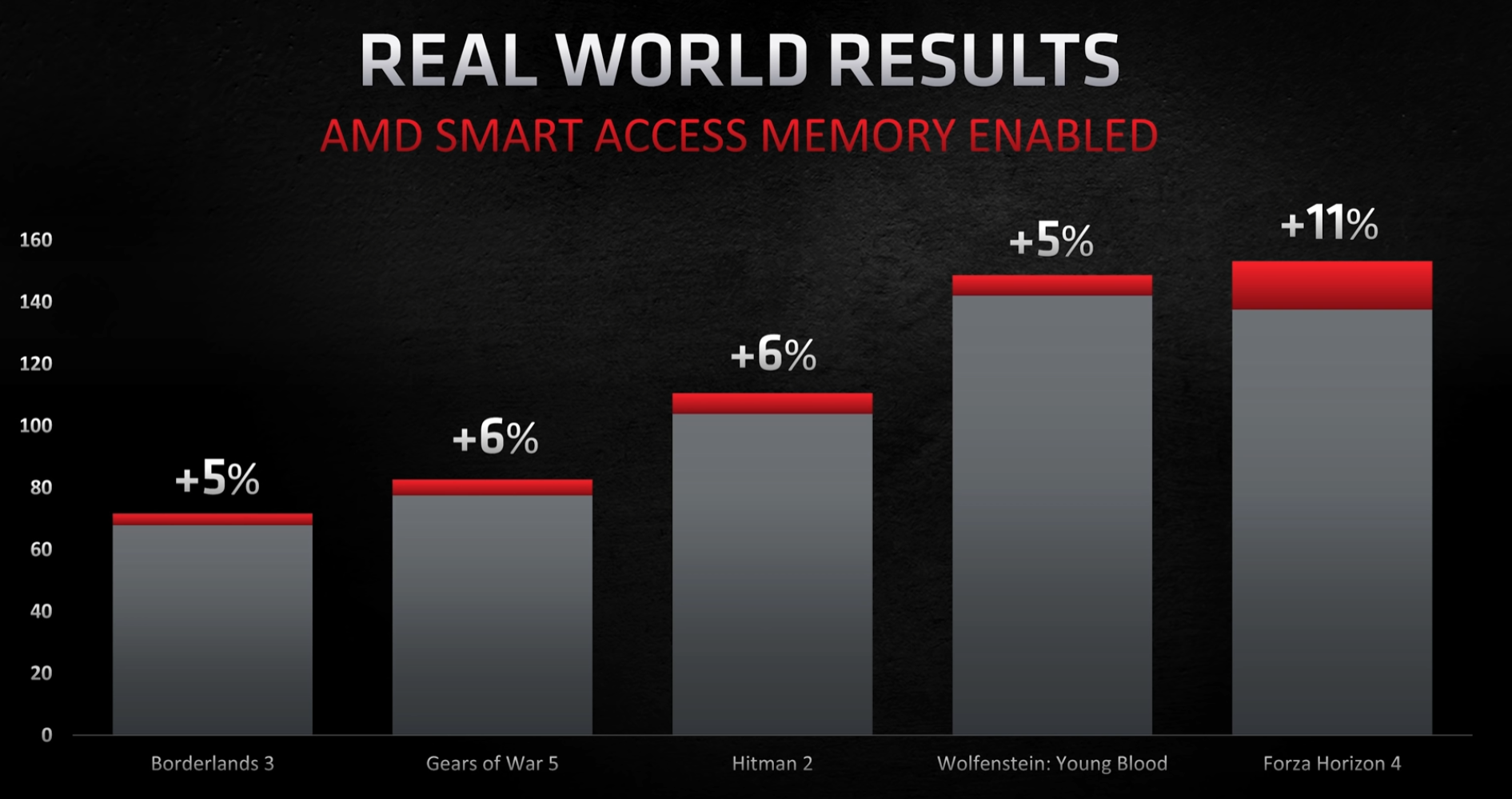

Those graphs are killing me with the "+ Smart Access Memory" point at the bottom. I want to know what performance is like WITHOUT product stacking. Lots of people bought Intel 10 series chips and have no plans to buy a Zen 3 and X570 motherboard. What is performance like for those people?

Do people have different eyes than me or something? The 6800 is pretty far ahead of a 3070. This is at 4K.

View attachment 293594

This is at 2K:

View attachment 293595

Saying these two cards are close is nonsense.

Look, I want AMD to win. Competition means lower prices for everyone... but this smells like marketing, very similar to how Nvidia said the 3080 was "2x the performance of a 2080", and reality was more like 60-80% more. Just keep expectations in check until reviews hit. The RX 6000 cards will be fast, no doubt, but it just feels like people are setting themselves up for disappointment... and that's if drivers work correctly, unlike the RX 5000 series.

You're incorrect. From Anand:

I don't think so.

By AMD's own definition, Super Resolution is their version of DSR. In their example, say you game on a 1080p display but have extra GPU horsepower, you could use Super Resolution to render the game at 4K and then output at 1080p, giving a better image than native 1080p. This is not what DLSS is, which renders at a lower resolution and then uses AI to upscale the image. They also have Radeon Image Sharpening, which, best I can tell, is literally just a sharpening filter. Neither of these technologies is intended to give a 4K-like picture without actually rendering at 4K. Thus, neither is comparable to DLSS.

Unless AMD has made claims that they intend to update the technology to do that?

https://www.anandtech.com/show/1620...-starts-at-the-highend-coming-november-18th/2

"AMD is also continuing to work on its FidelityFX suite of graphics libraries. Much of this is held over from AMD’s existing libraries and features, such as contrast adaptive sharpening, but AMD does have one notable addition in the works: Super Resolution.

Intended to be AMD’s answer to NVIDIA’s DLSS technology, Super Resolution is a similar intelligent upscaling technique that is designed to upscale lower resolution images to higher resolutions while retaining much of their sharpness and clarity. Like DLSS, don’t expect the resulting faux-detailed images to completely match the quality of a native, full-resolution image. However as DLSS has proven, a good upscaling solution can provide a reasonable middle ground in terms of performance and image quality."

If it was called Nvidia Sunshine or maybe even DLSS would you care? Because so far it's DLSS nation around here like it's in every game when it's not. Nor is ray tracing btw.

Those graphs are killing me with the "+ Smart Access Memory" point at the bottom. I want to know what performance is like WITHOUT product stacking. Lots of people bought Intel 10 series chips and have no plans to buy a Zen 3 and X570 motherboard. What is performance like for those people?

I would like to know that too BTW. But then again, that's why you buy the highest performing systems. If you don't well....

But you're right I would like to know the difference for an Intel system vs an AMD one.


VirtualMirage

Limp Gawd

- Joined

- Nov 29, 2011

- Messages

- 470

Looks like we have the answer here to that:

Well, the RTX 2080 Ti was getting at least 390 fps in that sample application (CPU and other system info unknown). It would be interesting to know how much better the 3080 and 3090 get in it as well to be able to use as a comparison. I know with other benchmarks, the 3080 was roughly 35-45% faster in ray tracing alone over the 2080 Ti and 85-100% faster than a standard 2080. If that performance bump can be equally translated to the Procedural Geometry sample, then that would put the 3080 at around 526-565 fps. That's around 11.6-20% faster than what AMD lists if all things were equal.

https://videocardz.com/newz/amd-ray...dia-rt-core-in-this-dxr-ray-tracing-benchmark

Yes, the 6800 appears to just blow away the 3070. Maybe Nvidia should reduce the price to something more reasonable for an 8GB card, such as $379, and price the 3070 Ti with 12GB at $499, which may still lose to the 6800. Frankly I would rather have a 6800 card over the 3800 due to the RAM and OC headroom, of which Nvidia has none. Debating 6800XT or 6900XT myself, probably 6800XT unless AMD drops the price for the 6900XT, then again. . .

Do people have different eyes than me or something? The 6800 is pretty far ahead of a 3070. This is at 4K.

View attachment 293594

This is at 2K:

View attachment 293595

Saying these two cards are close is nonsense.

Brackle

Old Timer

- Joined

- Jun 19, 2003

- Messages

- 8,567

Who gives a crap about dlss and RT on older games no one is even playing. You don’t buy a 3080, and 3090 to go back and play control again or metro exodus. Both are older games people just use for benchmarks that won’t be used in 2-3 years. Also remember dlss has to be put in by the developer.

RT is still 2-3 generations away from being able to implement it well.

Sorry but trying to defend the nvidia cards by constantly bringing up dlss, and RT is just a huge stretch.


People keep bringing up DLSS because it's a hardware accelerated feature that AMD doesn't appear to have (nor do they have an answer for), and when it's implemented, it can yield a sizeable performance boost that puts Nvidia ahead. I'm not doubting that AMD has monstrous Rasterization perf with their cards, but AI-based rendering is here to stay, and Nvidia has proven that it works in a very real way.

If it was called Nvidia Sunshine or maybe even DLSS would you care? Because so far it's DLSS nation around here like it's in every game when it's not. Nor is ray tracing btw.

I would like to know that too BTW.

DLSS is still a somewhat new feature, so we're going to see it be used more and more as time goes on. The killer app, though, is going to be Cyberpunk 2077. It will be interesting to see how the RX 6000 cards fare against RTX 3000 cards in that game.

As for Control, it's basically a playable benchmark. So? It was sort of like Crysis; a technology showcase and a glimpse into the future. As of right now, it's the most real way we have of gauging the performance potential of these cards until Cyberpunk 2077 hits in December.

People keep bringing up DLSS because it's a hardware accelerated feature that AMD doesn't appear to have (nor do they have an answer for), and when it's implemented, it can yield a sizeable performance boost that puts Nvidia ahead. I'm not doubting that AMD has monstrous Rasterization perf with their cards, but AI-based rendering is here to stay, and Nvidia has proven that it works in a very real way.

DLSS is still a somewhat new feature, so we're going to see it be used more and more as time goes on. The killer app, though, is going to be Cyberpunk 2077. It will be interesting to see how the RX 6000 cards fare against RTX 3000 cards in that game.

They do have an answer for it if you watched the stream. And I'm not buying a card for one game, especially since it's delayed until December. Will I get Cyberpunk? Yes. But I'm not going gaga over features that only a handful of games support. Raster performance is still king and will be for years to come. That's what I'm basing cards on.

Brackle

Old Timer

- Joined

- Jun 19, 2003

- Messages

- 8,567

People keep bringing up DLSS because it's a hardware accelerated feature that AMD doesn't appear to have (nor do they have an answer for), and when it's implemented, it can yield a sizeable performance boost that puts Nvidia ahead. I'm not doubting that AMD has monstrous Rasterization perf with their cards, but AI-based rendering is here to stay, and Nvidia has proven that it works in a very real way.

DLSS is still a somewhat new feature, so we're going to see it be used more and more as time goes on. The killer app, though, is going to be Cyberpunk 2077. It will be interesting to see how the RX 6000 cards fare against RTX 3000 cards in that game.

As for Control, it's basically a playable benchmark. So? It was sort of like Crysis; a technology showcase and a glimpse into the future. As of right now, it's the most real way we have of gauging the performance potential of these cards until Cyberpunk 2077 hits in December.

Yes it’s a benchmark that is built on nvidia software to showcase how well nvidias dlss is. You really think Amd has a chance to do it better on nvidia sponsored games? Now sure when comparing ray tracing I can see that argument, but dlss is nvidia sponsored. So using dlss as an argument is stupid.

Free performance is stupid? That's news to me.

Yes it’s a benchmark that is built on nvidia software to showcase how well nvidias dlss is. You really think Amd has a chance to do it better on nvidia sponsored games? Now sure when comparing ray tracing I can see that argument, but dlss is nvidia sponsored. So using dlss as an argument is stupid.

Brackle

Old Timer

- Joined

- Jun 19, 2003

- Messages

- 8,567

With lower image quality. I mean yeah sure it’s free when you lower the image quality lol.

Free performance is stupid? That's news to me.

That’s like nvidia saying my 3090 is an 8k gaming machine! O yeah that’s right with dlss which isn’t really 8k!

I don't think any of the current RT games will be a good benchmark for RDNA2 cards unless significantly updated to take any advantage of or use RDNA2 hardware effectively. If any RT-capable console games come out for the Xbox Series S or X, that might be the first good indication. If one wants to play the current crop of RT games as a top priority, then Nvidia Ampere is probably the better solution. Still, if AMD is 22% behind, so to speak, but better than the 2080 Ti, I just don't see that as significant given the small number of RT games and the quality improvements RT brings when used.

- Joined

- May 18, 1997

- Messages

- 55,634

Laura ran the last live announcement at CES last year.

I don't remember ever seeing this woman before, the lead designer of RTG.

I still think there will be some trading blows in there without that as well, but I would have shown it off in my ecosystem too.

6900 XT is trading blows with 3090, but only coupled with the new AMD processor. It's a few percent lower than that.

Still, at 999, that's one hell of a better buy than 3090, RIP Nvidia, no wonder they canceled high vram GPUs, lol.

Patience young padawan.

The only thing they're missing is a DLSS equivalent.

hmm. has anybody else noticed how little difference there seems to be between the 6800 vs the 6800XT?

AMD's own slide for the RX 6800XT at 1440 shows it is 201 fps for Forza Horizon 4, whereas the non-XT 6800 is 204.

Why is the 6800 non-XT faster than the XT version in Forza Horizon 4?

Screenshots below.

Also, I thought it'd be useful to see the fps difference between the two. So I made a table comparing 1440p performance.

It looks like about a 13% average performance gain after omitting the Forza Horizon 4 anomaly. I assume they made a mistake there.

oh, that's strange. AMD labelled the graphics setting on Forza 4 as "Ultra Nightmare" for the RX 6800. But the 6800XT they labelled the setting as "Ultra". Does Forza Horizon 4 even have an "Ultra Nightmare" graphics setting?

Other than that, the price points line up almost exactly. 12% more cost, 13% more performance.


| Game | RX 6800 (fps) | RX 6800 XT (fps) | XT as % of 6800 |
| --- | --- | --- | --- |
| BF V | 184 | 198 | 108% |
| Borderlands 3 | 99 | 111 | 112% |
| CoD MW | 125 | 148 | 118% |
| Div 2 | 118 | 135 | 114% |
| Doom Eternal | 180 | 216 | 120% |
| Forza 4 | 204 | 201 | 99% |
| Gears 3 | 127 | 132 | 104% |
| RE3 | 201 | 225 | 112% |
| SotTR | 136 | 155 | 114% |
| Wolf YB | 219 | 253 | 116% |
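The percentages in the table and the "about 13%" average can be checked directly from the fps figures. The sketch below uses the table's numbers as posted; the $649 price for the 6800 XT is an assumption (launch MSRP, not stated in this thread), while $579 for the 6800 is mentioned earlier in the discussion.

```python
# 1440p fps pairs as posted: (RX 6800, RX 6800 XT).
FPS_1440P = {
    "BF V": (184, 198),
    "Borderlands 3": (99, 111),
    "CoD MW": (125, 148),
    "Div 2": (118, 135),
    "Doom Eternal": (180, 216),
    "Forza 4": (204, 201),
    "Gears 3": (127, 132),
    "RE3": (201, 225),
    "SotTR": (136, 155),
    "Wolf YB": (219, 253),
}
ANOMALY = "Forza 4"   # excluded from the average, as the post does

# XT result as a fraction of the non-XT result for each game.
xt_ratio = {game: xt / base for game, (base, xt) in FPS_1440P.items()}

kept = [r for game, r in xt_ratio.items() if game != ANOMALY]
avg_ratio = sum(kept) / len(kept)

PRICE_6800 = 579      # mentioned in the thread
PRICE_6800_XT = 649   # assumed launch MSRP
price_ratio = PRICE_6800_XT / PRICE_6800

for game, r in xt_ratio.items():
    print(f"{game:<14} {r:.0%}")
print(f"Average uplift excluding {ANOMALY}: {avg_ratio - 1:.1%}")
print(f"Price increase: {price_ratio - 1:.1%}")
```

With those inputs the average uplift comes out at roughly 13% against a price increase of roughly 12%, matching the post's summary.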


- Joined

- May 18, 1997

- Messages

- 55,634

Here are some numbers on percentage boost on SAM. Whole video...

That was at 4K, at 1440p, Ultra Wide resolutions and so on it will be even faster. We still don't know how much further past Rage settings these cards will go. In any case it is beating more games than not from this AMD picked selection. We just need reviews to find out how it performs in RT and so on as well as how much headroom. Since performance for RT was not presented with performance numbers, might have to wait for the reviews and if that is important to one's buying decision.

View attachment 293505

CyberJunk

Supreme [H]ardness

- Joined

- Nov 13, 2005

- Messages

- 4,242

Nvidia is in serious trouble right now. They have to lower their prices to compete and they don't have any product to sell at market. I'm not sure what they're gonna do maybe the current prices will deter sales and increase stock level naturally I don't know?

Does nVidia have cards hiding in warehouses, waiting until Nov. 18th, and then surprise everyone, saying, oh here's thousands of cards coming to store shelves right away LOL

It's not better image quality. Depending on implementation it's a reconstructed image. Meaning it's not the same as native. So it's not better image quality. It can be a different image altogether. I'm not saying it's not a good thing. But for something that only exists in like 19 games, I'm sorry there's no need to be pretending like it's everywhere when it's not.

DLSS 2.0 is literally the same or BETTER image quality than native res. This isn't a compromise. It's progress.

Welcome to 2020 when marketing team come into forums to promote features that are not universal or available universally. So yeah they would.

No one here would be touting DLSS as a feature if it wasn't at least on-par or better than the native renderer.

Nvidia is in serious trouble right now. They have to lower their prices to compete and they don't have any product to sell at market. I'm not sure what they're gonna do maybe the current prices will deter sales and increase stock level naturally I don't know?

I agree, but this doesn't happen until Nov 18th, and we see what kind of supply AMD has for everyone. If AMD has the same back orders and limited stock, then nVidia isn't in trouble at all, and AMD will be in the same boat. But at least AMD has big time sales coming due to the PS5 and new Xbox release, which I would imagine are HUGE sales for AMD.

Will be interesting to see how this plays out this holiday season, when AMD should have cards on the street then, and nVidia?

Yup. It's starting to get ridiculous. I actually like Nvidia's stuff but it's becoming a little too much for a technology that's not universal and very limited title to title.

Who gives a crap about dlss and RT on older games no one is even playing. You don’t buy a 3080, and 3090 to go back and play control again or metro exodus. Both are older games people just use for benchmarks that won’t be used in 2-3 years. Also remember dlss has to be put in by the developer.

RT is still 2-3 generations away from being able to implement it well.

Sorry but trying to defend the nvidia cards by constantly bringing up dlss, and RT is just a huge stretch.

The only thing they're missing is a DLSS equivalent.

While AMD is promising to go head to head with Nvidia in 4K gaming and more, the one big missing piece of this battle is a lack of an equivalent to Nvidia’s DLSS...Nvidia’s AI-powered super sampling technology has been transformative for the games that support it, bringing great image quality and higher frame rates by simply toggling a game setting...AMD tells me it has a new super sampling feature in testing, which is designed to increase performance during ray tracing

The company is promising its super sampling technology will be open and cross-platform, which means it could come to next-gen consoles like the Xbox Series X and PS5...AMD is working with a number of partners on this technology, and it’s expecting strong industry support...unfortunately, this won’t be ready for the launch of these three new Radeon RX 6000 Series cards...

https://www.theverge.com/2020/10/28...pecs-release-date-price-big-navi-gpu-graphics
