I was watching an XP build on YouTube by AkBKuKu on his TechTangents channel, and he is using an old P4 550. I had forgotten until I watched this video, but back then Intel was running its FSB at 800MHz. I think AMD was also doing this with its AMD64 platform; I do recall seeing an FSB for an AMD CPU at the time being something like 400MHz. I know these speeds were the system bus speed of around 100MHz hit with a multiplier on its way to the CPU to get the mad bus speeds. At the time it looked like that was the direction of CPUs: Intel and AMD were hitting higher and higher FSB speeds, and soon the 10GHz CPU that Intel was talking about was going to be the future.

Anyway, I personally fell off the PC train from around 2005 to about 2009, and when I got back it was all Core 2 Duo and Phenom CPUs that had taken over the market, and the days of insane FSB speeds were over. Now, being reminded of the past, I'm curious what happened to the philosophy of faster and faster FSB speeds. If you had asked me to predict the future of CPUs back in 2005, I would have thought FSB speeds would keep increasing along with CPU speeds.

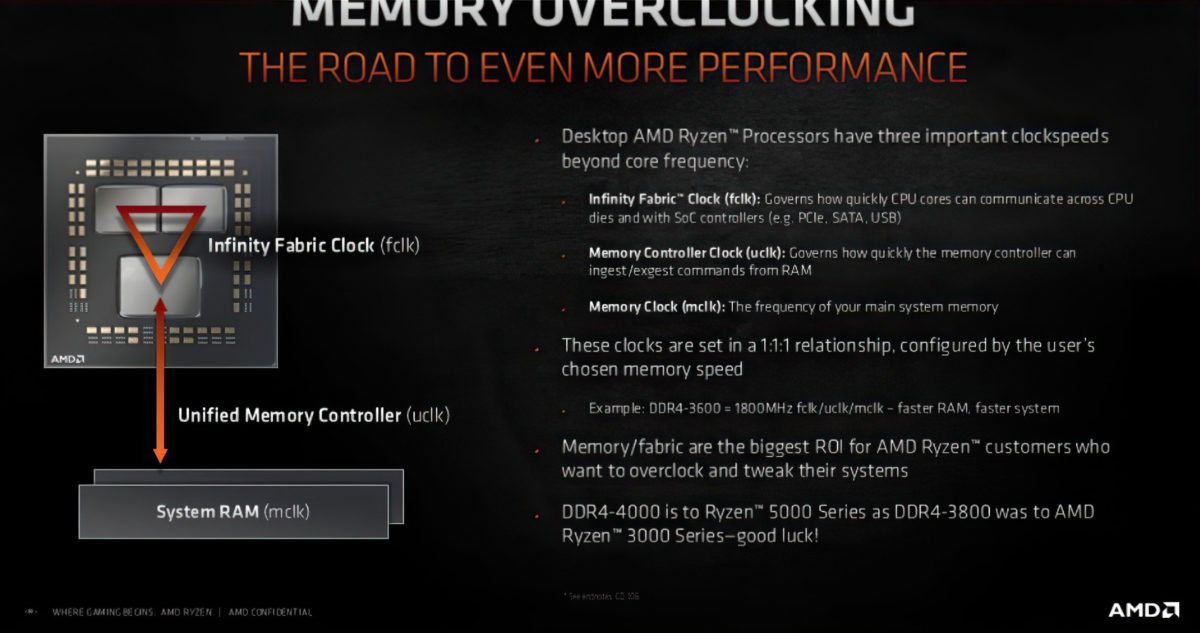

Now I do have a theory as to why. The 800MHz FSB speed that Intel was using was generated on the motherboard, probably by the northbridge, so making a faster FSB meant depending on the motherboard chipset. I'm guessing that when the memory controller and most of the northbridge got integrated into the CPU, so did the crazy FSB speeds the CPU needed, keeping the system bus speed at 100MHz. Really, as far as I can tell, it's just the memory speed that is faster than the bus speed now, and memory talks directly to the CPU, so yeah, I guess there's no more need for an 800MHz FSB.
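To put rough numbers on the FSB speeds mentioned above, here is a minimal sketch of the arithmetic. It assumes the commonly quoted figures for that era: a 200MHz base clock (rather than 100MHz) that is "quad-pumped" on the P4 for the 800MHz rating and double-pumped on the Athlon XP for the 400MHz rating, with a 64-bit wide bus. The function names are made up for illustration only.

```python
def effective_fsb_mts(base_clock_mhz: float, transfers_per_clock: int) -> float:
    """Marketing 'FSB speed': base clock times data transfers per clock cycle."""
    return base_clock_mhz * transfers_per_clock


def peak_bandwidth_mb_s(effective_mts: float, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in MB/s for a bus of the given width."""
    return effective_mts * bus_width_bits / 8


# Assumed figures, not taken from the video: 200MHz base clock on both platforms.
p4_fsb = effective_fsb_mts(200, 4)         # quad-pumped
athlon_xp_fsb = effective_fsb_mts(200, 2)  # double-pumped

print(f"P4 FSB:        {p4_fsb:.0f} MT/s, {peak_bandwidth_mb_s(p4_fsb):.0f} MB/s peak")
print(f"Athlon XP FSB: {athlon_xp_fsb:.0f} MT/s, {peak_bandwidth_mb_s(athlon_xp_fsb):.0f} MB/s peak")
```

If those assumptions hold, the "800MHz" number is really 800 million transfers per second on a 200MHz clock, which would mean the multiplier was about transfers per clock rather than a faster clock signal.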

Anyway, this was a fun little think on the past that I grew up with, during one of the more interesting times in computer hardware history, and I figured I would ask what happened to the insane FSB of yesteryear.
