DejaWiz

Fully [H]

- Joined: Apr 15, 2005
- Messages: 21,825

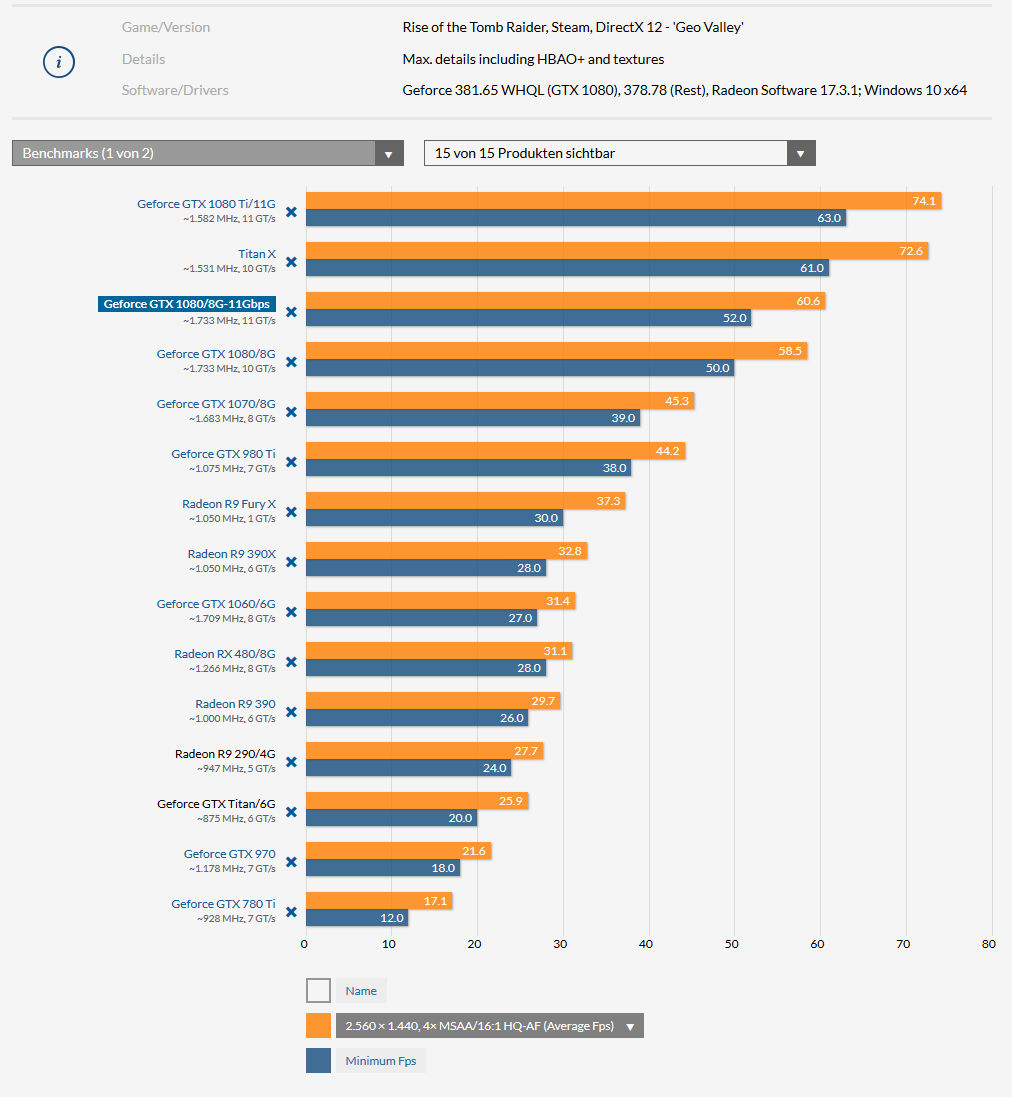

My 980 Ti just needs to keep truckin' along for a little while longer... Volta or Vega will be my next GPU: whichever one provides at least double the performance, lower power draw, and less heat output in the < $600 range I'm sticking to.
