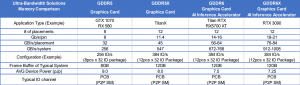

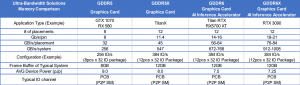

A Micron document inadvertently confirms the 3090 product name and memory config (12 GB GDDR6X, 384-bit bus), while seemingly shooting down claims that it would be 20GB-24GB. This table was cropped out of the Micron doc:

https://videocardz.com/newz/micron-confirms-nvidia-geforce-rtx-3090-gets-21gbps-gddr6x-memory

Edit: As pointed out, this does NOT preclude a 24GB card.

Given everything we have seen, there likely will be 24GB cards. I start to wonder if there will be 12GB cards as well...
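For anyone wondering how the same 384-bit bus could carry either 12GB or 24GB, here is a rough back-of-the-envelope sketch. The assumptions are mine, not from the Micron doc: each memory chip sits on a 32-bit channel, chips are 8Gb (1GB) each, and a 24GB card would put two chips on each channel (clamshell-style). The 21 Gbps figure is the one from the linked VideoCardz article; the function names are just made up for illustration.

```python
def capacity_gb(bus_width_bits, chip_density_gbit, chips_per_channel=1,
                channel_width_bits=32):
    """Total memory in GB for a given bus width and chip density.

    Assumes one 32-bit channel per chip position (an assumption,
    not something stated in the Micron document).
    """
    channels = bus_width_bits // channel_width_bits
    return channels * chips_per_channel * chip_density_gbit / 8


def bandwidth_gb_s(bus_width_bits, data_rate_gbps):
    """Peak bandwidth in GB/s: bus width times per-pin data rate, in bytes."""
    return bus_width_bits * data_rate_gbps / 8


if __name__ == "__main__":
    # 384-bit bus, 8Gb chips, one chip per channel
    print(capacity_gb(384, 8))                        # 12.0
    # Same bus, two chips per channel (assumed clamshell layout)
    print(capacity_gb(384, 8, chips_per_channel=2))   # 24.0
    # 384-bit bus at 21 Gbps per pin
    print(bandwidth_gb_s(384, 21))                    # 1008.0
```

So under those assumptions the table's 12GB figure and a 24GB card are both consistent with a 384-bit bus; the bus width alone does not settle the capacity.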

