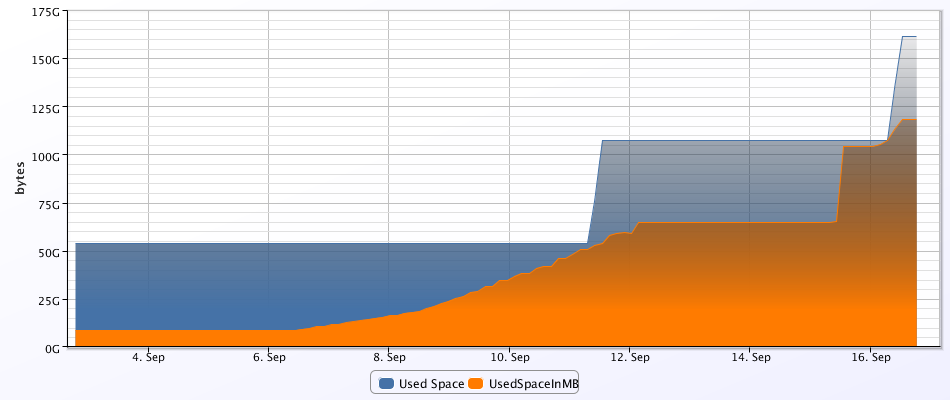

Not sure what is causing the issue, but the vCenter MS SQL DB has grown by more than 100 GB in the past 10 days. I found some good information online about finding the largest tables and reducing their size, but not much about what might be causing the growth. No settings have changed; it just started growing and hasn't stopped. These are the tables I was looking at:

vpx_hist_stat1 to vpx_hist_stat4

vpx_sample_time1 to vpx_sample_time4

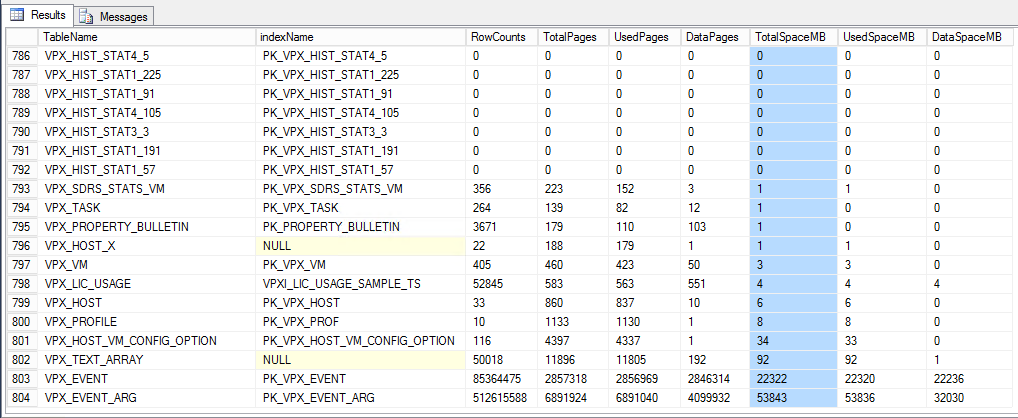

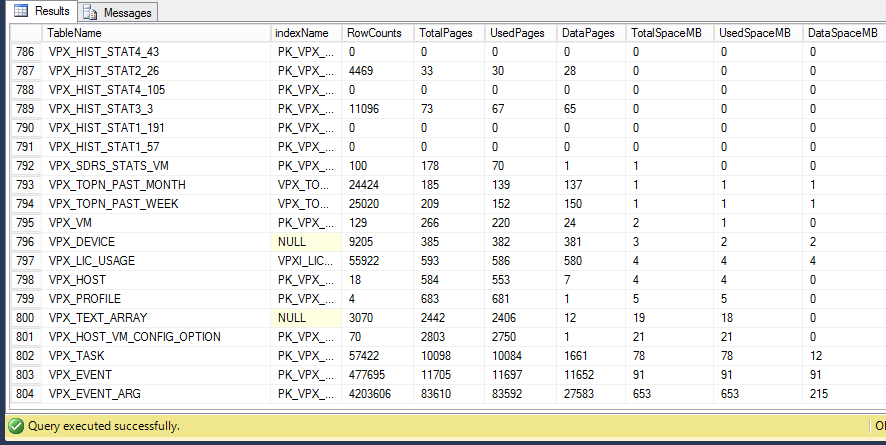

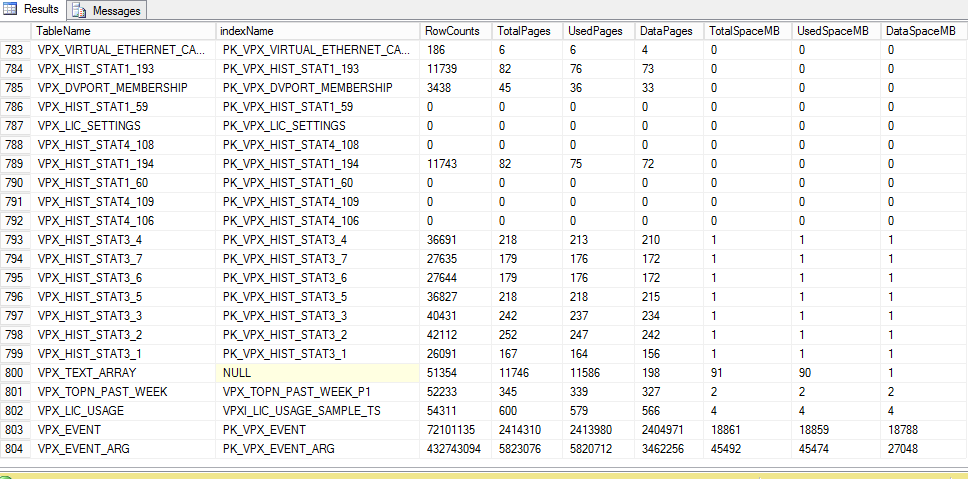

vpx_event and vpx_event_arg

vpx_task

What I found is that instead of just vpx_hist_stat1 to vpx_hist_stat4, there are about 240 hist_stat tables, although most of them appear to be 0 MB.

Much of the space is in the event and event_arg tables: vpx_event is 19 GB and vpx_event_arg is 46 GB.
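With roughly 240 hist_stat tables in the mix, a raw per-table size list is hard to read. One way to see where the space actually sits is to roll the rows up by table family. The sketch below is a minimal example, assuming you have already exported (table name, size) pairs from SQL Server, and assuming the extra tables are partitions named like `<family><digits>_<digits>` (for example `vpx_hist_stat1_12`). That naming pattern is a guess; check it against your own table list. The helpers `family` and `summarize` are hypothetical names for illustration.

```python
import re
from collections import defaultdict


def family(table_name: str) -> str:
    """Strip a trailing numeric suffix: vpx_hist_stat1_12 -> vpx_hist_stat.

    ASSUMPTION: partition tables are named <family><digits>[_<digits>...].
    Names without a numeric suffix (e.g. vpx_event_arg) are returned unchanged.
    """
    return re.sub(r"\d+(_\d+)*$", "", table_name.lower())


def summarize(sizes):
    """Roll (table_name, size) rows up by family, largest family first.

    Sizes can be in any single unit (MB, GB, ...). Returns a list of
    (family, table_count, total_size, percent_of_all_rows) tuples.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for name, size in sizes:
        fam = family(name)
        totals[fam] += size
        counts[fam] += 1
    grand = sum(totals.values())
    ordered = sorted(totals.items(), key=lambda kv: kv[1], reverse=True)
    return [
        (fam, counts[fam], total, 100.0 * total / grand if grand else 0.0)
        for fam, total in ordered
    ]


if __name__ == "__main__":
    # Only the two sizes quoted in the post, in GB; add your own exported rows.
    for fam, n, total, pct in summarize([("vpx_event", 19), ("vpx_event_arg", 46)]):
        print(f"{fam:<20} tables={n:<4} size={total:>6.1f} GB  share={pct:5.1f}%")
```

With just the two figures above, vpx_event_arg accounts for 46 of the 65 GB (about 71%), so the event tables, not the hist_stat partitions, look like the first place to dig.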

This is one of the articles I found: http://kb.vmware.com/selfservice/se...ype=kc&docTypeID=DT_KB_1_1&externalId=1007453, but it seems focused on the vpx_hist tables. Any recommendations on how to clean this up and keep it from growing out of control?
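If the event tables do need a manual purge, a common SQL Server approach is to delete old rows in small batches so that each transaction, and the log growth it causes, stays small. Child rows in vpx_event_arg are removed before their parent rows in vpx_event. The Python helper below only generates such a T-SQL script; it does not connect to anything. It is a sketch under assumptions, not a tested vCenter procedure: the table names come from the post, but the `EVENT_ID` and `CREATE_TIME` columns, the UTC timestamps, and the `purge_script` helper itself are hypothetical. Verify the schema, back up the database, and stop the vCenter service before running anything like this.

```python
def purge_script(retention_days: int, batch_size: int = 10000) -> str:
    """Generate a batched T-SQL purge for events older than retention_days.

    ASSUMPTIONS (hypothetical, verify against your vCenter schema first):
      - VPX_EVENT has EVENT_ID and CREATE_TIME columns.
      - VPX_EVENT_ARG rows reference VPX_EVENT through EVENT_ID.
      - CREATE_TIME is stored in UTC (use GETDATE() instead if it is local time).
    """
    if retention_days < 1 or batch_size < 1:
        raise ValueError("retention_days and batch_size must be positive integers")
    top = f"DELETE TOP ({batch_size})"
    lines = [
        f"DECLARE @cutoff DATETIME = DATEADD(day, -{retention_days}, GETUTCDATE());",
        "DECLARE @rows INT = 1;",
        # Pass 1: child rows whose parent event is older than the cutoff.
        "WHILE @rows > 0",
        "BEGIN",
        f"    {top} a FROM VPX_EVENT_ARG a",
        "        INNER JOIN VPX_EVENT e ON e.EVENT_ID = a.EVENT_ID",
        "        WHERE e.CREATE_TIME < @cutoff;",
        "    SET @rows = @@ROWCOUNT;",
        "END;",
        # Pass 2: the parent event rows themselves.
        "SET @rows = 1;",
        "WHILE @rows > 0",
        "BEGIN",
        f"    {top} FROM VPX_EVENT WHERE CREATE_TIME < @cutoff;",
        "    SET @rows = @@ROWCOUNT;",
        "END;",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(purge_script(retention_days=30, batch_size=5000))
```

Each `DELETE TOP (n)` runs as its own statement, so with autocommit the log only has to hold one batch at a time. Purging alone does not stop the growth, though. Whatever is generating the events still needs to be found, and the retention setting should be capped so that the tables stay bounded.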
