So, I switched up how my home lab works. I now have my 3960X (main workstation) and a 1950X (HTPC/Plex server) running virtualized ESX hosts on NVMe drives, which means that even nested, performance on the guests is more than adequate - and I was able to cut down the number of running machines quite a bit too. Plus, both can game/encode/etc. while running the nested hosts without even blinking...

Debating on picking up one more 1950X combo for the final part, or I could grab a modern X570 board and a 3950X. I'd feed it 64G of RAM for now and an NVMe drive or two, plus a 10G card if needed, and drop Linux on it - giving 8C/32G to the nested host and leaving half (ish) for other workloads. This would give me 24C/96G of nested capacity to work with, which is adequate for my current needs.
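Quick sanity check on that capacity math, as a rough sketch. It assumes all three boxes hand the same 8C/32G slice to their nested host (that split is only stated for the new box, the other two are my assumption to make 24C/96G add up), and the dictionary keys are just labels:

```python
# Assumption: every box gives an identical 8C/32G slice to its nested ESX host.
# Only the third box's slice is stated outright; the other two are inferred
# from the 24C/96G total.
nested_slices = {
    "3960X workstation": (8, 32),
    "1950X HTPC/Plex": (8, 32),
    "third box (1950X or 3950X)": (8, 32),
}

total_cores = sum(cores for cores, _ in nested_slices.values())
total_ram_gb = sum(ram for _, ram in nested_slices.values())
print(f"nested capacity: {total_cores}C/{total_ram_gb}G")

# The third box is a 16-core part either way (1950X or 3950X) with 64G planned,
# so this is what stays on the Linux side for other workloads.
third_cores, third_ram_gb = 16, 64
slice_cores, slice_ram_gb = nested_slices["third box (1950X or 3950X)"]
left_cores = third_cores - slice_cores
left_ram_gb = third_ram_gb - slice_ram_gb
print(f"left on third box: {left_cores}C/{left_ram_gb}G")
```

If the workstation ends up giving more than 8C to its nested host, just change its tuple and the totals follow.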

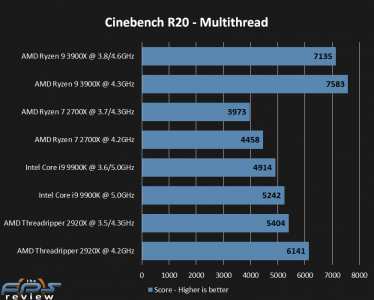

The advantage of the X570 is that it's Zen 2/Zen 3, which is more powerful and tends to be more RAM tolerant (better IMC) - and it doesn't have the crappy X399 socket mess that is a PITA to deal with. But it's more expensive - there are a couple of X399 combos for sale for ~500/600, and on the X570 side that much only covers the 3950X CPU... And it tops out at 16C and dual-channel RAM, plus it won't really like 128G if I ever jump there.

The X399, however, has quad-channel RAM (matters a ~bit~ for this workload), is cheaper for the board/CPU, and can go up to 32C later on if needed (2990WX), plus potentially take more NVMe drives on the PCIe bus... but it's more finicky, is older Zen 1/1+, costs more for everything around it, and pulls a lot more power...

It's a bit of a weird workload, for sure - and I'm trying to keep cost down as much as possible, so the TR is tempting... but while the board/cpu for TR are cheaper, everything ~else~ is more expensive...

