CAD4466HK

2[H]4U

- Joined

- Jul 24, 2008

- Messages

- 2,731

I want to congratulate you guys on being a guinea pig for the rest of us who just couldn't muster up the courage to be a guinea pig.


Why are you using FSR when you have an Nvidia card? It supports DLSS.

I reinstalled from scratch using the update & DX12.

Ehhhhh... at DX12 1080p FSR2 Quality with dynamic resolution scaling ON (and obv no RTX) - Ultra+ looks better but the fps is lingering in the high 50's around Novigrad.

And with G-Sync on, whether V-Sync is on or off, there are still some weird stutters.

- DX11 client bumps the fps to approx 75 in the same scenarios but without FSR available and still... random stuttering

I don't expect this to be a Cyberpunk-esque situation where it'll take a year, but I'd wager 2-3 months "in the oven" will smooth things out.

.... Shame cuz 1.32 runs like greased butter /w mods (I used a 90 fps cap but it def went wayyyyy higher)

It really should've been a separate installation/client in Steam, à la Skyrim/Bioshock etc.

He's using a GTX 1080.

Game runs perfect for me. HDR looks great. Max settings with DLSS quality on a 4080.

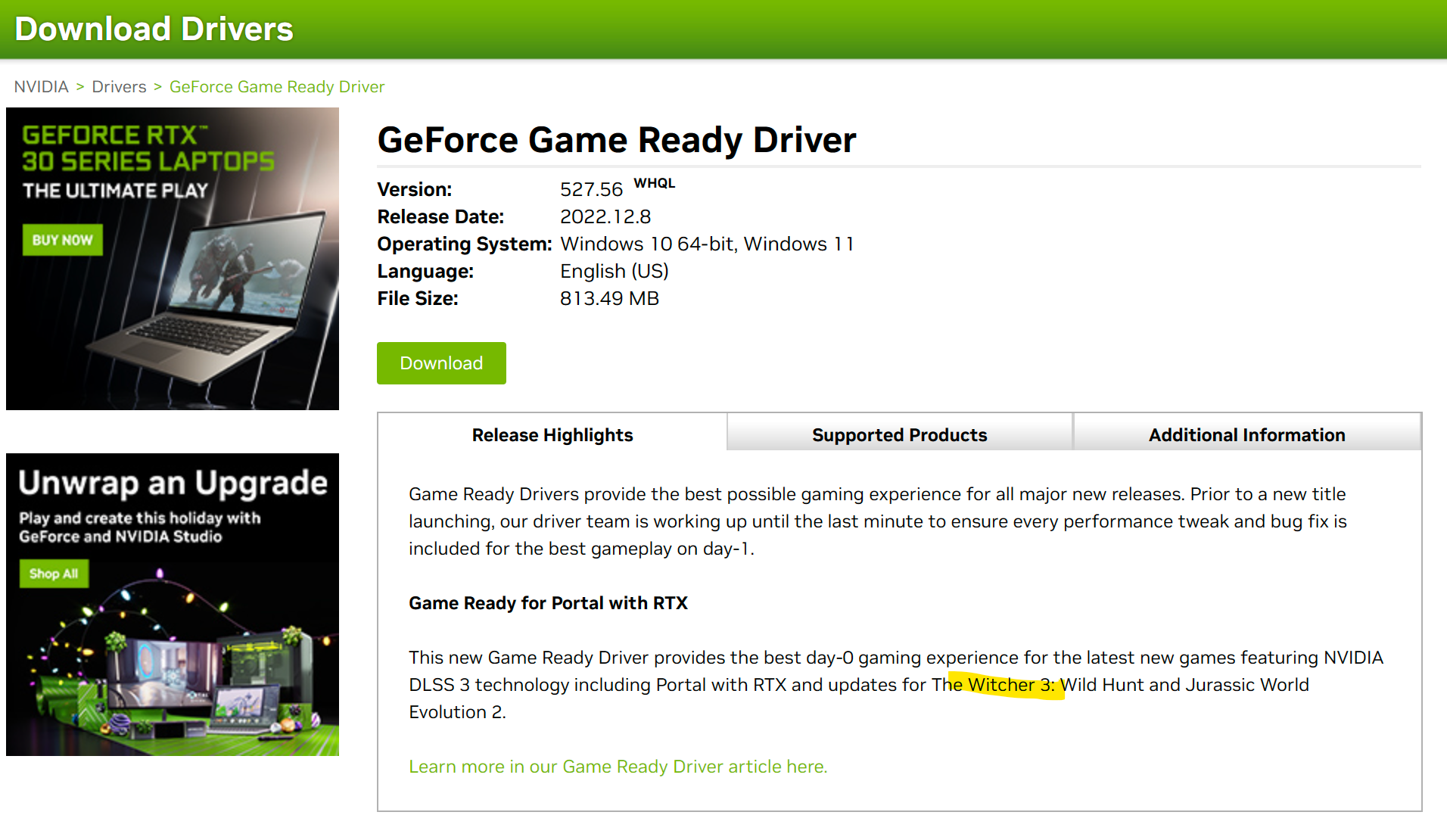

Did you guys update your Nvidia drivers?

4k?

Updated AMD drivers to 22.11.2 and I get a solid 85 FPS on full Ultra settings without RT. RT doesn't add that much IMO. 5950X/6900XT here just for reference. I still think it can be optimized more since Radeon software says I am utilizing 6-8 GB out of 16GB.

Yes 4k on LG C1 Oled (also FSR Quality for clarification)

This is the first game I've played that makes me want to buy a 4090.

Almost as if there's an entire company that helps developers implement these features to elicit such a feeling for their own benefit...

I have a 4090, I get 100fps in general play in caves, fields etc with RT off. I tried enabling RT this morning, it crashed. I do not see this as an upgrade besides the ray tracing, but RT is not worth losing 70% of my FPS.

It's playable on my 12700K with a 3080 at 4K resolution when I set DLSS to balanced and everything else to Ultra. No, it's not 60 FPS, but it looks really really good on my LG C2 42" TV/Monitor. Make sure dynamic resolution is off. I turned off motion blur, blur, and chromatic aberration. Everything else is maxed out. I tried lowering the resolution but it affects the picture quality too negatively and softens everything up. Dynamic Resolution being on looks horrible.

I'm toying with turning Ray Tracing off and on for great performance vs. passable performance. I think you give up more than I want to with Ray Tracing off. There's some special lighting happening I think with Ray Tracing. Since the game doesn't need 120Hz motion to be playable, I think I'm leaning towards keeping the eye candy as high as possible and still having an above 30FPS experience.


The game crashes if you try to turn ray tracing on when you are already in the game. You need to change the option before loading a save game or starting a new game.

Every Witcher 3 PC player knows Novigrad is the true benchmark. So with everything maxed, standing in Hierarch Square: 34 FPS RT on, 55 FPS RT off, 62 FPS Hairworks off. I am just turning down graphics left and right to get to where I was.

Used to be 180 FPS prepatch
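To put the Novigrad numbers above in perspective, here is a rough sketch in Python that turns them into percentage frame loss and per-frame time. The function names `fps_loss_pct` and `frame_time_ms` are made up for illustration, and the inputs are only the figures reported in this post, not a controlled benchmark.

```python
def fps_loss_pct(baseline_fps: float, new_fps: float) -> float:
    """Percentage of frames lost going from baseline_fps to new_fps."""
    if baseline_fps <= 0:
        raise ValueError("baseline_fps must be positive")
    return (baseline_fps - new_fps) / baseline_fps * 100.0


def frame_time_ms(fps: float) -> float:
    """Average time per frame in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


PREPATCH_FPS = 180.0  # pre-patch figure reported in the post

# Post-update figures reported for the same spot in Novigrad.
scenarios = {
    "RT on": 34.0,
    "RT off": 55.0,
    "Hairworks off": 62.0,
}

for label, fps in scenarios.items():
    print(f"{label}: {fps_loss_pct(PREPATCH_FPS, fps):.0f}% fewer frames, "
          f"{frame_time_ms(fps):.1f} ms per frame")
# RT on: 81% fewer frames, 29.4 ms per frame
# RT off: 69% fewer frames, 18.2 ms per frame
# Hairworks off: 66% fewer frames, 16.1 ms per frame
```

Frame time is often the more telling number here: going from 180 FPS to 34 FPS means each frame takes roughly 29 ms instead of under 6 ms.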

It's definitely working on me TBH. Game looks incredible with RTGI and AO.

FACT.

Lmao

The only reason I'd maybe play again is to pick Yen for once. That's been my plan a couple times but soon as I see Triss in that green dress it's a wrap.

Glad it's not just me

https://www.rockpapershotgun.com/th...ed-worse-performance-even-without-ray-tracing

"I found she’d previously averaged 102fps from the RTX 3070 when running the game’s Ultra preset back in 2020. 27fps therefore represents a 74% ray tracing tax, or 67% when using Balanced DLSS to make it just about playable. But it’s even worse than that, because I re-ran the Ultra preset with this next-gen update and averaged only 90fps. "

Yeah, people seem to be forgetting that there is a lot more in this update besides ray tracing. For example, a lot of in-game assets have been upgraded and draw distance has been substantially increased.

That does not come for free, yet it seems to surprise a lot of people that the upgrades require more computational power.

Right. Just look at the foliage levels. It’s a pretty significant difference! And it looked good before, but it’s pretty amazing now considering this was a 2015 game. I’m definitely just enjoying exploring and taking in the game world. Last night while playing, a thunderstorm happened in the game and a few villagers ran towards the cover of a small clump of trees. They had a torch initially, but the rain put the torch out. The lightning flashing and storm in HDR with ray tracing was a sight to behold.

I suspect my system has a problem related to the same issue.

Unplugged my second monitor, and it fixed the crashing-on-load bug I had with RT on yesterday. Looks like there is no switch to turn HDR off/on in game. If you have an HDR monitor, it is on automatically if Windows has it enabled. So if you are having crash issues with the game and have 2 monitors, try physically disconnecting your second one from the card. I have 2 monitors; one is off and not used most of the time, but Windows still sees it, as does the Nvidia control panel. I think the game is getting confused somewhere when RT is on and multiple monitors are detected, even if the monitor is off.

Completely agree that some are forgetting it. I am not. I am still disappointed to see it inefficiently improving graphics.

How is it inefficient? What metric are you using to judge that? This is one of the most demanding raytracing titles yet. Obviously, hardware that can't deal with this much raytracing well (Pretty much everything outside of a 4080/4090) is going to struggle.

My man, I’m on a 4090 running a 3 GHz core clock and getting 35 fps in Novigrad at 3440x1440 with everything turned up. Turning off RT gets me to 60fps. I’m willing to accept I might be trading off some performance with my lowly 5900X, but I’m not even at a full 4K, I’m running the latest drivers and my system is optimized/stable.

I'm on a stock 4080 and a 5800X3D. At 1440p with max settings and DLSS quality (DLSS only, not frame generation) I'm seeing around 80FPS. Perfectly smooth and playable at that FPS with G-Sync.

As to inefficiency, that’s obvious - game looks somewhat better than before, costs 75% of my frames. Not efficient.

Look at the photos above and let me know if your impression is also that RT just looks darker.

running RT as well?