Stryker7314

Gawd · Joined Apr 22, 2011 · Messages: 867

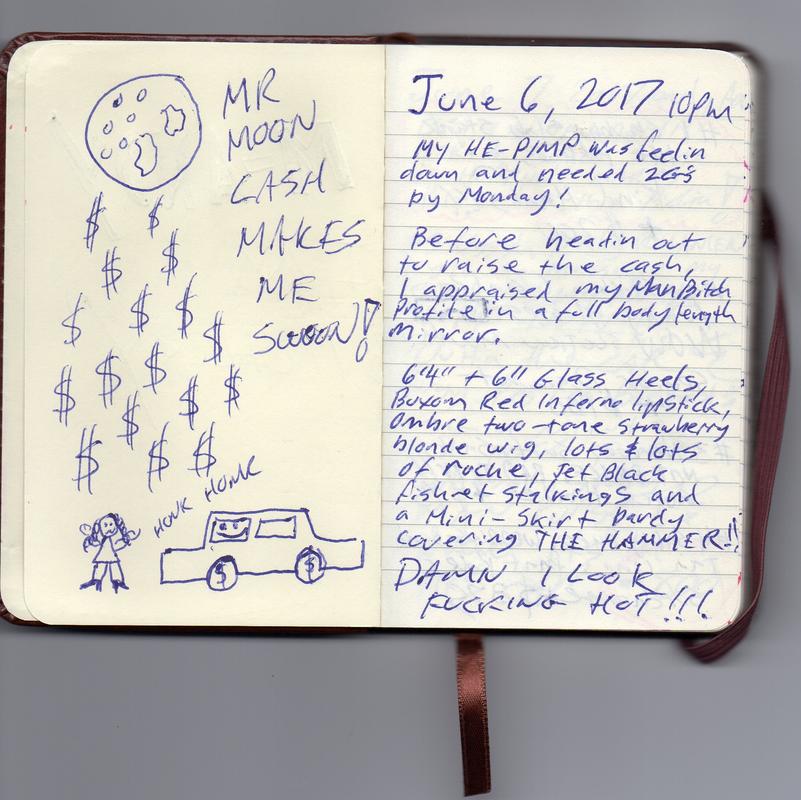

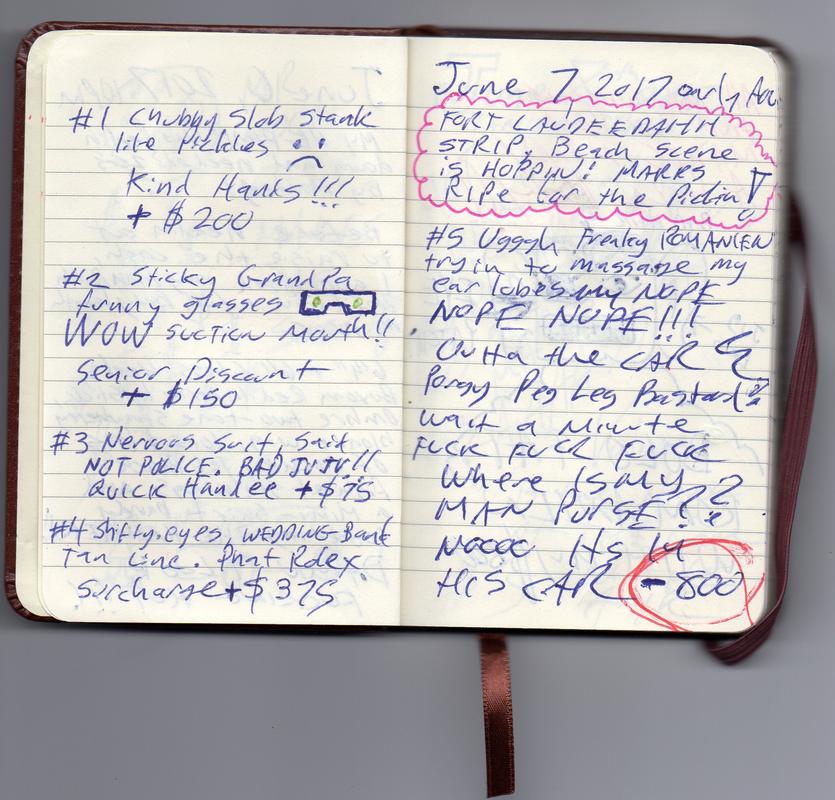

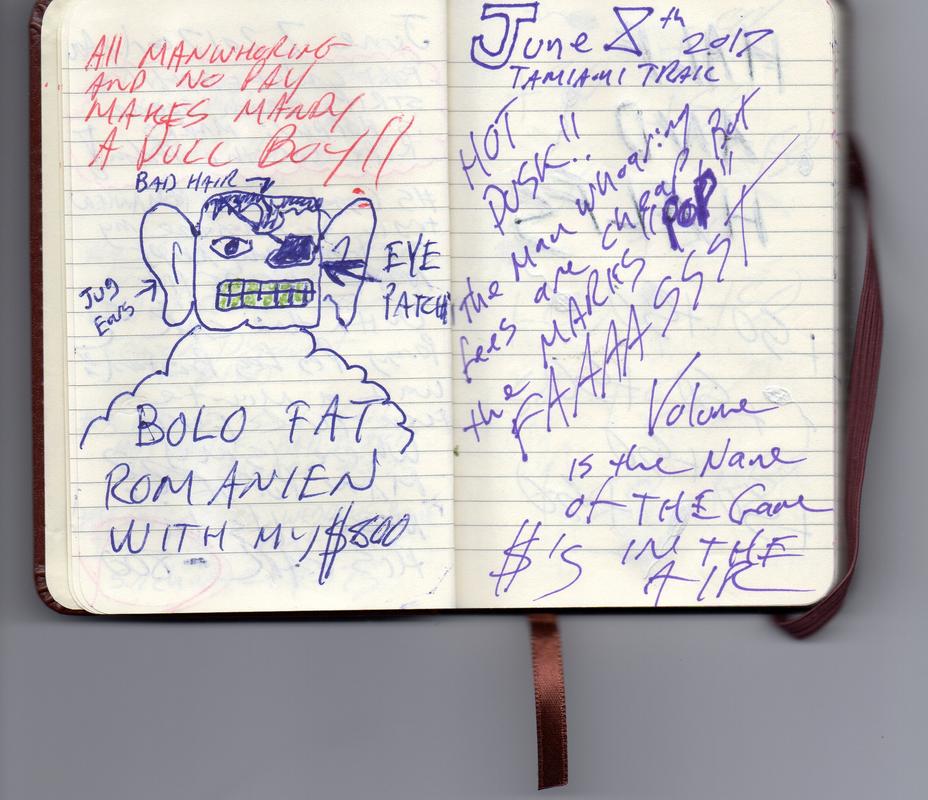

Here is my C9 setup. I just got it going a few days ago and am gaming at 1440p, 120 Hz, with VRR right now. It works very well with the latest Nvidia drivers and the latest OLED firmware. Sorry for the mess; I'm still straightening it up. For the $1200 I paid, it works great and will last me just fine until an HDMI 2.1 card is available. IMHO, I'd rather keep the $2800 difference in my pocket.


Just curious: is 1440p displayed 1:1 (pixel for pixel) with black borders around it, or is it interpolated to fill the entire panel?
