DukenukemX

Supreme [H]ardness

- Joined: Jan 30, 2005
- Messages: 7,926

So was Pascal, but I don't see anyone else complaining.

Navi is not a redesign. It is Vega 2.0. It could even be a simple node shrink.


I was guesstimating; you might be right. But looking at the Wikipedia page for Jaguar, based on clock speed (which is not a great comparison, but I can't find an apples-to-apples GFLOPS figure), the Liverpool Jaguar (in the PS4) is 800MHz. So roughly 5x just on clock speed. I would like to see a GFLOPS rating for both, though. And this is just the CPU, not the GPU... current Vega has to be 3-5x better than the PS4.

https://en.wikipedia.org/wiki/Jaguar_(microarchitecture)

That's a good point, actually. I guess my big issue is that AMD tends to disappoint, so I am keeping my expectations low intentionally. But you could well be right. Thanks for that.

So was Pascal, but I don't see anyone else complaining.

Even if they kept the same GPU in the PS4 Pro (roughly equivalent to an RX 480 from what I've seen examples of) and just upgraded the CPU on it, the PS4 Pro would be capable of AAA games with native 4K 60fps.

The Jaguar CPU is what is holding the current generation back, and being clocked at 2.3GHz is just not enough for that architecture to keep the GPU fed data properly - maybe if it were clocked at 4.5GHz or faster, but those are really weak x86_64 cores.

I'm not knocking the Jaguar architecture at all, it's just that when it was developed back in 2013, it was designed for low-power systems, thin clients, and embedded systems of that era - hardly a high performance contender of that era, let alone in 2018 or the 2020s!

I would not be surprised at all to see Sony and/or Microsoft move to ARM processors at this point, just like Nintendo did, regardless of whether they are streaming or dedicated systems.

Heck, even an ARMv8 A57 CPU core is, clock-for-clock, around 1.5 times faster than an x86_64 Jaguar CPU core, and it requires far less electrical power and produces much less heat; it might also be cheaper due to x86_64 licensing, but that is pure speculation on my part.

The CPU that'll end up in the PS5 would likely be clocked much lower than your traditional 8-core Ryzen. I wouldn't be shocked if it ran at 1.8GHz or thereabouts. HBM makes sense, even though it's expensive. Console makers like to avoid price fluctuation and would rather get a contract that guarantees them a fixed cost for HBM production. We're also years away from a PS5 release, so who knows what'll happen in the memory market by then.

Not really; Nvidia does not do semi-custom. If the next generation of consoles wants something other than what's already on the market, they can't commission it. Even then, if they want something more powerful than an ARM chip, they will need a separate chip commissioned for the CPU, as Nvidia only does ARM.

That makes total sense, and I remember the then high-end ARM CPUs back in 2013 being the A17/A15, which really were not that great overall for price-performance compared to the Jaguar-based x86-64 processors, though they were somewhat close in general-processing power.

Both Sony and Microsoft wanted ARM. x86-64 was chosen only because 64-bit ARM wasn't ready then. However, I think it is simpler for them now to continue with AMD and x86-64 instead of switching to ARM. In the long term, consoles will not be x86.

Cortex cores are also smaller than Jaguar cores.

Keep in mind: if we do go the route of ray tracing, wouldn't AMD's GPUs, with their higher compute power, do better than Nvidia's?

Yes, Intel might actually be able to do it better. They demoed it on Larrabee many years ago. Remember Larrabee? Hopefully Intel can get it right this time. And God help AMD if they do.

Current architectures?

Sure!

But it'd be too slow. If ray-tracing is the way forward, new, faster hardware will need to be developed, and given AMD's development speed, I'd bet on Nvidia if I had to place one. Hell, given the relative simplicity of ray-tracing, I'd bet on Intel.

Ah, now you are right about that as well.

800MHz is the GPU clock in the first PS4. The Jaguar cores in the latest consoles (e.g. PS4 Pro) run at >2GHz. Assuming Zen cores clocked around 4GHz (which is an overoptimistic assumption), we obtain a performance gap of

2.5 * 4/2 = 5

This is the ~5x factor I mentioned in my former post between a console with 8 jaguar cores and one with 8 Zen cores.
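The arithmetic above multiplies an IPC ratio by a clock ratio. A minimal sketch of that estimate, assuming performance scales linearly with both (which ignores memory latency, caches, and thermal limits); the function name is hypothetical and the inputs are the post's own numbers:

```python
def estimated_speedup(ipc_ratio: float, new_clock_ghz: float, old_clock_ghz: float) -> float:
    """Rough per-core speedup: IPC ratio multiplied by clock ratio.

    Assumes performance scales linearly with both IPC and clock speed, which
    ignores memory latency, cache sizes, and thermal/power limits.
    """
    return ipc_ratio * (new_clock_ghz / old_clock_ghz)


# Numbers from the post: Zen IPC ~2.5x Jaguar, Zen at 4GHz vs Jaguar at 2GHz.
# With 8 cores on both sides, the per-core factor is also the whole-CPU factor.
gap = estimated_speedup(2.5, 4.0, 2.0)
print(gap)  # 5.0
```

Plugging in the thread's other clock-for-clock figure of 2.66x instead gives about 5.3x; either way the result is only as good as the IPC ratio fed into it.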

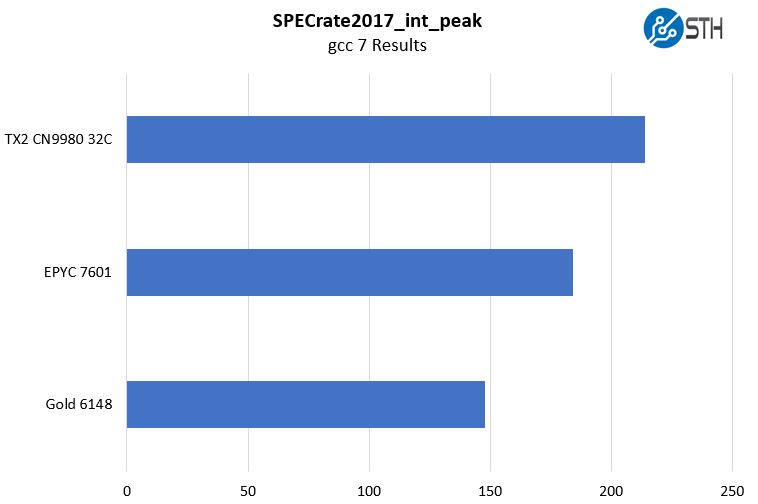

So far nobody has made an ARM CPU that even comes close to what Intel or AMD have with x86.

Yes, I remember Larrabee!

Yes, Intel might actually be able to do it better. They demoed it on Larrabee many years ago. Remember Larrabee? Hopefully Intel can get it right this time. And God help AMD if they do.

As is backed by much info in this thread as well: https://hardforum.com/threads/arm-server-status-update-reality-check.1893942/page-2

This ThunderX2 chip is being used in a supercomputer built by Cray. Supposedly HPC benchmarks will be released at the Cray User Group conference this week.

Yeah, but they achieve this with many cores on two CPUs. Now use two Threadripper chips and see what happens. This is kinda what Intel did with Larrabee, and that kinda failed. Kinda, because Larrabee turned into a CPU.

If you say so

View attachment 75688

This ThunderX2 chip is being used in a supercomputer built by Cray. Supposedly HPC benchmarks will be released at the Cray User Group conference this week.

If it's an APU with a heavy-duty GPU, the heat production would be high, and consoles aren't known for amazing cooling. So I'd expect it to be clocked really low.

1.8GHz looks too low. I would expect clocks in the 2-3GHz range.

Ray Tracing has been done with older DX11 GPUs, and hybrid Ray-Tracing has been around for a while. You can download a 6-year-old demo and run it on your PC. I expect Nvidia to have a proprietary API, because Nvidia. You obviously don't need one, but this way it sells far more graphics cards, and because of the proprietary API you can't blame Nvidia for their older GPUs lacking compute power.

Current architectures?

Sure!

But it'd be too slow. If ray-tracing is the way forward, new, faster hardware will need to be developed, and given AMD's development speed, I'd bet on Nvidia if I had to place one. Hell, given the relative simplicity of ray-tracing, I'd bet on Intel.

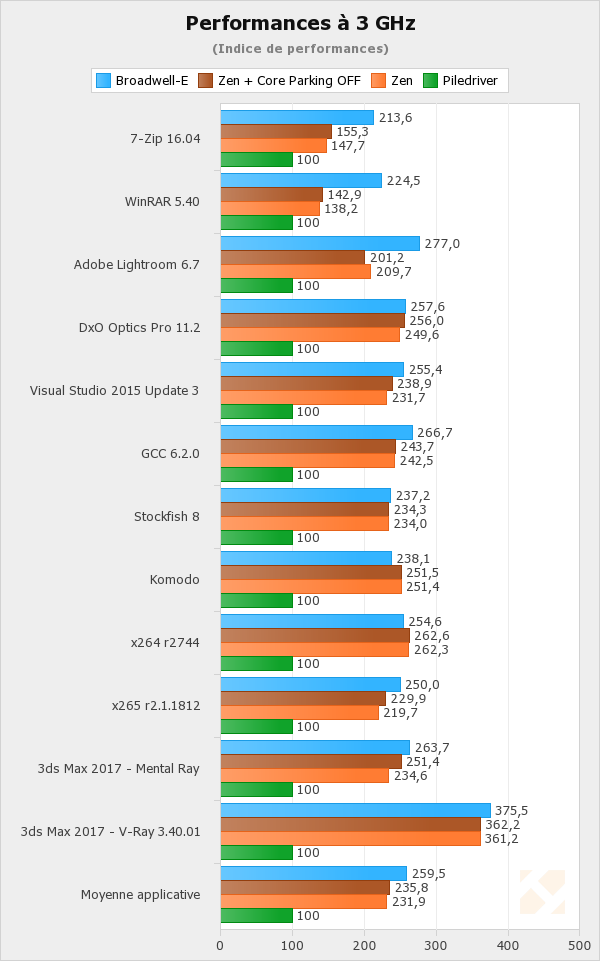

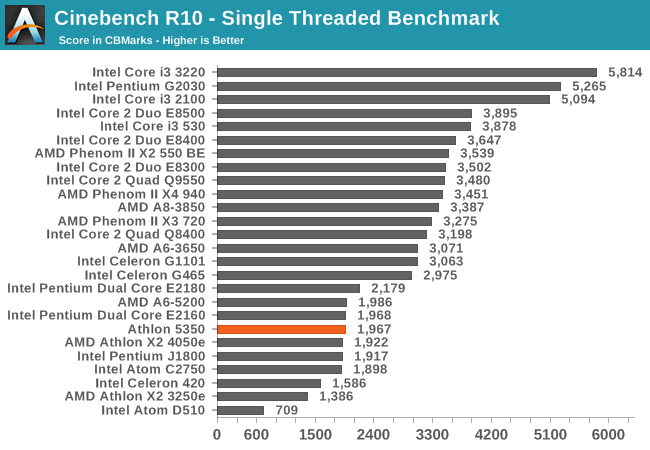

I am also talking about CPU. Zen IPC is ~2.5x greater than Piledriver

View attachment 75681

And Piledriver IPC is roughly equivalent to Jaguar IPC (±10%).

Ray Tracing has been done with older DX11 GPUs, and hybrid Ray-Tracing has been around for a while. You can download a 6-year-old demo and run it on your PC. I expect Nvidia to have a proprietary API, because Nvidia. You obviously don't need one, but this way it sells far more graphics cards, and because of the proprietary API you can't blame Nvidia for their older GPUs lacking compute power.

Go ahead, download the demo; it looks amazing. I wouldn't be surprised if AMD puts Ray Tracing on the CPU, considering how little the CPU is used in games, and it's not like AMD doesn't benefit from people needing faster CPUs. Traditionally, CPUs have done Ray-Tracing. It would be even more amazing if AMD mixed GPU compute with CPUs. I think that's something OpenCL can do, and maybe now Vulkan as well.

I know this thread is getting big, but I have already done the general-performance clock-for-clock comparison directly between Ryzen and Jaguar (see post #30), and Ryzen is roughly 2.66 times faster than Jaguar.

Not really. Here you can see the Athlon 5350 is less than half as fast as the AMD A8 3850. The Athlon 5350 is a Jaguar chip, while the A8 3850 is a Llano chip. And the FX 8350 is 20% faster in IPC than that chip, and it was based on Trinity. So yeah, the Jaguar chips had half the IPC of Trinity, and Trinity is 50% slower than Ryzen.

That is NOT "IPC".

I am also talking about CPU. Zen IPC is ~2.5x greater than Piledriver

View attachment 75681

And Piledriver IPC is roughly equivalent to Jaguar IPC (±10%).

800MHz is the GPU clock in the first PS4. The Jaguar cores in the latest consoles (e.g. PS4 Pro) run at >2GHz. Assuming Zen cores clocked around 4GHz (which is an overoptimistic assumption), we obtain a performance gap of

2.5 * 4/2 = 5

This is the ~5x factor I mentioned in my former post between a console with 8 jaguar cores and one with 8 Zen cores.

I just showed you a demo from 6 years ago that you could run 6 years ago with hybrid ray tracing. Hardware 6 years ago could do ray tracing, so why would it be impossible today? There have been modified Quake and Doom engines that actually used Ray Tracing.

I... what?

No, CPUs will not be fast enough. Adding them to the render loop will likely slow things down due to syncing issues- multi-GPU but worse- and no current GPU is fast enough to render modern graphics with ray-tracing.

It will take new hardware.

You're hilarious in your tech expertise.

Well, first of all, Vega was delayed for well over a year. So even if AMD is saying early 2018 for Navi, I would say early 2019 is a safer and more reasonable estimate. So when is the next architecture after Navi coming out? 2020? Does it even have a name? And should we bank on a 2021 release due to delays? I really don't think Sony can wait that long. Perhaps they can. But waiting on a new, ground-up architecture from AMD when their lead chip designer just left them seems extremely risky to me. Perhaps it would pay off. But perhaps not. You are right, though. They are currently in a very good position.

I think he was talking about the GPU technology in general and how those specific architectures could potentially be used in future consoles, assuming AMD and/or x86-64 is used.

You're hilarious in your tech expertise.

None of your above quotes matters. Sony/MS do not buy off-the-shelf GPUs. AMD designs what its clients want. You keep grasping at the retail PC world and equating it to future console constructs.

That is idiotic. None, I will repeat, NONE of the x86 consoles have ever used off-the-shelf, stock GPUs!

Everything is custom. x86 based offers so much freedom and (KEY) compatibility. Intel is years out, and Nvidia has no license.

I hope you have worked out some issues in your public insanity though.

Shame if you haven't.

Ah, now you are right about that as well.

Clock-for-clock, Zen is about 2.66 times faster than Jaguar, and if the clock speed were also doubled (2.0GHz to 4.0GHz), that would certainly result in an overall performance boost of more than 5 times (2.66 × 2 ≈ 5.3).

Again, the saddest part about the newest iteration of the current-generation consoles is the weak CPUs, which in turn are heavily holding back both the GPU (the Destiny 2 devs stated this) and game logic (Fallout 4 has this issue), at least at 4K and at some 1080p resolutions, depending on the game.

Well, that's the polar opposite of what the Destiny 2 developers stated:

This is my beef with your statement: it implies that you know things through the Destiny developers that coincide with your statement. Which is rather odd, because if you look at the hardware and then at your statement, you would think it is true.

The hardware itself is not at fault; it is that the Destiny 2 developers can't get more out of it.

I ask what, specifically, Series X offers the team that current-gen consoles couldn't: "It's mostly evolutionary improvements that are going to make the biggest difference. The most important one is elimination of the CPU bottleneck that exists in the current-gen consoles and much faster loading of assets thanks to the SSD. It's all about responsiveness and not having to wait on things."

You are just repeating yourself; the developer is talking about their engine, not about what the hardware can or cannot do. In the design of both consoles, the CPU is just there to send data to the GPU. Why else would you use a processor that is 5 years old? Not because it is the fastest.

Well, that's the polar opposite of what the Destiny 2 developers stated:

http://gearnuke.com/destiny-2-project-lead-confirms-30-fps-due-cpu-limits-evaluating-4k-xbox-one-x/

(article is from June 15, 2017)

The consoles are CPU-bound, which in turn limits the frames per second on every resolution, regardless of whether or not it runs at 720p, 1080p, 2K, or 4K.

Mark Noseworthy himself states just that in the third link.

EDIT:

I might also add that the XBone X Jaguar CPU is clocked at 2.3GHz compared to the PS4 Pro Jaguar CPU clocked at 2.13GHz.

So when they say the XBone X CPU is bottlenecking the GPU and game logic, just imagine how much worse it will be on the PS4 Pro.
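A rough sketch of the "how much worse on the PS4 Pro" point, assuming CPU-bound frame time scales inversely with clock speed on the same Jaguar cores (a simplification that ignores memory and other platform differences). The clocks come from the post; the 30fps budget being exactly filled on the Xbox One X is a hypothetical starting point:

```python
XBOX_ONE_X_CPU_GHZ = 2.3  # from the post
PS4_PRO_CPU_GHZ = 2.13    # from the post


def scaled_cpu_frame_ms(frame_ms: float, from_ghz: float, to_ghz: float) -> float:
    """Rescale CPU-bound frame time to a different clock, assuming time ~ 1/clock."""
    return frame_ms * (from_ghz / to_ghz)


# Hypothetical: the game's CPU work exactly fills a 30fps budget on the Xbox One X.
budget_ms = 1000.0 / 30
ps4_pro_ms = scaled_cpu_frame_ms(budget_ms, XBOX_ONE_X_CPU_GHZ, PS4_PRO_CPU_GHZ)
print(f"clock ratio: {XBOX_ONE_X_CPU_GHZ / PS4_PRO_CPU_GHZ:.3f}")
print(f"PS4 Pro CPU frame time: {ps4_pro_ms:.1f} ms (~{1000 / ps4_pro_ms:.1f} fps)")
```

Under these assumptions the roughly 8% clock deficit pushes the same CPU work to about 36 ms per frame, just under 28fps, so something would have to be trimmed to hold a 30fps cap.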

I really would enjoy your response to this.

I just showed you a demo from 6 years ago that you could run 6 years ago with hybrid ray tracing. Hardware 6 years ago could do ray tracing, so why would it be impossible today? There have been modified Quake and Doom engines that actually used Ray Tracing.

Intel has shown you can do it in real time using just the CPU. You obviously wouldn't ray trace the entire game, because the hardware can't do that, but if ray tracing is just pure math, then you could feed it to both the GPU and the CPU, assuming the ray-tracing work doesn't care which processor is faster.
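Because each pixel's primary ray is independent, the "feed it to both" idea can be sketched as splitting image rows in proportion to each processor's throughput. This is a minimal illustration, not how any shipping engine works: the function name and the rays-per-second figures are hypothetical, and the synchronization cost raised later in the thread is not modeled.

```python
def split_rows(total_rows: int, gpu_rate: float, cpu_rate: float) -> tuple[int, int]:
    """Split image rows between GPU and CPU in proportion to their throughput
    (e.g. rays per second), so both should finish at about the same time."""
    gpu_rows = round(total_rows * gpu_rate / (gpu_rate + cpu_rate))
    return gpu_rows, total_rows - gpu_rows


# Hypothetical rates: the GPU traces 9x as many rays per second as the CPU.
gpu_rows, cpu_rows = split_rows(1080, gpu_rate=900e6, cpu_rate=100e6)
print(gpu_rows, cpu_rows)  # 972 108
```

The frame is only done when the slower side finishes, so the split is only useful if both rates are measured accurately and the hand-off between CPU and GPU memory is cheap.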

Nvidia wouldn't want assistance from the CPU, whereas AMD would.

I'm kind of shocked that an experienced technology reviewer like yourself doesn't understand what "CPU bound" means.

You are just repeating yourself; the developer is talking about their engine, not about what the hardware can or cannot do. In the design of both consoles, the CPU is just there to send data to the GPU. Why else would you use a processor that is 5 years old? Not because it is the fastest.

He says his engine can't do more fps; nowhere does he mention that they have done anything different. Remember Battlefield on the PS4? That did a respectable job.

You did not explain why MS, or Sony for that matter, would use the same hardware if the software would not be able to reach the resolutions they wanted.

That has to be the most awkward thing: releasing hardware that cannot push the GPU, two times in a row.

On both counts your arguments make no sense: one developer struggles with his engine, and manufacturers willfully ignore a serious bottleneck in their hardware design.

What exactly is optimized for Ray-Tracing? Are we talking about fixed functions built into GPUs that can accelerate Ray-Tracing? Because it's been tried and doesn't give real-time Ray-Tracing.

And I ignored it, because we're talking about AAA games, not tech demos. Of course you can run ray tracing on CPUs; that's how it's always been done. And of course you can run it on a GPU, because it is just math, but in neither case is current hardware up to par. We don't just need faster hardware, we need hardware that is optimized for the purpose!

Exactly how much of an 8-core, 16-thread CPU do you think is used in today's games? Also, my religion is competition. I'd totally rock a blue Intel graphics card. And guess what, Intel would be for using the GPU+CPU to do Ray-Tracing. Guess why?

Your religion is showing.

Further, the idea of relying on the CPU for ray-tracing is just plain silly. You'll be burning resources that could be used for AI, or for keeping response times down, etc.

What exactly is optimized for Ray-Tracing? Are we talking about fixed functions built into GPUs that can accelerate Ray-Tracing? Because it's been tried and doesn't give real-time Ray-Tracing.

Exactly how much of an 8-core, 16-thread CPU do you think is used in today's games? Also, my religion is competition. I'd totally rock a blue Intel graphics card. And guess what, Intel would be for using the GPU+CPU to do Ray-Tracing. Guess why?

How else would you do Ray-Tracing? PowerVR does show an RTU (Ray Tracing Unit) in their GPU design that does Ray-Tracing, but I would think that today's GPUs have enough compute power to do it anyway. The PowerVR chip is meant for tablets.

What else would we be talking about? And how does 'tried' equate to future hardware? Are you implying that ray-tracing should only be done in software on CPUs and as GPU shaders?

Like what games, specifically? Far Cry 5 on a Ryzen 1700 used 24% of the CPU.

Today's games: most of the CPU. Tomorrow's games? Likely all of today's CPUs, and then some.
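To put the 24% figure in perspective, overall utilization can be converted into "threads' worth" of work. A minimal sketch; the function name is hypothetical and the inputs are the Ryzen 7 1700's 8 cores / 16 threads and the percentage quoted above:

```python
def busy_threads(utilization: float, logical_threads: int) -> float:
    """Convert an overall CPU-utilization reading into 'threads' worth of work."""
    return utilization * logical_threads


# Ryzen 7 1700: 8 cores / 16 threads; the post cites 24% utilization in Far Cry 5.
threads_busy = busy_threads(0.24, 16)
print(f"{threads_busy:.2f} of 16 logical threads")
```

That is under four threads' worth of work on average. An average can also hide a bottleneck: one fully saturated main thread shows up as only 1/16 (6.25%) of total utilization on this CPU, which is why a low overall percentage does not by itself mean the game is not CPU-limited.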

Good lord, the developer directly states in the tweets:

You are just repeating yourself; the developer is talking about their engine, not about what the hardware can or cannot do. In the design of both consoles, the CPU is just there to send data to the GPU. Why else would you use a processor that is 5 years old? Not because it is the fastest.

It's because the game engine (logic, AI, etc.) is too much for the XBone X (and by extension PS4 Pro) Jaguar CPU to handle while keeping the GPU properly fed with data, so the frame rate has to be limited to 30fps at all resolutions.

He says his engine can't do more fps; nowhere does he mention that they have done anything different. Remember Battlefield on the PS4? That did a respectable job.

I'm not sure what this has to do with anything, or even why I should have to explain this, but by using logic and deduction (and history), I will tell you.

You did not explain why MS, or Sony for that matter, would use the same hardware if the software would not be able to reach the resolutions they wanted.

The original GPUs were barely mid-range back in 2013, and the Jaguar CPUs in each console were actually able to deliver enough data for 30fps, and in some cases 60fps, depending on the game and resolution.

That has to be the most awkward thing: releasing hardware that cannot push the GPU, two times in a row.

Well, yeah, my arguments do make sense, and your statements have literally nothing to do with anything.

On both counts your arguments make no sense: one developer struggles with his engine, and manufacturers willfully ignore a serious bottleneck in their hardware design.

Good lord, the developer directly states in the tweets:

"All consoles will run at 30fps to deliver D2's AI counts, environment sizes, and # of players. They are all CPU-bound."

"1080 doesn't help. CPU bound so resolution independent."

I'm pretty sure that when they are talking about the CPU, they are talking about hardware, and they even say that it is CPU-bound, just like I stated. The game engine also runs on, you guessed it, the CPU of all things, so go figure.
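"CPU bound so resolution independent" follows from a simple bottleneck model: in a pipelined renderer, frame time is set by whichever of the CPU or GPU takes longer, and only the GPU's share grows with pixel count. A minimal sketch of that simplified model; the per-frame costs are hypothetical numbers, not measurements from Destiny 2:

```python
def frame_rate(cpu_ms: float, gpu_ms_per_mpixel: float, width: int, height: int) -> float:
    """Simplified pipelined model: frame time is set by the slower of CPU and GPU.

    CPU time (game logic, AI, draw submission) does not depend on resolution;
    GPU time is modeled as proportional to pixel count.
    """
    gpu_ms = gpu_ms_per_mpixel * (width * height) / 1e6
    return 1000.0 / max(cpu_ms, gpu_ms)


CPU_MS = 33.0        # hypothetical CPU cost per frame
GPU_MS_PER_MP = 3.0  # hypothetical GPU cost per megapixel
RESOLUTIONS = [(1280, 720), (1920, 1080), (3840, 2160)]

for w, h in RESOLUTIONS:
    print(f"{w}x{h}: {frame_rate(CPU_MS, GPU_MS_PER_MP, w, h):.1f} fps")
```

With these numbers even 4K needs only about 25 ms of GPU time, so every resolution lands at the same ~30fps set by the CPU. Lowering the resolution only helps once the GPU term is the larger one.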

This is a very basic concept, how do you not get this???

Yeah, but they achieve this with many cores on two CPUs. Now use two Threadripper chips and see what happens. This is kinda what Intel did with Larrabee, and that kinda failed. Kinda, because Larrabee turned into a CPU.

Also, single-threaded vs. multithreaded: that's really good for servers, but we need good single-threaded performance on the desktop.
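The servers-vs-desktop point can be illustrated with Amdahl's law, a standard formula not mentioned in the thread: if only a fraction p of the work parallelizes, n cores give a speedup of 1 / ((1 - p) + p / n). The parallel fractions below are hypothetical, chosen only to contrast a throughput-style server job with a game loop that has a large serial part:

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: speedup = 1 / ((1 - p) + p / n)."""
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / cores)


# Hypothetical workloads: a server job that is 99% parallel vs a game loop that is 60% parallel.
for p in (0.99, 0.60):
    print(p, [round(amdahl_speedup(p, n), 2) for n in (8, 32, 64)])
```

The 60%-parallel case tops out below 2.5x no matter how many cores are added (the limit is 1 / (1 - p)), so per-core speed matters more there, while the 99%-parallel case keeps scaling well past 32 cores.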

Not really. Here you can see the Athlon 5350 is less than half as fast as the AMD A8 3850. The Athlon 5350 is a Jaguar chip, while the A8 3850 is a Llano chip. And the FX 8350 is 20% faster in IPC than that chip, and it was based on Trinity. So yeah, the Jaguar chips had half the IPC of Trinity, and Trinity is 50% slower than Ryzen.

View attachment 75698

View attachment 75699

That is NOT "IPC".

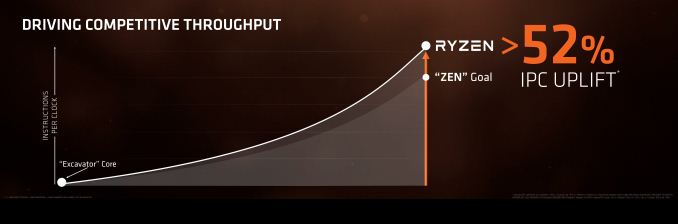

The goal was a 40% IPC improvement, and they ended up with a hair over 50%.

EDIT: Yep, it took only a few seconds to find that: https://www.anandtech.com/show/1117...-7-review-a-deep-dive-on-1800x-1700x-and-1700

"Then it was realised that AMD were suggesting a +40% gain compared to the best version of Bulldozer, which raised even more question marks. At the formal launch last week, AMD stated that the end goal was achieved with +52% in industry standard benchmarks such as SPEC from Piledriver cores"

View attachment 75720
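Chaining AMD's quoted +52% (Zen over Piledriver) with the thread's "Piledriver IPC is roughly equivalent to Jaguar IPC (±10%)" gives a rough Zen-to-Jaguar IPC range. A minimal sketch; the ±10% band is the thread's own assumption, and SPEC-style IPC figures do not capture clock speed or SMT:

```python
ZEN_OVER_PILEDRIVER = 1.52           # AMD's stated +52% (SPEC) from the AnandTech quote
PILEDRIVER_OVER_JAGUAR = (0.9, 1.1)  # "roughly equivalent (±10%)", per the thread


def zen_over_jaguar_range(zen_pd: float, pd_jaguar: tuple[float, float]) -> tuple[float, float]:
    """Chain the ratios: Zen/Jaguar = (Zen/Piledriver) * (Piledriver/Jaguar)."""
    low, high = pd_jaguar
    return zen_pd * low, zen_pd * high


lo, hi = zen_over_jaguar_range(ZEN_OVER_PILEDRIVER, PILEDRIVER_OVER_JAGUAR)
print(f"Zen/Jaguar IPC: {lo:.2f}x to {hi:.2f}x")
```

That works out to roughly 1.37x to 1.67x, well short of the ~2.5x "IPC" figure quoted earlier, which is consistent with the objection that the 2.5x number is not an IPC measurement.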

Further, the idea of relying on the CPU for ray-tracing is just plain silly. You'll be burning resources that could be used for AI, or for keeping response times down, etc.

That makes total sense, and I remember the then high-end ARM CPUs back in 2013 being the A17/A15, which really were not that great overall for price-performance compared to the Jaguar-based x86-64 processors, though they were somewhat close in general-processing power.

You are right, though, the fact that they were not 64-bit was a total deal breaker back then, as 4GB of addressable RAM+hardware was not nearly enough... maybe back in 2010, but not in 2013.
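The 4GB ceiling comes straight from the address width: 32 address bits can name 2^32 distinct bytes, and memory-mapped hardware has to share that same space with RAM.

```python
ADDRESS_BITS = 32
addressable_bytes = 2 ** ADDRESS_BITS
print(addressable_bytes, addressable_bytes / 2**30)  # 4294967296 4.0  (bytes, GiB)
```

Physical address extensions such as ARM's LPAE on the Cortex-A15 could map more physical RAM, but each process still saw a 32-bit virtual address space, which is a poor fit for a console game wanting one large flat memory pool.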

As for the existing ARM processors, if I remember correctly (did the math on this a while back), I believe ARMv8 A57 cores are about 1.2 times faster clock-for-clock compared to Jaguar-based x86-64 cores.

It's kind of funny that, clock-for-clock, the Nintendo Switch A57 (four) cores are technically faster than the Jaguar cores in the PS4/XBone, but because they are only clocked at 1GHz, Jaguar ends up beating them due to its much higher clock speed.
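The same IPC-times-clock reasoning shows why Jaguar still wins here. A minimal sketch using the post's 1.2x clock-for-clock figure and 1GHz Switch clock; the 1.6GHz clock for the original PS4's Jaguar cores is added here and is not stated in the thread, and the model ignores memory, cache, and SIMD differences:

```python
def relative_core_throughput(ipc: float, clock_ghz: float) -> float:
    """Per-core throughput ~ IPC x clock (ignores memory, cache, and SIMD differences)."""
    return ipc * clock_ghz


switch_a57 = relative_core_throughput(ipc=1.2, clock_ghz=1.0)  # 1.2x Jaguar IPC, per the post
ps4_jaguar = relative_core_throughput(ipc=1.0, clock_ghz=1.6)  # Jaguar IPC normalized to 1.0
print(f"A57 @ 1.0GHz: {switch_a57:.2f}, Jaguar @ 1.6GHz: {ps4_jaguar:.2f}")
```

Per core, that is roughly 1.2 vs 1.6 in Jaguar's favor, before counting that the PS4 also has twice as many cores.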

That is impressive about the A72 cores having that amount of processing power - I will have to read up a bit more on those, thanks for the info!

Using a quad-core CPU for raytracing is silly. Using a manycore CPU is not.

I don't even think using a quad-core for ray-tracing itself is silly; what's silly is trying to use a CPU for it at all, given that most of a CPU is dedicated to out-of-order processing that is totally unneeded for a pure compute job, if you have a choice.

I could see using excess CPU resources for ray-tracing, but this wouldn't be viable in a consumer AAA-game real-time environment. For desktop games, the developer would not be able to guarantee the available resources, and for consoles/mobile, those circuit and power resources would be better spent on dedicated hardware.

Alright, I'm not going to disagree there, and you do bring up a good point about memory usage- but it's still looking at current and past hardware.

Ray-tracing is still very simple math, which again means that CPUs are way, way overbuilt. Further, when talking about memory and cinematic-level renders, I think that the scale would likely be different for AAA-games and real-time renders. I don't know how different, no one does, but I'm betting that 'less' would be reasonable.
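As an illustration of how simple the per-ray math is, here is the classic ray-sphere intersection test, a textbook primitive not taken from the thread: substitute the ray o + t·d into the sphere equation |p - c|² = r² and solve the resulting quadratic for t. The function name is hypothetical.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Return distance t to the nearest hit in front of the ray, or None.

    Solves |o + t*d - c|^2 = r^2, a quadratic in t; `direction` need not be normalized.
    """
    ox, oy, oz = (origin[i] - center[i] for i in range(3))
    dx, dy, dz = direction
    a = dx * dx + dy * dy + dz * dz
    b = 2.0 * (ox * dx + oy * dy + oz * dz)
    c = ox * ox + oy * oy + oz * oz - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None  # ray misses the sphere entirely
    sqrt_disc = math.sqrt(disc)
    for t in ((-b - sqrt_disc) / (2.0 * a), (-b + sqrt_disc) / (2.0 * a)):
        if t > 1e-6:  # skip hits behind the origin; epsilon avoids self-intersection
            return t
    return None


print(ray_sphere_hit((0, 0, 0), (0, 0, 1), (0, 0, 5), 1.0))  # 4.0
```

The cost is in the volume, not the math: one primary ray per pixel at 1080p is 2,073,600 rays per frame, about 124 million per second at 60fps, before shadows, reflections, or traversing the acceleration structures (such as BVHs) needed to avoid testing every object, and that traversal is where memory access starts to dominate.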

So I expect that future GPU iterations will start including hardware specifically designed to adapt their architectures to ray-tracing where they are currently deficient. I'd bet that the transition from pure fixed function to more flexible units will happen over time, like the DX7 -> DX8 -> DX9 -> DX10/11/12 transition from fixed-function to unified shaders did with raster graphics.

Further, I agree on the 'mix', where ray-tracing would likely be added to enhance certain effects in games as an introduction with greater utilization going forward.