spine

2[H]4U

- Joined

- Feb 4, 2003

- Messages

- 2,720

I was surprised when Nvidia didn't seem to actively go after mining last year. Surely they could have whipped up some basic ASIC or something, but no.

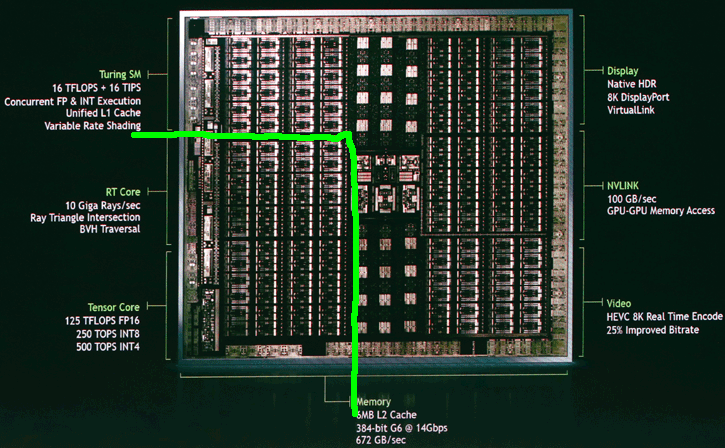

I'm sure the RTX cores absolutely do their job as ray tracing accelerators, but that isn't going to help gamers any time soon. Maybe a year from now some dude will get a ray-traced modded Bioshock running on two RTX Titans via NVLink, but realistically, I can't see any application outside of development.

I get that Nvidia needs to prime the market with hardware ahead of actually pushing ray-traced games, but given the cost of these chips on the node they're on, it would have made a lot more sense to shave off a third of the die space if they're targeting gamers. Well, I guess they have with the 2060. The 2080/2070 must be cut-down Quadro rejects, so they just happen to have that RTX core. I think AdoredTV went over this recently with a supposed internal leak.

Seems an odd move to me though, so I'm fully expecting another mining explosion based around Turing. I mean it *is* called Turing after all!

