


Again, what do they mean by "used"? We need to know how they're getting their numbers. 95% chance they're reporting allocation, not usage. The game probably just requests more VRAM when you set it to high textures.

Right, so in my experience, the frame rate doesn't drop to 17 FPS because of allocation; it drops by over 50% because of actual usage. At least, that's my understanding. If it were just an allocation issue, we wouldn't see the frame rate fall to 17 FPS and then come back up to 42 FPS once the texture size is reduced, would we? Or am I wrong, and games can tank based on allocation alone?
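For a sense of why the texture setting alone can swing VRAM so much: halving a texture's width and height cuts its footprint to about a quarter. A rough sketch, assuming BC7-style compression (1 byte per texel) and a full mip chain; whether this game's texture tiers actually halve resolution is an assumption, not something the benchmarks confirm.

```python
def texture_bytes(width: int, height: int, bytes_per_texel: float = 1.0, mips: bool = True) -> int:
    """Approximate bytes for one texture, summing every mip level down to 1x1.

    ASSUMPTION: 1 byte per texel (BC7-style block compression). Real block-compressed
    mips smaller than 4x4 round up to a full block, so this slightly undercounts.
    """
    total = 0.0
    w, h = width, height
    while True:
        total += w * h * bytes_per_texel
        if not mips or (w == 1 and h == 1):
            break
        # Each mip level halves both dimensions, never going below 1.
        w, h = max(1, w // 2), max(1, h // 2)
    return int(total)


MIB = 1024 * 1024
for size in (4096, 2048, 1024):
    print(f"{size}x{size}: {texture_bytes(size, size) / MIB:.1f} MiB")
```

Each step down in resolution frees roughly three quarters of that texture's memory, so across a few hundred resident textures a single quality tier can be worth gigabytes.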

It could be any number of factors. VRAM isn't the only metric that determines GPU performance. We don't know how much memory the game is actually using, all we know is how much is requested. For example, maybe it's not the amount of memory that's the limiting factor. Maybe it's the memory bandwidth.

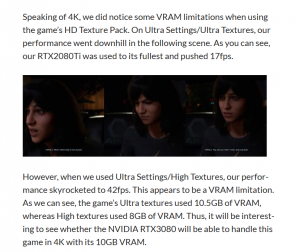

Once you have to start copying from main RAM, the frame rate tanks, and that fits the numbers they're putting out there. With the OS using some VRAM and the game wanting over 10.5GB, it follows that there isn't going to be enough.
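The arithmetic behind that claim is a simple subtraction. A minimal sketch; the 0.5GB reserved by the OS and desktop is an assumed figure (the post only says "some"), and the game's number is a request, which is not necessarily what it reads every frame.

```python
def vram_overflow_gb(card_gb: float, os_reserved_gb: float, game_request_gb: float) -> float:
    """GB of the game's request that cannot fit after the OS reservation (0.0 if it fits)."""
    available_gb = card_gb - os_reserved_gb
    return max(0.0, game_request_gb - available_gb)


# 10GB RTX 3080, an ASSUMED 0.5GB held by the OS/desktop, and a game requesting 10.5GB.
overflow = vram_overflow_gb(card_gb=10.0, os_reserved_gb=0.5, game_request_gb=10.5)
print(f"{overflow:.1f} GB would have to live in system RAM")  # prints: 1.0 GB would have to live in system RAM
```

Whether that overflow hurts depends on whether the spilled data is actually touched each frame, which is exactly the allocation vs. usage question.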

Ranting and raving about one poorly coded console port outlier, and accusing everyone disagreeing of being paid Nvidia shills.

Here's an idea, don't buy the GPU if it won't suit your perceived needs.

Uh, there's tons of proof.

https://www.dsogaming.com/pc-performance-analyses/marvels-avengers-pc-performance-analysis/

And this is last-generation crapola. You really think next-gen games aren't going to need more? Stop BSing. Honestly, the internet's so compromised I don't trust anything people write on forums anymore. Most of the people here could be astroturfing accounts. How much is Nvidia paying you?

Outlier my ass. I'm just making sure that people have all the information and aren't just getting pumped full of Nvidia PR.

Here's an idea, don't tell people to stop sharing information just because it gets your panties in a bunch.

No, you're acting like an overly aggressive child mad that the adults won't listen to your inane rambling. If you don't think it'll be enough, that's fine, you can share your opinion in a calm, reasonable manner. Instead you act like Nvidia kicked your dog, fucked your mom, and shot your dad.

I don't get it... you're probably the same guy arguing that 16GB of system memory isn't enough for gaming.

Gamers Nexus pointed out in their 3080 review that a lot of people concerned about 10GB VRAM are confusing memory allocation vs memory use. People see 10GB or 11GB in software and think the game is using all of that, but it's just allocation.

There are some old COD games that will allocate 100% of your GPU memory and only use 5GB of it at 4K. I own an 11GB 1080 Ti and I couldn't give two shits about dropping down to 10GB. I've literally never used that extra memory in the last 5 years, including while playing 4K games on my OLED.
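The allocation vs. use distinction can be shown with a toy model: an engine reserves a large pool up front (roughly what overlay tools show), but a given frame only reads the assets it actually samples. Everything here, including the `VramPool` class and the asset sizes, is hypothetical and only meant to mirror the 11GB-allocated, 5GB-used COD example; real drivers evict and page memory in far more complicated ways.

```python
from dataclasses import dataclass, field


@dataclass
class VramPool:
    """Hypothetical VRAM pool: a fixed reservation plus the assets loaded into it."""
    allocated_gb: float  # the reservation, roughly what an overlay reports as "used"
    resident: dict[str, float] = field(default_factory=dict)  # asset name -> size in GB

    def load(self, name: str, size_gb: float) -> None:
        """Place an asset in the pool, failing if the reservation would be exceeded."""
        if sum(self.resident.values()) + size_gb > self.allocated_gb:
            raise MemoryError(f"pool full, cannot load {name}")
        self.resident[name] = size_gb

    def touched_this_frame(self, names: list[str]) -> float:
        """GB actually read in a frame: only the assets that frame samples."""
        return sum(self.resident[n] for n in names)


# Grab all 11GB of a 1080 Ti, but only read 5GB of it per frame.
pool = VramPool(allocated_gb=11.0)
pool.load("current_level_textures", 4.0)
pool.load("render_targets", 1.0)
pool.load("cached_assets_for_other_areas", 3.0)
used = pool.touched_this_frame(["current_level_textures", "render_targets"])
print(f"allocated {pool.allocated_gb:.0f} GB, read this frame {used:.0f} GB")  # prints: allocated 11 GB, read this frame 5 GB
```

In this model a card with less memory only suffers once the per-frame working set, not the reservation, stops fitting.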

I'd suggest that you get them to test with GPU usage shown. Or you can do it, if that game is out. (Games like that don't interest me, so I won't be.)

I know reading's difficult, but if you had actually tried it, you would have seen that the frame rate tanks with ultra textures. It isn't some academic allocation vs. usage scenario. It literally doesn't have enough VRAM.

Dude, you have to understand, they are trying to feel better about their 2080s right now, so they will grab on to anything to deal with this.

And I wish people would stop complaining about those who are complaining about 10 gigs not being enough.

If it doesn't bother you, then great, but it's going to be a talking point for quite a while now, so deal with it if you're going to be on a public forum.

Lol, memory bandwidth... that's funny. That does not cause frame rates to drop and come back like that. It happens when the card runs out of VRAM and has to shuffle data over the PCIe bus, i.e. there isn't enough room to fit everything in VRAM. Games don't suddenly tank due to bandwidth. Streaming 10% more textures doesn't give you a 60-70% hit in performance... it'd be around 10%.
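That argument can be put into rough numbers with a simplified model in which every byte a frame reads either comes from VRAM or, if it didn't fit, crosses the PCIe bus. The bandwidth figures are approximations (about 760 GB/s for the RTX 3080's GDDR6X, about 16 GB/s for PCIe 3.0 x16), the 2GB of per-frame traffic is made up, and real GPUs overlap transfers with rendering, so treat this as a sketch of the direction of the effect rather than a prediction.

```python
# Approximate peak bandwidths (assumed round figures, not measurements).
VRAM_GBPS = 760.0  # RTX 3080 GDDR6X: 19 Gbps x 320-bit bus / 8
PCIE_GBPS = 16.0   # PCIe 3.0 x16, one direction


def memory_time_ms(frame_gb: float, spilled_fraction: float = 0.0) -> float:
    """Milliseconds spent moving frame_gb of data when spilled_fraction of it crosses PCIe."""
    from_vram_s = frame_gb * (1.0 - spilled_fraction) / VRAM_GBPS
    over_pcie_s = frame_gb * spilled_fraction / PCIE_GBPS
    return (from_vram_s + over_pcie_s) * 1000.0


base = memory_time_ms(2.0)                         # hypothetical 2GB of traffic per frame
more_textures = memory_time_ms(2.2)                # 10% more data, all still in VRAM
spill = memory_time_ms(2.0, spilled_fraction=0.1)  # same data, 10% of it over PCIe

print(f"baseline {base:.2f} ms, +10% data {more_textures:.2f} ms, 10% spilled {spill:.2f} ms")
```

Reading 10% more data from VRAM costs about 10% more memory time, while having 10% of the same data cross PCIe makes it roughly 5 to 6 times slower. PCIe 4.0 x16 roughly doubles the link bandwidth, which narrows but does not close that gap.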

Several companies, including id Software, are saying 8GB of VRAM is going to be the MINIMUM required spec for next-generation games.

Source for id and several companies stating this? Edit: I saw your identical post on the previous page, with the id source at least. Buying the most VRAM your budget allows is pretty obvious advice, but still, we have a 10GB card, so there's obviously a little mental justification going on, given we don't have official word on upcoming alternate models and availability is shit right now anyway.

I currently game at 4K without issue on a 2080 Super, which only has 8GB.

Getting really bored of obtuse bullshit being spouted like this. He said 8GB MINIMUM. Hi. MINIMUM. He probably wasn't even talking about 4K, which makes it even worse. Even TODAY, there's a 3GB+ difference in usage between the lowest and highest graphics settings. This is only going to increase with next-generation games.

Everyone with a brain is waving the warning signs and telling everyone that it's not going to be enough for next generation games at max settings, and you weirdos are burying your heads in the sand.

Fine. Get fucked over. Buy a paperweight. No skin off my back.
