MrGuvernment (Fully [H]; joined Aug 3, 2004; 21,812 messages)

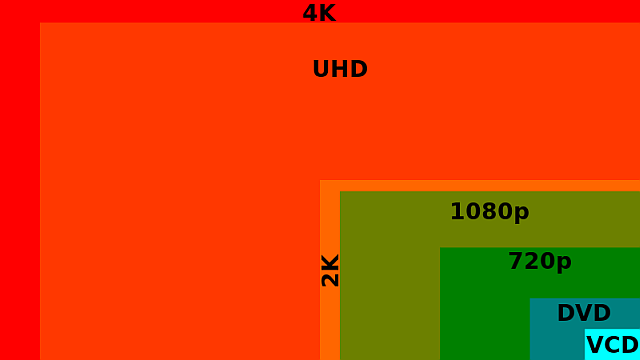

So, prices are low because the 2018 models are coming out, so I grabbed a 4K TV (Samsung MU8000, 55"). I wanted the Sony X900E but couldn't justify the extra $300 for it.

Until now I have been using an older Dell OptiPlex 990 with an i5-2400 CPU and integrated graphics, which was fine for sending 1080p content to my crap-tastic 43" LG TV.

Now, when playing 4K content, the CPU pegs at around 80-100% and the audio drops out.

The OptiPlex has room for a half-height (low-profile) GPU.

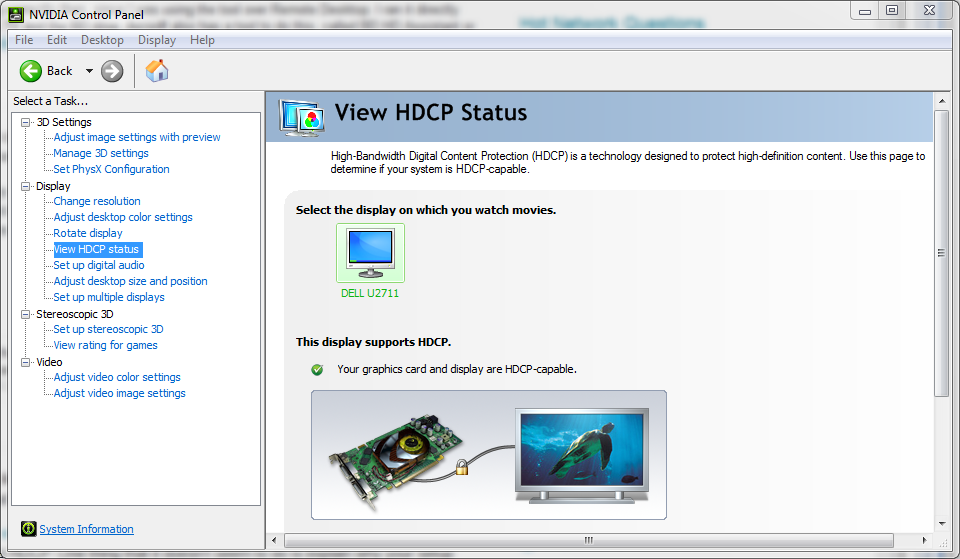

I know that for Netflix you need a Kaby Lake CPU for the HDCP crap in order to stream 4K. I presume that is still the case even if you're using a dedicated GPU? What about if you use the Windows 10 Netflix app?

Also, I want to be able to play streams from sources other than Netflix, so offloading video decoding to the GPU would be ideal.

Can I get around the Kaby Lake requirement with something like an Nvidia GT 1030?

