Syntax Error

2[H]4U

- Joined

- Jan 14, 2008

- Messages

- 2,781

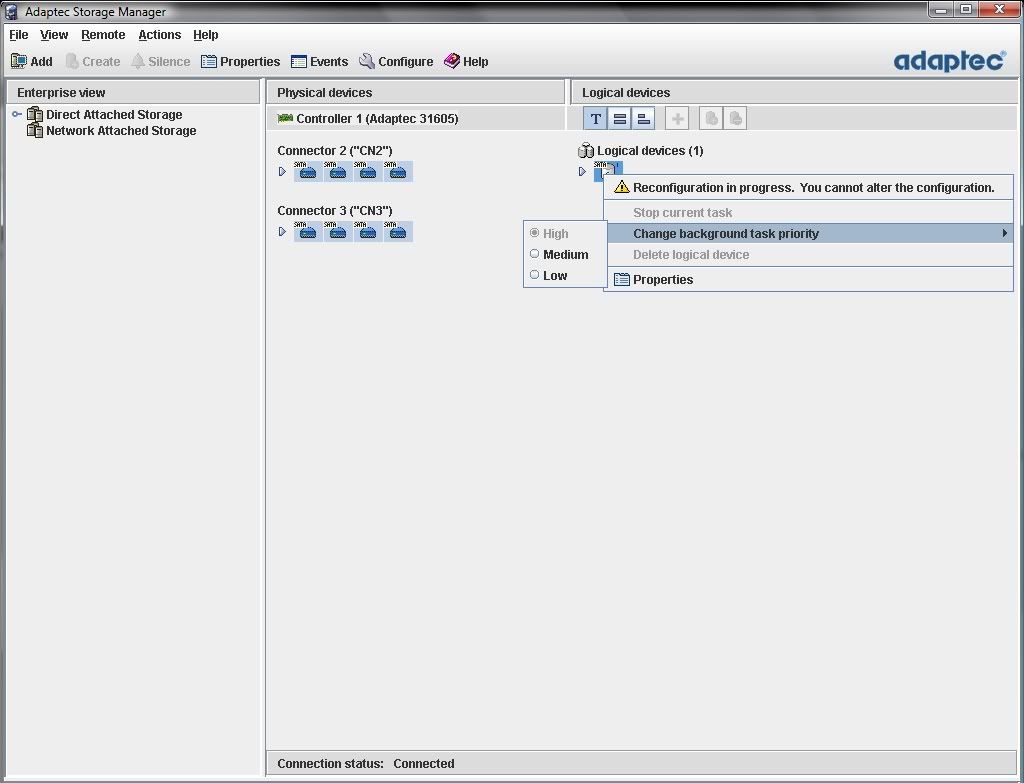

So I have an Adaptec 31605 and I've been experimenting with OCE (online capacity expansion) and other nifty features. It's a 16-port card, and expandability is important to me for uptime and general convenience (not having to find space to dump all my data and rebuild an array is a BIG plus).

However, when I add another drive (using Adaptec's software, Adaptec Storage Manager), it's REALLY slow to rebuild the array.

How slow? It's been rebuilding for 11 days now, migrating from a 6-disk RAID 5 to an 8-disk RAID 6, and it's only at 58%. Windows diagnostics show the disk is only being accessed at around 680 KB/s to 1 MB/s, so something must be wrong. It can't normally be that slow to rebuild an array, can it?
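Some rough arithmetic on those numbers, as a sketch only. It assumes progress stays linear, that 680 KB/s means 0.68 MB/s (decimal units), and that all drives are the same size. The function names are hypothetical helpers for illustration. It also shows that going from a 6-disk RAID 5 to an 8-disk RAID 6 only gains one disk of usable space, since RAID 5 gives up one disk to parity and RAID 6 gives up two.

```python
from datetime import timedelta

SECONDS_PER_DAY = 86_400


def usable_disks(level: int, disks: int) -> int:
    """Data-bearing disks in a RAID 5 or RAID 6 array of equal-sized drives (assumption)."""
    parity = {5: 1, 6: 2}[level]  # RAID 5 loses one disk to parity, RAID 6 loses two
    if disks <= parity:
        raise ValueError("not enough disks for this RAID level")
    return disks - parity


def estimate_total(elapsed: timedelta, fraction_done: float) -> timedelta:
    """Total migration time, assuming progress is linear (a big assumption)."""
    if not 0 < fraction_done <= 1:
        raise ValueError("fraction_done must be in (0, 1]")
    return elapsed / fraction_done


def data_moved_gb(rate_mb_per_s: float, elapsed: timedelta) -> float:
    """Data transferred at a constant rate, in GB (1 GB = 1000 MB)."""
    return rate_mb_per_s * elapsed.total_seconds() / 1000


if __name__ == "__main__":
    elapsed = timedelta(days=11)
    total = estimate_total(elapsed, 0.58)
    remaining = total - elapsed
    print(f"RAID 5, 6 disks -> {usable_disks(5, 6)} disks of usable capacity")
    print(f"RAID 6, 8 disks -> {usable_disks(6, 8)} disks of usable capacity")
    print(f"estimated total: {total.total_seconds() / SECONDS_PER_DAY:.1f} days")
    print(f"estimated remaining: {remaining.total_seconds() / SECONDS_PER_DAY:.1f} days")
    # Throughput range reported by Windows, converted to data moved over 11 days
    for rate in (0.68, 1.0):
        print(f"{rate} MB/s for 11 days ~ {data_moved_gb(rate, elapsed):.0f} GB")
```

If progress really is linear, that works out to roughly 19 days in total, so about 8 more to go. At the reported rates, only about 650 to 950 GB would have moved in 11 days.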


The specs of my FS are in my sig. What could be causing this?
