UnknownSouljer

[H]F Junkie

- Joined

- Sep 24, 2001

- Messages

- 9,041

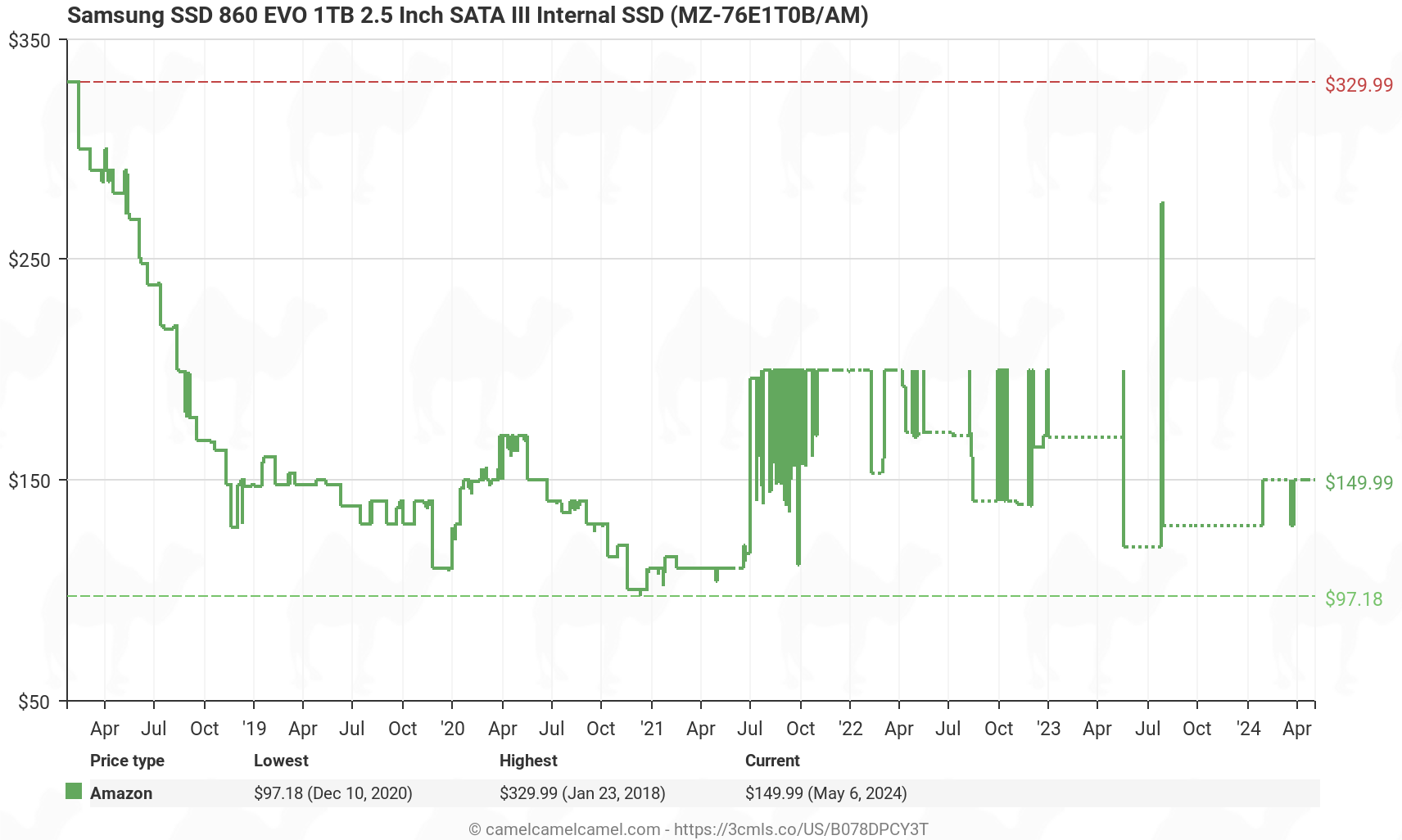

The all-SSD future can't come fast enough. However, if the silicon shortage has shown us anything, it's that when push comes to shove there isn't enough manufacturing capacity to pump out all the devices that everyone needs.

SSDs are certainly the long-term future for almost all applications.

In the medium term, however, hard drives will still be around for mass storage for some time, maybe another decade or two.

Thankfully the days of being forced to boot and run your OS off of a hard drive are mostly over though.

It's been well over a decade at this point, and we still don't have consumer-class 4TB drives that don't cost more than some laptops, let alone 16+TB densities. It's not for lack of ability to do it; it's obviously due to the insane cost of putting that many chips on a single NVMe or SAS drive. It's a thing in the high-end rack world, but the trickle-down from that is still far away.

I think your assessment of closer to two decades is more likely than the shorter one, as much as I hate the very idea of that. The only way that will reverse is if all of these different fabs spin up and all the channels get inundated with product, driving SSD prices into the ground. But I highly doubt that is going to happen.
