gerardfraser

Sorry, no testing at resolutions below 2560 x 1440 or at low/medium settings. Plenty of others have shared their findings for lower settings and lower resolutions.

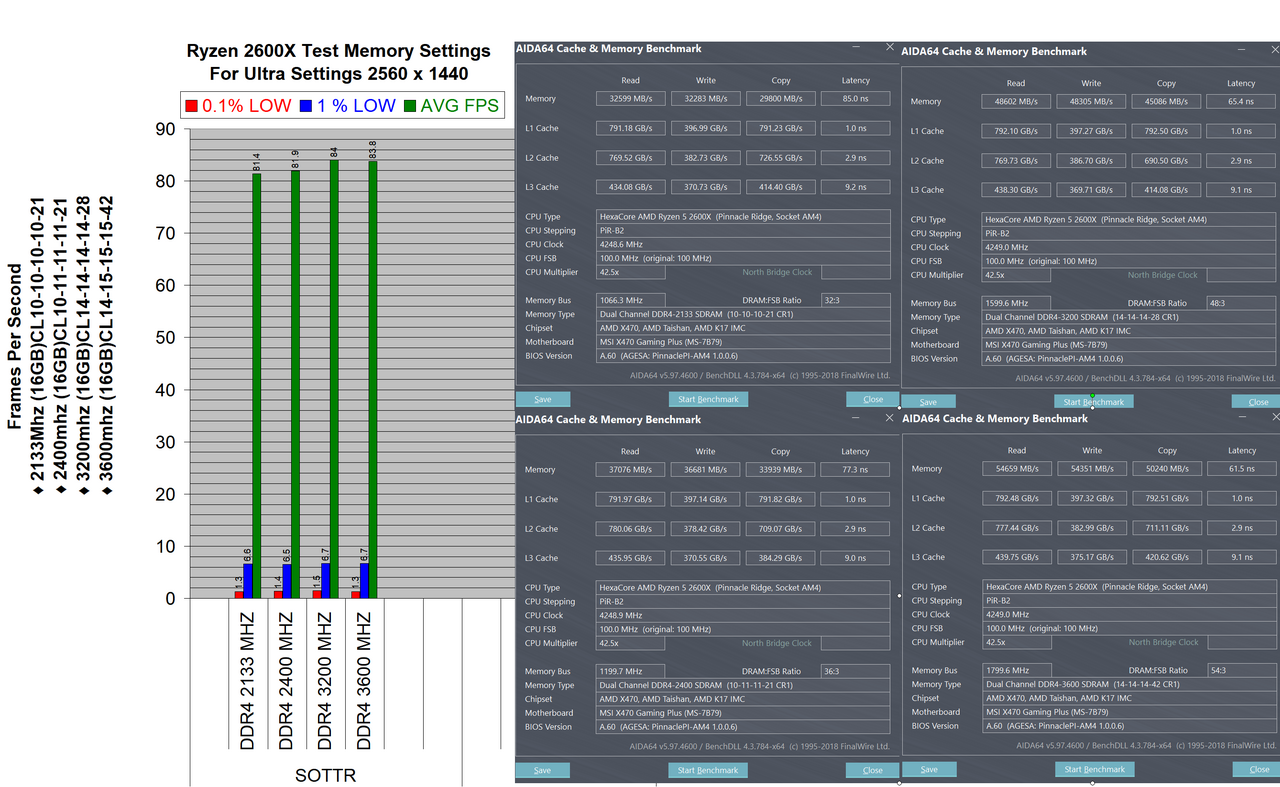

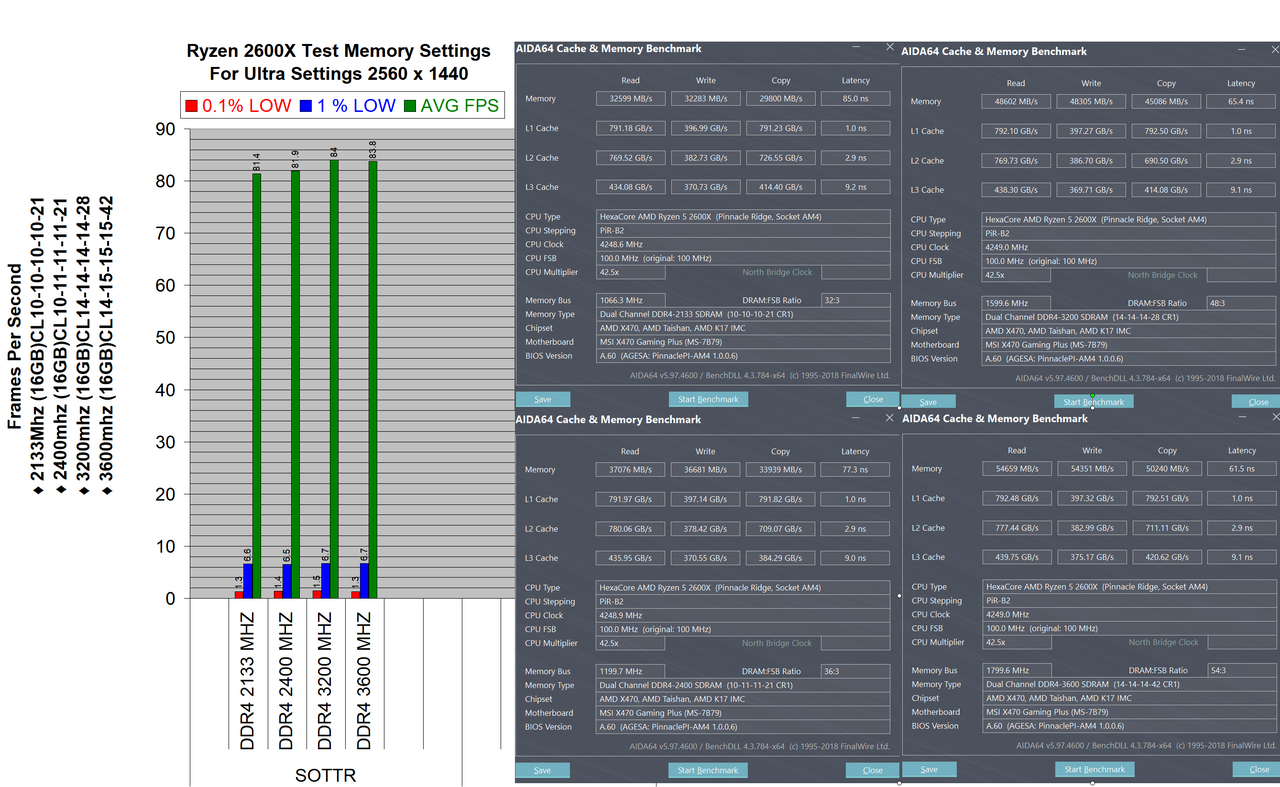

These tests are to see whether there is a difference when gaming at Ultra settings at a high resolution of 2560 x 1440.

EDIT: Added Feb 01 2019

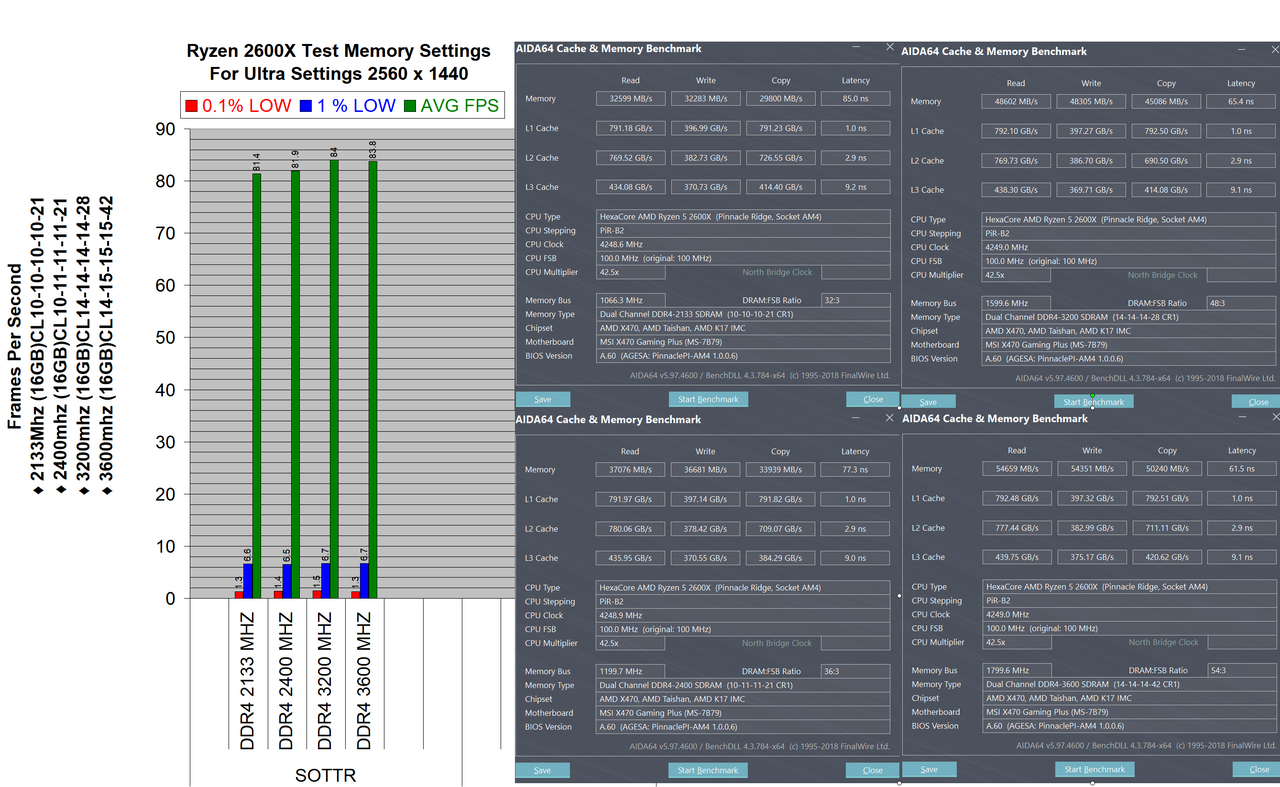

Ryzen vs RAM: 2133MHz vs 2400MHz vs 3200MHz vs 3600MHz with tweaked timings, 2560 x 1440, Ultra settings, Shadow of the Tomb Raider.

♦ 2133MHz (16GB) CL10-10-10-10-21

♦ 2400MHz (16GB) CL10-11-11-11-21

♦ 3200MHz (16GB) CL14-14-14-14-28

♦ 3600MHz (16GB) CL14-15-15-15-42
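For anyone comparing the kits above on paper, here is a rough sketch of the usual first-word latency arithmetic. It assumes the "MHz" figures are the DDR4 data rate (DDR4-2133, DDR4-2400 and so on, in MT/s) and that the first number after "CL" is the CAS latency. The function name and the `KITS` list are my own, not something from the test setup.

```python
# Kits from the list above: (DDR4 rating as written in the post, CAS latency).
# Assumption: the rating is the data rate in MT/s, not the actual clock.
KITS = [(2133, 10), (2400, 10), (3200, 14), (3600, 14)]


def cas_latency_ns(data_rate_mts: int, cl: int) -> float:
    """Approximate first-word CAS latency in nanoseconds.

    DDR transfers twice per clock, so the memory clock in MHz is
    data_rate / 2 and one clock cycle lasts 2000 / data_rate ns.
    """
    return cl * 2000.0 / data_rate_mts


for rate, cl in KITS:
    print(f"DDR4-{rate} CL{cl}: {cas_latency_ns(rate, cl):.2f} ns")
```

This only covers CAS latency. It says nothing about the other timings or about how the game actually behaves, which is what the tests are for.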

Chart of test

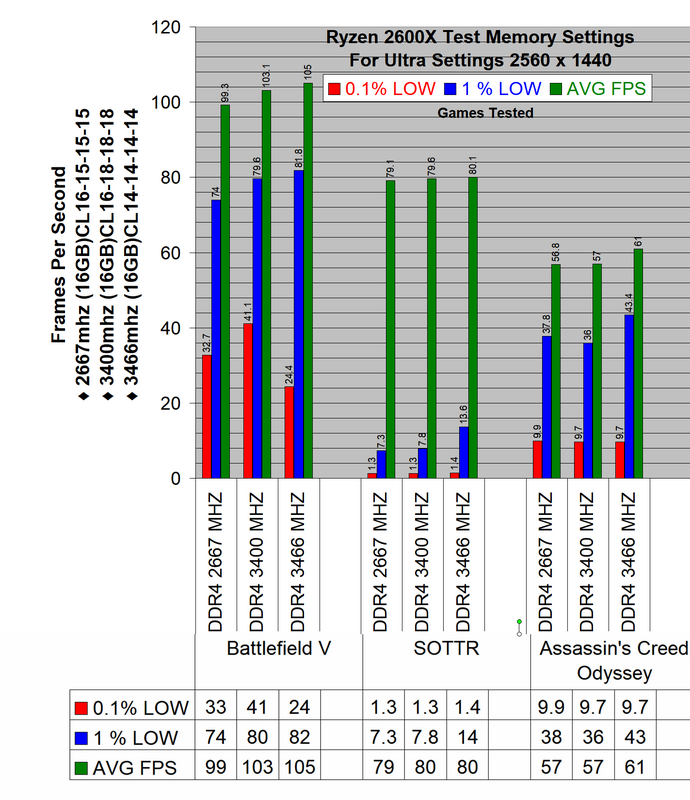

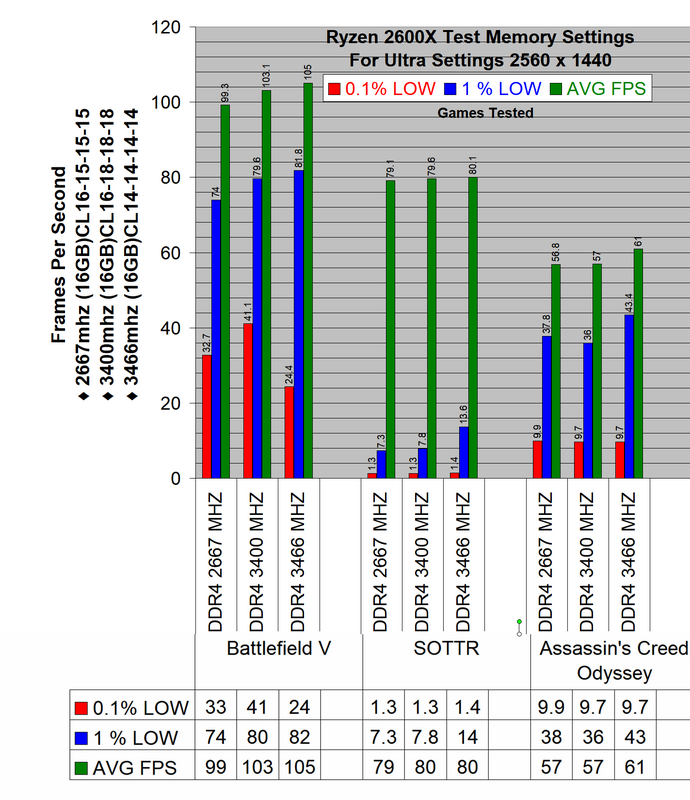

DDR4 Speeds tested

♦ 2667MHz (16GB) CL16-15-15-15-39, Command Rate 1T, all sub-timings manually set

♦ 3400MHz (16GB) CL16-18-18-18-36, Command Rate 1T, all sub-timings manually set

♦ 3466MHz (16GB) CL14-14-14-14-28, Command Rate 1T, all sub-timings manually set
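As a paper comparison of the three speeds above, the theoretical peak bandwidth can be estimated as below. This is a minimal sketch, assuming the 2x8GB kit runs in dual channel and that each DDR4 channel is 64 bits (8 bytes) wide. The ratings are again treated as data rates in MT/s, and the function name is made up for illustration.

```python
BYTES_PER_TRANSFER = 8  # one 64-bit DDR4 channel moves 8 bytes per transfer


def peak_bandwidth_gbs(data_rate_mts: int, channels: int = 2) -> float:
    """Theoretical peak bandwidth in GB/s (decimal gigabytes).

    Assumption: dual channel by default, since the kit is 2x8GB.
    """
    return data_rate_mts * BYTES_PER_TRANSFER * channels / 1000.0


for rate in (2667, 3400, 3466):
    print(f"DDR4-{rate}: {peak_bandwidth_gbs(rate):.1f} GB/s peak")
```

These are ceiling numbers only. Real throughput is lower, and a bigger ceiling does not by itself mean more FPS at 2560 x 1440.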

Ryzen Memory Tested: Is It Worth Buying New, Faster RAM?

I have tested various combinations of DDR4 RAM on Ryzen+ over the past six months, always with about the same results.

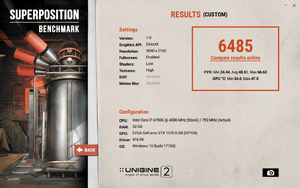

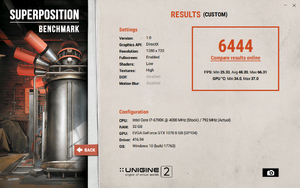

Today I tested with a GTX 1080 Ti, gaming at 2560 x 1440 with Ultra/Max settings.

If you game at higher resolutions and want to know whether the RAM makes a big difference, I would say there is no need to waste cash on new RAM.

COMPUTER USED

♦ CPU - AMD 2600X With MasterLiquid Lite ML240L RGB AIO

♦ GPU - Nvidia GTX 1080 Ti

♦ RAM - G.Skill Trident Z 16GB DDR4(F4-4000C18D-16GTZ) (2x8)

♦ Mobo - MSI X470 - Gaming Plus

♦ SSD - M.2 2280 WD Blue 3D NAND 500GB

♦ DSP - LG 27" 4K UHD 5ms GTG IPS LED FreeSync Gaming Monitor (27UD59P-B.AUS) - Black

♦ PSU - Antec High Current Pro 1200W

► FPS Monitoring : MSI Afterburner/RTSS

► Gameplay Recorder : Nvidia Shadowplay

► VSDC Free Video Editor

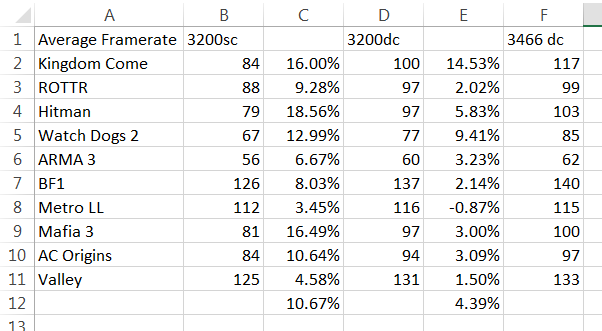

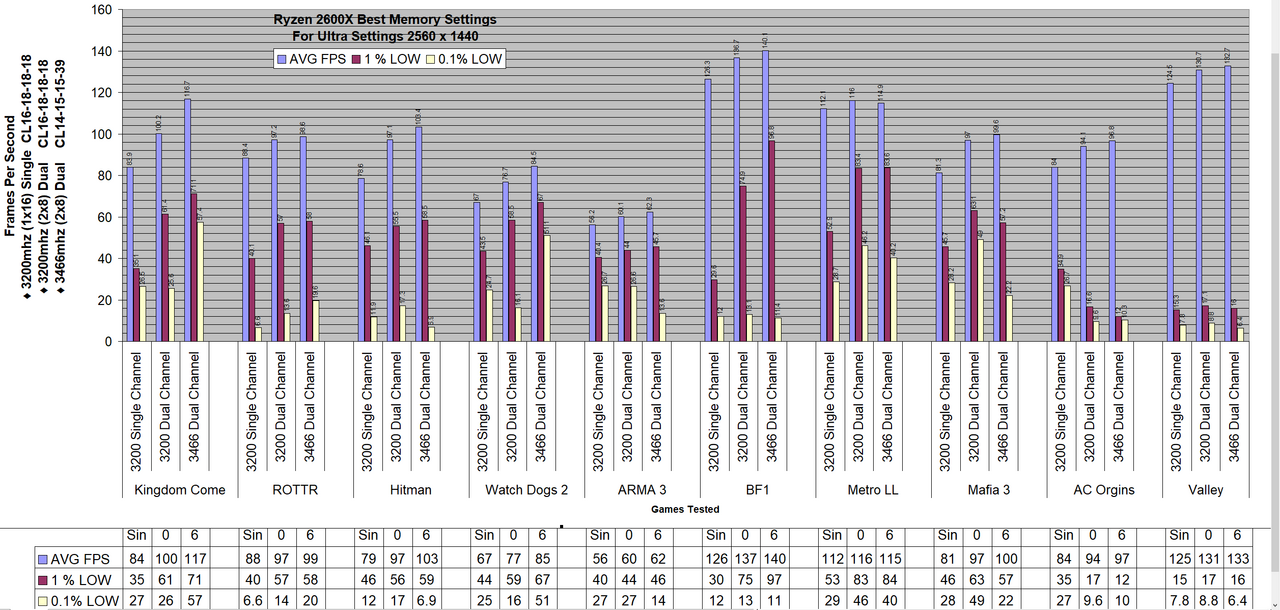

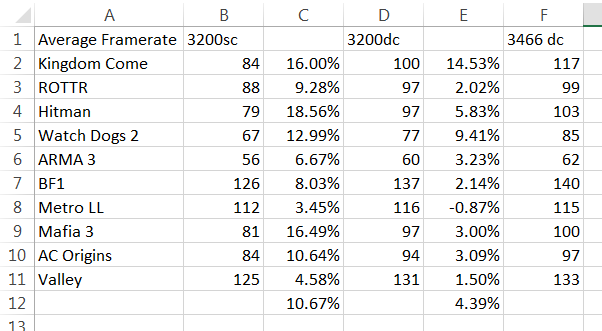

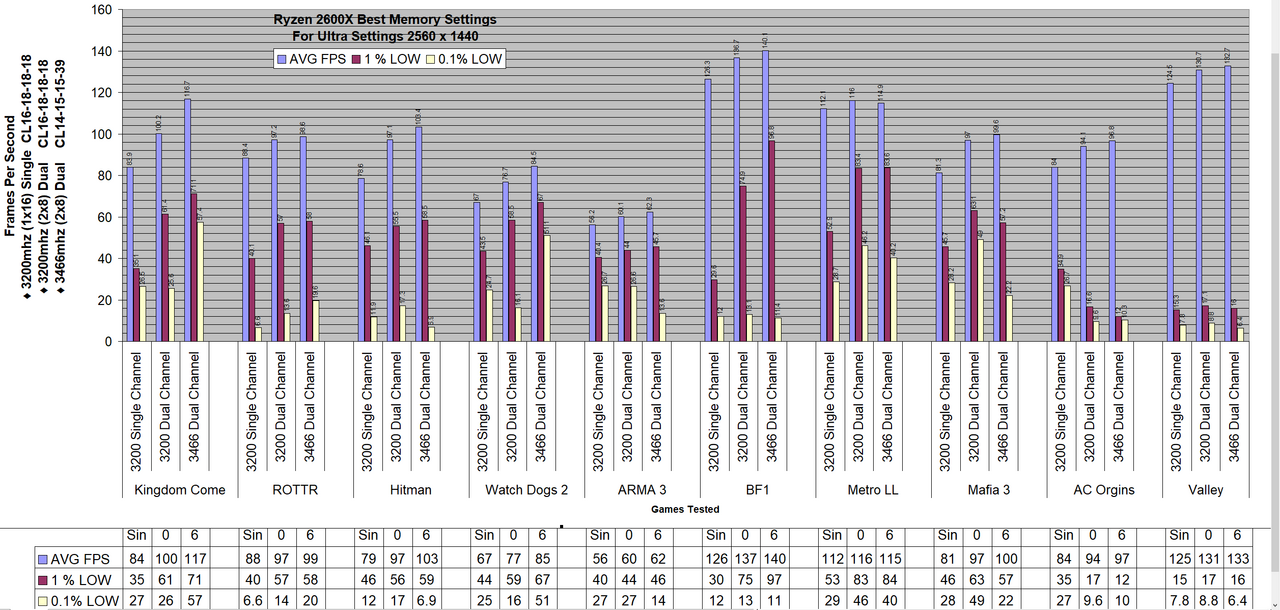

EDIT: 10 tests from a few months ago

%Difference
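For reference, a percent difference between two average-FPS results is normally worked out relative to the slower (baseline) configuration. A small sketch follows. The two FPS values in it are placeholders picked to make the arithmetic obvious, not results from these tests, and the function name is my own.

```python
def percent_difference(baseline_fps: float, other_fps: float) -> float:
    """Percent change of other_fps relative to baseline_fps."""
    if baseline_fps <= 0:
        raise ValueError("baseline_fps must be positive")
    return (other_fps - baseline_fps) / baseline_fps * 100.0


# Placeholder numbers only, NOT measured values from this thread.
slow_ram_fps = 100.0
fast_ram_fps = 105.0
print(f"{percent_difference(slow_ram_fps, fast_ram_fps):+.1f}%")
```

A positive result means the second configuration is faster than the baseline, a negative one means it is slower.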
