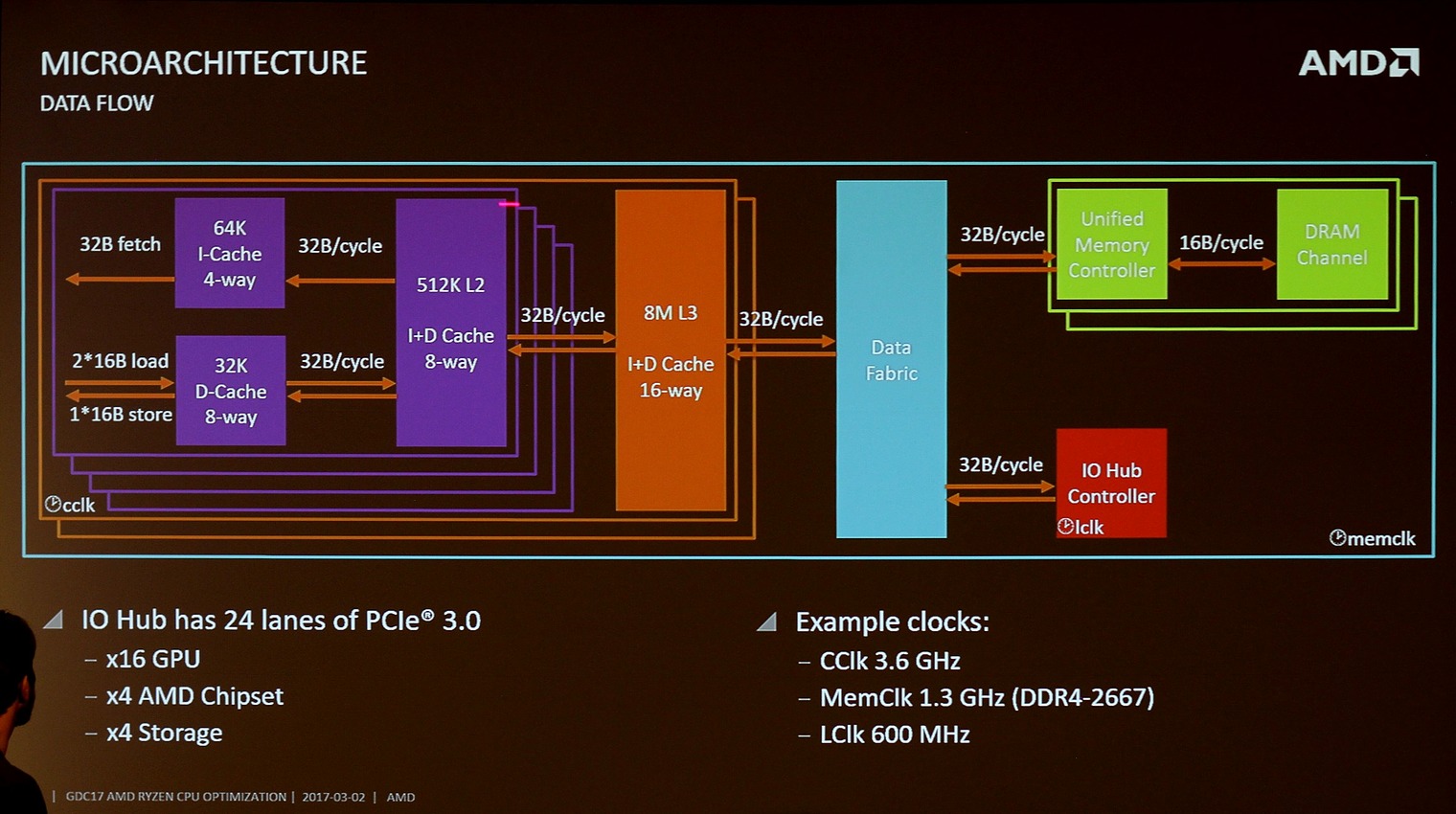

OMFG. If the Infinity Fabric is linked to RAM speed, and that is the speed at which the L3 runs, and the graphs peg the CCX issue to latency, then yes, I would like to see whether RAM speed makes any discernible difference. I am not making any claims here, just interested in testing with these variables.

AGAIN, games tested by a few review sites going from 2133MHz to 3000MHz show the SAME relative gains on both Intel and Ryzen.

What does that tell you?

Are you suggesting Intel has the Ryzen fabric/CCX and L3 cache?

Because that is the only way you can make your point.

You are the one making assumptions, while games have already been tested on both Intel and Ryzen at both memory frequencies.
